ub4ty

Senior member

- Jun 21, 2017

Overall, modern processor architecture is not a cakewalk.

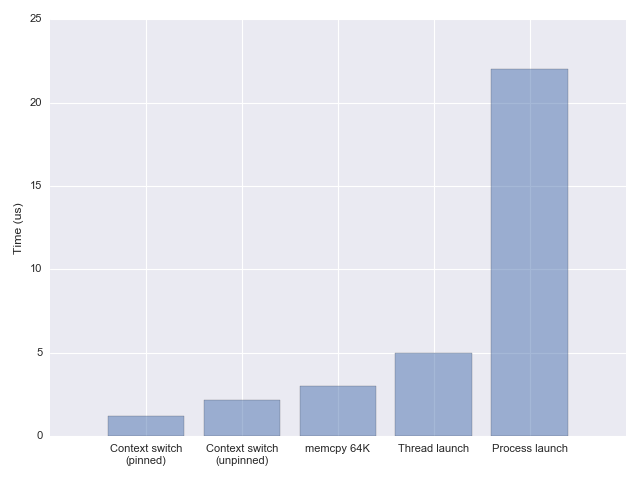

You would need a full plate of undergraduate and graduate coursework in computer engineering, with a focus on computer architecture, plus a wealth of industry experience, to even begin accurately dissecting, making suggestions about, or comparing Intel and AMD. From a K.I.S.S. perspective, single-threaded and multi-threaded performance are often in opposition, because the architectural changes that favor multi-threaded work improve throughput at the cost of latency. You can widen the pipes, but the highway still ends and hits local roads. SIMD and floating-point tasks are best suited to GPUs and dedicated accelerators; it's absolutely moronic to load down a CPU with them. For every "AMD should do X, Y, Z for single-threaded performance," there's a trade-off that impacts power consumption, throughput, etc.
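
To make the throughput-vs-latency trade-off concrete, here is a toy model in Python. The core counts and per-task latencies are made-up numbers, not measurements of any Intel or AMD part, and the model assumes perfectly independent tasks with no memory or scheduling contention.

```python
from dataclasses import dataclass


@dataclass
class CoreConfig:
    """Toy model of a CPU: identical cores, each finishing one task at a time."""
    name: str
    cores: int
    task_latency_ms: float  # hypothetical time for one core to finish one task

    def throughput_per_ms(self) -> float:
        # Assumes fully independent tasks and no shared-resource contention.
        return self.cores / self.task_latency_ms


# Hypothetical configurations, chosen only to illustrate the trade-off.
few_fast = CoreConfig("4 faster cores", cores=4, task_latency_ms=1.0)
many_slower = CoreConfig("8 slower cores", cores=8, task_latency_ms=1.25)

if __name__ == "__main__":
    for cfg in (few_fast, many_slower):
        print(f"{cfg.name}: latency {cfg.task_latency_ms} ms/task, "
              f"throughput {cfg.throughput_per_ms():.1f} tasks/ms")
```

In this sketch the 8-core configuration finishes more tasks per millisecond overall (6.4 vs 4.0), but any single task, such as a lone game thread, takes 25% longer. That is the tension between throughput and latency in a nutshell.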

The future is multi-threaded, and has been for some time. It is the job of software engineers to catch up, not AMD's job to revert to yesteryear's battle, especially for a vocal minority: the never-endingly screeching, "take my money" vidya-gamer who likely knows nothing about software engineering or computer engineering.

AMD has made a processor centered on multi-threaded workflows. The dies are hand-me-downs from EPYC. This should excite anyone with a formal understanding of computing, because you are getting a server-grade architecture. This is what attracted me to AMD's platform: it is highly scalable, and there is uniformity from desktop to server, now even more so. Single-threaded performance has been sufficient since the first generation of Zen. Software written for modern hardware should not rely heavily on single-threaded performance... This goes for game engines too (yes, I'm looking at you, severely antiquated code). When you profile your code and a single process/thread is mucking up performance, you have crappy code. Period.
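
The standard way to quantify how one serial thread caps a multi-core CPU is Amdahl's law: if a fraction p of the work can be spread across n cores, the ideal speedup is 1 / ((1 - p) + p / n). Below is a minimal Python sketch; the parallel fractions and core counts are hypothetical, and the formula ignores synchronization and memory overheads, so real-world speedups will be lower.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup predicted by Amdahl's law.

    parallel_fraction: share of runtime that can be spread across cores (0..1).
    cores: number of cores the parallel share is split across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction  # the part stuck on a single thread
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: how much of each frame's work is parallelizable.
    for p in (0.50, 0.90, 0.99):
        row = ", ".join(f"{n} cores: {amdahl_speedup(p, n):.2f}x" for n in (4, 8, 16, 64))
        print(f"p={p:.2f} -> {row}")
```

With p = 0.5, even 64 cores yield less than a 2x speedup, while at p = 0.99 the same 64 cores yield roughly 39x. The serial part of the code, not the core count, sets the ceiling.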

The future is massively multi-threaded. Single-threaded performance is for the birds and for soon-to-be-defunct software.

https://www.eteknix.com/world-of-tanks-engine-get-massive-multi-thread-overhaul/

Get with the times, people. Stop yapping about Intel's yesteryear processors that have been tuned for single-threaded performance and can't scale. And stop pretending you can outwit people with PhDs in multiprocessor architecture and decades of industry experience. Single-threaded performance is sufficient, and once it is, you can focus on far more important and complex tasks centered on multi-threading and scaling core count. AMD is pursuing a beautiful roadmap. Single-threaded performance is going to be what it's going to be: sufficient. Core scaling is the focus, and has been from the start. The focus should be on a revolutionary new chiplet design and the architecture therein. Meanwhile, people are fixating on Comp Arch 101, undergraduate-level single-threaded performance... Muh e-celeb IPC values. That vocal minority of screechers won't be the volume buyers, nor are they the ones focused on the future. Learn how to write proper software!