Blitzvogel

Platinum Member

- Oct 17, 2010


Draw calls

Holy balls Batman I didn't realize they were so expensive on the CPU side.


You cannot have more than one thread per core per cycle (without SMT etc.).

What modern superscalar processors are able to do is split each thread into multiple sub-portions (instructions) and decode, execute and retire them. But you always have a single thread per core per cycle.

Stop trying to read this as some sort of technical information, it's not. It's just a marketing slide to show 6 threads being used. It doesn't matter what he puts in those boxes, the result is the same. That isn't technical information either, but is at least a somewhat better representation.


Um, no. Not for Intel's HT implementation,

Marketing what? This presentation isn't really meant for gaming consumers, but for developers. He's talking about DirectX 12, which AMD supports of course, but which will be equally usable by Nvidia.

Dude!

http://forums.anandtech.com/showpost.php?p=31520674&postcount=28

Nvidia has used multithreaded rendering for so long that people don't even remember that they do.

AMD is, like in so many cases, marketing themselves as the inventor of the wheel.

They are putting a spin on having had the worst drivers possible for so long by trying to convince people that they single-handedly created Mantle and forced the industry to move to DX12.

It's multithreaded rendering no matter how you look at it, and that's what aten-ra has been crying about for the last few pages.

He was talking about that point specifically and not about mantle in general because he thought that that would promote his multicore concept.

Sure, the low-level APIs are the better option, but that did not stop Nvidia from releasing proper drivers 4 years ago that would at least use what was available at the time, instead of saying let's wait 4 years for the next API before making hasty decisions...

Don't confuse multi-thread with multi-core. FWIU all "rendering" is done on one core with DX11. Johan Andersson said that the biggest problem with DX11 is that it's "painfully serial". These issues are the reason that multi-threading DX11 gives such minimal improvement (which is AMD's complaint: it's just not worth it).


Oh, is that why Nvidia released a driver update last year that provided gains similar to what AMD managed with Mantle?

http://www.maximumpc.com/nvidia-takes-mantle-enhanced-dx11-driver-2014/

No, I was specifically talking about DX-12, multi-core CPUs, and how much more work you can feed the GPU simultaneously.

Yeah, but you still haven't shown us how many cycles a draw call needs in order to be sent to the GPU.

Or how this will overcome the much slower execution of the game code itself.

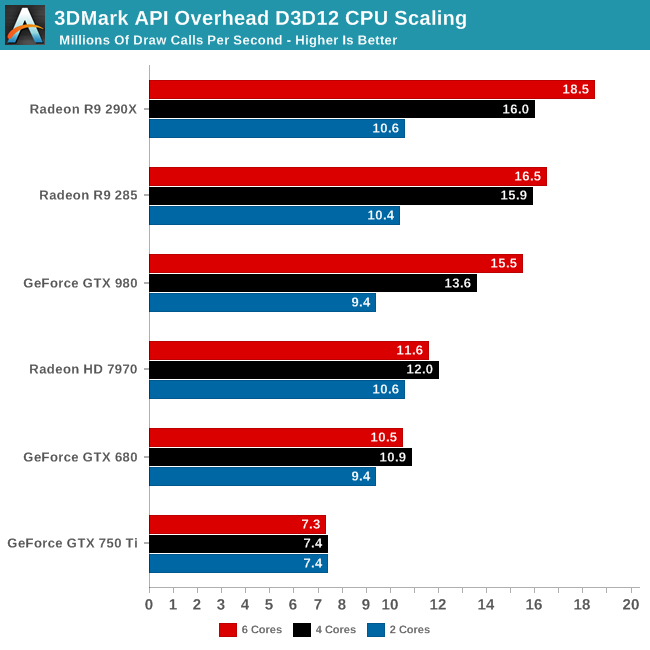

According to the Eurogamer 3D Mark API review, the FX CPUs, with slower single-thread performance but a higher core count, are as fast as or faster than the Intel CPUs with higher single-thread performance when issuing draw calls in DX-12.

For example, the triple-module, 6-thread FX 6300 is faster than the dual-core, 4-thread Core i3.

And the quad-module, 8-thread FX 8350 is close to or faster than the quad-core Haswell Core i5 4690K, which has far faster single-thread performance.

That is true only if you have something running on the CPU alone. In games where the GPU also does a lot of work, if your DX-12 game engine can scale to 6-8 threads in order to feed the GPU more work at any given time, 6-8 slower cores will give you higher fps than 4 faster cores.

That is because you manage to feed the GPU more work per unit of time.

So we went from definitely being faster on a 6 core than on only 4 cores to being quite a bit slower with 6 and just barely the same speed with 8 cores...

According to the Eurogamer 3D Mark API review you posted:

FX 6300 DX12 7.7m 12.6m 12.5m 12.7m

FX 8350 DX12 7.7m 14.1m 16.0m 14.8m

i5 4690K DX12 8.1m 14.1m 14.5m 14.7m
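To make the comparison in those three rows easier to read, here is a small sketch that expresses each FX result as a percentage difference from the i5 4690K. The thread never labels the four columns, so they are indexed 0 to 3 here instead of being named, and reading "m" as millions of draw calls per second is an assumption.

```python
# Figures copied from the rows above. Column meanings are not given in the
# thread, so columns are only indexed (0-3), not named. Treating "m" as
# millions of draw calls per second is an assumption.
results = {
    "FX 6300": [7.7, 12.6, 12.5, 12.7],
    "FX 8350": [7.7, 14.1, 16.0, 14.8],
    "i5 4690K": [8.1, 14.1, 14.5, 14.7],
}

def relative_to(baseline, data):
    """Return each other CPU's per-column result as a % difference from the baseline CPU."""
    base = data[baseline]
    return {
        cpu: [round((v / b - 1.0) * 100.0, 1) for v, b in zip(values, base)]
        for cpu, values in data.items()
        if cpu != baseline
    }

deltas = relative_to("i5 4690K", results)
for cpu, row in deltas.items():
    print(cpu, row)
```

On these numbers the FX 8350 ranges from roughly 5% behind to roughly 10% ahead of the i5, while the FX 6300 is about 5% to 14% behind in every column.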

Again, the Eurogamer review above is only about draw calls. In actual games your CPU will also have to perform other tasks, like AI and physics, simultaneously with the draw calls.

A much faster quad core could beat a much slower 6- or even 8-core CPU, but it would need to be on the order of 2x faster per core to do so.
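The "order of 2x" figure is what you get from the simplest possible model, where total throughput is just cores times per-core speed. A minimal sketch under that assumption (perfectly linear scaling, which real engines will not reach); the function name is made up for illustration:

```python
def break_even_per_core_ratio(fast_cores, slow_cores):
    """Per-core speed advantage the lower-core-count chip needs to match
    total throughput, assuming perfectly linear scaling with core count."""
    return slow_cores / fast_cores

print(break_even_per_core_ratio(4, 8))  # 4 fast cores vs 8 slow cores
print(break_even_per_core_ratio(4, 6))  # 4 fast cores vs 6 slow cores
```

Under this idealized model the quad core needs 2.0x the per-core speed to match 8 cores and 1.5x to match 6. Any work that does not scale across cores lowers that bar.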

This is also where the slow CPU will be hurt the most, because the game logic itself won't be multithreaded like there's no tomorrow. Sure, you can find a few exceptions. But that is about equal to claiming we all play chess games.

Also, those draw call numbers will never be hit in real life.

In short, it's unlikely that anything will change from how it looks today. If anything, you will be able to get away with less CPU performance. But more likely there will be no real change.

Heh, even today with DX-11, the latest games that can take advantage of 4-6 threads show a significant performance increase with the 8-core FX CPUs against the Core i5. Do you really believe that DX-12 games will not benefit more from a higher core count than DX-11 games?


You greatly overestimate the benefit. The short answer is no, I don't think DX12 games will benefit more from a higher core count than DX11 games do, if we exclude slow CPUs. Sure, the draw calls will be distributed more, and slower CPUs will benefit from the lower overhead. But that is about it. Everything related to the game logic doesn't change. And as for distribution, the sweet spot still seems to be 4 cores for DX12. Scaling to 6 cores gave 0-15%, and anything above that gave 0. And that is assuming you are draw call limited.
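The argument that unchanged game logic caps the benefit can be put in numbers with Amdahl's law. That is a standard model brought in here for illustration, not something anyone in the thread cited, and the 50/50 split between scalable and serial work below is a made-up value, not a measurement.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Speedup over one core when only parallel_fraction of the work scales with core count."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)

# Hypothetical split: half of the CPU frame time (draw call submission)
# scales with cores, the other half (game logic) stays on one thread.
p = 0.5
for cores in (4, 6, 8):
    print(cores, round(amdahl_speedup(p, cores), 2))

# Relative gain from doubling the core count from 4 to 8 under this split.
gain_4_to_8 = amdahl_speedup(p, 8) / amdahl_speedup(p, 4) - 1.0
print(round(gain_4_to_8 * 100, 1))
```

With that assumed split, going from 4 to 8 cores adds only about 11%. A larger parallel fraction would favor the higher core counts more, which is exactly what the two sides here disagree about.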