I hear this, but the trend is towards a hybrid solution where you only put what you absolutely need to in somebody else's data center: https://www.infoworld.com/article/3...ng-why-some-are-exiting-the-public-cloud.html

This is far more sensible, and it will eventually hit a tipping point back to private instances. My concerns, like those of the people who left the cloud, are security and a whole host of other problems with leasing someone else's equipment. There are a number of cases where leasing is far more expensive over the lifetime of the equipment than purchasing it yourself. I also see a huge chasm in understanding forming because people choose this EZ-bake oven solution to performance. A lot of runtime performance of compute solutions has gone into the gutter because no one knows how to deal with the underlying hardware; they're just slapping things into containers or provisioning software.
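To put rough numbers on the lease-versus-buy point, here is a minimal Python sketch. Every figure in it is made up for illustration (purchase price, monthly running cost, monthly lease price, a five-year lifetime), and the function names are just ones I picked. It ignores staffing, depreciation, and discounts, so treat it as a back-of-the-envelope check, not a real TCO model.

```python
def breakeven_months(purchase_cost, monthly_ownership_cost, monthly_lease_cost):
    """Months after which owning is cheaper than leasing, or None if it never is."""
    monthly_saving = monthly_lease_cost - monthly_ownership_cost
    if monthly_saving <= 0:
        return None  # leasing is never more expensive per month, so no break-even
    return purchase_cost / monthly_saving


def lifetime_costs(purchase_cost, monthly_ownership_cost, monthly_lease_cost, lifetime_months):
    """Return (total cost of owning, total cost of leasing) over the lifetime."""
    own = purchase_cost + monthly_ownership_cost * lifetime_months
    lease = monthly_lease_cost * lifetime_months
    return own, lease


# Hypothetical numbers: a $12,000 server, $200/month for power and hosting,
# versus a $700/month leased instance, over a 60-month lifetime.
months = breakeven_months(12000, 200, 700)
own, lease = lifetime_costs(12000, 200, 700, 60)
print(f"break-even after {months:.0f} months; own ${own}, lease ${lease}")
```

With these assumed inputs, owning wins after two years. Change the monthly figures and the answer flips easily, which is exactly why it depends on the case.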

Hardware is becoming more complex, not less. If I asked whether a server instance in the cloud was using NVMe drives, and if so what version, what do you think the answer would be? Do people know how to configure a server to optimally handle their workload? GPU or CPU? Memory bound or CPU bound? Yes, a lot of people love the idea of not having to know any of these details, and they pay for this luxury in many ways. A lot of them wouldn't know the first thing about distributed computing or load balancing beyond clicking a configuration option in software. This leads to a lot of crappy software and security issues. You can't have your cake and eat it too. I imagine you could cut compute requirements in half if people really understood the principles behind building such software themselves and specced the hardware to their specific requirements.
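For the memory-bound versus CPU-bound question, a rough roofline-style check is one way to reason about it: compare how many floating point operations a workload does per byte it moves against the ratio of the machine's peak compute to its peak memory bandwidth. The sketch below is a simplification under stated assumptions; the machine peaks (1 TFLOP/s, 100 GB/s) are hypothetical, and the byte counts assume ideal data movement with no cache effects.

```python
def classify_bound(flops, bytes_moved, peak_flops_per_s, peak_bytes_per_s):
    """Classify a workload with a simple roofline comparison."""
    intensity = flops / bytes_moved              # FLOPs performed per byte moved
    ridge = peak_flops_per_s / peak_bytes_per_s  # intensity where the machine balances
    return "memory-bound" if intensity < ridge else "compute-bound"


# Hypothetical machine: 1e12 FLOP/s peak compute, 1e11 bytes/s memory bandwidth.
PEAK_FLOPS = 1e12
PEAK_BW = 1e11

# Vector add of n float64 values: 1 FLOP per element, 24 bytes moved
# (two 8-byte reads and one 8-byte write).
n = 1_000_000
print(classify_bound(n, 24 * n, PEAK_FLOPS, PEAK_BW))

# Dense matrix multiply of two m x m float64 matrices: 2*m^3 FLOPs,
# 3 matrices of m^2 * 8 bytes each in the ideal case.
m = 1024
print(classify_bound(2 * m**3, 3 * m * m * 8, PEAK_FLOPS, PEAK_BW))
```

The vector add lands on the memory side and the matrix multiply on the compute side, so buying more cores helps one and does nothing for the other.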

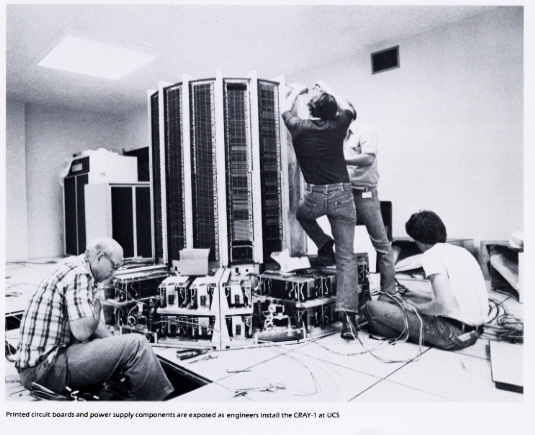

Instead, we exist in an age where people would rather run 800 cores' worth of machines at full tilt, brute forcing solutions using meme learning. There's a significant cost associated with this practice, which is why it's cyclical. Hardware is becoming complicated again and increasing substantially in capability. Mainframe/cloud eras go through a flux during such periods... namely because innovators begin writing new approaches to software now that they have an Amazon AWS rack's worth of computing in their room. New and widely varied hardware starts raining on the scale parade. Enterprise-level features begin trickling their way down to the consumer.

You can provision instances with or without SSDs and with or without GPU compute; they do give you quite a bit of granularity. At least with Amazon, you can ask people those questions and get specific answers. You pay for complexity and scale whether you own it or Amazon owns it, though; it's just a matter of whether the $$ goes to Amazon or to payroll/tooling (VMware ain't cheap). Increasingly, it doesn't make sense for general-purpose software shops to run their own server farms. For some specific uses it would matter, I agree, like video-related services where the exact codecs and the hardware that runs them matter a lot. I first used AWS in 2009, and the amount of new stuff since then blew me away when I looked into it again in 2017.