Confirmed - i9 9900k will have soldered IHS, no more toothpaste TIM




Who cares whether the decision to not use solder was to save $ in the BoM or to save $ by reducing RMA rates. In the end, it was all about $. It was the wrong decision for an enthusiast product, and correctly panned by the enthusiast community.

They made the right choice by re-introducing solder for the enthusiast parts.


Regarding thermal cycling: the thermal stress on the solder is directly proportional to the temperature delta. In the paper above, the cycle range is 180°C. Below a critical temperature delta, there will most likely be no fractures, because the thermal stress never exceeds the fracture strength of the material or of the bonds.

I wish people would read a bit; the link above explains it all.

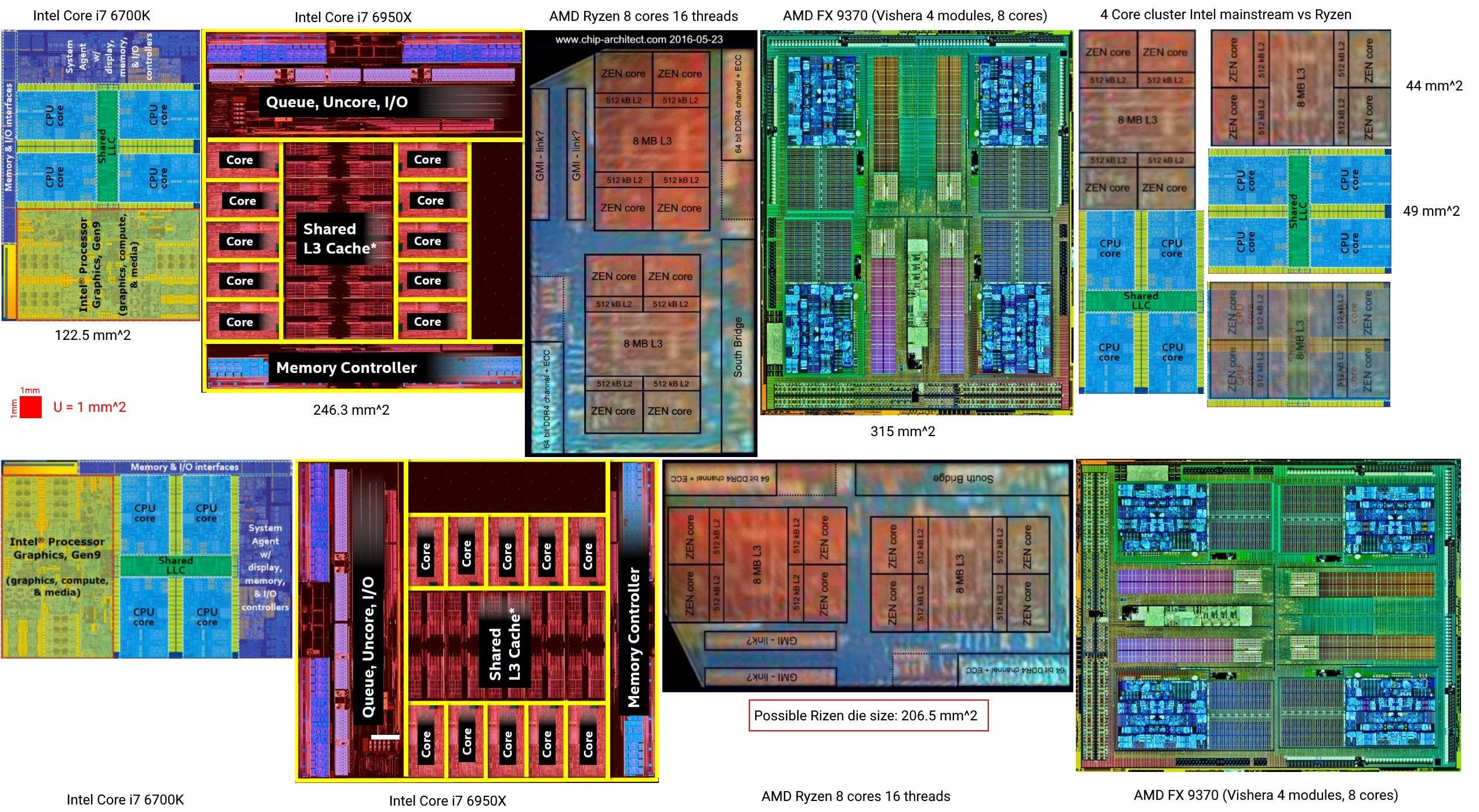

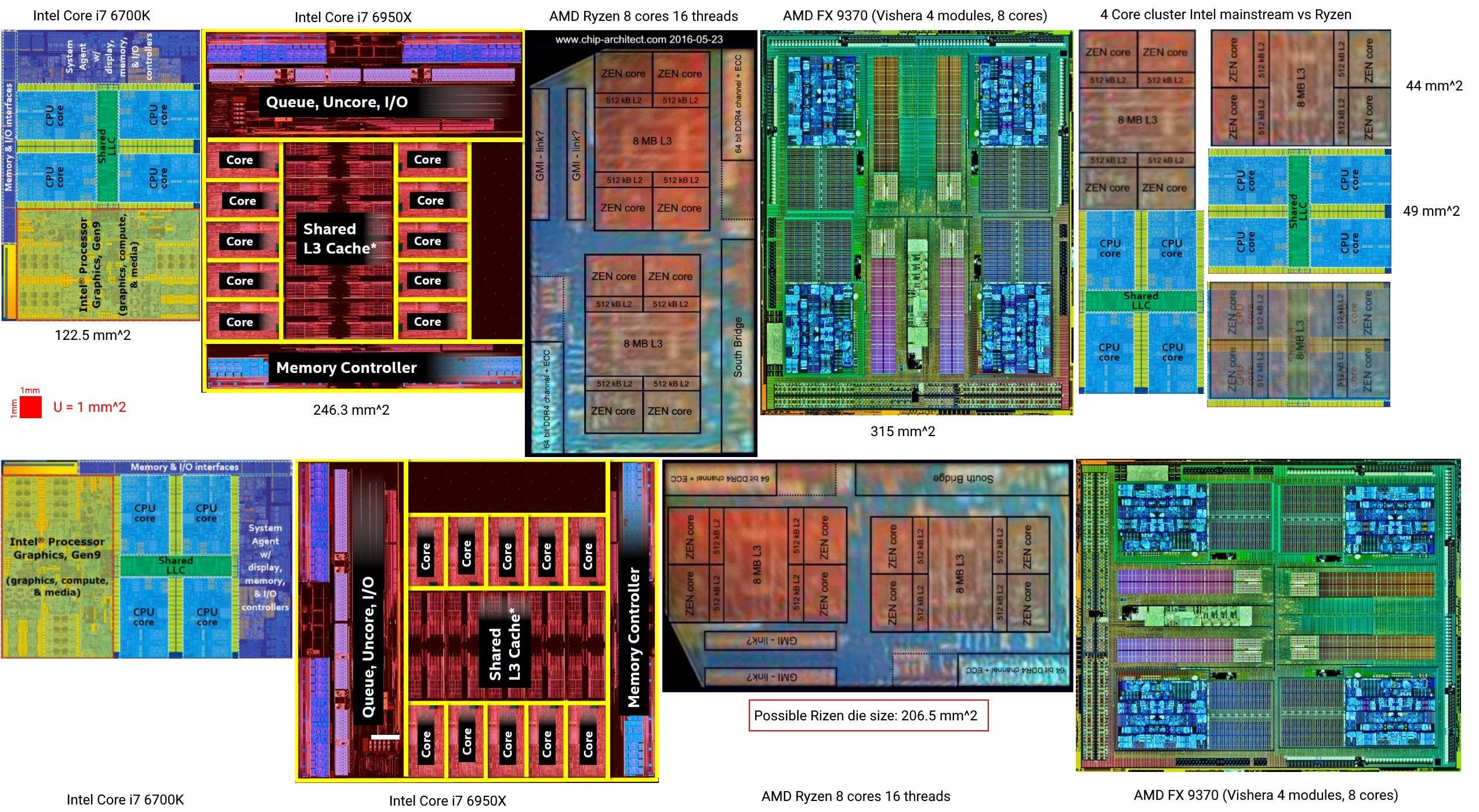

On small dies (what you call cheapo for AMD), neither Intel nor AMD uses solder, for this reason.

Intel's 4-core/8-thread CPUs were less than half the size of the 8-core FX series while doing the same work; the risk is too high to use solder on such small surfaces because the heat can't spread evenly.

Now Intel's 8-core has enough die area, so they will use solder. It's that simple.

Sauce:

https://www.reddit.com/r/Amd/comments/5st41v/speculation_i_tried_to_make_this_little/

Any two dissimilar materials bonded together will experience stress due to their different coefficients of thermal expansion. The key is to keep that stress from exceeding the strength of the material, much like fatigue failure modes.
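A minimal sketch of the mismatch idea, assuming a fully constrained silicon/solder bilayer with no creep or plasticity: the strain is ε = |α_solder - α_Si|·ΔT, so it (and the stress, roughly E·ε) scales linearly with the temperature swing. It also assumes an indium-based solder, as commonly reported for soldered IHS designs; the CTE values are approximate textbook figures and the critical strain is a hypothetical placeholder, not a measured fracture limit for any Intel package.

```python
# Sketch: thermal-mismatch strain in a die/solder bilayer.
# Assumptions: fully constrained layers, linear elastic, no creep.
# CTE values are approximate room-temperature figures (not Intel data).

CTE_SILICON = 2.6e-6  # 1/K, approximate
CTE_INDIUM = 32e-6    # 1/K, approximate (assumed indium-based solder)


def mismatch_strain(delta_t: float,
                    cte_a: float = CTE_INDIUM,
                    cte_b: float = CTE_SILICON) -> float:
    """Strain from differential expansion over a swing of delta_t kelvin."""
    return abs(cte_a - cte_b) * delta_t


def exceeds_threshold(delta_t: float, critical_strain: float) -> bool:
    """True if the cycle's mismatch strain is above a (hypothetical) critical strain."""
    return mismatch_strain(delta_t) > critical_strain


if __name__ == "__main__":
    # Illustrative swings: mild desktop use, aggressive OC, and the paper's 180 K range.
    for dt in (40.0, 80.0, 180.0):
        print(f"dT = {dt:5.1f} K -> strain = {mismatch_strain(dt):.2e}")
```

Because the relation is linear, halving the swing halves the strain; whether a given swing matters depends entirely on where the real critical strain sits.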

No one is bashing Intel for using solder. I don't see where you got that idea. In my case, I'm bashing all the posters who spent the last year ridiculing those of us who were bashing Intel for NOT using solder on their high-end CPUs. They claimed all sorts of technical reasons why solder is bad, and now they have gone silent.

It reads like it: in a thread about Intel soldering CPUs, we get linked to an article saying why soldering is BAD and how it can cause problems. Imagine a lurker who wants to buy the new chip; they might get overly worried, thinking that after a few years the solder will crack the chip. Heck, it's even putting doubt in my mind! :help:

I also agree with you. I couldn't see why such a large company could not find a way, even if there was some "hurdle" to cross.

You do know that people in labs don't have 4-5 years to test something, right?

They will often accelerate processes that would otherwise take too long.
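One common first-order way to extrapolate from large lab swings to milder real-world ones is the Coffin-Manson relation, sketched below under the assumption that cycles-to-failure scale as ΔT^-n. The exponent n = 2 is an assumed value often quoted for solder joints, not a figure for Intel's solder, and this simple form ignores dwell time, cycling frequency, and peak temperature (extensions such as Norris-Landzberg add those). The 60 K "home" swing is hypothetical.

```python
# Sketch: Coffin-Manson acceleration factor for thermal cycling.
# Assumption: N_f ~ C * dT**(-n), so AF = (dT_test / dT_field) ** n.


def acceleration_factor(dt_test: float, dt_field: float, n: float = 2.0) -> float:
    """How many field cycles one test cycle is 'worth' under Coffin-Manson."""
    if dt_test <= 0 or dt_field <= 0:
        raise ValueError("temperature swings must be positive")
    return (dt_test / dt_field) ** n


def equivalent_field_cycles(test_cycles: float, dt_test: float,
                            dt_field: float, n: float = 2.0) -> float:
    """Field cycles represented by a given number of accelerated test cycles."""
    return test_cycles * acceleration_factor(dt_test, dt_field, n)


if __name__ == "__main__":
    # Hypothetical: 180 K lab swing vs. a 60 K idle-to-load swing at home.
    af = acceleration_factor(180.0, 60.0)
    print(f"AF = {af:.1f}")
    print(f"1000 test cycles ~ {equivalent_field_cycles(1000, 180.0, 60.0):.0f} field cycles")
```

With these assumed numbers, each 180 K lab cycle stands in for roughly nine 60 K cycles at home; the real ratio depends on the exponent and on whether the milder swing stays below any fracture threshold at all.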

I'm not going to get into an argument about each and every single CPU.

The article said CPUs under 130 mm² have problems, but Intel has used TIM on CPUs above that for years, including my Core i7 3770. Another poster said the E8200 was soldered. Those chips have lasted for years soldered, and they are only about 100 mm². A 180°C delta is huge, and these are consumer chips not designed for that; chips designed for such usage would have to be built for extreme conditions, like the RAD750 and RAD6000, which are used in space.

Anyway, I am not going to worry about solder causing CPU problems. I would be more concerned about shoving excessive voltage through a CPU when overclocking.


It's a double-edged sword. I mean, if the soldered IHS comes very close to keeping the die as cool as delidded CPUs with gallium, etc., then sure, I'm all for it. However, if the results are still rather mediocre, then you're stuck with it, as delidding a soldered IHS is nearly impossible for the hobbyist and the risk isn't worth it.

It seems you don't know that der8auer himself delidded a Ryzen CPU and found only a slight improvement, ~4°C.

https://www.youtube.com/watch?v=VEUG9d4Mjug

The physical problems are the same; this is just an engineering/accounting tradeoff between RMA rates and performance. Now that AMD provides effective competition again, delivering optimal performance for the top mainstream SKUs has become a higher priority than minimizing RMA rates, because this will optimize Intel's profits.

Well, apparently Intel thinks solder no longer has that problem, as they are soldering their K-series chips, which consume the most power in the consumer line and would, by extension, suffer the most from thermal cycling if that were true.

If AMD had used TIM on Ryzen and consequently lost 10%(?) of overclocking headroom, with no single chip hitting even 4 GHz without delidding, how successful would Ryzen have been? Yeah, right. Basically the same tradeoff as for Intel – accept higher per-unit cost as well as RMA rates, make up for it with far more sales. Profit maximization.

AMD apparently does not agree, as all their Ryzen and Threadripper CPUs are soldered, and only their cheapo CPUs aren't. AMD has far less leeway for increased RMA rates than Intel due to their financial situation anyway; by that logic, they should be using thermal compound, as their margins are lower.

Yeah, well. People had the same worries when Intel switched from solder to TIM – "OMG what if it dries up after a few years?!?!". People will always find something to worry about.

Also, people are literally bashing Intel for using solder now and trying to spread fear that the CPUs are more likely to fail. So are people saying we should buy a Core i7 8700K instead of a Core i7 9700K, since the solder "might" cause more problems?? People worry too much. I hate to think how some of you would have felt about pencil mods and old Athlons, which would catch fire if the cooler failed!

beginner99


Let's hope so. After all these years of people griping about TIM, it will be interesting to see how much difference solder actually makes. My bet is not that much.

The TIM wasn't the biggest problem; the problem was the tolerance of the black glue used to fix the IHS. Too much glue and you have air gaps between the TIM and the IHS. Simply fixing that while keeping the TIM already leads to serious temperature improvements.
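To see why bond-line thickness (and the glue gap that sets it) matters, a one-dimensional conduction estimate is enough: a layer of thickness t, conductivity k, and area A has thermal resistance R = t / (k·A), and the temperature drop across it is P·R. Every number below (150 W, a 150 mm² die, 5 W/m·K paste, ~82 W/m·K indium, the thicknesses) is an illustrative assumption, not a measurement of any Intel part, and the model ignores contact resistance and heat spreading.

```python
# Sketch: 1-D conduction through a thermal interface layer.
# All inputs are illustrative assumptions, not measured Intel values.


def layer_resistance(thickness_m: float, k_w_per_mk: float, area_m2: float) -> float:
    """Thermal resistance R = t / (k * A), in K/W."""
    return thickness_m / (k_w_per_mk * area_m2)


def temperature_drop(power_w: float, thickness_m: float,
                     k_w_per_mk: float, area_m2: float) -> float:
    """Temperature drop across the layer, dT = P * R, in kelvin."""
    return power_w * layer_resistance(thickness_m, k_w_per_mk, area_m2)


if __name__ == "__main__":
    POWER_W = 150.0        # hypothetical package power under AVX load
    DIE_AREA_M2 = 150e-6   # hypothetical 150 mm^2 die
    cases = {
        "paste, 50 um": (50e-6, 5.0),
        "paste, 100 um (extra glue gap)": (100e-6, 5.0),
        "indium solder, 200 um": (200e-6, 82.0),
    }
    for name, (t, k) in cases.items():
        dt = temperature_drop(POWER_W, t, k, DIE_AREA_M2)
        print(f"{name:32s} -> {dt:5.1f} K across the TIM")
```

Doubling the bond line doubles the drop, which is the mechanism behind the "too much glue" complaint; the solder case wins mainly on conductivity, even with a thicker layer.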

He is an extreme overclocker. He is measuring a 180°C delta.

Exactly. That link has been going around for years. This thermal cycling stress comes from extreme OC with liquid nitrogen. Even a normal overclocker won't get close to such thermal stress, not even half of that delta-T.

moonbogg


Who cares whether the decision to not use solder was to save $ in the BoM or to save $ by reducing RMA rates. In the end, it was all about $. It was the wrong decision for an enthusiast product, and correctly panned by the enthusiast community.

They made the right choice by re-introducing solder for the enthusiast parts.

Yes, but screw them anyway for the years of suffering they caused.


I suspect previous decisions to use TIM over solder were cost-driven.

If there was really an inherent flaw in using solder, then why have we not seen loads of 2500Ks / 2600Ks running high OCs and high volts fail because of it?

If it is to do with die area, then why hasn't Intel used solder on the Skylake-X CPUs, which have massive dies and heat output?

And why has AMD decided to use solder on their Ryzen CPUs?

It has to be cost-related, which, if correct, is poor, because we buy K CPUs for overclocking yet they are gimped.


Also, regarding RMA rates: surely making your chips run hotter, through a poor thermal interface between the bare chip and the cooler, will also increase potential failure rates?

cytg111


What are you talking about? Solder's melting point is far higher than the Tj max of the CPU. The solder isn't changing state.

I believe the argument you are trying to make is about microfractures (almost like cold solder joints) in the solder causing damage to the die. I don't think this argument has any merit, though, as there hasn't been any proof that soldered chips have a shorter lifespan. In fact, it seems more to the contrary: I've had an Athlon 64 X2 (Brisbane) essentially break on me because the paste dried up and caused the temperatures to skyrocket no matter what cooling was used. It wasn't until I delidded the CPU and ran it bare-die that the temperatures returned to normal.

At the end of the day, it's all about cost, just as it's always been. Intel has been using paste for its CPUs for a long time, much longer than Ivy Bridge. For example, a Core 2 Duo E7200 uses paste but a Core 2 Duo E8200 is soldered. It obviously wasn't something technical that caused Intel to use paste for the E7200; it was price, as they're almost exactly the same chips.

You will have a hard time arguing with data. If a study provides proof that microfractures are a thing, then either discredit the authors of said study or the study itself, or get with the program. Getting into whether Core 2 Duos were soldered or not back in the day is specifically not helpful to the current state of affairs.


Wtf, some Core 2 Duos are smaller than that but still soldered, and even AMD themselves stated that the reason they don't use solder on the Ryzen APUs is cost, not an engineering challenge.



But he presented another data point: in his case, a small Core 2 Duo that was soldered. And the study is flawed: it's only true for extreme conditions, and in this case the study uses an extreme temperature delta.


Yeah, the kind of temperature deltas that consumer chips like this are not designed for. Specialised chips like the RAD series used for space operations are, and that includes optimised packaging.

I still don't get why people are now spreading "data" trying to push the idea that solder makes chips more likely to fail. So what is the point of this?? To scare people off buying a Core i7 9700K or Core i9 9900K?

Intel has just decided to solder its consumer chips with the biggest possible temperature deltas, so it can't be that big of an issue. That should help with 8 cores clocked at nearly 5 GHz and mean more efficient use of coolers.

To those who are that worried about solder: you might as well panic and buy a Core i7 8700K before stocks run out. Nobody is forcing you to buy soldered chips if you are that worried.

Let people like us, who are genuinely happy Intel is doing this, be happy instead of being spoilsports!!


Question: why don't they use liquid metal anyway? It must be cheaper than solder using exotic metals.

Because it has to be redone every couple of years.

While I am slightly happier (not in the market for a CPU for the foreseeable future), I will not be "genuinely" happy until they also cut the IGP from enthusiast chips.

chrisjames61



The easy fix for this is to buy into the AM4 platform and build a Ryzen rig.

french toast


Because it has to be redone every couple of years.

Ah, cheers.

frozentundra123456


The physical problems are the same; this is just an engineering/accounting tradeoff between RMA rates and performance. Now that AMD provides effective competition again, delivering optimal performance for the top mainstream SKUs has become a higher priority than minimizing RMA rates, because this will optimize Intel's profits.

If AMD had used TIM on Ryzen and consequently lost 10%(?) of overclocking headroom, with no single chip hitting even 4 GHz without delidding, how successful would Ryzen have been? Yeah, right. Basically the same tradeoff as for Intel – accept higher per-unit cost as well as RMA rates, make up for it with far more sales. Profit maximization.

Yeah, well. People had the same worries when Intel switched from solder to TIM – "OMG what if it dries up after a few years?!?!". People will always find something to worry about.

Don't know what it would have been with Ryzen, but the loss in overclocking headroom is far, far less than 10% with Skylake. Think about it: most Coffee Lake CPUs can reach 4.8 GHz, usually 5 GHz. An additional 10% on top of that would be another 0.5 GHz. There is no way simply changing to solder would come even close to that, no way. Now granted, cooling is better, but the actual overclocking headroom gain is what, only 0.2 GHz or so?


Don't know what it would have been with Ryzen, but the loss in overclocking headroom is far, far less than 10% with Skylake. Think about it: most Coffee Lake CPUs can reach 4.8 GHz, usually 5 GHz. An additional 10% on top of that would be another 0.5 GHz. There is no way simply changing to solder would come even close to that, no way. Now granted, cooling is better, but the actual overclocking headroom gain is what, only 0.2 GHz or so?

Depends on how bad your TIM application/IHS gap is. When testing with an AVX stress test like Prime95 or AIDA64 CPU/FPU/cache, my temps won't allow going past 4.7 GHz. More than 50% of samples reach 5.1-5.2 GHz with a delid (Silicon Lottery: >86% hit 5 GHz+, >50% hit 5.1 GHz+), so I'd have a better than 50/50 shot at +400-500 MHz if I delidded. A 15-25°C temperature improvement is nothing to sneeze at.

But with the soldered i9-9900K coming out next quarter, I don't plan to waste my time chasing that last ~10%.
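The headroom figures traded in this exchange are simple percentages, so they can be checked directly. This sketch just reproduces the arithmetic from the posts (4.7 GHz thermally limited vs. 5.1-5.2 GHz delidded, and 10% on top of ~5 GHz); the function names are illustrative, not from any tool.

```python
# Sketch: overclocking headroom arithmetic from the figures quoted in the thread.


def headroom_pct(base_ghz: float, target_ghz: float) -> float:
    """Percentage gain going from base_ghz to target_ghz."""
    return (target_ghz - base_ghz) / base_ghz * 100.0


def gain_ghz(base_ghz: float, pct: float) -> float:
    """Absolute clock gain for a percentage uplift on base_ghz."""
    return base_ghz * pct / 100.0


if __name__ == "__main__":
    for target in (5.1, 5.2):
        print(f"4.7 -> {target} GHz: +{headroom_pct(4.7, target):.1f}%")
    print(f"10% on top of 5.0 GHz: +{gain_ghz(5.0, 10.0):.2f} GHz")
```

So the +400-500 MHz from a delid works out to roughly 8.5-10.6% over 4.7 GHz, in line with the "last ~10%" figure, while a 0.2 GHz gain on a ~5 GHz chip would be about 4%.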

It seems you don't know that der8auer himself delidded a Ryzen CPU and found only a slight improvement, ~4°C.

https://www.youtube.com/watch?v=VEUG9d4Mjug

But this is a discussion about Intel, not AMD.

If you want the best results and don't care about cost, why even deal with a heatspreader? Just engineer your own direct-die contact block and be done with it.

And 4°C is not what I'd consider slight if you're pushing things to the very edge! Anyone who is delidding chips isn't your typical person overclocking for 24/7 100% load who's looking to maximize throughput and have longevity and stability suitable for heavy, continuous workloads.


4C just isn't enough of a delta to be meaningful and justify delidding.


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.