I thought that is what I just said.

Yes, I was just backing up what you said with a quote from the release notes.

I thought that is what I just said.

And I think you missed the part where he said "The Async Compute mode is slightly faster (<5%)."

I think you missed the part where he said "No difference at all."

The ones I bolded out?

What "Extremes" are these?

I'm not creating stories...

This article was from December 2014, after Maxwell v2 was launched and nVidia had announced FinFET for Pascal. Obviously nVidia will skip 20nm for their GPUs... :\

Where is the information that nVidia announced Maxwell will be using 20nm?

The line is very clear to me. nVidia gamers would be concerned about Pascal because of your fanfiction. Yet AMD's users don't care about missing features like CR, ROV...

You are creating stories upon stories to hype AMD, and you can't even back them up, like this:

Tell us how we can ask the driver about it. With your insider information it should be easy to link to an article or a program.

No: https://forum.beyond3d.com/threads/intel-gen9-skylake.57204/page-6#post-1869935

https://forum.beyond3d.com/threads/intel-gen9-skylake.57204/page-7#post-1869983

Don't ever bother arguing with these ADFs, they are clueless as hell and don't know anything except to slander and FUD about things they don't understand.

I'm not creating stories...

http://www.techpowerup.com/mobile/2...s-amd-gcn-better-prepared-for-directx-12.html

And where did they get that quote? I invited Oxide over to overclock.net. Here's where the quote is from:

Second link page 122 of the thread:

https://www.google.com/search?q=oxi...#q=oxide+async+compute+disaster+overclock.net

Many other links reporting it there.

Now you want to know how I know all of these things? If you read the thread you'll find out.

I already put up a Beyond3D test which confirmed it, and further testing has also confirmed it. Findings published here:

http://ext3h.makegames.de/DX12_Compute.html

Do you have any statements other than the ones from (AMD-sponsored) Oxide that usually make the rounds on wccftech?

Actually yes, Fable Legends.

So no. So what happens if AotS isn't showing what will be the standard for DX12 games?

I'm not making any of this up.

Exactly, while I don't doubt nvidia's support for 12_1, it is true that "the nvidia driver exposed Async Compute as being present" but when Oxide went to use it it was "an unmitigated disaster". Oh and I didn't say what's in quotations, Oxide did.

Actually yes, Fable Legends.

Asynchronous compute testing showed the same thing:

http://wccftech.com/asynchronous-compute-investigated-in-fable-legends-dx12-benchmark/

Second page:

http://wccftech.com/asynchronous-compute-investigated-in-fable-legends-dx12-benchmark/2/

Fable Legends uses very little Async Compute, less than AotS. Hitman will truly show off the feature.

Very little Async Compute, as I already mentioned, and you can see it with your own eyes in those graphs from wccftech, but you missed something...

I see you really like wccftech.

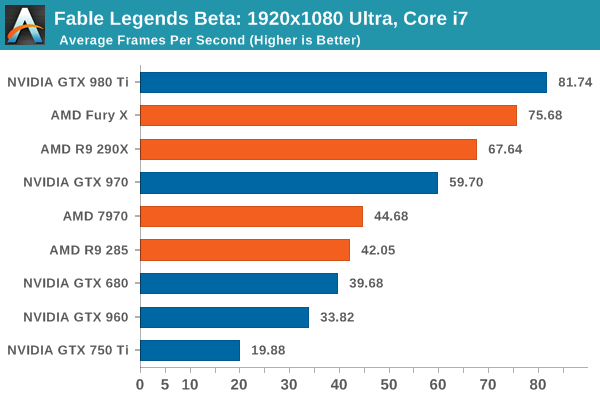

So Fable is DX12. And how is the GPU performance there?

http://techreport.com/review/29090/fable-legends-directx-12-performance-revealed

Or is there too much tessellation there?

It's not "a little tessellation". AMD GCN can handle tessellation factors of up to x15/x16 without suffering from the small-triangle problem in GCN 1, 2 and 3. It's only when you over-tessellate that GCN 1, 2 and 3 tank. Of course, Tonga and Fiji fare better than Tahiti and Hawaii. Polaris will likely rectify this, hence its brand-new geometry processing units.

But the result matters, doesn't it? What if the vast majority of games look like this?

Add some extra tessellation and AMD performance goes bonkers.

Of course, but we're theorising about future performance based on our collective knowledge of GPUs and API features.

Can we agree that the only thing that matters is the result, and what people will buy and play? Tessellation, Async or not, it's all secondary.

I still wait for any proof that Microsoft has designed a way to get this information about multi engine support from the driver.

Umm, Microsoft tools query the driver in order to determine feature support.

All GPU tools query the driver, see GPU Caps Viewer:

http://www.ozone3d.net/gpu_caps_viewer/

Oxide did the same, the nvidia driver exposed the feature, Async Compute, as being available but once they attempted to use it... Disaster.

Nvidia wanted Oxide to "shut it down" meaning remove all Async Compute from the game. Oxide refused and came to an agreement on creating a vendor ID specific path.

Again, drivers expose features and tools which look for feature support query the driver.

Now let's ask nvidia:

https://developer.nvidia.com/nvemulate

"By default, the NVIDIA OpenGL driver will expose all features supported by the NVIDIA GPU installed in your system."

Or Microsoft:

https://msdn.microsoft.com/en-us/library/jj134360(v=vs.85).aspx

"DirectX 9 drivers (which expose any of the D3DDEVCAPS2_* caps)"

Drivers expose features.

You haven't answered the question. What is the cap that describes the multi-engine support of the underlying hardware?

Seriously, what you just wrote is nothing else than:

nVidia has provided a DX12 driver so they are supporting everything AMD supports. :\

I still wait to see how a "DX12 driver" can or cannot support Async Compute.