TheELF

Yes, yes it is. 1.1 W of power draw is amazeballs, best CPU ever.

> The problem is users are going to get burned: the same users that know little about overclocking and cooling are the ones tempted by motherboards with disabled limits and ridiculous stock voltage settings to "safely" enable MCE by default.

But those are also the people who will never run an AVX-heavy stress test, for the very simple reason that they don't even know it's a thing.

They are going to run Battlefield or Fortnite and that's it, and the CPU is going to run cool and quiet even if it draws more power than necessary.

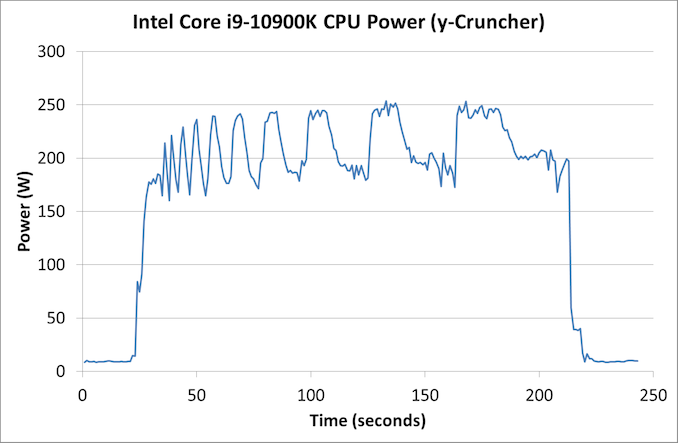

> Your points on wattage are ridiculous. The average wattage for a 10900K is 254 if PL2 is used. The peak was 380 watt.

No, max draw is not average draw. The CPU is optimized to such a degree that it alternates between high and low draw extremely fast.

You would have to lock the CPU to PL2 indefinitely, which would be a pretty heavy O/C, to get 250 W, and even then it would not be the average because SpeedStep would still be doing its job.

It would be the power draw during testing or running heavy loads only.

380 W is only possible with every restraint removed.

Also, for everybody having an issue with Phoronix: ComputerBase has an average, and they only use software that heavily favors Ryzen. All their tests are 3D rendering, video transcoding, archiving, PhotoScan and y-cruncher.

Completely ignoring the gaming win.

On single-threaded the 10900K is 8% ahead of the 3900X, while on multi-threaded the 3900X is 10% ahead of the 10900K. That is a whopping 2% net win for the 3900X while it has 20% more cores (12 vs. 10), so basically still an 18% loss compared to the ~23% of Phoronix.
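The back-of-the-envelope math above, spelled out. This just subtracts percentage points the same way the post does, so treat it as a rough comparison rather than a rigorous scaling analysis; the variable names are only for illustration.

```python
# Percent leads quoted in the post (ComputerBase averages).
single_lead_10900k = 8   # 10900K ahead in single-threaded
multi_lead_3900x = 10    # 3900X ahead in multi-threaded

# Core counts of the two CPUs.
cores_10900k = 10
cores_3900x = 12

# Net lead for the 3900X across both ratings, in percentage points.
net_lead_3900x = multi_lead_3900x - single_lead_10900k

# Core-count advantage of the 3900X, in percent.
core_advantage = (cores_3900x - cores_10900k) * 100 / cores_10900k

# How far the net lead falls short of the core-count advantage.
shortfall = core_advantage - net_lead_3900x

print(net_lead_3900x, core_advantage, shortfall)
```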

Intel Core i9-10900K und i5-10600K im Test: Benchmarks in Spielen und Anwendungen / Testsystem und Methodik (English: "Intel Core i9-10900K and i5-10600K review: benchmarks in games and applications / test system and methodology")