As for cache latency, it is about what you might expect from this organization. For details, you will have to look for official sources or leaks.

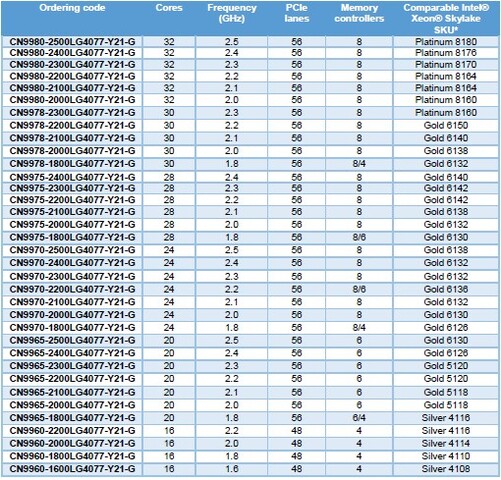

Well, this is pretty important. The node is already better, and 7nm will be practically well ahead of 10nm. There is no "mess in node names". Qualcomm already has a 7nm Centriq with an improved core and higher IPC in the pipeline, likely coming in 2019. Intel will have 10nm (a node that should be slightly better than Samsung's 7nm) at around the same time. So it really isn't an advantage.