HBM1's main advantage is power consumption. Its disadvantage is price.

Speed-wise, HBM1 and GDDR5 are about equal. 4 stacks (4096-bit) of 1 GHz HBM = 512 GB/s. 8 GHz GDDR5 on a 512-bit bus = 512 GB/s.

If we forget about the fact that driving GDDR5 at 8 GHz over a 512-bit bus is very power-demanding as well as die-space-consuming, yeah, it's equal.
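For anyone who wants to check the arithmetic behind those two 512 GB/s figures, here is a rough sketch. It assumes the "1 GHz" and "8 GHz" numbers are effective per-pin data rates (1 Gbps and 8 Gbps), and that the 4096-bit HBM bus is 4 stacks of 1024 bits each. The function name `peak_bandwidth_gb_s` is made up for illustration. This is theoretical peak only and says nothing about power or die area.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    Assumes every pin transfers `data_rate_gbps_per_pin` gigabits per second;
    dividing by 8 converts bits to bytes.
    """
    return bus_width_bits * data_rate_gbps_per_pin / 8


# HBM1: 4 stacks x 1024 bits each (assumed split of the 4096-bit bus), 1 Gbps per pin
hbm1 = peak_bandwidth_gb_s(4 * 1024, 1.0)

# GDDR5: 512-bit bus, 8 Gbps per pin
gddr5 = peak_bandwidth_gb_s(512, 8.0)

print(f"HBM1:  {hbm1:.0f} GB/s")
print(f"GDDR5: {gddr5:.0f} GB/s")
```

Same peak number either way: HBM gets there with a very wide, slow bus, GDDR5 with a narrow, fast one.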

It's been publicly said that the GDDR5 memory subsystem takes up 1/5th to 1/3rd (depending on the bus size) of the die area as well as the overall TDP.

Note that any power savings as well as die space savings from HBM can be devoted to performance.

Latency matters for computing & rendering, because multiple passes are required (shadows, bump maps, lighting, post effects and much more) to render a complex scene, and in between those passes the data is shuffled around. Lower latency => higher uptime on shaders and fewer idle cycles, resulting in increased efficiency or IPC per shader and thus better overall performance.

Also, R290/X isn't competing with the 970/980. That's the reason for AMD's major market share loss. The average buyer sees that an R290X system uses 100W more, so they avoid it and are willing to pay extra for the 970, despite its lower performance and crippled VRAM. Thus, to "rebrand" it to keep competing with the 970/980 for the next cycle is suicidal.

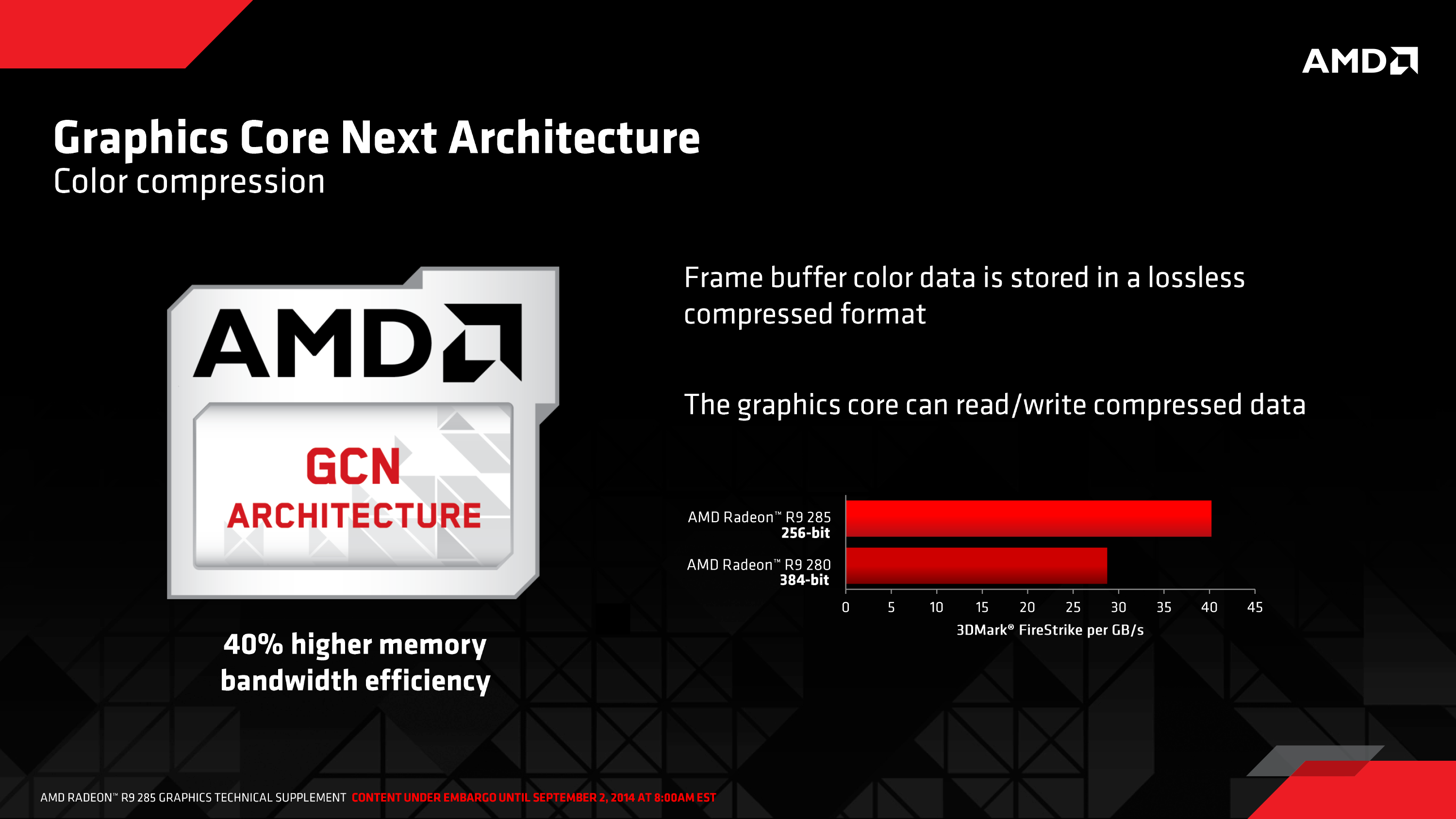

And Tonga XT is a stop-gap, a test measure, which AMD is lucky to pawn off to Apple. It does not improve efficiency or performance over Tahiti enough to make it competitive. AMD needs a "Maxwell leap" of their own in order to compete. Tonga is not going to compete well in the segment that the 960/XT occupies as long as it uses much more power.

So, re-branding products that aren't selling and aren't competitive, and asking them to compete for the next cycle? No. Only utter newbies would even think of such a move. I doubt that AMD is that stupid.