AMD is so awful that games are subpar and are a dissaster. Even Intel Skylake GPU are way stronger that those crappy consoles GPU according to Intel users.

Facepalm. You have no clue what you are talking about. Ever since you joined the forum, what you have needed to do is learn, read, learn, research, learn more, reflect, read, learn. Instead, you post the most unsubstantiated drivel with the confidence of a senior technical member, yet fail to back up any of your opinions. How old are you, 15?

Let's actually look at how ludicrous your statements are:

Technical analysis

The GPU in the Xbox One is about as fast as an HD7790, and that performance level is very close to the GTX750. The GPU in the PS4 is at least as fast as an R7 265, which is faster than the GTX750Ti by 16%. Since the PS4's GPU also has custom features such as 8 ACE units and 176GB/sec memory bandwidth, along with an extremely fast interconnect between the CPU and GPU, the actual maximum GPU performance lies somewhere between an HD7850 and the HD7870. That level of GPU performance is miles away from any reasonably affordable Skylake iGPU.

Let's talk about 3 specific custom areas of PS4's GPU that ensure the overall package is even better than the HD7850:

1) There is a separate bus from the CPU to the GPU that allows it to read directly from system memory or write directly to system memory, bypassing its own L1 and L2 caches. As a result, if the data that's being passed back and forth between CPU and GPU is small, you don't have issues with synchronization between them anymore. PS4 can pass almost 20 gigabytes a second (20GB/sec) down that bus.

That's faster than PCIe 3.0 x16.
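For anyone who wants to check that comparison, here is a rough back-of-the-envelope sketch. The PCIe 3.0 numbers (8 GT/s per lane, 128b/130b encoding) are the standard published link parameters; the 20GB/sec figure is just the "almost 20" number quoted above, and the function name is made up for illustration.

```python
# Rough check: usable PCIe 3.0 bandwidth per direction vs. the ~20 GB/s bus.

def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Usable bandwidth per direction, in GB/s, for a PCIe 3.0 link."""
    transfers_per_s = 8e9      # 8 GT/s per lane
    encoding = 128 / 130       # 128b/130b line encoding overhead
    bits_per_s = transfers_per_s * encoding * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> GB

PS4_BUS_GB_S = 20.0  # "almost 20 GB/s", the figure quoted in this post

x16 = pcie3_bandwidth_gb_s(16)
print(f"PCIe 3.0 x16: {x16:.2f} GB/s per direction")
print(f"Quoted PS4 bus vs. x16: {PS4_BUS_GB_S / x16:.2f}x")
```

This only compares peak link rates per direction; it says nothing about latency, which is the bigger win of an on-die bus.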

2) Next, to support the case where you want to use the GPU L2 cache simultaneously for both graphics processing and asynchronous compute, PS4's custom GPU adds a tag bit to the cache lines, the 'volatile' bit. You can then selectively mark all accesses by compute as 'volatile,' and when it's time for compute to read from system memory, it can invalidate, selectively, the lines it uses in the L2. When it comes time to write back the results, it can write back selectively the lines that it uses. This innovation allows compute to use the GPU L2 cache and perform the required operations without significantly impacting the graphics operations going on at the same time -- in other words, it radically reduces the overhead of running compute and graphics together on the GPU.
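If the 'volatile' bit idea sounds abstract, here is a toy model of it. To be clear, this is my own simplified sketch, not how the PS4 hardware is actually implemented: the class names, the dict-based "memory" and the one-value-per-line layout are all assumptions made purely for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Line:
    data: int
    volatile: bool = False  # True when the line was touched by compute
    dirty: bool = False     # True when the line holds unwritten results

@dataclass
class ToyL2:
    memory: dict                                 # stand-in for system memory
    lines: dict = field(default_factory=dict)    # addr -> cached Line

    def read(self, addr, volatile=False):
        # On a miss, fetch from memory and tag the line if compute asked for it.
        if addr not in self.lines:
            self.lines[addr] = Line(self.memory[addr], volatile)
        return self.lines[addr].data

    def write(self, addr, value, volatile=False):
        self.lines[addr] = Line(value, volatile, dirty=True)

    def invalidate_volatile(self):
        """Drop only compute-tagged lines (write back first, or dirty data is lost)."""
        self.lines = {a: l for a, l in self.lines.items() if not l.volatile}

    def writeback_volatile(self):
        """Flush only dirty compute-tagged lines; graphics lines are untouched."""
        for addr, line in self.lines.items():
            if line.volatile and line.dirty:
                self.memory[addr] = line.data
                line.dirty = False

mem = {0: 10, 1: 20}
l2 = ToyL2(mem)
l2.read(0)                   # graphics access, untagged
l2.read(1, volatile=True)    # compute access, tagged volatile
mem[1] = 99                  # CPU updates system memory behind the cache
l2.invalidate_volatile()     # only the compute line is dropped
fresh = l2.read(1, volatile=True)  # refetched from memory
l2.write(1, 123, volatile=True)
l2.writeback_volatile()      # only the compute line is flushed
```

The point of the sketch: the graphics line at address 0 stays cached through both the invalidate and the write-back, which is exactly why compute doesn't trash the graphics working set.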

Considering NV's and Intel's GPUs of 2013 were vastly inferior to GCN 1.0/1.1 in compute, this means that as far as longevity goes, they weren't even on the map in 2013 when it comes to using Asynchronous Compute + graphics.

3) "The original AMD GCN architecture allowed for one source of graphics commands, and two sources of compute commands. For PS4, Sony has worked with AMD to increase the limit to 64 sources of compute commands (8 ACE engines) -- the idea is if you have some asynchronous compute you want to perform, you put commands in one of these 64 queues, and then there are multiple levels of arbitration in the hardware to determine what runs, how it runs, and when it runs, alongside the graphics that's in the system."

http://gamasutra.com/view/feature/191007/inside_the_playstation_4_with_mark_.php?page=2
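To make the "64 queues with multiple levels of arbitration" idea concrete, here is a toy sketch. Big caveat: the real arbitration policy isn't described in the interview, so the two-level round robin below is purely my assumption, as is the even 8x8 split of the 64 queues across the 8 ACEs. All names are hypothetical.

```python
from collections import deque

NUM_ACES = 8
QUEUES_PER_ACE = 8  # assumption: 64 queues split evenly across 8 ACEs

class ToyScheduler:
    def __init__(self):
        self.queues = [[deque() for _ in range(QUEUES_PER_ACE)]
                       for _ in range(NUM_ACES)]
        self._next_ace = 0
        self._next_queue = [0] * NUM_ACES

    def submit(self, ace, queue, command):
        self.queues[ace][queue].append(command)

    def next_command(self):
        """Two-level round robin: across ACEs first, then across that ACE's queues."""
        for i in range(NUM_ACES):
            ace = (self._next_ace + i) % NUM_ACES
            for j in range(QUEUES_PER_ACE):
                q = (self._next_queue[ace] + j) % QUEUES_PER_ACE
                if self.queues[ace][q]:
                    # Advance both pointers so the next pick starts elsewhere.
                    self._next_ace = (ace + 1) % NUM_ACES
                    self._next_queue[ace] = (q + 1) % QUEUES_PER_ACE
                    return self.queues[ace][q].popleft()
        return None  # nothing pending in any queue

sched = ToyScheduler()
sched.submit(0, 0, "a1")
sched.submit(0, 0, "a2")
sched.submit(3, 5, "b1")
order = [sched.next_command() for _ in range(4)]
```

Even in this crude model, the work on ACE 3 gets served between the two commands on ACE 0 instead of waiting behind them, which is the whole point of having many independent queues.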

In other words, when it comes to pure graphics throughput, PS4's GPU with 32 ROPs, 72 TMUs, 1152 stream processors, a 176GB/sec memory bus, 8 ACE engines, volatile compute, and a direct 20GB/sec bus to the CPU would wipe the floor with any Skylake iGPU in gaming performance.
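For reference, the 176GB/sec figure isn't magic, it falls straight out of bus width times data rate. A quick sketch, assuming the widely reported PS4 memory configuration of a 256-bit bus with GDDR5 at 5.5 Gbps per pin (neither number is from the interview above, so treat them as my assumption):

```python
def memory_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: pins x per-pin data rate, bits -> bytes."""
    return bus_width_bits * gbps_per_pin / 8

# Assumed PS4 configuration: 256-bit bus, GDDR5 at 5.5 Gbps effective per pin.
ps4_bandwidth = memory_bandwidth_gb_s(256, 5.5)
print(f"PS4 peak memory bandwidth: {ps4_bandwidth:.0f} GB/s")
```

This is a peak theoretical number; real-world throughput is lower once the CPU and GPU contend for the same memory.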

Considering neither ARM, nor Intel, nor NV could provide an affordable quad-core or 8-core CPU that could match the 1.6-1.75GHz Jaguar cores, even if the Jaguar APU is slow relative to an i5 2500K or similar, it was hands down THE best option given the budgets MS/Sony had to work with.

Financial situation of MS and Sony before PS4/XB1's launch

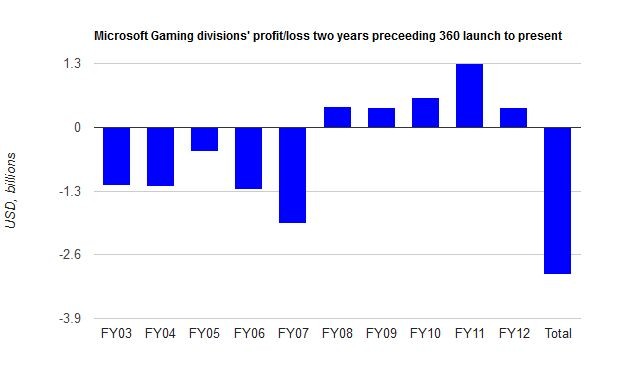

Report: Microsoft's Xbox division has lost nearly $3 billion in 10 years

In July 2015, IGN reported that MS posted a $2.1B loss.

Sony's game business was nothing short of abysmal prior to PS4.

I am not going to do more research on the 2011-2013 years, but in October 2014, IGN reported that Sony overall posted a $1.2B loss.

In other words, Xbox 360 and PS3 were utter failures from a business perspective. That means neither Sony nor MS could afford or sustain a strategy of selling a console whose bill of materials substantially exceeded its sale price.

Tying Xbox 1/PS4 BOM to launch MSRP

"The bill of materials (BOM) for the PlayStation 4 amounts to $372. When the manufacturing expense is added in, the cost increases to $381. This comes in $18 lower than the $399 retail price of the console.

When other expenses are tallied, Sony initially will still take a loss on each console sold. But the relatively low BOM of the PlayStation 4 will allow the company to break even or attain profitability in the future as the hardware costs undergo normal declines."

http://press.ihs.com/press-release/...-breakeven-point-playstation-4-hardware-costs

"Microsoft may be spending slightly more to build and distribute the Xbox One than it's taking in, according to a new report from IHS iSuppli.

The research firm said Wednesday that its preliminary teardown of the new game console and calculation of its bill of materials (BOM) put the cost of components in the Xbox One at $457. Tacking on an estimated $14 for manufacturing puts the system's total estimated cost to build at $471, according to IHS, just $28 less than the $499 Microsoft and its partners are charging for the next-gen console at retail."

Source
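To line the two teardowns up side by side, here is the arithmetic from the IHS quotes above. The BOM, total build cost and MSRP figures are straight from the quotes; the $9 PS4 manufacturing cost is derived ($381 - $372), and the class is just a hypothetical container for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Teardown:
    name: str
    bom: int            # component cost in USD, per IHS
    manufacturing: int  # assembly cost in USD
    msrp: int           # launch retail price in USD

    @property
    def build_cost(self) -> int:
        return self.bom + self.manufacturing

    @property
    def headroom(self) -> int:
        """What is left of the retail price after building the box."""
        return self.msrp - self.build_cost

consoles = [
    Teardown("PS4", bom=372, manufacturing=9, msrp=399),   # $9 derived: 381 - 372
    Teardown("Xbox One", bom=457, manufacturing=14, msrp=499),
]

for c in consoles:
    share = c.headroom / c.msrp * 100
    print(f"{c.name}: build ${c.build_cost}, headroom ${c.headroom} ({share:.1f}% of MSRP)")
```

Either way, that headroom has to cover shipping, retailer margin, marketing and R&D, which is why both quotes still describe the consoles as sold at a loss at launch.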

Summary

Taking all of that information into account, to this date not a single person online, even those with vastly superior technical knowledge to yours and extensive research behind them, has come up with a better PS4 design than Sony did. Knowing the BOM of PS4, it's literally impossible to have made a more powerful console at that price. Not surprising, considering hundreds of engineers worked on the design with the top firms in the semiconductor field for years prior to PS4's launch. You think you are smarter than even 1 of those people?

MS could have made a more powerful console than Xbox One but they decided to prioritize other features.

Technically speaking, there were very few choices of a superior GPU for Sony/MS around mid-2013 when they would have needed to finalize their design at the latest. HD7970M/8970M was actually faster than Nvidia's 680MX or 770M.

Sony/MS would have needed to get a deal of a lifetime on the 780M to be able to fit that much horsepower in a TDP limited/constrained console. Do you care to take a guess how much a 780M style binned GPU cost in the summer of 2013?

Also... MS current generation was a dissaster thanks to AMD.stupidity. Maybe they will leave permanently the console division after the failure of Xbox One.

AMD had nothing to do with MS's decisions, such as prioritizing Kinect over GDDR5 and a faster GPU, which forced MS to compromise on the GPU and go with weaker embedded RAM and DDR3. MS could have easily incorporated an APU at least as good as PS4's had they ditched Kinect from the start.

AMD had nothing to do with MS's decisions on an always-on connection to play games, non-upgradeable internal HDD, blocking used games, no rechargeable lithium batteries in the controller, external PSU, VCR styling, $499 MSRP with useless Kinect, etc.

MS's failure with Xbox 1 is 100% on MS, no one else. AMD simply provided what it was contracted to provide, but it's ultimately MS that determines what their priorities are and what they are willing to pay for a given level of CPU+GPU performance.

Sony wants to reduce.costs and they might move to 12 ARM core or a 8 Atom core chip in order to prepare the next generation.

Once again showing that you have no idea what you are talking about. Switching to a new CPU architecture mid-cycle in the console generation breaks BC on existing consoles and it breaks future x86 BC on PS4/XB2. The fact that you keep posting this nonsense tells me you are either 15 or younger or just trolling.

Despite AMD did well here (selling millions to get some millions dollars), still their efforts weren't enough. Sony will move from AMD to find better prices. Intel can easily sells their Atoms at subsided prices.

Right, like Intel offered superior processors to MS/Sony in 2013....oh wait they didn't. Why is that? Because Intel aims for >60% gross margins. They have 0 interest in consoles and their budget i3s aren't a good fit for a console that allocates 1-2 cores for the OS and background functions alone.

And if AMD dies sooner than expecting, Nintendo will have an horrible time since they won't have a real sucessor to.counter Ps4 and Xbox One. If not, Nintendo must know that AMD will die sooner than expecting, so is better to prepare the replacement of that short lived console.

Last quarter AMD had $829M in Cash & Equivalents, which is higher than in any of the 4 consecutive quarters preceding Q2 2015. In fact, as of Q2 2014, AMD only had $503M of C&E.

https://www.google.com/finance?q=NASDAQ:AMD&fstype=ii&ei=YdUJVsCWN4XHmAGh4rOABQ

Chances are, AMD is not what's going to make or break the NX, but Nintendo's strategies and priorities for the new NX eco-system.

Again, even if AMD were to fail (something I've heard for 10+ years :sneaky:), there are certainly contracts in place that will allow Sony, MS and Nintendo to fabricate the chips directly at TSMC, GloFo, Samsung, etc. If AMD were to fail, it would mean it has breached its own contract by being unable to provide the product per the Supply Agreement.

You honestly think the lawyers at Sony, MS and Nintendo are 15-year-old kids who don't plan for contingencies in their contracts? :hmm:

In few words, AMD is dooming the gaming market hard.

More clueless drivel. Your reputation on AT is already 0, but keep digging into the negatives with 0 facts.

"In an interview with Eurogamer, co-founder of CD Projekt Red, Marcin Iwinski, explained to them...

If the consoles are not involved there is no Witcher 3 as it is, we can lay it out that simply. We just cannot afford it, because consoles allow us to go higher in terms of the possible or achievable sales; have a higher budget for the game, and invest it all into developing this huge, gigantic world."

http://www.cinemablend.com/games/Witcher-3-Wouldn-t-Exist-Consoles-72064.html

My recommendation for you is to start learning to back up your arguments with data/facts/some support; otherwise, it's just baseless opinion that sounds like childish vitriol against AMD (or insert any other firm you decide to bash, like your ludicrous arguments of how Intel's APUs will soon crush AMD/NV discrete graphics...ya sure!).