It's certainly perfectly in character for Raja, eh?

While 1000x seems outrageous, the math behind it makes it look a tad more feasible, though I doubt Intel will manage it by 2027:

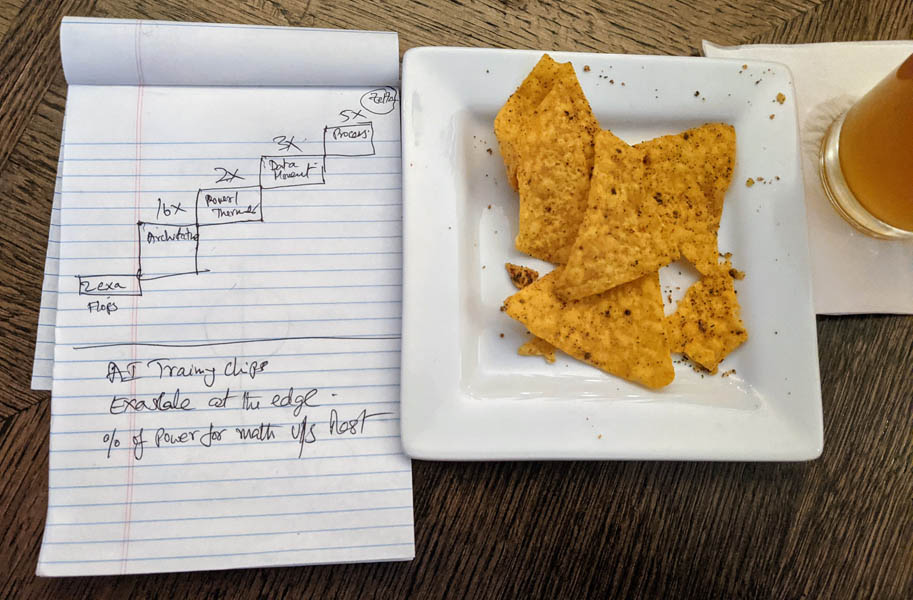

Current Aurora (>= 2 EFLOPS) x 16 (architecture) x 2 (power/thermals) x 3 (data movement) x 5 (process nodes)
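To sanity-check that chain, here is a quick sketch that just multiplies the factors as quoted above. The variable and function names are mine, and I'm assuming the "1000x" is meant relative to 1 EFLOPS (i.e. the target is roughly 1 ZFLOPS = 1000 EFLOPS); that's my reading, not something the slide spells out.

```python
from math import prod

# Baseline and multipliers exactly as quoted in the post.
BASELINE_EFLOPS = 2  # "Current Aurora >= 2 EFLOPS"
FACTORS = {
    "architecture": 16,
    "power/thermals": 2,
    "data movement": 3,
    "process nodes": 5,
}

def combined_factor(factors=FACTORS):
    """Product of all improvement multipliers."""
    return prod(factors.values())

def projected_eflops(baseline=BASELINE_EFLOPS, factors=FACTORS):
    """Baseline performance scaled by the combined multiplier."""
    return baseline * combined_factor(factors)

if __name__ == "__main__":
    print(f"combined factor: {combined_factor()}x")
    print(f"projected: {projected_eflops()} EFLOPS")
    # Assumption: 1000 EFLOPS (1 ZFLOPS) is the target being compared against.
    print(f"share of 1000 EFLOPS: {projected_eflops() / 1000:.0%}")
```

So the four factors multiply out to 480x, which on top of a 2 EFLOPS baseline lands just shy of the 1000 EFLOPS mark.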

Looking at this in detail may help in imagining future developments in the server space:

- Aurora is already said to be at half the efficiency of AMD's Frontier, so that's ground Intel needs to make up anyway.

- As I wrote before, in architecture there's a lot of wiggle room in supporting and accelerating specific formats. Nvidia currently excels at INT8; both AMD and Intel will want to catch up there. By supporting full-speed double precision in MI200, AMD built in a lot of resources that still need to be put to efficient use with lower-precision formats. Packed FP32 is a first step in that direction.

- Data movement is the old story of power consumption for uncore and I/O. Because they need so much bandwidth, GPUs and compute units are essentially the worst-case scenario for I/O. I expect more and more focus on caching (see AMD's Infinity Cache) and different packaging techniques.

- Power/thermals and process nodes seem to be one thing to me. With PVC, Intel kinda cheated by using TSMC, so it's interesting that they still see room for a 6 times improvement in 6 years there. That's where I expect the deadline to slip, though Intel is behind AMD in efficiency, so for AMD it's actually "only" 3 times, which should be more feasible.

www.hpcwire.com
