NVidia doesn't need to be defended.

That's all you been doing wrt to AC for the last week, isn't it?

NVidia designs GPUs that perform well for the here and now, not 4 or 5 years down the road. That tactic has cost AMD a lot of market share.

Ya, so more evidence that you think GPUs should focus on maximizing the short-term performance, not worry about any next generation games, any next generation VRAM requirements. Again then why do you care so much about how Maxwell will perform in DX12 or its ACE functionality? It contradicts your statements that you don't think GPUs should be forward-thinking in their design.

Sure, the ACEs are now going to be very useful, but how long have they been sitting there wasted and taking up die space? Apparently for years..

This has been covered years ago -- AMD cannot afford to spend billions of dollars to redesign brand new GPU architectures like NV can given AMD's financial position and also the fact that their R&D has to finance CPUs and APUs. NV can literally funnel 90%+ of their R&D into graphics ONLY.

Therefore, AMD needed to design a GPU architecture that was flexible and forward looking when they were replacing VLIW. That's why GCN was designed from the start to be that way. When HD7970 launched, all of that was covered in great detail. Back then I still remember you had GTX580 SLI and you upgraded to GTX770 4GB SLI. In the same period, HD7970 CF destroyed 580s and kept up with 770s but NV had to spend a lot of $ on Kepler. Then NV moved to Maxwell and you got 970 SLI and then 980SLI but AMD simply enlarged HD7970 with key changes into R9 290X. Right now Fury X is just an enlarged HD7970 more or less and in 5 years NV already went through 3 separate architectures just to be ahead. If AMD had almost no debt, primarily focused on graphics and had a lot more cash in the bank and could design new GPU architectures every 2 years like NV, I am sure they would. I don't think you are looking at it from a realistic point of view.

But that's why I keep asking, why do

you in particular care about DX12 and AC? It's not as if you'll buy an AMD GPU and it's not as if you won't upgrade to 8GB+ HBM2 Pascal cards when they are out. Therefore, for you specifically, I am not seeing how it even matters and yet you seem to have a lot of interest in defending Maxwell's AC implementation, much like to this day you defend Fermi's and Kepler's poor DirectCompute performance. That's why it somewhat comes off like PR damage control for NV or something along those lines. Since you will have upgraded your 980s to Pascal anyway, who cares if 980 hypothetically loses to a 390X/Fury in DX12? Doesn't matter to you.

AMD's long term strategy was brilliant in many ways, but it cost them dearly as well.

Even when AMD had HD4000-7000 series and had massive leads in nearly every metric vs. NV, AMD's GPU division was hardly gaining market share, and in rare cases where they did market share (HD5850/5870 6 months period), it was a loss leader strategy long term with low prices and frankly by the end of the Fermi generation NV gained market share. In other words, NONE of AMD's previous price/performance strategies worked to make $. Having 50-60% market share and making $0 or losing $ is akin to having 50-60% of "empty market share." In business terms, that's basically worthless market share. It's like Android having almost 90% market share worldwide by Apple makes 90% of the profits.

Agreed, although I disagree with you about GW. You severely overestimate the impact of GW on games. Time and time again reality has shown us that it simply does not matter.

No, time and time again when checking many reviews, it has been shown that the most poorly optimized and broken PC games released in the last 2 years have been GW titles.

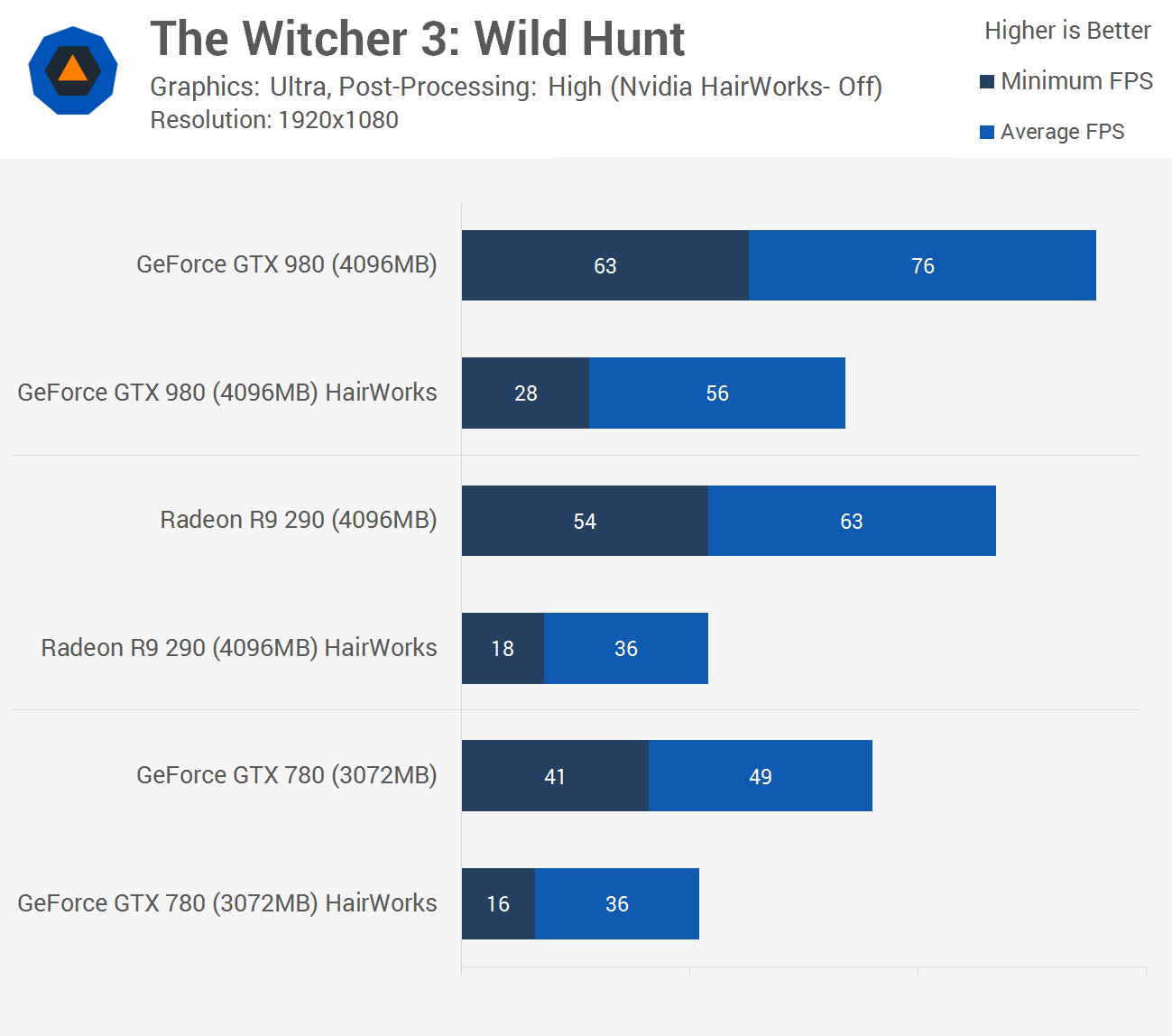

HardOCP recently tested the Witcher 3 and the Radeons performed very well.

The performance penalty for enabling hairworks was very close between them even..

That's BS, especially now that HardOCP has shown its true face. We have known for a fact that Hairworks has a bigger impact on AMD's cards for 3 reasons:

1) AMD implemented an optimization in the drivers to vary the tessellation factor since the performance hit was much greater on AMD's hardware that cannot handle excessive tessellation factors;

2) Actual user experience. I trust that far more than any review HardOCP does.

3) 3rd party reviews from sites other than HardOCP. Why do you think so many sites turned off HairWorks in the TW3? If the performance hit was the same, then it wouldn't be unfair to test with HW on. The reason some sites turned it off is because it unfairly penalized AMD's cards by NV having excessive tessellation full well knowing that AMD's cards suffer at high tess. factors.

Good thing there are objective professional sites we can rely upon to tell us the truth:

"With HairWorks disabled the minimum frame rate of the R9 290 is

3x greater, while the GTX 780 saw a 2.5x increase and the GTX 980 a 2.2x increase. The average frame rate of the GTX 980 was boosted by 20fps, that's 36% more performance. The GTX 780 saw a 36% increase in average frame rate which was much needed going from just 36fps at 1080p to a much smoother 49fps. The

R9 290X enjoyed a massive 75% performance jump with HairWorks disabled, making it 17% slower than the GTX 980 -- it all started to make sense then. We should reiterate that besides disabling HairWorks, all other settings were left at the Ultra preset.

The issue with HairWorks is the minimum frame rate dips."

TressFX seems far more efficient than HairWorks as well (or alternatively it doesn't use worthless tessellation factors to kill performance).

"With TressFX disabled the R9 290's minimum frame is 1.5x greater while the GTX 780 and GTX 980 saw a 1.6x increase --

roughly half the impact we saw HairWorks have in The Witcher 3. We realize you can't directly compare the two but it's interesting nonetheless. The average frame rate of the R9 290X was 49% faster with TressFX disabled, that is certainly a significant performance gain, but not quite the 75% gap we saw when disabling HairWorks on The Witcher."

http://www.techspot.com/review/1006-the-witcher-3-benchmarks/page6.html

You and all your AMD kind said who need GPU physx if it can be done on CPU..

Considering I've owned many AMD/ATI/NV cards and currently have 2 rigs with both AMD and NV cards, and keep recommending good NV cards to gamers, you trying to labeling me as "your AMD Kind" is just another baseless post I heard every week. The difference between NV loyalists is they don't recommend an AMD card no matter what. Like you pointing out worthless benchmarks of the "only $20 more expensive" EVGA 950 card and ignoring that for barely more R9 290 stomps over it, as well as R9 280X. 950 is an overpriced turd no matter how much you defend it. 960 with a free MGS V game makes 950 irrelevant even without touching R9 200/300 cards. Funny how you didn't even mention anything about 950 being DOA on arrival due to 2GB of VRAM. Nice knowing you have no problem defending 2GB cards and think gamers flush $150-160 into the toilet though.

------------------

And yes, I am all for physics being done on the CPU if it cannot be done in a brand agnostic way. If NV released stand-alone PhysX AGEIA cards that I can buy for $100-150 and they worked even if I had AMD/NV/Intel GPU in my rig, I'd consider buying one if games looked way better with PhysX on. But instead, NV locked this feature and thus wiped out any advancements and long-term potential AGEIA had.

======

Anyway, all of this is getting off-topic.