You might want to read your own post again, you were talking about the CPU scheduling tasks for the CPU, I'm talking about the CPU scheduling tasks for the GPU (you know the only thing that's actually relevant in this case, since the GPU is the one doing the actual processing)

So no I did not say what you claimed.

The context of my post was obvious. The GPU obviously cannot schedule instructions by itself, and this entire thread is about GPU performance in DX12, so how on Earth you thought I was talking about

non 3D software is astonishing given that we have been discussing games the entire time.

And none of those things are relevant in the scenario we're discussing here. Again we're not talking about the CPU scheduling tasks for itself (in which case stuff like OoO would absolutely play a role), where talking about the CPU scheduling tasks for the GPU.

Well this post certainly explains a lot of why I am having such difficulty with you. You don't really seem to understand the fundamental basics of computing.

The CPU is the component which receives instructions from the program, and then sends them to the GPU. As such,

ALL of the CPUs attributes matter when it comes to matters of performance in 3D games.

If they were irrelevant as you suggest, then they would not affect performance. But the benchmarks don't reflect this at all. Cache size, SMT, on die memory controllers, OoO execution, IPC etcetera

ALL affect the performance of 3D games because as I said, it's the CPU that is ultimately feeding the instructions to the GPU.

In this case the only real way to mitigate the communications latency between the CPU and the GPU, is to basically avoid (or minimize) the communication altogether, which is of course why you would prefer to handle the scheduling locally on the GPU.

Latency is going to occur no matter what you do. The only thing that matters is how you deal with it.

AMD GPUs still have to contend with latency just like NVidia GPUs, since they both receive instructions from the CPU.

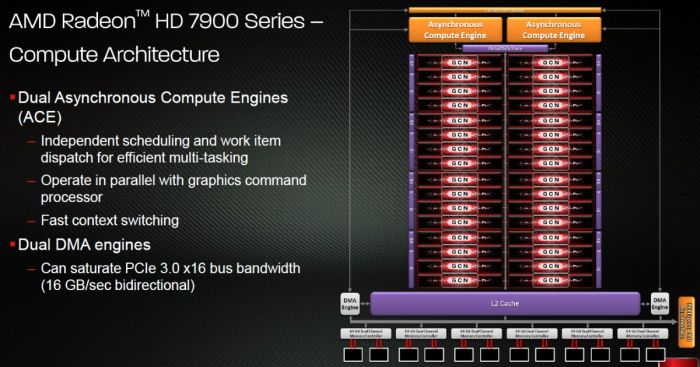

Eerm, nobody's talking about AMD doing scheduling on the CPU, we're talking about Nvidia doing scheduling on the CPU, so the nature of AMD's ACEs are quite irrelevant, in this regard.

You seem to think a lot of things are irrelevant. Might want to take a look at what this thread is about, before you dismiss AMD's ACEs are being irrelevant.

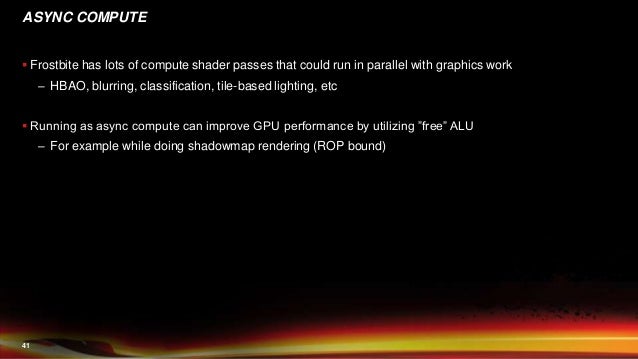

In that case you shouldn't have any problems finding me an example of a game which handles the scheduling of asynchronized compute and graphics tasks on the CPU, I'll wait.

If NVidia is to be believed, then they are programming that capability into their drivers right now and it will be available soon, hopefully before the first DX12 game ships this year..

But CPUs have been handling asynchronous compute scheduling for years now (in CUDA and OpenCL for instance). The only thing that's new is doing asynchronous compute in concurrence with rendering.