ARM challenges Intel in PCs


NTMBK

Lifer

- Nov 14, 2011


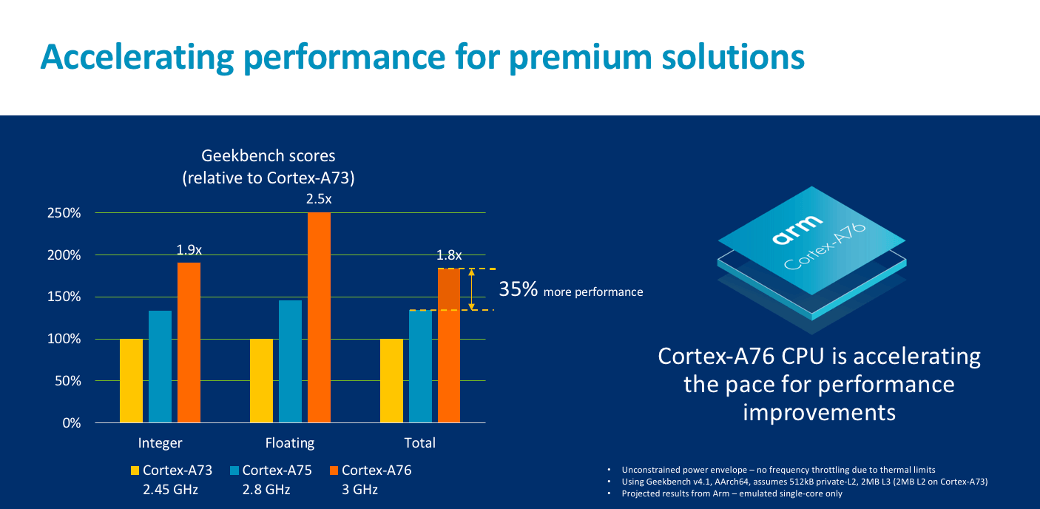

Saw this. The total bars, which I assume are a composite of the integer and float scores, have the A76 at 1.8x, but this is less than both the integer and float values, meaning it should really be between 1.9x and 2.5x.

How is this possible?

Total score includes crypto and memory.
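To see how a total can sit below both the integer and float bars, here is a minimal sketch that assumes the total is a weighted arithmetic mean of per-section ratios. The section weights and the crypto/memory ratios are hypothetical placeholders, not values read from the chart or from any benchmark's documentation.

```python
# Hypothetical per-section speedup ratios. Only the ~1.9x integer and ~2.5x
# float figures come from the thread; crypto and memory are placeholders.
ratios = {"crypto": 1.0, "integer": 1.9, "float": 2.5, "memory": 1.0}

# Hypothetical section weights (assumed, not taken from any benchmark docs).
weights = {"crypto": 0.05, "integer": 0.45, "float": 0.30, "memory": 0.20}


def composite(ratios: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted arithmetic mean of section ratios (an assumed scoring rule)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * ratios[name] for name in ratios)


total = composite(ratios, weights)
# The total lands below both the integer and float ratios because the
# lower crypto and memory sections pull the weighted mean down.
print(f"total: {total:.2f}x")
```

Any weighted mean lies between its smallest and largest inputs, so a total under both the integer and float ratios implies that some other section came in lower.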

IntelUser2000

Elite Member

- Oct 14, 2003


I'm going to have to challenge their claim that the A76 @ 3GHz will match a 7300U running at 3.5GHz. I know they want to use gcc because they think the Intel results are inflated by ICC, but real-world applications are going to be really well optimized in favor of the existing architecture, so it's also marketing BS to only use gcc.

The A75 core in SD 845 performs a little better than Goldmont Plus in Geekbench 4. It's about equal in integer, ahead in FP, and well ahead in memory. But it's a stupid benchmarking practice to include an integer test, an FP test and a separate memory test and then combine the three into one, because you'd assume memory performance is already factored into the integer and FP tests.

Goldmont Plus is on the level of a Core 2 Penryn core. Let's see, Skylake is...

About 60% faster per clock than Penryn. Add in the 16.7% clock advantage of 3.5GHz vs 3GHz, and it should be 80% faster (assuming 80% scaling with clocks, results rounded down).

ARM claims A76 @ 3GHz is 35% faster than A75 @ 2.8GHz, the latter of which is exactly the SD845.

1.8/1.35 ≈ 1.33, i.e. 33% faster in favor of the 3.5GHz 7300U.

If we take the Geekbench results between the Pentium Silver N5000 (Goldmont Plus) and the SD 845 as an accurate representation, and we take the overall result rather than the integer score (where the two chips are equivalent), it's about a 10% advantage in favor of the SD845.

1.8/1.35/1.1 ≈ 1.21, so still about 20% better.

Skylake @ 3.5GHz being 20% better is pretty much a best case scenario for the A76 @ 3GHz. I think it'll end up being 25-30% faster.
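The chain of estimates above can be written out as a small script. It only restates the post's own inputs (the 60% per-clock gap, 80% clock scaling, ARM's 35% claim and the ~10% Geekbench gap); it adds no new data, and treating Goldmont Plus as Penryn-class remains the poster's assumption.

```python
# Inputs restated from the post; none of these are new measurements.
skylake_ipc_vs_penryn = 1.60   # "about 60% faster per clock than Penryn"
clock_ratio = 3.5 / 3.0        # 7300U @ 3.5GHz vs A76 @ 3GHz (~16.7% more clock)
clock_scaling = 0.80           # assume only 80% of the extra clock turns into speed

# Skylake @ 3.5GHz relative to a Penryn-class (~Goldmont Plus) core @ 3GHz.
skylake_vs_penryn = skylake_ipc_vs_penryn * (1 + (clock_ratio - 1) * clock_scaling)
skylake_rounded = 1.8          # the post rounds ~1.81x down to 1.8x

a76_vs_a75 = 1.35              # ARM's claim: A76 @ 3GHz vs A75 @ 2.8GHz (SD845)
sd845_vs_goldmont_plus = 1.10  # overall Geekbench gap, SD845 vs Pentium Silver N5000

# 7300U lead over the A76, first from ARM's claim alone, then also crediting
# the SD845's ~10% overall lead over Goldmont Plus.
lead_arm_claim_only = skylake_rounded / a76_vs_a75
lead_with_geekbench = skylake_rounded / a76_vs_a75 / sd845_vs_goldmont_plus

print(f"Skylake vs Penryn-class: {skylake_vs_penryn:.2f}x")
print(f"7300U lead (ARM claim only): {lead_arm_claim_only:.2f}x")
print(f"7300U lead (with SD845 gap): {lead_with_geekbench:.2f}x")
```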


Nothingness

Diamond Member

- Jul 3, 2013


Oh please, not that again. It's not an ARM claim: icc makes heavy optimizations that specifically target SPEC, and that's a known fact. Even David Kanter (of RealWorldTech), who can hardly be considered an Intel basher (quite the contrary), wrote an article on the subject.

http://www.linleygroup.com/mpr/article.php?id=11708

This might not be readable by non-subscribers, so here are some extracts:

The optimization for libquantum is extremely aggressive and unrealistic on ordinary applications.

Compiling benchmark tests with 32-bit application binary interfaces (ABIs), such as x32 for x86 or ILP32 for RISC, is a gray area for optimization.

These "optimizations" gain about 20%, and they are mostly not applicable to *any* application outside of SPEC.

If you want to compare CPUs fairly, you use the same compiler and the same libraries. That's what pros do. Marketers pick icc. Choose your side.

BTW I have little doubt that if ARM had a cheating compiler they'd use it for marketing. But they don't play that silly game... yet.
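SPEC reports the geometric mean of per-benchmark ratios, so a single heavily tuned subtest moves the whole score. A minimal sketch, assuming a 12-test suite (the size of SPEC CPU2006's integer set) and a purely hypothetical 5x compiler-specific gain on one subtest; these numbers are illustrative and are not the figures from the Linley article.

```python
import math


def geomean(values: list[float]) -> float:
    """Geometric mean, computed in log space."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical suite of 12 subtests, all normalized to the same ratio.
baseline = [1.0] * 12

# Hypothetical: one subtest (think libquantum) gets a 5x compiler-specific
# speedup that does not carry over to ordinary code.
tuned = baseline.copy()
tuned[7] = 5.0

gain = geomean(tuned) / geomean(baseline) - 1
print(f"headline score gain from one tuned subtest: {gain:.1%}")
```

Because the geometric mean takes the n-th root, one subtest boosted by a factor k lifts the total by k^(1/n); here 5^(1/12) is roughly 1.14, a 14% headline gain from a single benchmark.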

IntelUser2000

Elite Member

- Oct 14, 2003


BTW I have little doubt that if ARM had a cheating compiler they'd use it for marketing. But they don't play that silly game... yet.

Whether they are cheating on the compilers or not, their claims are inaccurate. It's a marketing trick regardless.

Put properly, rather than the A76 being equal to the 7300U, I'd assume it's a generation behind, i.e. where their graph places the A75.

Nothingness

Diamond Member

- Jul 3, 2013


I did not deny that.

IntelUser2000

Elite Member

- Oct 14, 2003


I did not deny that. The rest of their claims look dubious, I agree. But choosing gcc instead of icc certainly is not dubious.

I've seen benchmarks of ICC against other compilers before. I don't remember all of them, but one was definitely Microsoft's.

In average applications, there was little difference between the two. We are talking low single-digit percentages.

In HPC applications though, ICC showed double-digit gains. I concluded that in average applications, the most commonly used compiler is capable of hanging with the best, because the low-hanging fruit has already been thoroughly picked. But with HPC, there are a whole bunch of optimization opportunities involving vector units and parallelization. This is where an optimized compiler may really shine.
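The intuition that a better vectorizer only pays off when there is a lot of vectorizable work is essentially Amdahl's law. A minimal sketch, where the vectorizable fractions and the 4x vector speedup are hypothetical numbers chosen for illustration, not measurements of ICC or any other compiler:

```python
def overall_speedup(vectorizable_fraction: float, vector_speedup: float) -> float:
    """Amdahl's law: only the vectorizable fraction of runtime gets faster."""
    serial = 1.0 - vectorizable_fraction
    return 1.0 / (serial + vectorizable_fraction / vector_speedup)


# Hypothetical workload profiles; the fractions are illustrative, not measured.
typical_app = overall_speedup(vectorizable_fraction=0.05, vector_speedup=4.0)
hpc_kernel = overall_speedup(vectorizable_fraction=0.80, vector_speedup=4.0)

print(f"typical app: {typical_app:.3f}x, HPC kernel: {hpc_kernel:.3f}x")
```

With these assumed inputs, the same 4x vector gain is worth about 4% on the mostly scalar workload but 2.5x on the loop-heavy one, which mirrors the single-digit vs. double-digit gap described above.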

Nothingness

Diamond Member

- Jul 3, 2013


You are definitely right: there's little doubt icc is better at vectorization than gcc. But your typical application isn't vectorizable, and that's why icc doesn't do any better than gcc there.

I regularly try icc on my code: sometimes icc wins, sometimes gcc wins, but the difference is under 5% in every case. OTOH, the number of bugs in icc is depressing. Giving it a faulty command line can lead to a segfault, for instance. gcc is more stable because its user base is vastly larger.

Cerb

Elite Member

- Aug 26, 2000


But does it actually work out every time, in every SoC, and can they actually reach their claims? Usually it's one or the other: they'll hit the better power target, or they'll hit the better performance target. But once they start challenging fat, high-power CPUs, they'll have to consistently meet both. And by the time they get close, Intel will have moved on as well.

I also wonder, if they can reach these SPEC results, where actual applications will fall. I.e., why is an older Atom or AMD C-50 (ew) desktop or notebook so much faster, when the other benchmark scores are allegedly so close? Are the applications just worse? If so, that shouldn't apply to Chrome on Chrome OS, for instance, doing light-duty web stuff. Yet side by side, a first-generation netbook would be preferable to a 4x or 6x A72. And by the time they catch up, the Intel Chromebooks will have moved on, even those sticking to Atom-based processors.

Load-store gives them some special sauce when it comes to long-looping little benchmarks, so I'm going to stay dubious until I see applications. Not that ARM isn't improving, but can they really meet Intel-like performance without spending a lot of space and power on memory, thus evening things out? Or is there some real special sauce (and if so, what)? Or will special-purpose SoCs actually succeed and make the problem more or less moot (if the CPU is really only 50% as fast, despite tailored benchmarks showing equality, but it has a DSP, ASIC, etc. attached that's 50x as fast for something you need to do...)?

If they are going to try to compete on general-purpose performance, and have to deal with the same space and power issues AMD and Intel do, it will be too much of an uphill battle, CPU-wise, IMO, given the platform issues they'd have to deal with too (like openly documented hardware standards that everyone needs to be using).

Arkaign

Lifer

- Oct 27, 2006


I browse the web and consume most of my media on a dual-core i5 with 8GB of DDR3 memory and integrated graphics.

I have 4-, 8- and 16-core machines, but they serve different duties.

The majority of people are on dual- and quad-core i5s. i7s/i9s and higher-core-count Ryzen processors aren't needed for day-to-day computing. Lower power, smaller form factor, less heat and portability win in the broader segment. My daily coding rig used to be a low-power, small-form-factor NUC because I could toss it in my bag when going to and from my office. Don't need hella cores for writing code. For big compiles and runtime, I export it over to my bigger rigs on demand.

This is the segment ARM is targeting, and I'd say it's the majority of desktop users.

Gamers/producers/enthusiasts who need beefy rigs are the minority. All the major codecs are hardware-accelerated, so they don't consume much CPU. Intel makes lots of scratch on i3s/i5s and below.

https://www.macrumors.com/2018/05/29/pegatron-to-make-arm-based-apple-macbook/

Apple will set the standard in this way.

Apple is in a weird spot with ARM potential. They have industry-leading mobile CPUs, but they're still well short of Ryzen/current Intel i3 and up.

I frequently notice that comparisons are usually made with extremely weak x86 SKUs to make ARM look better, but as yet I haven't seen evidence showing the potential for ARM designs to scale with higher-end x86.

Part of this I blame on Intel and Apple for the 'U' trickery. I mean, seriously:

i3-8130U, 2.2/3.4GHz, 2C/4T, 4MB L3

i5-8350U, 1.6/3.4GHz, 4C/8T, 6MB L3

i7-8550U, 1.8/4.0GHz, 4C/8T, 8MB L3

i5-7300U, 2.6/3.5GHz, 2C/4T, 3MB L3

All of these were very throttle-happy in everything I've ever seen them in, which lowers performance notably. I added the last-gen i5-7300U simply to show what an extra-pathetic joke it is as a reference point in the PR slide.

i3-8350K, 4.0GHz, 4C/4T, 8MB L3

A non-overclocked 8350K with basic air cooling will outperform the U processors with ease, and worse, there really isn't much point in choosing one 2C/4T U over another for the price difference. It just rubs me a bit the wrong way how they first created the Pentium/i3/i5/i7 product segments, and then crapped all over them with the U designation so they could sell dual cores or otherwise pretty weak stuff as 'i5' or 'i7', complete with the premium price. The first time I really noticed this was when helping someone shop for an MBA in 2014. The "i7" was a pathetic 4650U (1.7GHz, 2C/4T), handily destroyed by your basic desktop i3 of the same generation and even outperformed by the 2C/2T Pentium G3470. There was nothing magic about these U SKUs: just a different, cheap arrangement of Haswell cores, complete with an iGPU that was 40% slower.

But when you get to the price, you see the truth. The G3470 was easily had for $70ish, while they charged $400+ for the fake i7U.

Don't get me wrong, I can completely understand the need for very low-TDP CPUs in little things like the MBA or Surface. I just think they should be priced and named accordingly. E.g., the i7-4650U should have been an i3-4270M, and the i5-4260U should have been an i3-4230M, or similar. I *still* run into people who don't understand that a desktop i5-8400 or Ryzen 2600 will make a complete and utter mockery of anything with a U processor, to a level that is mind-boggling.

Rant on U models over, I wonder if we'll see a new attempt at a more aggressive ARM-based CPU that could scale up to deal with desktop usage demands. I mean, we've heard for years that ARM was coming for x86, but even AMD's derided Bulldozer/Piledriver remain targets out of reach of any ARM processor that I know of. And the previously laughable U line has introduced a variety of actual quad cores (still not matching desktop or full-scale non-U laptop designations, but it's a step at least).

I say all of this despite being convinced that fairly low-end ARM Chromebooks or the like could easily be sufficient for a huge number of users. Even more so if paired with a decent small SSD plus cloud storage, e.g. Google Apps. A Chromebook-type device with a modern ARM CPU, 1080p display, 32GB NVMe SSD, 4GB of RAM and 6h of battery life is probably doable at sub-$200, and would give a better experience than a Kaby Lake i7U paired with a spinning HDD and full-fat Windows.

But what about Apple? If I'm right that no real threat will surface from ARM against bigger x86 SKUs in the next 5-7 years, then Apple has a dilemma. Transition macOS to ARM native, and you abandon the higher-end market unless all the software works equally on the x86 and ARM macOS branches. I can't say it's impossible that they'd have a hybrid Mac lineup; going from PowerPC G* to Intel was remarkably smooth considering the depth of changes at play. Still, I don't completely trust Apple's ideas of what serious computer users need. The marketing of the iPad Pro as a computer replacement was laughably stupid, and something of an insult to their customers. iOS is just not a productivity platform. If they had a compatible branch of macOS and full multitasking and device support for a future iPad Pro generation, then they'd be cooking with fire. I swear it's like they looked at Surface RT and said "Brilliant! Let's also make a device almost completely useless for the marketed purpose". Not that the iPad Pro was a bad device, it just isn't a replacement for a PC or MBA.

As always, it will be interesting to follow.

Arkaign

Lifer

- Oct 27, 2006


Well, for laptops I prefer M-class CPUs and not U-class ones, and use a larger battery. Yes, the notebook won't be "Thin & Light", but so what?

Agreed. In a ton of cases I feel the U is a bit of a scam, to be honest. Higher price for less performance so you can shave a few mm off the measurements. Peh.

I've always felt that a computer should have enough performance to do the tasks the owner bought it for. Of course there are budget constraints to deal with, but...

moinmoin

Diamond Member

- Jun 1, 2017


Honestly, given how Apple has been expanding iOS specifically for the iPad Pro, mostly in iOS 11 (split screen, multitasking overview, drag 'n' drop), I wouldn't be surprised if an eventual ARM-based MacBook used iOS instead of macOS. It'd certainly be easier to expand a locked-down environment like iOS than to port and restrict an ecosystem like macOS.


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.