No, I didn't, and this is what you don't seem to understand. It needs those lower-end cores to bring idle power down in the first place, whereas the x86 cores do it by aggressively downclocking parts of the core and so on, so that is additional functionality built in there. You also ignore that the Intel CPUs have an integrated GPU, security processors, fixed-function hardware for Quick Sync and so on. A huge percentage of the chip is not CPU cores at all.

Maybe you need to read why big.LITTLE was implemented: the small cores and big cores are designed to work together as a whole unit. Also, again, some of you don't seem to understand that increasing clockspeed is not what PC forum people seem to think, i.e. crank the voltage up and hey presto!!
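To put rough numbers on why "just crank the voltage" is expensive: the usual first-order model for a chip's dynamic (switching) power is below, where α is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. As a simplifying assumption, if voltage has to rise roughly in proportion to frequency near the top of the voltage/frequency curve, dynamic power grows roughly with the cube of clockspeed. So a 20% clock bump costs about 73% more dynamic power, before even counting leakage, which also rises with voltage.

```latex
P_{\text{dyn}} \approx \alpha \, C \, V^{2} f
\qquad \text{assuming } V \propto f: \qquad
P_{\text{dyn}} \propto f^{3}, \qquad
\frac{P_{\text{dyn}}(1.2f)}{P_{\text{dyn}}(f)} \approx 1.2^{3} \approx 1.73
```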

Then, as usual, feeding into the hype of another phone launch, you forget Apple is using a cutting-edge TSMC 7nm node, while Intel is still stuck on 14nm.

Wide cores have existed for years. In Russia they used the very wide Elbrus design, which runs at lower clockspeeds. Intel hired many of the people involved with it, so they certainly have looked at very wide designs too.

Another example is Jaguar: Jaguar's IPC is not massively worse than Piledriver's, but it obviously cannot scale as high in clockspeed. Pushing clockspeed up on its own costs power and transistors. Look at Vega: AMD themselves said a huge percentage of the transistor budget went towards enabling higher clockspeeds:

https://www.anandtech.com/show/11717/the-amd-radeon-rx-vega-64-and-56-review/2

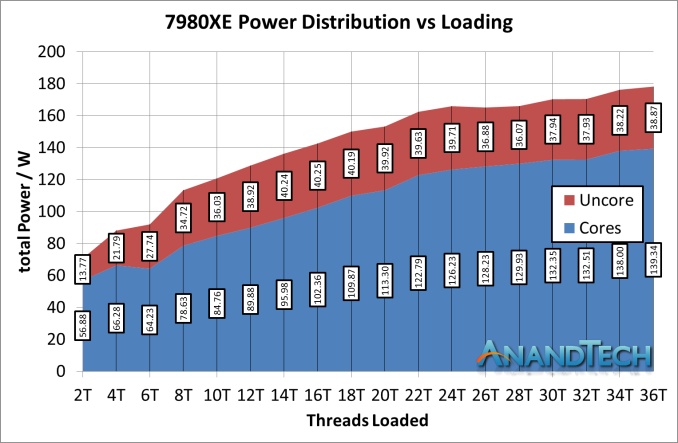

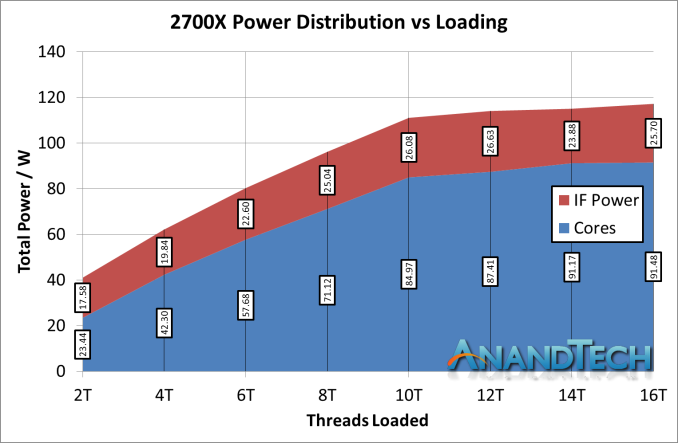

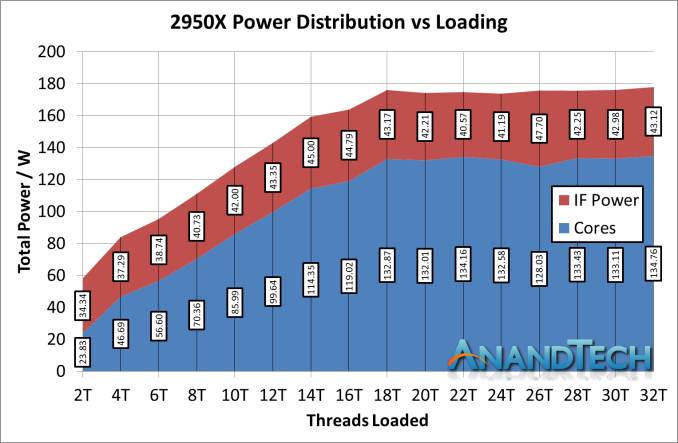

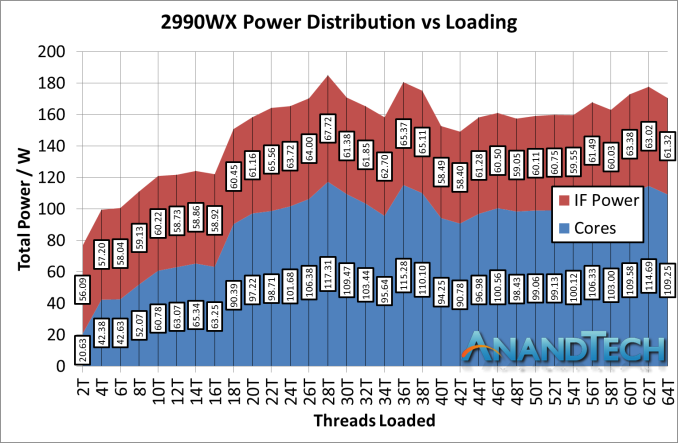

Why don't some of you actually read the articles on AnandTech about Infinity Fabric and Intel's mesh? Look at how much power is devoted to that alone to enable massive scalability, let alone the number of transistors.

Look at IBM and its high-performance cores, which are not x86: they share some of the same problems (and solutions) despite being a totally different instruction set.

Do you think AMD, Intel and IBM have spent so much money on interconnects, and devote so much of their CPUs' transistor and TDP budgets to them, because they are meaningless??

So what happens when Apple starts wanting to jack up the core count??

Then, as you hit higher clockspeeds and more cores, you need memory bandwidth: those very wide cores need feeding, otherwise utilisation will go down. Read why Intel has been trying to push things like AVX, for example.
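A back-of-the-envelope way to see the feeding problem, using purely hypothetical numbers (not measurements of any real chip): sustained memory bandwidth demand scales roughly with core count × clock × the bytes per cycle each core has to pull from DRAM after the caches have absorbed what they can. With 8 cores at 3 GHz each needing 1 byte/cycle from memory you already need about 24 GB/s, and doubling the core count doubles that, which is why more cores drags in bigger memory controllers and caches.

```latex
B \approx N_{\text{cores}} \times f \times b
\qquad\Rightarrow\qquad
8 \times 3\,\text{GHz} \times 1\,\tfrac{\text{B}}{\text{cycle}} = 24\,\text{GB/s},
\qquad
16 \times 3\,\text{GHz} \times 1\,\tfrac{\text{B}}{\text{cycle}} = 48\,\text{GB/s}
```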

Now include the power requirements of faster memory controllers that aren't running low-voltage DDR4 or DDR3. That also means more transistors and more power. You could use larger caches instead, but again that means more transistors.

Then, as others have suggested, the higher the power and TDP you go, the more you have to think about spacing key components so that cooling stays effective. Don't even look at AMD or Intel; look at IBM.

You cannot just expect a low-power, low-clockspeed core made for tablets to suddenly jump in clockspeed and gain lots more cores without chip sizes growing, and then suddenly wipe out AMD, Intel and IBM in high-performance computing.

It's way too linear and simplistic a way of looking at things. Some of you have forgotten that CPU designers have worked not only at Apple, but at IBM, AMD, Intel and so on.

They are all aware of different ways of going about things.

This is the problem nowadays: every new tech launch is a hype launch. People get overexcited and predict every other company is doomed. All for nought.