AnandTech Forums: Technology, Hardware, Software, and Deals


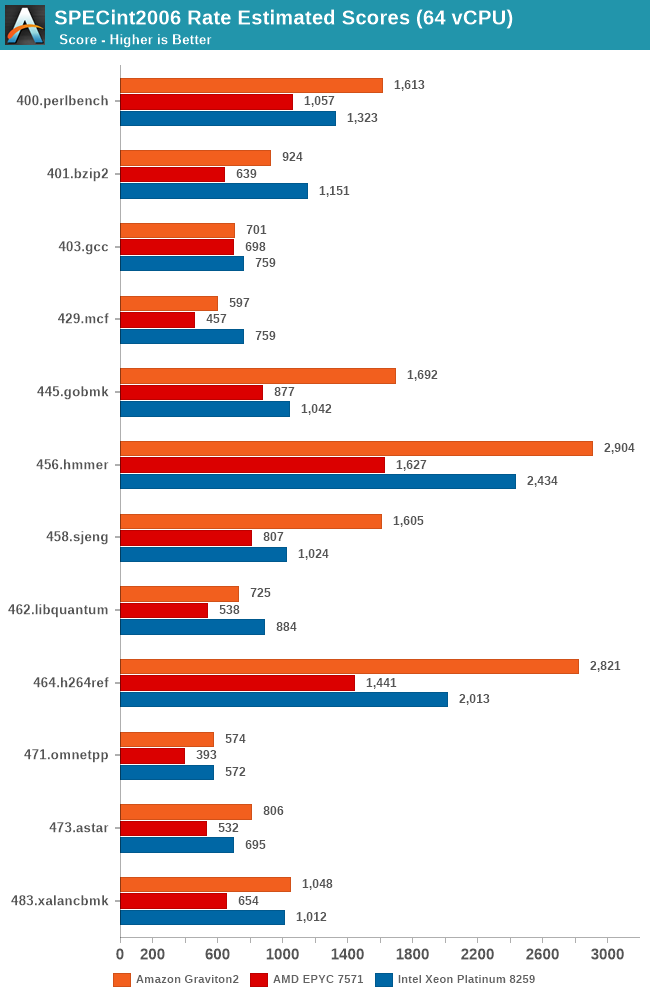

The article compares Graviton 2 against the current AMD and Intel instances on AWS (Rome instances are not yet available).

However, I grabbed the SPECint2006 numbers for Rome from a previous article to compare. That wasn't a cloud instance, so I don't know how much that would affect the Rome performance.

Actual power use for the Graviton 2 system is not available, and Amazon didn't release a TDP number. Andrei is estimating between 80 W and 110 W. The Ampere 80-core ARM CPU is 210 W at 3 GHz (it's unclear whether 3 GHz is the all-core turbo at 210 W or whether the all-core turbo is lower). Given that, I would put this CPU, with 64 cores at 2.5 GHz, at the higher end of his range, maybe higher. It depends on how much of the power use is uncore (i.e. power won't scale as expected with frequency and core count, because the uncore will be a significant portion of TDP) and on what the actual all-core frequency of the Ampere chip is.
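To put rough numbers on that reasoning, here is a back-of-the-envelope sketch. It is not a measurement and not how Andrei arrived at his range. It assumes core power scales linearly with core count and clock (real scaling with frequency is usually steeper because voltage changes too), that the uncore share stays fixed, and that the Ampere figure of 210 W at 3 GHz holds for all cores. The function name `scaled_power` and the uncore shares tried below are made up for illustration.

```python
def scaled_power(ref_watts, ref_cores, ref_ghz, cores, ghz, uncore_fraction=0.0):
    """Scale a reference chip's power to a different core count and clock.

    Assumption: the uncore share of power is fixed, and the remaining
    core power scales linearly with both core count and frequency.
    """
    if not 0.0 <= uncore_fraction <= 1.0:
        raise ValueError("uncore_fraction must be between 0 and 1")
    uncore = ref_watts * uncore_fraction   # does not scale in this model
    core = ref_watts - uncore              # scales with cores and clock
    return uncore + core * (cores / ref_cores) * (ghz / ref_ghz)


# Reference: Ampere, 80 cores, 3 GHz, 210 W (figures from the post above).
# Target: 64 cores at 2.5 GHz. The uncore shares are hypothetical.
for frac in (0.0, 0.2, 0.4):
    estimate = scaled_power(210, 80, 3.0, 64, 2.5, frac)
    print(f"uncore share {frac:.0%}: ~{estimate:.0f} W")
```

Even with zero uncore, this naive scaling lands at about 140 W, and a larger uncore share only pushes it up. The two chips are different designs, so treat this as an illustration of why the top of the range (or above it) seems plausible, not as a prediction.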
