Alright, so we've had some leaks already. I don't know if any of it has been confirmed yet, as it's pretty early, but here is what I've surmised so far (massive grain of salt, of course):

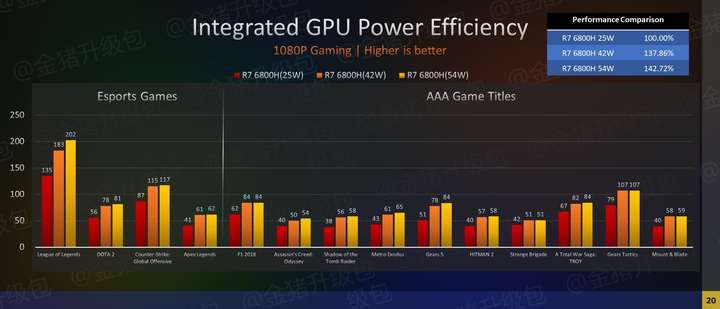

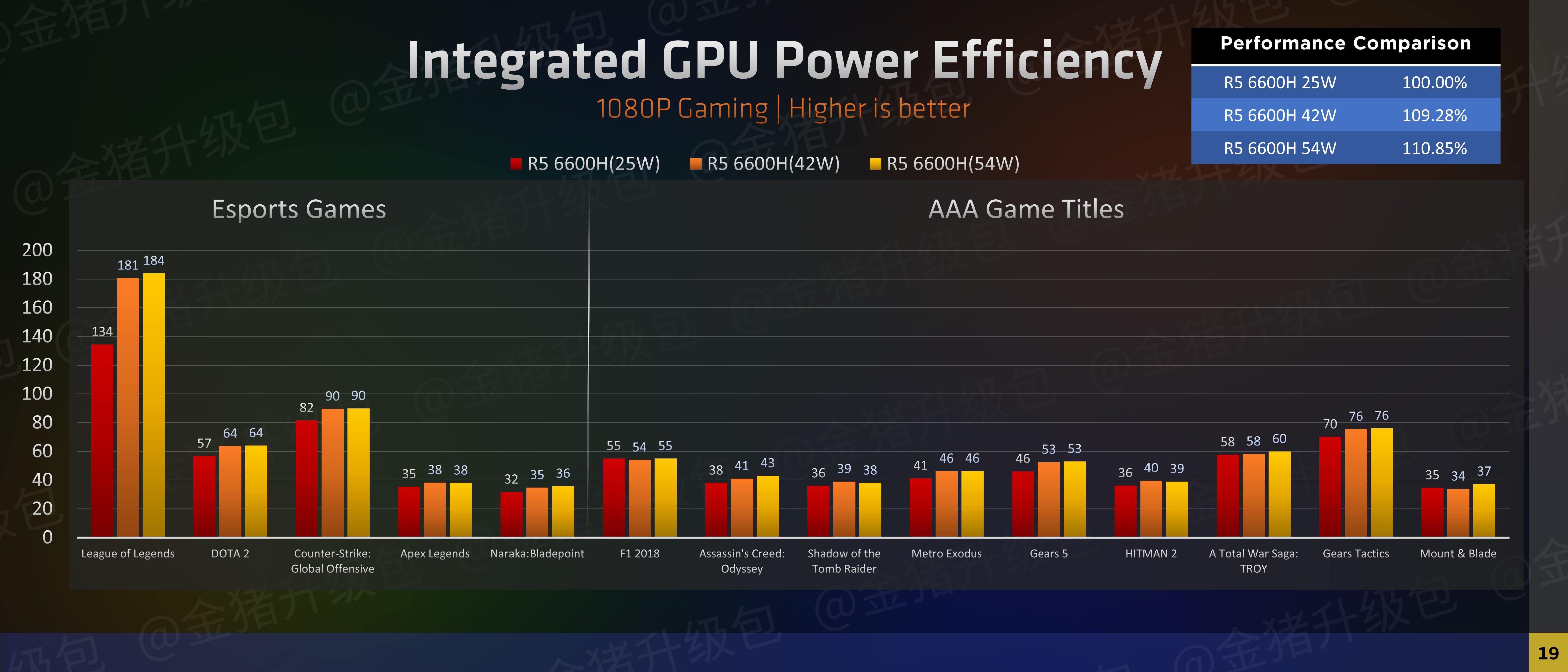

- Zen 3 "enhanced"

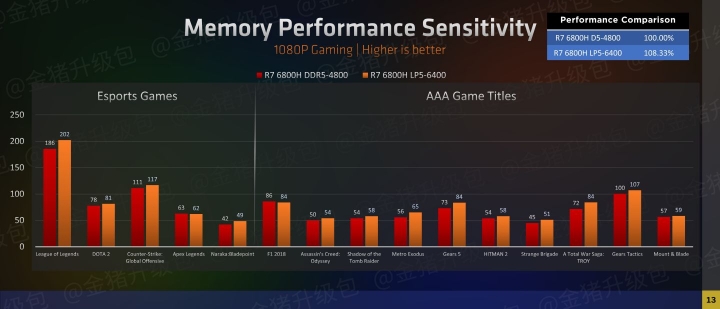

- DDR5 and LPDDR5 support

- PCIe 4.0

- 6nm

- CVML

- Navi 2/RDNA/RDNA 2

- 12 CU

- Socket FP7/AM5

- Possible USB4

- Release date: 2021 (unlikely) or 2022 (more likely)

If it turns out to have RDNA 2 and 12 CU, I could see iGPU performance potentially almost doubling over Cezanne.
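
For anyone who wants to sanity-check the "almost doubling" guess, here is a rough back-of-envelope sketch. It assumes performance scales linearly with CU count, per-CU throughput, and clock, which is an idealization (iGPUs are often limited by memory bandwidth, so real scaling could be lower). The 8 CU figure is Cezanne's top iGPU configuration; the 12 CU figure is only the rumor listed above, and the function names are made up for illustration.

```python
# Back-of-envelope iGPU scaling estimate (illustrative only, not a benchmark).
CEZANNE_CUS = 8    # Cezanne's top iGPU configuration (Vega, 8 CU)
RUMORED_CUS = 12   # rumored CU count from the leaks; unconfirmed


def estimated_speedup(per_cu_gain: float, clock_ratio: float = 1.0) -> float:
    """Idealized speedup over Cezanne.

    Assumes linear scaling with CU count, per-CU throughput, and clock.
    per_cu_gain and clock_ratio are guesses the reader supplies.
    """
    return (RUMORED_CUS / CEZANNE_CUS) * per_cu_gain * clock_ratio


def required_per_cu_gain(target_speedup: float, clock_ratio: float = 1.0) -> float:
    """Per-CU improvement needed to hit target_speedup under the same assumptions."""
    return target_speedup / ((RUMORED_CUS / CEZANNE_CUS) * clock_ratio)


if __name__ == "__main__":
    print(f"CU count alone: {estimated_speedup(1.0):.2f}x")            # 1.50x
    print(f"Per-CU gain needed for 2x: {required_per_cu_gain(2.0):.2f}x")  # 1.33x
```

So the extra CUs alone would only account for about 1.5x; the rest would have to come from the architecture change, clocks, and the faster memory.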

If I've made any mistakes or gotten anything wrong, please let me know. I'd also love to hear more knowledgeable people weigh in on their expectations.
