News AMD previews Ryzen 3rd generation at CES

The numbers in the demo were system draw. Both systems have the same graphics cards, the same RAM, and as close to the same motherboard as they could get. You seem to be upset that they are doing well, which is puzzling to me.

My point was that a small difference in CB (so it's not 5% but 10+%) still makes a big difference in UserBenchmark; that's what I was responding to.

I never said that the 9900K was being gimped, but around its release we have seen how huge a difference the motherboard makes when it comes to power draw (and, to a lesser degree, to performance).

Where is your proof that they selected the best possible combo?

Anecdotal, but still: properly configured at 4.7 GHz running Cinebench, the 9900K uses 131 W (158 W at stock).

CINEBENCH R15.038: 131 W @ 1.12 V (158 W @ 1.216 V stock)

https://www.computerbase.de/forum/threads/9900k-undervolt.1832939/

tamz_msc

Diamond Member

- Jan 5, 2017

- 3,865

- 3,730

- 136

These are the details regarding the test configuration.

Where is your proof that they selected the best possible combo?

The 9900K is running in a default "out of the box" setup. It's debatable whether this is the best possible combination, though I fail to see what extra one can add to this setup in order to make it look better wrt Cinebench scores.

Testing performed AMD CES 2019 Keynote. In Cinebench R15 nT, the 3rd Gen AMD Ryzen Desktop engineering sample processor achieved a score of 2057, better than the Intel Core i9-9900K score of 2040. During testing, system wall power was measured at 134W for the AMD system and 191W for the Intel system; for a difference of (191-134)/191=.298 or 30% lower power consumption.

System configurations: AMD engineering sample silicon, Noctua NH-D15S thermal solution, AMD reference motherboard, 16GB (2x8) DDR4-2666 MHz memory, 512GB Samsung 850 PRO SSD, AMD Radeon RX Vega 64 GPU, graphics driver 18.30.19.01 (Adrenalin 18.9.3), Microsoft Windows 10 Pro (1809); Intel i9 9900K, Noctua NH-D15S thermal solution, Gigabyte Z390 Aorus, 16GB (2x8) DDR4-2666 MHz memory, 512GB Samsung 850 PRO SSD, AMD Radeon RX Vega 64 GPU, graphics driver 18.30.19.01 (Adrenalin 18.9.3), Microsoft Windows 10 Pro (1809).
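For anyone who wants to check the footnote's arithmetic, here is a minimal sketch in Python. The scores and wall-power figures come straight from the quoted AMD text; the function names and the points-per-watt metric are my own illustration, not something AMD published. Keep in mind these are whole-system wall numbers, so they say nothing precise about CPU-only power.

```python
# Figures quoted from AMD's CES 2019 keynote footnote (whole-system wall power).
AMD_SCORE, AMD_WALL_W = 2057, 134
INTEL_SCORE, INTEL_WALL_W = 2040, 191


def relative_reduction(baseline, value):
    """Fraction by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline


def points_per_watt(score, wall_watts):
    """Cinebench points per watt of wall power (illustrative metric, not AMD's)."""
    return score / wall_watts


power_saving = relative_reduction(INTEL_WALL_W, AMD_WALL_W)
amd_eff = points_per_watt(AMD_SCORE, AMD_WALL_W)
intel_eff = points_per_watt(INTEL_SCORE, INTEL_WALL_W)
eff_ratio = amd_eff / intel_eff

print(f"wall power reduction: {power_saving:.1%}")
print(f"AMD ES: {amd_eff:.2f} pts/W, 9900K system: {intel_eff:.2f} pts/W")
print(f"efficiency ratio: {eff_ratio:.2f}x")
```

The reduction works out to the same .298 the footnote states. The points-per-watt ratio is only as meaningful as the assumption that the rest of each system (GPU, board, drives) idles at roughly the same power.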

Atari2600

Golden Member

- Nov 22, 2016

- 1,409

- 1,655

- 136

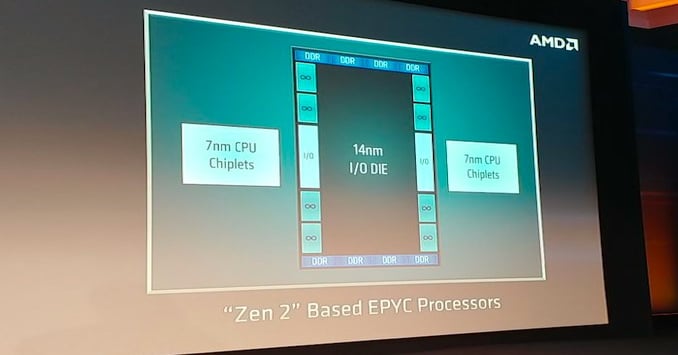

We are comparing a new node to a node that made multiple iterations with improvement in power and efficiency. And calling Zen2 a new architecture ... improved architecture yeah, but "new" :/

How can you say Zen2 is not a new architecture when its uncore is the biggest departure from convention in over a decade?

DrMrLordX

Lifer

- Apr 27, 2000

- 23,226

- 13,306

- 136

The 9900k is pushed well past the ragged edge of efficiency, and has a silly TDP as a result.

Total system power was 175W, which isn't too off-the-wall. Until a few days ago, that was a pretty reasonable power draw for what you got. Then AMD showed up and matched it with a 75W system power draw.

I'm excited to see gaming performance on Zen2, that's really the only arena that's still a big unknown at this point.

I would rather see AVX2 performance.

There is a reason why AMD chose this configuration for the demo: performance is more predictable, with fewer worries about threads jumping chiplets.

Jumping chiplets? The demo chip only has one chiplet. We don't even know if each chiplet has two CCXs per chiplet connected by internal IF like on Zen/Zen+ or if it's 8c monolithic.

It's debatable whether this is the best possible combination, though I fail to see what extra one can add to this setup in order to make it look better wrt Cinebench scores.

They could always hire Principled Technologies to set everything up and run the benchmarks.

They could always hire Principled Technologies to set everything up and run the benchmarks.

Let's hope Intel does not add a "disable half the cores" switch and then call it "gaming mode". So no one can take advantage of that.

Speaking of that, I'm pretty sure AMD still has not fixed that, man is a damn string.

Jumping chiplets? The demo chip only has one chiplet. We don't even know if each chiplet has two CCXs per chiplet connected by internal IF like on Zen/Zen+ or if it's 8c monolithic.

The post was under the assumption that AMD has a configuration of 1x IO die and 2x CPU chiplets for 16c. If they have a 16c CPU, the reason AMD chose this configuration for the test is that this one in particular (the 8c, single-chiplet version), while being a mid-range part, would have been the best option to compete against the 9900K. The three main reasons are, as I pointed out: without a second chiplet all work stays on one die (disregarding the IO die), it will use less power (one of the things they wanted to show off), and it will clock higher. The last is the dirty secret: AMD might have a 9900K killer in games with this 8c/16t single-chiplet configuration, but any two-chiplet configuration, including the 16c/32t version, won't be as competitive. It might be more competitive than, let's say, a 2700X is now, but the +4c turbos aren't going to be as high as on the retail version of the demoed CPU. Therefore there would still be a compromise (a smaller one, and even if it were as large a compromise I would be more than fine with it, but it's still there).

mopardude87

Diamond Member

- Oct 22, 2018

- 3,348

- 1,576

- 96

Let's hope Intel does not add a "disable half the cores" switch and then call it "gaming mode". So no one can take advantage of that.

I wonder if that is why we have an i7 9700k. I kept hearing about the Ryzen gaming mode, and I wondered whether that situation carries over to the i7 8600k as well. Some games run on the HT threads, which perhaps causes inconsistent performance, while on an i7 9700k performance would be more consistent? I found one review showing better frame times on the i7 9700k over the i7 8600k, but I haven't found anything too concrete to back up this theory of better gaming performance on an 8c over a 6c/12t chip.

ZipSpeed

Golden Member

- Aug 13, 2007

- 1,302

- 170

- 106

I as well. I'm long overdue for an upgrade, and I've waited this long. 12-core minimum for my next CPU, though I would prefer a 16-core since I do distributed computing.

All I know is that I want to see a 12 core AM4 part by this year

itsmydamnation

Diamond Member

- Feb 6, 2011

- 3,139

- 4,010

- 136

My point was that a small difference in CB (so it's not 5% but 10+%) still makes a big difference in UserBenchmark; that's what I was responding to.

I never said that the 9900K was being gimped, but around its release we have seen how huge a difference the motherboard makes when it comes to power draw (and, to a lesser degree, to performance).

Where is your proof that they selected the best possible combo?

Anecdotal, but still: properly configured at 4.7 GHz running Cinebench, the 9900K uses 131 W (158 W at stock).

CINEBENCH R15.038: 131 W @ 1.12 V (158 W @ 1.216 V stock)

https://www.computerbase.de/forum/threads/9900k-undervolt.1832939/

You're the one claiming deception, so where is your proof?

If you need to go and change stock settings on a motherboard just to get a 9900K to run correctly, that's Intel's fault.

http://ir.amd.com/news-releases/new...eo-dr-lisa-su-reveals-coming-high-performance

Testing performed AMD CES 2019 Keynote. In Cinebench R15 nT, the 3rd Gen AMD Ryzen Desktop engineering sample processor achieved a score of 2057, better than the Intel Core i9-9900K score of 2040. During testing, system wall power was measured at 134W for the AMD system and 191W for the Intel system; for a difference of (191-134)/191=.298 or 30% lower power consumption.

System configurations: AMD engineering sample silicon, Noctua NH-D15S thermal solution, AMD reference motherboard, 16GB (2x8) DDR4-2666 MHz memory, 512GB Samsung 850 PRO SSD, AMD Radeon RX Vega 64 GPU, graphics driver 18.30.19.01 (Adrenalin 18.9.3), Microsoft Windows 10 Pro (1809); Intel i9 9900K, Noctua NH-D15S thermal solution, Gigabyte Z390 Aorus, 16GB (2x8) DDR4-2666 MHz memory, 512GB Samsung 850 PRO SSD, AMD Radeon RX Vega 64 GPU, graphics driver 18.30.19.01 (Adrenalin 18.9.3), Microsoft Windows 10 Pro (1809).

PotatoWithEarsOnSide

Senior member

- Feb 23, 2017

- 664

- 701

- 106

Intel out of the box settings are entirely random based upon which motherboard vendor you buy.

These are the details regarding the test configuration.

The 9900K is running in a default "out of the box" setup. It's debatable whether this is the best possible combination, though I fail to see what extra one can add to this setup in order to make it look better wrt Cinebench scores.

Only one thing is certain; you have to go out of your way to get it to run to spec.

IMO, it was run to spec, which is 3.6GHz base, 4.7GHz ACT, 4.2GHz, 24/7 at 95w TDP.

Since cinebench 15 MT is such a short workload, the 9900K ran at 4.7GHz for the duration. Its score is consistent with what is to be expected for it at that clock.

AMD ran a fair demo from what info we have. Certainly beats the Unprincipled Thickologies testing that's for sure.

darkswordsman17

Lifer

- Mar 11, 2004

- 23,444

- 5,852

- 146

I as well. I'm long overdue for an upgrade, and I've waited this long. 12-core minimum for my next CPU, though I would prefer a 16-core since I do distributed computing.

I don't have an express need for it, but I'd like one because I do occasional rendering (CAD type stuff) and handbrake encodes and video editing, and would like to be able to do more heavy multi-tasking. I'm still on Bulldozer, so there's plenty of options that would be a big upgrade, but I've held off this long so if I can get 16 core for a reasonable price all the better. I might end up getting an 8 core and then giving that to my nephew that wants to get into PC gaming (I want to teach him how to build his own computer, and learn some things).

- Jun 10, 2004

- 14,608

- 6,094

- 136

The 9900K scored over 2000 points.

That's a known score for 4.7GHz all-core turbo.

So that is pretty much the best-case out of the box performance.

I don't know how people can claim that the 9900K was somehow gimped (it wasn't).

ZipSpeed

Golden Member

- Aug 13, 2007

- 1,302

- 170

- 106

I don't have an express need for it, but I'd like one because I do occasional rendering (CAD type stuff) and handbrake encodes and video editing, and would like to be able to do more heavy multi-tasking. I'm still on Bulldozer, so there's plenty of options that would be a big upgrade, but I've held off this long so if I can get 16 core for a reasonable price all the better. I might end up getting an 8 core and then giving that to my nephew that wants to get into PC gaming (I want to teach him how to build his own computer, and learn some things).

I'll probably do something similar if AMD doesn't plan to release a CPU with that second chiplet initially. Upgrade my main rig at home first, and then move it to my HTPC when the 16-core is available. I got itchy to upgrade a few times, but then reality hit when I saw RAM and video card prices. But now that prices are dropping to more sane-ish levels, I don't think I'm going to be able to defer that upgrade itch again.

Markfw

Moderator Emeritus, Elite Member

- May 16, 2002

- 27,406

- 16,255

- 136

The 1950X is getting very affordable at $550, and some motherboards that support it are around $200. If you don't get the spendy RAM, it's not too bad to upgrade, it has upgrade potential, and it's faster (quad-channel RAM) than a comparable dual-channel system. I am building Ripper number 7 next week; parts are on the way.

I'll probably do something similar if AMD doesn't plan to release a CPU with that second chiplet initially. Upgrade my main rig at home first, and then move it to my HTPC when the 16-core is available. I got itchy to upgrade a few times, but then reality hit when I saw RAM and video card prices. But now that prices are dropping to more sane-ish levels, I don't think I'm going to be able to defer that upgrade itch again.

Are you using the same motherboard with all the builds?

The 1950X is getting very affordable at $550, and some motherboards that support it are around $200. If you don't get the spendy RAM, it's not too bad to upgrade, it has upgrade potential, and it's faster (quad-channel RAM) than a comparable dual-channel system. I am building Ripper number 7 next week; parts are on the way.

Markfw

Moderator Emeritus, Elite Member

- May 16, 2002

- 27,406

- 16,255

- 136

I have one cheapo ASUS that was on sale; I'm not happy with it, but it works. The 2990WX is on the MSI board with something like a 19-phase VRM (not sure of the number) and it works OK, but all the rest are X399 Taichi. I love that board!

Are you using the same motherboard with all the builds?

ZipSpeed

Golden Member

- Aug 13, 2007

- 1,302

- 170

- 106

The 1950X is getting very affordable at $550, and some motherboards that support it are around $200. If you don't get the spendy RAM, it's not too bad to upgrade, it has upgrade potential, and it's faster (quad-channel RAM) than a comparable dual-channel system. I am building Ripper number 7 next week; parts are on the way.

Unfortunately, here in Canada we don't get nearly the same deals as our southern neighbours. Amazon.ca had a pretty good deal on a 1920X for $385, but I sadly missed out on it. It has never been that price since.

Markfw

Moderator Emeritus, Elite Member

- May 16, 2002

- 27,406

- 16,255

- 136

Yeah, I got number 6, a 1950X, for $420 before Thanksgiving. It has not been close since. So I said screw it, and got the 2970WX.

Unfortunately, here in Canada we don't get nearly the same deals as our southern neighbours. Amazon.ca had a pretty good deal on a 1920X for $385, but I sadly missed out on it. It has never been that price since.

DrMrLordX

Lifer

- Apr 27, 2000

- 23,226

- 13,306

- 136

Just for fun, I tried to make my R7 1800x system run at the same total system power as the Zen2 ES demo machine.

Like the demo machine, I have an RX Vega, though mine is Vega FE. I also have some big honking fans on the HSF (NH-D15) which are not standard - Noctua industrialPPC 3000s, running full bore. This includes the stock fan for a D15S which completes the 3-fan configuration. Finally I have the stock system fans for a Rosewill Thor V2. So my system power draw is going to be a little higher just from the fans. The board is x370 Taichi, and I have a 480GB BPX NVMe SSD. I downclocked my DDR4-3333 to DDR4-2666 to match the system RAM configuration.

Underclocking my chip was kind of hard - I had to use the AMD CBS settings, which are a PITA versus the ASRock OC interface. Regardless, I ran CBR15 @ 3200 MHz and turned in a score of 1412. I measured 180W at the wall. In this power range, my PSU averages maybe 89% efficiency (EVGA P2 750W), meaning pre-loss draw was more in the ballpark of 160W. Subtracting all the extra fan power, I figure my power usage was close to the 135W for the ES demo machine.

And all I scored was a measly 1412.

Not sure if I can get any more clockspeed at that power level, but I doubt it.
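The back-of-envelope estimate above can be written out as a short Python sketch. The wall reading, the 89% PSU efficiency guess, the 135W target and the 1412 score are all from the post; the 2057 score is from AMD's keynote footnote. The variable names are mine, and the "implied fan power" is simply what the extra fans would need to draw for the 135W estimate to hold, not a measured value.

```python
WALL_W = 180.0         # measured at the wall (from the post)
PSU_EFFICIENCY = 0.89  # poster's rough estimate for the EVGA P2 750W at this load
TARGET_W = 135.0       # demo-machine figure the post compares against
SCORE_1800X = 1412     # CBR15 at 3200 MHz (from the post)
SCORE_ZEN2_ES = 2057   # from AMD's keynote footnote

# Power actually delivered to the components after PSU conversion losses.
dc_load_w = WALL_W * PSU_EFFICIENCY

# Fan draw that would be needed for the post's ~135W estimate to hold (assumption check).
implied_fan_w = dc_load_w - TARGET_W

# How far ahead the Zen2 engineering sample score is at a similar power level.
score_gap = SCORE_ZEN2_ES / SCORE_1800X - 1

print(f"post-PSU load: {dc_load_w:.1f} W")
print(f"implied extra fan power: {implied_fan_w:.1f} W")
print(f"Zen2 ES score advantage: {score_gap:.1%}")
```

One caveat: AMD's 134W figure is wall power, while the 135W estimate here is after subtracting PSU losses and fan draw, so the two systems are not being measured at quite the same point.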

AtenRa

Lifer

- Feb 2, 2009

- 14,003

- 3,362

- 136

It would be a huge upgrade for the consumer if Ryzen 3 8C 16T would give 90% (or more) of Core i9 9900K Gaming performance at 65W TDP and half the price.

piesquared

Golden Member

- Oct 16, 2006

- 1,651

- 473

- 136

It would be a huge upgrade for the consumer if Ryzen 3 8C 16T would give 90% (or more) of Core i9 9900K Gaming performance at 65W TDP and half the price.

AMD seems pretty confident about its abilities for gaming. I think they've said it was one of the focuses of the chip, so I doubt that we are looking at a 10% deficit. This is a potent little chip from what we've seen so far, and that's at 65W! That's crazy efficient, and clocks will definitely be higher than they were in this demo, which would have been LOWER than the 9900K's. This looks like a smackdown, with a wicked 7nm process and excellent scalability.

DarthKyrie

Golden Member

- Jul 11, 2016

- 1,617

- 1,395

- 146

Yep it's 14nm

The question is if this is 14LPP or IBM's HPC 14nm process.
