Learn to zen them or try to laugh. Works mostly.

Even when I log in just to activate my ignore list, the garbage still gets quoted for pages at a time.

I can't win. :thumbsdown:

AMD Polaris Thread: Radeon RX 480, RX 470 & RX 460 launching June 29th

Page 40 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

- Status

- Not open for further replies.

Suppose they have broad availability before nVidia? Does that put them ahead? It's easier to release something if you only have a 5 minute supply.

It doesn't, because AMD didn't promise broad availability by the end of the month. They mentioned mid-2016, but the fact that they were teasing Polaris months before Pascal (January-March) and vague statements like 'several months advantage' made people believe that they were in fact ahead in the FinFET transition.

Bacon1 said: If Nvidia is ahead so far, why can't they keep tiny amounts of under performing

If this is under performing, you probably consider other VGAs garbage.

overheating "premium" 1080's in stock anywhere?

Are you trolling now? ShintaiDK doesn't seem to have problems with his FE unit running the latest drivers, and there's plenty of custom models that can handle boost clocks perfectly fine at lower temps/noise. Now please keep the NVIDIA discussion out.

yea because it goes above 150w , rx480 won't.

145W typical gaming, 154W maximum according to TechPowerUp.

Interesting, what's your source on this?

hahaha, oh stop it, you

It would be a huge (probably unrealistically optimistic given the sales in the previous generation) success for AMD to get half of this part of the market vs NV.

Even if they did manage that though, I think they'd still drop overall market share.

So even if AMD has the sub-$350 market, where 85% of sales are, all for themselves they will not gain market share? How can you say that with a straight face?

Now please keep the NVIDIA discussion out.

Wow, you guys are on a roll!

Nothing points to AMD regaining market share with their mainstream/low end lineup.

Also, a card like the GTX 1070 will most likely be the single best selling card overall. Just like the GTX 970 was.

GP106, from the rumoured specs, looks to be better than Polaris 10 in the metrics. So all in all, the dream of regaining a big part of the lost market share and revenue seems to be just that, a dream.

Eh, they're pricing the 1070 too high to do that. $380+ is a long way above the $330/$280 of the 970. It's not bad perf/$ or anything but it's still too expensive to be a huge seller I'd think.

Or not maybe, we'll see.

torlen11cc

Member

If the 8-pin is designed to supply up to 150W of power, is it possible that the RX 480 will consume about 110W? It has a TDP of 150W.

If the 8-pin is designed to supply up to 150W of power, is it possible that the RX 480 will consume about 110W? It has a TDP of 150W.

Going to have to wait for reviews, that sort of thing isn't public yet - anything you read is (wild) speculation.

fingerbob69

Member

gtx 1070 uses 161w apparently...

http://www.guru3d.com/articles_pages/nvidia_geforce_gtx_1070_review,8.html

...hence the pcie + 8pin for a 150w tdp :\

Slaughterem

Member

It doesn't, because AMD didn't promise broad availability by the end of the month. They mentioned mid-2016, but the fact that they were teasing Polaris months before Pascal (January-March) and vague statements like 'several months advantage' made people believe that they were in fact ahead in the FinFET transition.

Did Nvidia promise broad availability when they released their cards? And again, we all know that the context of the several months advantage was in reference to laptops and mainstream back to school products, which you are spinning as versus the release of a FinFET product or transition.

Are you trolling now? ShintaiDK doesn't seem to have problems with his FE unit running the latest drivers, and there's plenty of custom models that can handle boost clocks perfectly fine at lower temps/noise. Now please keep the NVIDIA discussion out

And if he did, would he mention it on this forum? I think not. Please don't try to use him as a character reference for a NV product that many other people have RGA'd back due to the throttling issue - it is almost laughable.

kraatus77

Senior member

gtx 1070 uses 161w apparently...

http://www.guru3d.com/articles_pages/nvidia_geforce_gtx_1070_review,8.html

...hence the pcie + 8pin for a 150w tdp :\

Exactly, they increased the TDP of both: the 1070 to 150W from the 970's 145W, and the 1080 to 180W from the 980's 165W. So it's pretty obvious the 1060 will have at least a 120W TDP, just like the 960. Judging by the 1070/1080, it's likely to be above 120W. The 960 also had lower perf/W than the 980; usually NVIDIA's lower chips have lower perf/W. It's the opposite for AMD: their low-end chips are more perf/W efficient (compared to their high-end chips).

Everything hints at the RX 480 having at least the same perf/W as the 1060 (Pascal). I'm still not saying it's confirmed, it's just an educated guess.

kraatus77 said: exactly, they increased tdp of both 1070 to 150 from 145 of 970's, and 1080 to 180 from 165w of 980's

Yet it still draws less power according to TPU.

Slaughterem said: Did Nvidia promise broad availability when they released their cards?

No, but they still managed to launch Pascal a month before AMD's first FinFET GPUs and many people I know already own the cards.

And again we all know that the context of the several months advantage was in reference to laptops

Where is Polaris 11? Despite being the tiniest chip, no show at Computex. Yes, it should be inside Apple's designs, but there's no launch date yet.

and mainstream back to school products

You mean GeForce GTX 970/980 level of performance at lower prices? Looks like that's where the competition is going next as well.

Maybe he's saying the real-world performance difference from the bandwidth is only 5-7%? If so, he didn't state it very clearly. That's all I can think of, as 5-7% is probably in the ballpark of the real-world gain from +25% memory bandwidth.

Correct.

torlen11cc

Member

Going to have to wait for reviews, that sort of thing isn't public yet - anything you read is (wild) speculation.

But a 6-pin PCI-E connector is rated for up to 75 watts, and that's not speculation.

gtx 1070 uses 161w apparently...

http://www.guru3d.com/articles_pages/nvidia_geforce_gtx_1070_review,8.html

...hence the pcie + 8pin for a 150w tdp :\

Yes, but the RX 480 sips power from a single 6-pin PCI-E connector and not an 8-pin.

So... at max load it will consume about 100-110w.

cusideabelincoln

Diamond Member

:\

Yet it still draws less power according to TPU.

No, but they still managed to launch Pascal a month before AMD's first FinFET GPUs and many people I know already own the cards.

Where is Polaris 11? Despite being the tiniest chip, no show at Computex. Yes, it should be inside Apple's designs, but there's no launch date yet.

You mean GeForce GTX 970/980 level of performance at lower prices? Looks like that's where the competition is going next as well.

Different games draw different amounts of power.

Reviewers should be testing power draw like they test frametimes. On a per game and per microsecond basis.

But a 6-pin PCI-E connector is rated for up to 75 watts, and that's not speculation.

Yes, but the RX 480 sips power from a single 6-pin PCI-E connector and not an 8-pin.

So... at max load it will consume about 100-110w.

Yeah, but 75W PCI-E base + 75W from the additional connector is not 110W. Where are you getting that from?

Yeah, but 75W PCI-E base + 75W from the additional connector is not 110W. Where are you getting that from?

He is saying it's not going to pull the absolute max it can, it will consume a good bit less. You don't want to be pushing the power limit while stock.

http://www.guru3d.com/news-story/amd-radeon-rx-480-3dmark-11-performance-benchmark-surfaces.html

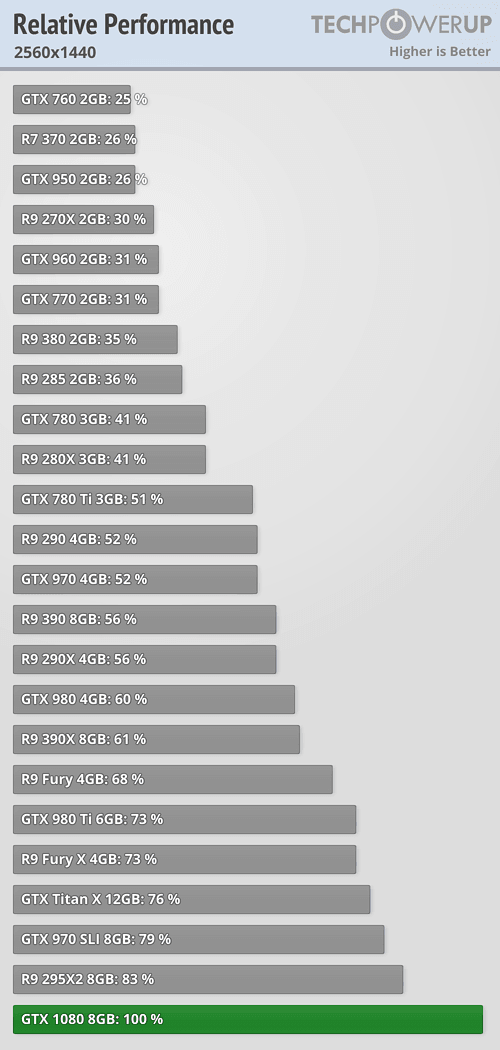

Fury/980 for $200-250 under 150w?

Nice. Now it remains to be seen how GCN4 on 14nm overclocks. Driver version seems to be old.

I'll just reply to this as I see it as the key.

Unreasoned rumour is a waste of time as far as I'm concerned. We have too many here who post the most ridiculous things to incite a nasty response.

The problem is that too many people here only believe rumors that make them (or the company they like) look good, while any rumor they don't like (or that makes "their team" look bad) gets dismissed.

No single rumor or leak is useful in a vacuum, but when you combine them with the other information you have, you can start to paint a better picture of the situation. Rumors that seem to fit with known information or reality get more weight, and if the information changes you reevaluate the rumors and see which ones conflict with reality.

As far as we know, Vega (either a Q4 2016 or Q1 2017 release depending on what leaks you believe) is still going to be on GloFo. Most of the heavy lifting on 14LPP was done by Samsung anyway; GloFo is basically tasked with implementation of an already engineered process.

Which is even more sad. GF got something that we know works well, because Samsung has some good SoCs and Apple's chip made on their node is just as good as the version made on TSMC's node, or at least they're close enough that it doesn't matter.

AMD is going to be much more healthy as a company once they're completely rid of Global Foundries.

It takes 90 to 120 days to complete a wafer on 14nm. Do you go the route of a paper launch in a high volume mainstream segment, or do you stock up inventory to meet the expected demand? And yes, it appears that they are months ahead in this segment, especially for laptops and OEM desktops, even if NV rushes out a 1060 card that will not be able to compete with the 480.

I have no doubt that they're stocking up inventory, but at the same time they had working silicon out at the beginning of the year. My guess is that the rumor about them getting a bad batch of chips had a little bit of truth to it and they lost a potential 3 month lead. Like I said though, they never jumped the gun so they don't look terrible in the press, but it's hard to believe they didn't have some problems at GF.

This is a new architecture, do you maybe think that the amount of shaders per CU is different? Maybe 1 CU is not 4 x 16 ALUs? Maybe 36 CUs does not equal 2304 shaders. Maybe the patent they received makes them have 2 x 16 ALUs with 2 x 8, 2 x 4, 2 x 2, and 2 high speed scalars. Maybe it's 2160 shaders with 72 high speed scalars capable of running 4 threads in 4 clock cycles, which would give them 2448 effective shaders?

All of that's certainly possible, but it seems less likely from what information we do have released. Almost every site I've seen has indicated 2304 shaders, so it's really unlikely that AMD made such a big change in GCN, especially on top of everything else they've done.

No one was really sure if the patent that was posted a while back was in Polaris or not and a lot of people seemed to think it was going to be Vega only, which makes some sense given their earlier roadmap indicated Vega would scale performance even better than Polaris, which does suggest that not everything made it into Polaris.
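For anyone who wants to check the shader arithmetic in the speculation quoted above, here is a quick Python sketch. It only reproduces the numbers from the thread: 36 CUs, the usual 4 x 16 lanes per CU, and the speculated 2 x 16 + 2 x 8 + 2 x 4 + 2 x 2 layout with 2 scalars per CU, each counted as 4 effective threads. The function names are made up for illustration, and none of this says anything about what Polaris actually contains.

```python
CUS = 36  # compute unit count quoted in the thread for the RX 480


def standard_gcn_shaders(cus, simds_per_cu=4, lanes_per_simd=16):
    """Shader count for the usual 4 x 16 lane layout per CU."""
    return cus * simds_per_cu * lanes_per_simd


def speculated_layout(cus, threads_per_scalar=4):
    """Shader counts for the speculated variable-width layout.

    Assumes 2x16 + 2x8 + 2x4 + 2x2 vector lanes and 2 scalar units per CU,
    exactly as guessed in the post above (not a confirmed design).
    """
    vector_lanes_per_cu = 2 * 16 + 2 * 8 + 2 * 4 + 2 * 2
    scalars_per_cu = 2
    shaders = cus * vector_lanes_per_cu
    scalars = cus * scalars_per_cu
    effective = shaders + scalars * threads_per_scalar
    return shaders, scalars, effective


if __name__ == "__main__":
    print("standard layout:", standard_gcn_shaders(CUS))
    print("speculated (shaders, scalars, effective):", speculated_layout(CUS))
```

The speculated layout works out to the 2160 / 72 / 2448 figures from the post, while the standard layout gives the 2304 that most sites are reporting.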

coercitiv

Diamond Member

Yeah, but 75W PCI-E base + 75W from the additional connector is not 110W. Where are you getting that from?

You spec your power connectors based on maximum power draw, not TDP. Keep in mind an AMD representative declined to confirm the RX 480 having a 150W TDP, as the question was in NDA territory. That means that for them the term "Power" used in the slides may not have meant TDP, but rather Max Power (card design).

Moreover, for those of you who still expect the worst, ask yourselves the following: since they are both ~150W TDP, why does the 1070 come with an 8-pin connector, and why does the 970 come with 2x 6-pin connectors?

kraatus77

Senior member

You spec your power connectors based on maximum power draw, not TDP. Keep in mind an AMD representative declined to confirm the RX 480 having a 150W TDP, as the question was in NDA territory. That means that for them the term "Power" used in the slides may not have meant TDP, but rather Max Power (card design).

Moreover, for those of you who still expect the worst, ask yourselves the following: since they are both ~150W TDP, why does the 1070 come with an 8-pin connector, and why does the 970 come with 2x 6-pin connectors?

Because both consume more than 150W.

Can I have a cookie now?

You spec your power connectors based on maximum power draw, not TDP. Keep in mind an AMD representative declined to confirm the RX 480 having a 150W TDP, as the question was in NDA territory. That means that for them the term "Power" used in the slides may not have meant TDP, but rather Max Power (card design).

Moreover, for those of you who still expect the worst, ask yourselves the following: since they are both ~150W TDP, why does the 1070 come with an 8-pin connector, and why does the 970 come with 2x 6-pin connectors?

Sure, I agree completely it's speculation. My point is that everything on this is - 110W is no more or less credible than close to 150W. They're just guesses based on very limited information, that's actually what I said.

You know, it would make me extremely happy if cards just stopped pulling any power from the slot unless they're actually slot powered. It's silly to pull 12V power from the MB connector through the MB and then through a card edge connector when you could just draw current from a connector actually designed for such a task.

because both consume more than 150w.

can i have a cookie now ?

Nope.

Single 8pin gives the illusion of less power than 2 6pin although they are equal. Guess the benefit would be it's probably cheaper as far as cost goes.

kraatus77

Senior member

Nope.

Single 8pin gives the illusion of less power than 2 6pin although they are equal. Guess the benefit would be it's probably cheaper as far as cost goes.

I meant both cards consume more power than 150W. I know what they do.

1x 8-pin = 2x 6-pin = 150W (not counting 75W from PCIe)
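Since the connector math keeps coming up, here is a small Python sketch of the in-spec power budgets being argued about. It assumes the usual ratings (75W from the slot, 75W per 6-pin, 150W per 8-pin), and it plugs in the 110W guess and the 161W guru3d figure purely as the numbers quoted in this thread, not as measurements of any retail card. The helper names are made up for illustration.

```python
# Commonly cited in-spec limits, in watts.
SLOT_W = 75        # PCIe x16 slot
SIX_PIN_W = 75     # 6-pin auxiliary connector
EIGHT_PIN_W = 150  # 8-pin auxiliary connector


def board_power_budget(six_pins=0, eight_pins=0, use_slot=True):
    """Maximum in-spec board power for a given connector layout."""
    budget = six_pins * SIX_PIN_W + eight_pins * EIGHT_PIN_W
    if use_slot:
        budget += SLOT_W
    return budget


def headroom(draw_w, budget_w):
    """Watts left between a given draw and the in-spec budget."""
    return budget_w - draw_w


if __name__ == "__main__":
    rx480 = board_power_budget(six_pins=1)       # slot + 1x 6-pin
    gtx1070 = board_power_budget(eight_pins=1)   # slot + 1x 8-pin
    gtx970 = board_power_budget(six_pins=2)      # slot + 2x 6-pin
    print("budgets:", rx480, gtx1070, gtx970)
    print("RX 480 headroom at the speculated 110W:", headroom(110, rx480))
    print("GTX 1070 headroom at the quoted 161W:", headroom(161, gtx1070))
```

The point of the sketch is just that a connector layout sets a ceiling, not a typical draw: a single 6-pin card can be anywhere under 150W, and one 8-pin gives the same ceiling as two 6-pins.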

AtenRa

Lifer

Nope.

Single 8pin gives the illusion of less power than 2 6pin although they are equal. Guess the benefit would be it's probably cheaper as far as cost goes.

I believe it's more about which PCI-e cables PSUs come with (6-pin versus 8-pin) than anything else.

Latest PSU models include 6+2 Pin PCI-e cables so both AMD and NVIDIA are able to use a single 8-Pin slot over 2x 6-pin slots.
