Again, it is recommended that you read the thread that you call "my thread", as the chart you published has already been posted. Why go through all the work? I don't see 2.8x better performance per watt either; it can't even match 2-year-old 28nm planar Maxwell.

AMD Polaris Thread: Radeon RX 480, RX 470 & RX 460 launching June 29th

Page 179 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.


- Status

- Not open for further replies.

ShintaiDK

Lifer

- Apr 22, 2012

- 20,378

- 146

- 106

What puzzles me is why 6-pin if it clearly needs more juice?

Deception, cost saving etc. The result is clear and they break PCIe specs at stock.


The same reason they used 8 pin + 6 pin for R9 290x which uses 450+ watts when not throttling.

lol, all this hype for a card that matches my 6 month old R9 390 in price and performance. Sweet!

Keep your VGA. Hawaii is still a darn good GPU, should last longer than you expect.

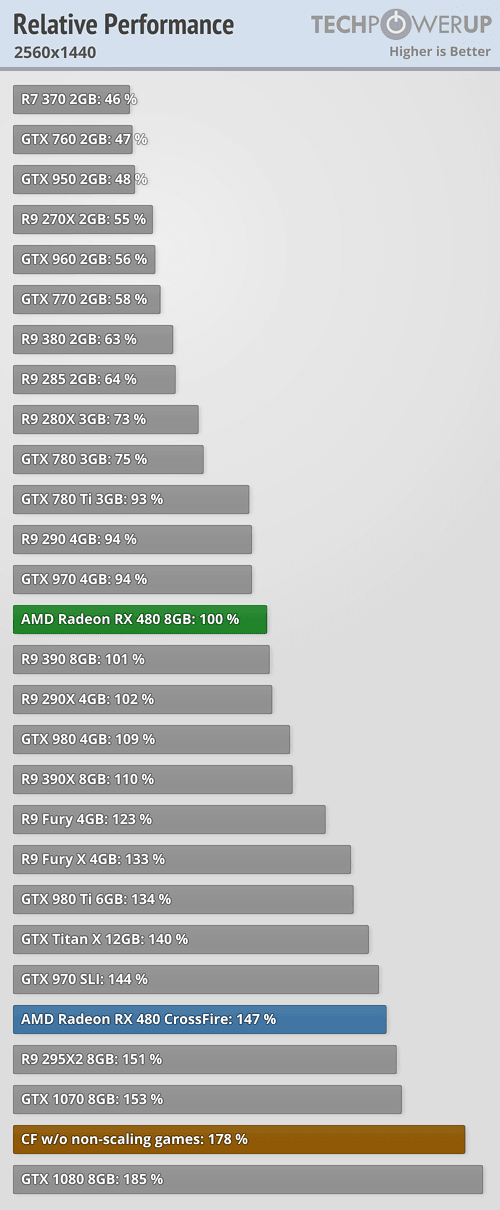

Next gen Performance/watt per TPU is awful. 167W peak power usage with 163W typical. That's a disaster because 1070 is 50-53% faster while using less power. My expectations for Vega are now at an all time low.

This means with a full node shrink and a new GCN architecture AMD didn't even match 980's performance/watt.

https://www.computerbase.de/2016-06/radeon-rx-480-test/9/
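To put rough numbers on that comparison, here is a minimal sketch. It assumes the 1070 draws no more than the RX 480's 163W typical figure (the post only says "less power") and takes the 50-53% speed advantage at face value, so the result is a lower bound. The function name and the normalization to the RX 480 are illustrative, not taken from TPU.

```python
def perf_per_watt(relative_perf, watts):
    """Performance per watt, with performance in arbitrary relative units."""
    return relative_perf / watts

RX480_TYPICAL_W = 163.0  # TPU typical figure quoted in the post above

rx480 = perf_per_watt(1.00, RX480_TYPICAL_W)

# Assumption: the 1070 is given the same 163W as the RX 480.
# The post says it uses less, so the real advantage would be larger.
gtx1070_low = perf_per_watt(1.50, RX480_TYPICAL_W)
gtx1070_high = perf_per_watt(1.53, RX480_TYPICAL_W)

advantage_low = gtx1070_low / rx480
advantage_high = gtx1070_high / rx480
print(f"1070 perf/watt advantage: at least {advantage_low:.2f}x to {advantage_high:.2f}x")
```

Any actual power figure for the 1070 below 163W pushes the ratio higher, which is why the gap looks so large to the poster.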

Very disappointing indeed, but also shows how far ahead of AMD the competition was with Maxwell in 2014. No wonder they chose to play safe with some of Pascal's architecture changes.

I believe AMD should just stick to designing APUs for now; the 1070 is way faster and consumes less power. Extremely disappointing debut for Polaris; its only saving grace is the price.

PS:

Kyle was right after all

The only saving grace is perf/$ (at least till Geforce GTX 1060/1050). It's basically a fail in other relevant metrics. Makes us question what they have in store for Vega. Polaris 10 is not exactly small, probably bigger than GP106 while using more power for similar performance.

Well, this has been another so-so launch.

I won't get too worked up on the price yet; at the equivalent of US$260 here in Canada that's not terrible, and Newegg and NCIX don't even have theirs up yet. Value is good, the cheapest GTX970 or 390 you can buy is the same price, and the 8GB 480 is a better card than either of those. Once the price reaches the MSRP the performance/$ ratio will be quite good.

Power usage is pretty disappointing if the TPU numbers are to be believed. I would love to see a breakdown of the power used by the different subsystems, i.e. the GDDR5, the memory controller, the CUs, etc. I can't imagine even AT will give anything like that, but it would be immensely interesting.

If this card had come in at 110-120W, it would be a massive success. The performance/$ would be top of the charts (by a good margin vs Pascal), the perf/watt would at least split the difference between the 980 and 1080, and the issues with the reference blower would be largely mitigated. As it stands though, I do worry about how successful it's going to be. In the desktop space it will be fine; anything resembling a decent cooler will handle 160W no problem and it's still a low power single 6-pin card. Polaris could have been a massive win for high end laptops though, and if this is any indication it's going to have a very hard time competing against GP106 in that space even if GP106 can't touch GP104's efficiency.

Interesting times ahead.


Hasn't the perf/watt got rather worse from Maxwell though? Which is not what they needed.

I really do hope the power is a problem with pushing the clock speeds too high to get the performance and the mobile versions get much better efficiency.


sze5003

Lifer

- Aug 18, 2012

- 14,320

- 683

- 126

Sure, I can spend $450, but I was hoping it would be 390X level at least, and that would hold me for a while. I like to keep my cards a long time and wanted to wait for Vega. With Nvidia support ending for their models due to new ones coming out, that's why I didn't want to go that route. Why don't you upgrade based on price? If you had $450 to spend, the 480 would never have been in your market range.

I'll just wait for an AIB 480 and go for that when I can get one. I still game at 1080p for now so it should be fine for my needs but a new monitor would be nice, oh well.

lupi

Lifer

- Apr 8, 2001

- 32,539

- 260

- 126

No. The card sucks because it isn't any better than a 2 year old 28nm gtx 970.

Other than being less expensive and able to utilize newer architecture technology, you mean?

Hasn't the perf/watt got rather worse from Maxwell though? Which is not what they needed.

I really do hope the power is a problem with pushing the clock speeds too high to get the performance and the mobile versions get much better efficiency.

GP104 will once again rule supreme at the 100W level and dictate all pricing, like GM104 and GK104 before it.

Going by previous architectures, Polaris 10 will be the highest AMD will go and it will slot into the 100W level, making it comparable to the Pitcairn that was the top chip for AMD in the 100W level.

Of course efficiency matters, but at what cost? I believe, like many, that power efficiency matters a lot when talking about the architecture/design of a card.

And Polaris is (although there are outliers) pretty bad at it.

What does this mean? Well, scaling up such an architecture to compete with the 1070/1080 and higher will be a huge problem without major design changes.

Assuming power consumption scales linearly with performance, AMD will need 290W to match the 1080 with such an architecture. So yes, it does matter when talking about the architecture that is Polaris.

When talking about the 480 in its price bucket, it is simply the best value money can buy at the current advertised prices, at least until Nvidia launches the 1060 or floods the market with 980s.
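The 290W estimate in that post follows from simple proportional scaling. A minimal sketch, assuming the baseline is the 163W typical figure quoted earlier in the thread (the poster does not say which baseline was used) and that power really does scale linearly with performance, which is itself a simplification. The function names are illustrative.

```python
def linear_power_needed(base_watts, perf_ratio):
    """Power needed to reach perf_ratio times the baseline performance,
    under the assumption that power scales linearly with performance."""
    return base_watts * perf_ratio

def implied_perf_ratio(target_watts, base_watts):
    """Performance ratio implied by a target power under the same linear assumption."""
    return target_watts / base_watts

BASE_W = 163.0    # assumed baseline: TPU typical power for the RX 480
TARGET_W = 290.0  # the poster's estimate for matching the 1080

ratio = implied_perf_ratio(TARGET_W, BASE_W)
print(f"Implied 1080 vs RX 480 performance ratio: {ratio:.2f}x")
print(f"Power needed at that ratio: {linear_power_needed(BASE_W, ratio):.0f}W")
```

With these inputs the 290W figure corresponds to the 1080 being roughly 1.78x as fast as the RX 480; a different baseline wattage would imply a different ratio.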

Who gives a frak if it's 160W or 130W? It's not exactly hitting the 250W wall.

Vega is bringing HBM2 and probably lower frequencies.

Trading cost for efficiency.

It's not going to be 300 USD but probably 400 and up.

Then you can choose.

Don't hope for a more efficient Vega arch. Lower frequencies and HBM, but it will show. And probably an AIB launch if AMD comes to its senses.

Maxwell/Pascal are just damn efficient archs, and Maxwell was just outright fantastic. Look at the Maxwell-to-Pascal efficiency increase vs. the 390X-to-480 efficiency gains.

zinfamous

No Lifer

- Jul 12, 2006

- 111,994

- 31,557

- 146

Now can I say that the 32ROPs killed the card?:sneaky:

With the new front end I was expecting this card to pull ahead of the 390X in various DX11 games at 1080p and be level in DX12 and AMDGE games. But it is losing too often despite the same shader power and almost the same bandwidth with compression.

:thumbsdown:

I don't get this. It is matching previous generation comparable card family with half the ROPs.

How is that being killed by ROPs? Seems to me that they have crammed twice the performance out of each ROP.

Do you also think that every 12MP camera is better than every 6MP camera? All "pixels" are the same, right?

Mercennarius

Senior member

- Oct 28, 2015

- 466

- 84

- 91

A 380X replacement with 390 level performance, DP 1.4, excellent DX12 performance, only needs 1 6 pin power connector, $199 starting price....and people are saying it failed already LOL.

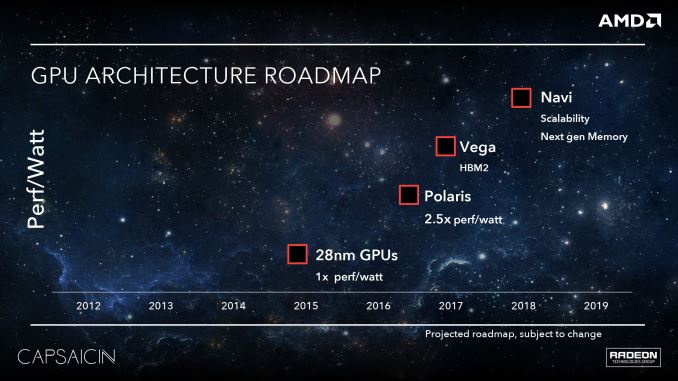

This is PR crap. Why believe it? Perhaps Vega is 2.5x vs Hawaii. Lol. According to AnandTech, RX 480 power consumption is 45% less than the 390. Whatever happened to the 2.5x perf/watt improvement?
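For reference, the quoted 45% figure can be turned into a perf/watt multiple. A minimal sketch, assuming the RX 480 and the 390 perform about the same (the thread's general consensus, not a measured value here); the function name is illustrative.

```python
def perf_per_watt_gain(perf_ratio, power_ratio):
    """Perf/watt improvement given new/old performance and new/old power ratios."""
    return perf_ratio / power_ratio

# Assumption: equal performance (ratio 1.0); power is 45% lower, so the power ratio is 0.55.
gain = perf_per_watt_gain(1.0, 1.0 - 0.45)
print(f"Perf/watt gain at equal performance: {gain:.2f}x")
```

Under those assumptions the gain works out to about 1.82x, well short of the 2.5x being asked about.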

I don't get this. It is matching previous generation comparable card family with half the ROPs.

How is that being killed by ROPs? Seems to me that they have crammed twice the performance out of each ROP.

Do you also think that every 12MP camera is better than every 6MP camera? All "pixels" are the same, right?

Run an R9 290/x, R9 390/x with 32 ROPs and you will easily see the problem with your argument. (And you can, as there are 32 ROP BIOS versions specifically made for R9 290/x, R9 390/x.)

It's quite obvious that one of the main problems is that AMD's frame buffer compression is far inferior to Nvidia's.

You can test this easily by benchmarking the various cards at different VRAM bandwidths, which the tester can set via the VRAM clock speed.

This is the exact same problem that the R9 285 faced.


AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

The problem is AMD needed an outright win and they didn't get it. Compared to the 1060, the RX 480 is going to have much higher power consumption with what will likely be about the same performance. That's not good enough when you are trying to win back customers. A 380X replacement with 390 level performance, DP 1.4, excellent DX12 performance, only needs 1 6 pin power connector, $199 starting price....and people are saying it failed already LOL.

Actually agree. I never expected it. Crazy imho. Deception, cost saving etc. The result is clear and they break PCIe specs at stock.

Obviously it's about getting to those 390X perf levels whatever the cost. Shouldn't be necessary for a 230 USD card.

Actually agree. I never expected it. Crazy imho.

Obviously it's about getting to those 390X perf levels whatever the cost. Shouldn't be necessary for a 230 USD card.

Why did you not expect it?

They did this for every single card they have released since the start of this trend with R9 290/x.

Mercennarius

Senior member

- Oct 28, 2015

- 466

- 84

- 91

The problem is AMD needed an outright win and they didn't get it. Compared to the 1060, the RX 480 is going to have much higher power consumption with what will likely be about the same performance. That's not good enough when you are trying to win back customers.

The 1060 has less VRAM, costs more, and will likely be slower (especially in DX12) even if just marginally. Not only that but the 480 released first....sounds like AMD won that battle.


SlitheryDee

Lifer

- Feb 2, 2005

- 17,252

- 19

- 81

To be honest it isn't necessarily a bad strategy, if the AIB version is the main version AMD is betting on (since it makes the AIB version look better by comparison), but if that was the case then we should be able to buy AIB versions right now instead of only reference models.

It still doesn't make sense to me though. You want to make a great impression on the release of a new product. You want it to be all it can be because there's a good chance that consumers will forever base their opinions of it on the reviews from that release. It would be smarter to simply forgo a reference version if making the AIB versions look good was your goal.

It's a fail.

Such a small chip, mainstream, should not be using that much power without any OC headroom.

NV should just release a 970/980 with 8GB and call it a day. That's how bad Polaris is.

Regression is simple. 5.8 TFlops, performing around a 390 that has 5.2 TFlops.
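The regression being described can be expressed as performance per TFLOP. A minimal sketch using the poster's own figures (5.8 and 5.2 TFLOPS) and assuming the two cards deliver roughly equal game performance, as the post states; names are illustrative.

```python
def perf_per_tflop(relative_perf, tflops):
    """Delivered performance per TFLOP of theoretical shader throughput."""
    return relative_perf / tflops

RX480_TFLOPS = 5.8   # figure given in the post
R9_390_TFLOPS = 5.2  # figure given in the post

# Assumption: equal delivered performance (1.0) for both cards.
rx480 = perf_per_tflop(1.0, RX480_TFLOPS)
r9_390 = perf_per_tflop(1.0, R9_390_TFLOPS)

ratio = rx480 / r9_390
print(f"RX 480 delivers {ratio:.2f}x the performance per TFLOP of the 390 "
      f"({(1 - ratio) * 100:.1f}% less)")
```

On those numbers the RX 480 extracts about 10% less game performance from each theoretical TFLOP, which is the regression the poster is pointing at.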

They really don't need anything more than current rumours suggest. A high-clocked 1280 SPs, 48 ROPs, 192-bit can probably match it. Fun times ahead for PC gamers.

Can't understand the regression either. Wasn't effective bandwidth close to Hawaii without compression? How can it be slower than Radeon R9 390 at 1440p, given all the improvements made by AMD?

I don't care about perf/watt itself but in how it ties into the flagship card(s) down the line. That's because AMD/NV are both limited by the die size and upper end power usage. If NV wins in perf/watt by 50%+, it means AMD will need a much larger die to keep up. This time NV already tapped out a 610mm2 GP100, which means AMD won't have any die size advantage to play with. Even if GP102 has a 450mm2 die, AMD is going to need to make up A LOT of ground in perf/watt to have any chance to be as fast as Big Pascal. I am not counting on it.

That's exactly what crisium said, and I agree. There is room for improvement with HBM2 and architecture changes, but after today's results one should not expect miracles. Will be curious to see how much silicon Vega 10 needs to match GP104.

Performance against Hawaii at 1440p falls off a cliff.

http://images.anandtech.com/graphs/graph10446/82391.png

http://images.anandtech.com/graphs/graph10446/82393.png

http://images.anandtech.com/graphs/graph10446/82395.png

http://images.anandtech.com/graphs/graph10446/82397.png

http://images.anandtech.com/graphs/graph10446/82399.png

http://images.anandtech.com/graphs/graph10446/82403.png

http://images.anandtech.com/graphs/graph10446/82409.png

256-bit + 32 ROPs? Or something else?

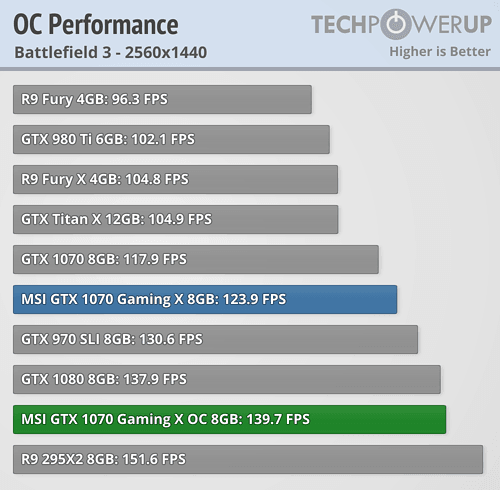

1070 is certainly a great bang for the buck as well from what it seems. But it will still be slower than 480s in crossfire.

Actually OCed Geforce GTX 1070 gets pretty darn close to stock Geforce GTX 1080 FE's performance, so it should be close. I'm not a big fan of multi GPU though, I'd opt for single GPU even if slightly slower. That MSI model was on sale for $429, so it's probably cheaper than 2x Radeon RX 480 in Crossfire as well.

The 1060 has less VRAM, costs more, and will likely be slower even if just marginally. Not only that but the 480 released first....sounds like AMD won that battle.

The people likely to be in the ~200 USD GPU market tend to have less than the top of the line Core i7 6700k @ 4.5 ghz or better.

This means that the Nvidia massive driver overhead advantage over AMD would come into effect and make the purchase of 1060 a no-brainer over 480 if they are even anywhere close to the same perf/dollar.

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

AMD needs a clear win, not just a bit better; that's the problem in my view. The 1060 has less VRAM, costs more, and will likely be slower (especially in DX12) even if just marginally. Not only that but the 480 released first....sounds like AMD won that battle.

Mercennarius

Senior member

- Oct 28, 2015

- 466

- 84

- 91

The people likely to be in the ~200 USD GPU market tend to have less than the top of the line Core i7 6700k @ 4.5 ghz or better.

This means that the Nvidia massive driver overhead advantage over AMD would come into effect and make the purchase of 1060 a no-brainer over 480 if they are even anywhere close to the same perf/dollar.

In DX12 games Nvidia has no advantage over GCN cards. And from the benchmark results so far, the 480 performs great in all the newer games. I don't see the 1060 outperforming the 480, and costing more and having less VRAM just means it lost on all fronts.

Mercennarius

Senior member

- Oct 28, 2015

- 466

- 84

- 91

AMD needs a clear win, not just a bit better; that's the problem in my view.

Better performance for less money than the NVidia equivalent (1060) is not a clear win?

Mercennarius

Senior member

- Oct 28, 2015

- 466

- 84

- 91

If the trend continues then it looks like the 480 > 1060, the 490 > 1070, and the Fury replacement will be > the 1080.

