He also mentions how the reduction in power usage from HBM will mean AMD will allocate more power towards the ASIC/GPU itself, which he says is worrying to him. This is actually a good thing, not a bad thing, since it allows AMD to make a much larger GPU and cram in more transistors. When you have enough memory bandwidth, you should use the extra power on the component that makes the product faster -- and that is the GPU. How he managed to put a negative spin on this is amazing.

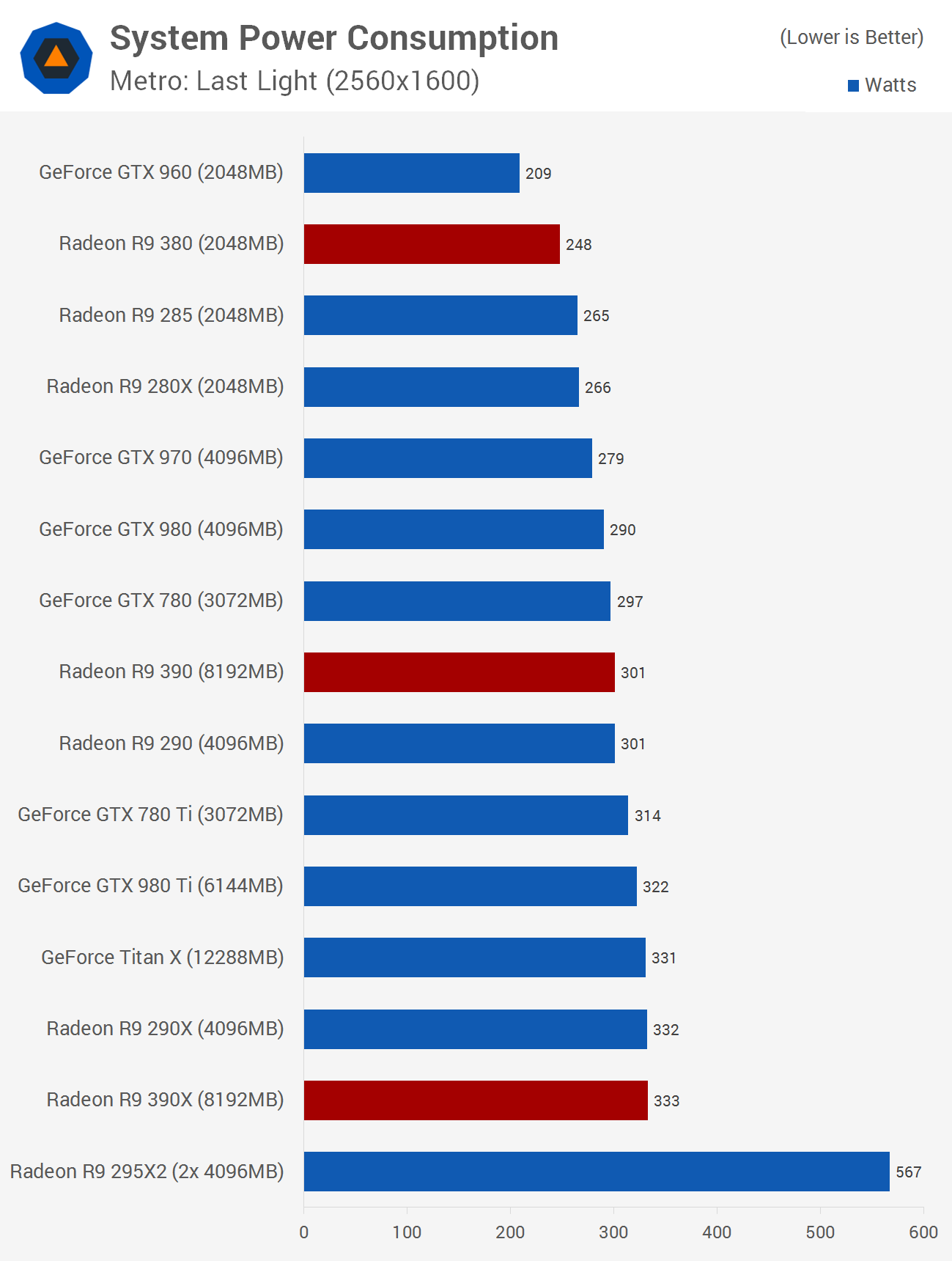

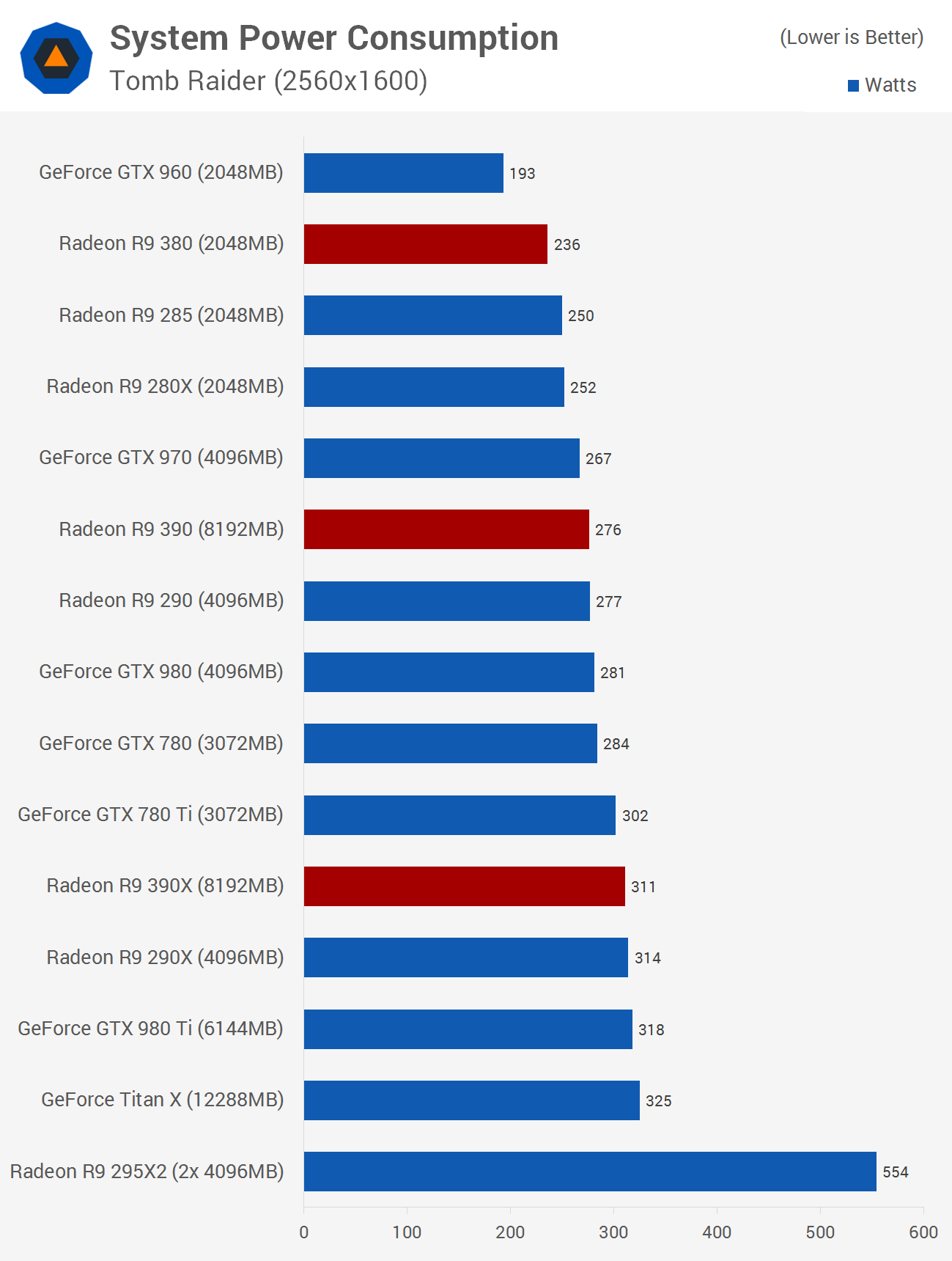

Not everyone wants a blast furnace crammed into their case. This is probably why he was saying that AMD's insistence on cannibalizing all process improvements to roll back into MOAR POWER is worrisome. To be fair, the Fury Nano does indicate that AMD recognizes not everyone wants absolute top performance at all costs, and that many people care about perf/watt and are working within a modest TDP limit. On the other hand, the R9 390X (370W gaming, 424W FurMark: much worse than Fermi) is an absurd and even obscene product, much like the FX-9590: outdated silicon made to run way past its limits to camouflage the inability of the company to provide a competitive design. AMD should be embarrassed to have ever released either one.

And he makes uneducated statements about how the entire R9 200 series is a rebadge of the HD 7000 series, from 2011. The only cards that were paper launched in 2011 were the 7970/7950, but no HD 7700/7800/7900 series card was ever for sale in 2011. They only launched in Q1 2012, which means they are 3 years old, not 4 years old. Another loss of credibility by fudging the data.

Yes, he should have been a bit more clear (and he also seems to think Tahiti was being rebadged again for the 300 series, which thankfully is not true now that they've got Tonga). But while you can nitpick around the edges, the fact remains that of the five ASICs used in the retail 200 series cards in 2013, four were rebadged (Cape Verde, Bonaire, Pitcairn, Tahiti) and only one was new (Hawaii). Tonga was also new, but that was released in late 2014 and really didn't belong in the 200 series at all, which is why it got such an odd numerical designation.

He also talks about how none of the R9 200 series was competitive at all, which is utter rubbish since the R9 270/270X smoked the 750/750 Ti in gaming, and the same goes for the 280X and 290 vs. the 960 2GB. He also didn't even mention how in the UK one could get AMD cards much cheaper than NV ones, which made a lot of them worth buying.

Some of the 200 series cards were competitive, especially during the Kepler era. During that time, they didn't fall far behind in efficiency, and defeated Nvidia products in perf/$. But Maxwell absolutely demolished them, leaving nothing for AMD to do but cut prices to the bone (and later, rebadge in hopes of tricking some buyers into thinking they were getting something new).

Your specific comparisons are flawed. 270/270X requires at least one six-pin power connector (and more in the case of the 270X), while 750/750 Ti requires no power connector. That means the two cards are in separate classes and shouldn't really be compared to each other. For someone who has an OEM system with a crappy 300-350W PSU that lacks PCIe connectors, the 750 Ti is a realistic option, but Pitcairn cards aren't. Someone doing a similar upgrade with AMD would have to resort to a much worse performing Cape Verde card. (The OEM R9 360 Bonaire card fits under 75W, but for some reason, the consumer R7 360 doesn't.)

Likewise, in terms of die size and TDP, it's inappropriate to compare GM206 (GTX 960) to much larger, more power-hungry chips like Tahiti and Hawaii. The appropriate comparison is between GM206 and Pitcairn, and Pitcairn gets absolutely demolished in that contest (not surprising, given that it's a 2012 design up against a 2015 design).

And he wrote off the entire AMD CPU stack in his GPU preview of AMD's new cards.

Well, AMD put an Intel CPU in their Project Quantum PC. The Bulldozer family has been described by high-ranking AMD employees as an "unmitigated failure". Seems to me that even AMD has essentially written off their current CPU stack. With the possible exception of Carrizo (assuming it appears in anything but cheap craptops), there won't be anything worth seeing on that front until Zen.