News AMD Announces Radeon VII

Justinbaileyman

Golden Member

- Aug 17, 2013

- 1,980

- 249

- 106

I would have liked to have seen a $499-$599 price tag but not a $699 price tag.

This is only going to be slightly better than the Vega 64, with what, the same TDP or thereabouts?

Really don't like the look of these prices compared to performance value.

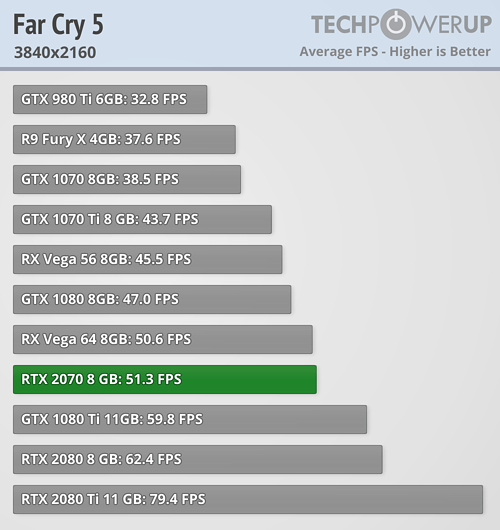

At least in FC5 it looks to be around 20% faster, but Vega 64 is $399 at Newegg right now, so yes, it's hard to justify the pricing.
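To put a rough number on that value gap, here is a minimal sketch. It takes the ~20% FC5 uplift and the $399 / $699 prices quoted above at face value; `perf_per_dollar` is a hypothetical helper name, and a single-game result is obviously not a full benchmark suite.

```python
def perf_per_dollar(relative_perf: float, price_usd: float) -> float:
    """Relative performance delivered per dollar spent."""
    return relative_perf / price_usd

# Numbers quoted in the thread, with Vega 64 as the 1.00 baseline in FC5.
vega64 = perf_per_dollar(1.00, 399)
radeon_vii = perf_per_dollar(1.20, 699)

# Fraction of Vega 64's performance-per-dollar that Radeon VII delivers.
value_ratio = radeon_vii / vega64
print(f"Radeon VII perf/$ relative to Vega 64: {value_ratio:.2f}")
```

Under those assumptions the new card delivers roughly two thirds of the Vega 64's performance per dollar.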

PhonakV30

Senior member

- Oct 26, 2009

- 987

- 378

- 136

I'd actually pay to see Lisa Su whoop his arse....Literally.

Saylick

Diamond Member

- Sep 10, 2012

- 4,137

- 9,663

- 136

I would have liked to have seen a $499-$599 price tag but not a $699 price tag.

This is only going to be slightly better than the Vega 64, with what, the same TDP or thereabouts?

Really don't like the look of these prices compared to performance value.

I don't think AMD has much option to price these any lower; 7nm ain't cheap, especially for GPU dies given their size. Mind you, Vega II products are most likely cut-down dies that did not make the cut for 7nm Instinct products. AMD has to charge as much as it thinks it can get away with for these dies.

Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

I love how he uses DLSS as a reason that 2080 is better. Because everybody wants to spend $700 on a GPU and then make their game look like crap by turning that "feature" on.

Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

Yeah, but people are spending $700 to not have the option to use ray tracing. So I guess it makes sense that DLSS would be the perfect option for them...

*shrug* Personally I could care less about having tiny pieces of the scene be ray traced, especially when it has such a huge performance hit.

frozentundra123456

Lifer

- Aug 11, 2008

- 10,451

- 642

- 126

It does according to AMD themselves, but that is too slow IMHO. Yeah, the 2080 is expensive, but 16 GB of HBM2 will also cost a lot. I doubt they can offer that cheaper than a 2080.

Yea, and if Anand's estimated power consumption of 300 watts is correct, still poor performance per watt relative to nVidia. (2080 is 215 watt TDP)

*shrug* Personally I could care less about having tiny pieces of the scene be ray traced, especially when it has such a huge performance hit.

I have the same feeling over 4K. Funny how this works, huh?

The problem here is that you have neither with this card: no ray tracing, no Tensor Cores (DLSS...), no mesh shading, no Variable Rate Shading, no USB-C, no better video encoder... This is just a Vega shrink with 16GB as the only marketing point. There will be games where an RTX 2070 will be faster, and even a 2060 won't be far away.

Harry_Wild

Senior member

- Dec 14, 2012

- 860

- 170

- 106

- Jun 10, 2004

- 14,608

- 6,094

- 136

The average gamer is going to react exactly like they did to the RTX lineup and say "nope" to the price tag.

An 8GB version would have half the memory bandwidth.

Why so?

UsandThem

Elite Member

- May 4, 2000

- 16,068

- 7,383

- 146

Yea, and if Anand's estimated power consumption of 300 watts is correct, still poor performance per watt relative to nVidia. (2080 is 215 watt TDP)

This is what I'm curious about as well. Many of the AIB partner RX64 cards recommended a 850w power supply.

I know some don't care about power/heat, but plenty do (like I do for Folding).

GodisanAtheist

Diamond Member

- Nov 16, 2006

- 8,544

- 9,978

- 136

Like most here, my interest in this card is going to be purely academic. I ain't spending $700 on a videocard (the total cost of my last CPU/Mobo/RAM/GPU upgrade combined) no way no how. I get that someone at AMD looked at the number of dies they had, the recoup cost, and figured it didn't make sense to price this thing any lower, but geez. HD 4870 this thing is not.

With that out of the way, there are a couple things that really did pique my interest:

- AMD being "nimble". It's heartening to see that AMD is aware enough of their GPU mindshare to fairly quickly convert their defective M125 dies into a "competitive" (a word that can be broadly interpreted) product. It's a good reminder to the faithful and the consumer GPU space as a whole that AMD isn't content to wander the desert for the next year while Navi bakes.

- 128 ROPS. Wat. As mentioned earlier in the thread, I was sure there was a whitepaper out there stating the GCN arch maxed out at 4096SP/128TMU/64ROP. Anandtech is just casually dropping the 128 ROP thing and walking away like it's no big deal. Did I misunderstand or did AMD have to build in a workaround so the 128 ROPs won't double pixel throughput as expected? Interested to know more about this.

- AMD's branding is ****ing garbage. Radeon VII?! Has AMD just totally given up on the entire concept of a "GPU Generation" and we can look forward to all their cards having some cutesy one-off name with nothing to inform the consumer where in the GPU landscape the card falls? We're looking at Radeon VII, Vega 64, Vega 56, Radeon RX 590, 580 etc... Aside from price, it's anyone's guess how all these cards stack up.

- Possible Radeon "V" further cut down die to compete with the RTX 2070. An additional 25% off the shader cores would land us in 48 CU territory. Nothing terribly new or exciting in terms of moving the performance bar, but I have to imagine AMD has to have a pile of even more defective chips that they can try and move if only for the purpose of keeping themselves at the front of tech websites during the 2019 year.

If nothing else, we got something new to argue about for the next couple months...

piesquared

Golden Member

- Oct 16, 2006

- 1,651

- 473

- 136

So Radeon VII is equal to or faster than a 2080 in gaming and it's also a great prosumer card. That puts AMD Radeon right back into the high-end, high-performance market. So this Vega II die is probably about half the size of the 2080? Wow if true. And it has fewer CUs than the previous core, so did they shrink the die further by eliminating compute units, or are some fused off?

PeterScott

Platinum Member

- Jul 7, 2017

- 2,605

- 1,540

- 136

Interesting Quote about RTX:

When asked about the negative reaction that the company’s GeForce RTX cards have received from more budget conscious customers, Huang was contrite.

“They were right,” Huang said. “[We] were anxious to get RTX in the mainstream market... We just weren’t ready.”

Though he goes on to say, now they are with 2060...

fierydemise

Platinum Member

- Apr 16, 2005

- 2,056

- 2

- 81

that basically justifies Nvidia's RTX pricing.

I think it is more correct to say this card exists at all as a consumer product because of Nvidia's pricing. If the RTX 2080 had started at a more traditional $550 or $600, AMD wouldn't even bother to bring this to market; it would be correctly derided as overpriced.

Instead, since Nvidia raised the price they created an opportunity for AMD to sell these to consumers at a competitive price point. I agree that the value proposition for Vega VII is pretty terrible, just like the 2xxx series, but that is the state of the market at the moment.

Ottonomous

Senior member

- May 15, 2014

- 559

- 293

- 136

- 128 ROPS. Wat. As mentioned earlier in the thread, I was sure there was a whitepaper out there stating the GCN arch maxed out at 4096SP/128TMU/64ROP. Anandtech is just casually dropping the 128 ROP thing and walking away like it's no big deal. Did I misunderstand or did AMD have to build in a workaround so the 128 ROPs won't double pixel throughput as expected? Interested to know more about this.

It's based on the intrinsic balance of the uarch. Vega10 was a bit unbalanced in that the ROPs were memory constrained; the doubled HBM2 memory and memory controllers should help.

Stuka87

Diamond Member

- Dec 10, 2010

- 6,240

- 2,559

- 136

Why so?

Because that's how HBM works: each stack has its own memory channel. By going to 16GB, they went from two to four channels. Each stack has a 1024-bit bus. Four stacks (16GB) equals a 4096-bit bus. 8GB (like Vega10) equals a 2048-bit bus. Plus the memory clocks are higher. The reason Vega wasn't as fast as it should have been was because it was ROP and memory bandwidth starved. By going to 128 ROPs and the most memory bandwidth of any gaming GPU ever (1TB/sec), it should be able to perform like it should have when it first launched.
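The bus-width arithmetic above can be sketched in a few lines. This is only an illustration: the per-pin data rates (1.6 Gbps for Vega 56, 2.0 Gbps for Radeon VII) are assumptions not stated in the post, chosen because they reproduce the 409 GB/s and 1 TB/s figures quoted in this thread, and `hbm2_bandwidth_gbs` is a hypothetical helper name.

```python
def hbm2_bandwidth_gbs(stacks: int, pin_rate_gbps: float,
                       bits_per_stack: int = 1024) -> float:
    """Peak bandwidth in GB/s: total bus width (bits) * per-pin rate (Gbit/s) / 8."""
    bus_width_bits = stacks * bits_per_stack
    return bus_width_bits * pin_rate_gbps / 8

# Assumed pin rates (not from the post): 1.6 Gbps and 2.0 Gbps.
vega56 = hbm2_bandwidth_gbs(stacks=2, pin_rate_gbps=1.6)      # 2048-bit bus
radeon_vii = hbm2_bandwidth_gbs(stacks=4, pin_rate_gbps=2.0)  # 4096-bit bus

print(f"Vega 56:    {vega56:.1f} GB/s")
print(f"Radeon VII: {radeon_vii:.1f} GB/s")
```

Doubling the stack count doubles the bus width on its own; the rest of the gap comes from the higher memory clock.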

But why do you continue to blab about power consumption? It's getting pretty old, and is a bit pointless. Vega was designed as a low-power chip for mobile/SFF use. When you crank up the clocks on this style of chip, power consumption skyrockets. Both Polaris and Vega were designed for other OEMs (Sony, Apple, MS). They all wanted low-power chips. AMD then decided to sell a gaming GPU based on these designs, and that performance was more important than power consumption, which in the big world, is true. So they cranked the clocks up, which is why undervolting AMD GPUs can result in giant power savings. The vast majority of buyers could care less about power consumption. All they care about is price and/or performance. If price is a bigger deal, people go for AMD. If top-end performance is more important, they spend more and go with nVidia.

Paratus

Lifer

- Jun 4, 2004

- 17,765

- 16,119

- 146

So last Christmas for $699 I got a Powercolor Red Devil RX Vega 56 that in some games, like Grand Theft Auto V, is equivalent to the 1070 FE and in others, like Tom Clancy’s The Division, is equivalent to the 2070 FE.

Now for $699 we’ll be able to get:

- 2X the RAM: 8GB to 16GB

- 2.5X the memory bandwidth: 409 to 1024 GB/s

- 2X the ROPs: 64 to 128

- 4 more CUs: 56 to 60

- 210 more peak MHz: 1590 to 1800 MHz

That’s not a bad upgrade. The only thing we don’t know is power consumption.

My card averages about 215W. 4 more CUs at 210 more megahertz is about 25% more theoretical performance and power. Double the 30W, 8GB, 2048-bit HBM2 memory and a theoretical VII would use about 305W.

However that is before any 7nm power savings. So I’ll put it between 250-300w depending on how hard they push voltage vs performance curve.
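That back-of-the-envelope estimate can be sketched as below. It is only a rough illustration: it assumes power scales linearly with CU count times clock, the function names are hypothetical, and since the post does not say exactly how the 25% and the doubled memory were combined, two plausible readings are shown side by side.

```python
# Figures quoted in the post (Vega 56 -> Radeon VII).
BASE_BOARD_W = 215   # average draw of the Vega 56 card
HBM2_8GB_W = 30      # rough power of the existing 8GB of HBM2

# Theoretical compute scaling: CU count times peak clock.
exact_scale = (60 * 1800) / (56 * 1590)
rounded_scale = 1.25  # the "about 25%" used in the post

def scale_core_only(board_w, mem_w, scale):
    """Scale only the non-memory power, then add a doubled memory budget."""
    return (board_w - mem_w) * scale + 2 * mem_w

def scale_whole_board(board_w, mem_w, scale):
    """Add a second 8GB of HBM2 first, then scale the whole board figure."""
    return (board_w + mem_w) * scale

core_only_w = scale_core_only(BASE_BOARD_W, HBM2_8GB_W, rounded_scale)
whole_board_w = scale_whole_board(BASE_BOARD_W, HBM2_8GB_W, rounded_scale)

print(f"compute scaling: {exact_scale:.3f}x (post rounds to {rounded_scale}x)")
print(f"core-only scaling:   {core_only_w:.0f} W")
print(f"whole-board scaling: {whole_board_w:.0f} W")
```

Either reading lands in the same 290-305W ballpark before any 7nm savings, and the exact CU-times-clock ratio is closer to 21% than 25%, so the estimate leans slightly high.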

The other thing I’ll say is man did I pick the wrong time to build a new rig last year.

beginner99

Diamond Member

- Jun 2, 2009

- 5,320

- 1,768

- 136

AMD's branding is ****ing garbage. Radeon VII?! Has AMD just totally given up on the entire concept of a "GPU Generation" and we can look forward to all their cards having some cutesy one off name with nothing to inform the consumer where in the GPU landscape the card falls?

It looks like a not-so-funny pun, especially made visible in the slide of the talk. The VII was written to look like Vega II. So it's probably meant to mean "Vega 2" and also align with the Ryzen series where R7 = top class. Ryzen 7, Radeon 7. E.g., if you buy a Ryzen 7, pair it with a Radeon 7.

Sonikku

Lifer

- Jun 23, 2005

- 15,916

- 4,959

- 136

The 1080 ti was $700 two years ago. So AMD is bringing us 1080 ti levels of performance two years later for... $700. "But it has more memory!" Meh.
