This is a non-gaming machine, so the choice is:

6 cores (11600K), better motherboard, lower price

or

8 cores (5700G), mediocre motherboard, slightly higher price.

I would go with AMD.

The 5700G wins in the following categories (and although this is not a gaming PC, the 5700G's Vega iGPU seems to be about 2x as fast as Intel's UHD graphics):

Office

Programming (software development)

Browsing

Encoding

Power usage / efficiency

The 11600K wins in Adobe CC (Photoshop and Premiere are practically a tie; After Effects is a clear win for Intel).

The system board should not make a big difference, as OP is not doing any extreme heavy lifting with the chosen components (of course, I could be wrong). While *good* PCIe 4.0 storage is faster than *good* PCIe 3.0 storage, the difference is visible mainly in benchmarks. If the 1G NIC is not enough when (if) he moves to a faster-than-1G network, 2.5G cards should be quite inexpensive by then. Currently, 2.5G (or 5G/10G) switches are still quite expensive.

The two platforms offer different upgrade paths, should he choose to upgrade. Intel gets PCIe 4.0 storage from the start and can use a PCIe 4.0 GPU; the CPU can be upgraded to an 11900K at most.

On the CPU side, AMD has the 5950X available now, and possibly a new, faster V-Cache version later (plus, of course, PCIe 4.0 for storage and GPU with the non-APU 5x00 processors, assuming a B550/X570 system board).

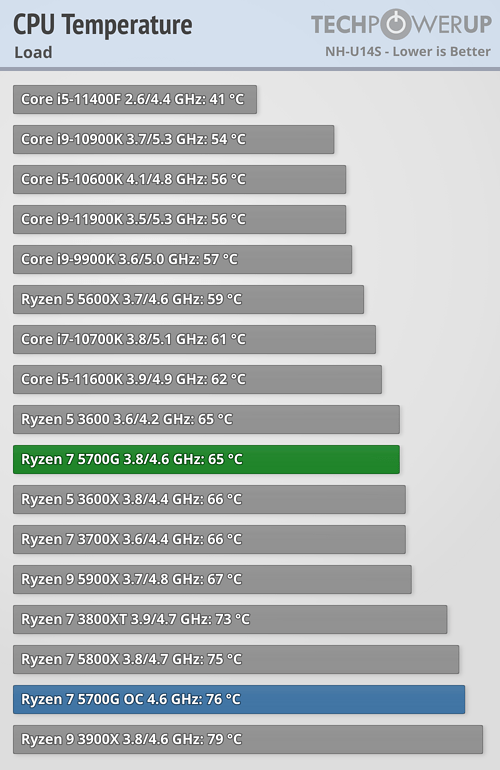

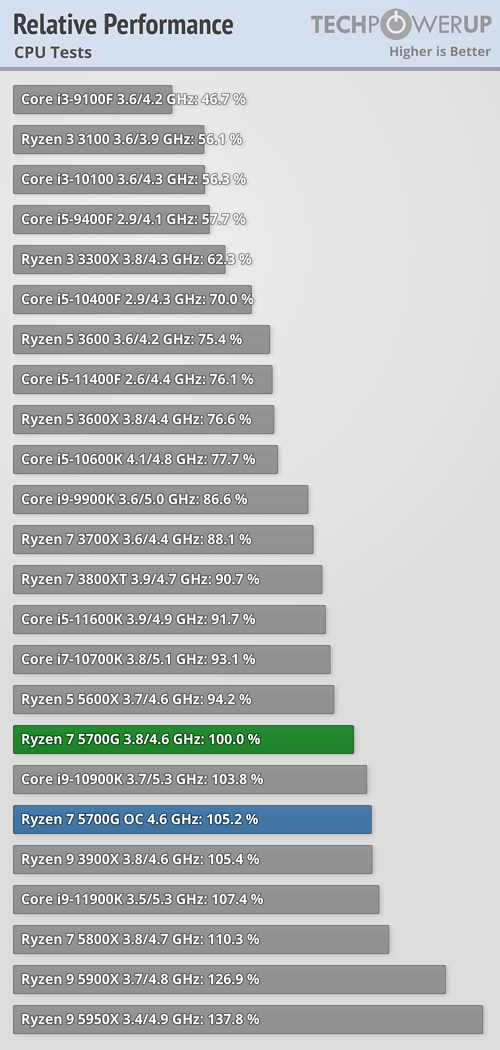

(I used the numbers from the TechPowerUp (TPU) 5700G review.)

Office use

5700G > 11600K (2% to 23% better, AMD wins 3/3 tests)

Adobe CC

5700G < 11600K (from 12% worse to 1% better, Intel wins 2/3 tests)

Visual Studio C++

5700G > 11600K (5% better, AMD wins 1/1 test)

Browsing

5700G > 11600K (from 2% worse to 11% better, AMD wins 2/3 tests)

Encoding

5700G > 11600K (2% to 29% better, AMD wins 4/4 tests)

Power usage of the whole system (figures are 5700G/11600K)

Power usage IDLE

5700G > 11600K (9% better, 52W/57W)

Power usage ST

5700G > 11600K (20% better, 74W/89W)

Power usage MT

5700G > 11600K (56% to 89% better; 150W/235W in Cinebench, 107W/203W in Prime95)

Efficiency ST (energy used in kJ, lower is better)

5700G = 11600K (1% better, 14.1/14.3)

Efficiency MT (energy used in kJ, lower is better)

5700G > 11600K (92% better, 9.8/18.9)
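For anyone wanting to check the arithmetic, here is a small sketch that recomputes the power and efficiency percentages from the whole-system figures above. It assumes (my reading, not stated by TPU) that "X% better" means how much more the 11600K uses relative to the 5700G, i.e. `intel / amd - 1`, truncated to a whole percent rather than rounded. `MEASUREMENTS` and `percent_advantage` are just illustrative names.

```python
# Whole-system figures quoted above, as (5700G, 11600K) pairs.
# Power is in watts, efficiency in kJ; lower is better for both.
MEASUREMENTS = {
    "Power idle": (52, 57),
    "Power ST": (74, 89),
    "Power MT (Cinebench)": (150, 235),
    "Power MT (Prime95)": (107, 203),
    "Efficiency ST": (14.1, 14.3),
    "Efficiency MT": (9.8, 18.9),
}


def percent_advantage(amd: float, intel: float) -> int:
    """How much more the 11600K uses than the 5700G, in whole percent.

    Assumption: the percentages in the post look like intel / amd - 1,
    truncated toward zero (int() truncates) instead of rounded.
    """
    return int((intel / amd - 1) * 100)


if __name__ == "__main__":
    for name, (amd, intel) in MEASUREMENTS.items():
        print(f"{name}: {percent_advantage(amd, intel)}% ({amd}/{intel})")
```

Under that assumption it reproduces the numbers listed: 9, 20, 56, 89, 1 and 92 percent. Rounding instead of truncating would give 10, 57, 90 and 93 for some of them, so the exact figures depend on that choice.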