CakeMonster

Golden Member

- Nov 22, 2012

His last tweet (with his perf predictions) was deleted.

That last tweet was always strange. I had thought that AMD had pretty well solved the bandwidth issue already.

Not quite the right place for this, but:

Looks like we're going to see an Ampere refresh next year. It would be nuts not to have a refresh at this stage, given how few cards have actually made it into the general public's hands and what is shaping up to be an absolutely nutty second-hand market here.

Why start rolling out new archs when there's 3/4 of a tank left in the current one...

Don't see the point unless it's on SS7. Full GA103 is too close to a 3080. There wouldn't be any room for a 3080 Super.

- A lot of speculation that GA103 will be a slimmed-down chip with a much smaller footprint but similar or higher performance than a 3080/Ti/GA102, thanks to higher clocks.

- Realign the now far-too-low MSRPs on the original Ampere line much higher, to bring them more in line with the cards' actual market value.

- Un-**** the RAM situation with the current lineup, which includes a 10GB top-end card.

Don't think they will fix this without 2 GB chips being available for GDDR6X. Not entirely clear that's happened.

Can't find any public info on 16 Gib GDDR6X modules. Wonder if it would be worth it to go with 16 Gib GDDR6 from Samsung (or elsewhere). Offering 16 GB of VRAM on a card with ~3070 Ti performance would be a worthwhile trade-off for competing with AMD.

They could have done that with the 3070 Ti but chose not to. Full GA103 is rumored to be 320 bit, although they could change it.

Too bad it isn't 384b. It's a tough sell in some respects against the higher memory capacities that AMD offers. With prices high, 'future' proofing must be on purchasers' minds. Nvidia guessed wrong in some respects, but they are selling everything they make, so it's not hurting them at all.
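For reference, the capacity math behind the 320-bit vs 384-bit point is simple: each GDDR6/GDDR6X chip sits on its own 32-bit channel, so bus width fixes the chip count and chip density fixes the capacity. A rough sketch in Python, assuming one chip per channel unless clamshell mode (two chips per channel) is switched on; the function name vram_gb is just for illustration:

```python
# Sketch: VRAM capacity implied by bus width and per-chip density.
# Assumes one 32-bit GDDR6/GDDR6X chip per 32-bit channel; clamshell mode
# (two chips sharing each channel) doubles the chip count.

CHANNEL_WIDTH_BITS = 32  # each GDDR6/GDDR6X device has a 32-bit interface


def vram_gb(bus_width_bits: int, chip_density_gbit: int, clamshell: bool = False) -> int:
    """Total VRAM in GB for a bus width (bits) and per-chip density (Gbit)."""
    if bus_width_bits % CHANNEL_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHANNEL_WIDTH_BITS
    if clamshell:
        chips *= 2
    return chips * chip_density_gbit // 8  # 8 Gbit per GB


for bus in (256, 320, 384):
    print(f"{bus}-bit: {vram_gb(bus, 8)} GB with 8 Gib chips, "
          f"{vram_gb(bus, 16)} GB with 16 Gib chips")
```

320-bit with 8 Gib chips works out to the 3080's 10 GB; the same bus with 16 Gib chips would give 20 GB, and 384-bit would give 12 GB or 24 GB.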

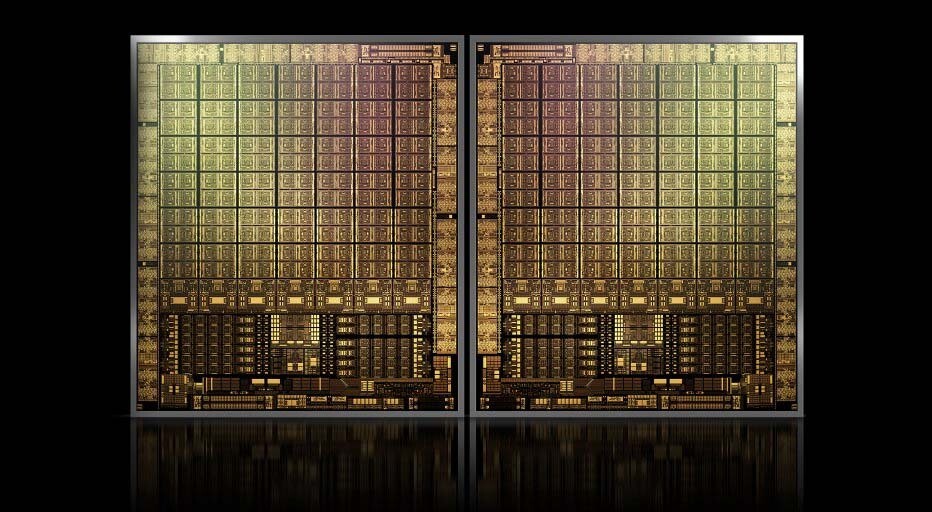

Are these really any different from old cards like the GTX 590 or the Radeon 5970, which were just an SLI/CrossFire setup on a single board? I suppose these are going to be chips on the same package rather than just the same board, but it doesn't seem as though either company is taking the same kind of approach as with Zen. Rather, this is only being done because otherwise the resulting monolithic chip would exceed the reticle limit.
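The reticle-limit point comes down to area arithmetic. A common maximum scanner exposure field is 26 mm × 33 mm, or about 858 mm². A naive Python sketch of how many dies a design would need just to stay under that limit; min_dies and the die sizes in the loop are hypothetical, and it ignores the extra area chiplets spend on die-to-die links:

```python
import math

# Common maximum exposure field of a lithography scanner: 26 mm x 33 mm.
RETICLE_LIMIT_MM2 = 26 * 33  # 858 mm^2


def min_dies(total_area_mm2: float, reticle_limit_mm2: float = RETICLE_LIMIT_MM2) -> int:
    """Minimum number of dies so that each one fits within the reticle limit.

    Naive lower bound: die-to-die links and duplicated I/O add area per
    chiplet, so a real split needs at least this many dies.
    """
    return math.ceil(total_area_mm2 / reticle_limit_mm2)


for area in (600, 858, 1200, 1800):  # hypothetical monolithic die sizes
    print(f"{area} mm^2 -> {min_dies(area)} die(s)")
```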

It looks like Lovelace is on TSMC 5nm, not Samsung; that's close to two node shrinks (definitely 1.5+, as Samsung 5nm is roughly equal to TSMC 7nm in power draw):

NVIDIA GeForce RTX 40 "Ada Lovelace" series design allegedly finalized, using TSMC 5nm - VideoCardz.com

NVIDIA Ada Lovelace launching next year using TSMC 5nm? Over the past few days, leakers have been sharing information on AMD RDNA3 and NVIDIA Hopper/Lovelace architecture. Important details were revealed by Greymon55 today. Greymon: Lovelace’s design is finalized, it is using TSMC 5nm node...
videocardz.com

Against the rumored chiplet-based RDNA3, Nvidia is gonna need all the help it can get, but the node shrink alone should allow significantly more SMs. If they can overhaul the architecture decently and add major new features (unlike the 3xxx series), they should be fine. Hard to see them keeping the rasterization crown, though.

As if supply constraints weren't bad enough. If true, it also says a lot about how NV feels its Samsung experiment went and is likely to go, which is a bit of a bummer if we're back to Samsung = high-volume, low-performance parts.

I mean, it made them buttloads of money and they didn't have to fight for capacity with all the other TSMC 7nm customers. Samsung gets a really bad rep, but I'm not sure NV would have wanted to make this choice differently.

For the next generation, buying into TSMC 5nm after Apple is done with most of the volume (if we assume a late-'22 release) would make sense for the economics of a large-scale order (just as Samsung made sense in '20).

NVIDIA has definitely had fewer supply issues than AMD. Hopefully going forward supply won’t be an issue.

They will have supply issues with Lovelace, since it will be on N5 alongside a ton of other products. Maybe they'll still source some lower-end GPUs from Samsung.

Crysis (Remastered), naturally.

I wonder what people are going to play on this. Are games really going to get that demanding in a year or two? The existing 3000 cards are already fast enough for pretty much everything except 4K RT without upscaling or top-end VR. They should just make it easier to buy the existing cards.

All the game studios also seem to be moving to multiplayer live-service games with microtransactions, where top-end graphics are not really the priority to begin with.

RTX 4090 owners when they only get 40fps in Crysis:

The VTOL level in the original game will still run at 40fps on your 4090 because it's CPU-bottlenecked.
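The CPU-bottleneck point can be shown with a simplified model: when the CPU and GPU work on frames in a pipeline, the slower of the two sets the frame rate, so a faster GPU stops helping once the CPU is the limit. A sketch in Python with made-up frame times (25 ms of CPU time per frame is what a 40fps cap would imply); effective_fps is a hypothetical helper, and the model ignores frame pacing and driver overhead:

```python
def effective_fps(cpu_frame_ms: float, gpu_frame_ms: float) -> float:
    """Simplified pipelined model: the slower stage (CPU or GPU) sets the frame rate."""
    return 1000.0 / max(cpu_frame_ms, gpu_frame_ms)


# Hypothetical numbers: the CPU needs 25 ms per frame in a heavy scene.
cpu_ms = 25.0
for gpu_ms in (40.0, 20.0, 10.0, 5.0):  # progressively faster GPUs
    print(f"GPU {gpu_ms:>4} ms -> {effective_fps(cpu_ms, gpu_ms):.0f} fps")
```

With those numbers, anything faster than a 25 ms GPU frame time lands at the same 40fps.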

With this much extra performance, maybe they can make a power efficient card for the peasants again.