Discussion Ada/'Lovelace'? Next gen Nvidia gaming architecture speculation


...And then shows a graphic showing the 3070 as the most efficient solution out there with its simple board and generic RAM... ok lol

It's not uncommon at all for the smaller (or more cut down) dies to be more efficient. It's also not the most efficient solution, that's actually the RX6800.

The point I was making, though, is that the performance-per-watt testing only shows a slight advantage for the 3070. If the board/memory power consumption were such a huge problem, you would expect the 3070 to have a huge lead in performance per watt, but we don't see that; it has a very small lead, and, again, it's not uncommon for the lower-tier card to be more efficient. So if the 3090 is burning all this power at the board and memory level, above and beyond what is normal for a modern graphics card, why is it so close to the 3070 in efficiency? How much power does the 8 GB of GDDR6 on the 3070 consume? What are its board-level losses like?
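Perf/W is just average frame rate divided by board power, so an efficiency "lead" is the ratio of two such numbers. A minimal Python sketch of that comparison; the frame rates and wattages below are hypothetical placeholders, not figures from the review charts being discussed:

```python
def perf_per_watt(avg_fps: float, board_power_w: float) -> float:
    """Average frames per second delivered per watt of total board power."""
    if board_power_w <= 0:
        raise ValueError("board power must be positive")
    return avg_fps / board_power_w


def efficiency_lead_pct(card_a: tuple[float, float], card_b: tuple[float, float]) -> float:
    """Percent by which card A's perf/W exceeds card B's (negative if A trails).

    Each card is given as (avg_fps, board_power_w).
    """
    return (perf_per_watt(*card_a) / perf_per_watt(*card_b) - 1.0) * 100.0


# Hypothetical example: a smaller card vs. a bigger, faster, hungrier one.
smaller_card = (100.0, 220.0)
bigger_card = (140.0, 320.0)
print(f"Smaller card perf/W lead: {efficiency_lead_pct(smaller_card, bigger_card):.1f}%")
```

With these made-up numbers the smaller card leads by only about 4%, even though the bigger card draws 100 W more; a small lead like that is the pattern the post describes.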

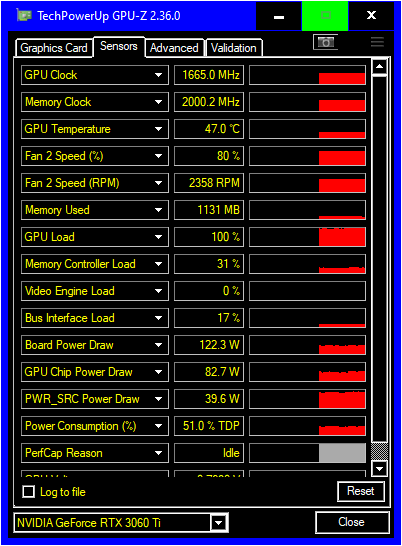

How much does PWR_SRC power draw say? I assume that's the memory power.

I haven't owned an NV card since Pascal, but PWR_SRC I believe relates to the power regulating circuitry on the board, not the memory. If someone knows for sure they can chime in.

At ~175 W (50% power limit):

GPU = ~30W

RAM = ~110W

Other = ~40W

Therefore, considering the size of the chip compared to a top-end Ryzen or Intel CPU, I don't think the power draw of the GPU itself is excessive. Like I alluded to, the biggest share of the power easily goes to the GDDR6 when running under load.
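Taking the approximate readings above at face value (whether the GPU-Z labels mean what they appear to is debated later in the thread), the split is easy to tabulate. A minimal Python sketch using only the numbers from this post:

```python
# Readings from the post above, at a ~175 W (50%) power limit, in watts.
# Assumes the GPU-Z labels mean what they appear to mean.
reported_total_w = 175.0
components_w = {"GPU": 30.0, "RAM": 110.0, "Other": 40.0}

component_sum_w = sum(components_w.values())
mismatch_w = component_sum_w - reported_total_w  # positive: parts exceed the total

for name, watts in components_w.items():
    share = watts / component_sum_w
    print(f"{name:>5}: {watts:5.1f} W ({share:.0%} of the component sum)")
print(f"Component sum {component_sum_w:.0f} W vs. reported ~{reported_total_w:.0f} W "
      f"({mismatch_w:+.0f} W)")
```

RAM comes out at roughly 61% of the component sum, and the parts add up to 180 W against ~175 W, a gap small enough to be rounding in the "~" values.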


But these tests barely fill a quarter to a third of the 3090's GDDR6 capacity, so they are not a good indicator of the power draw of 24 GB running at full tilt.


GPU-Z says PWR_SRC is the supply to the laptop! (I'm assuming that means the power regulating circuitry or suchlike, as you say.)

The important ones are the ones I highlighted in my second screengrab.

Note: I'm just showing the results I found on my board with a quick test. I'm not an expert, and I assume the numbers GPU-Z shows are reasonably correct.


I'm thinking they are not, or at least the interpretation is not. If you add up all of the power consumption numbers, the total goes way above the reported board power consumption. I'm guessing that the way Ampere's sensors report is different from previous generations and GPU-Z hasn't been properly adjusted yet (partially evidenced by the PWR_SRC description).

Also, are you overclocking your RAM?


What are you testing with that loads the 3090 VRAM so much more than modern 4K games?


HWiNFO has better numbers that almost add up. Ampere seems to have quite a few sensors for DRAM, cache, and misc. sources. They're easy to import into MSI Afterburner and then display as usual in RTSS.

I make my own assets and visualizations/games in UE / C4D / Blender etc. Unoptimized assets can kill anything. I can bring my 3090 down to 1 FPS! LOL. And you can have 3 games on 3 screens for the win!


I have studied the GPU-Z results and I think they make sense when you understand them. I don't think you can add them all up, though, because as you say, the total goes well above 350 W. Some are subtotals, I believe, like PWR_SRC.


With such an extreme and unusual workload, it's hard to say what the real consequences are without similar tests on other cards, at least as far as they can go with their smaller VRAM. If you are running Blender and more 3D-rendering-type loads, each card may behave very differently than it does during gaming.


The problem is understanding them. If you can't add them all up, then it's hard to say what is actually using power where because if you add just the GPU chip power and what is supposedly memory power, you come well short of the board power. But if you then add the PWR_SRC reading, it's well above board power. So is there overlap between sensors? Should some amount of some sensors be added to others to be equal with earlier generation readings? It'd be nice to see the HWinfo numbers if @JoeRambo is correct that they seem to add up better.
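One way to frame the overlap question is to ask which combinations of sensor readings add up to the reported board power. A minimal Python sketch; the sensor names and wattages are hypothetical placeholders chosen to mimic the pattern described above (GPU chip plus memory falls short, adding PWR_SRC overshoots), not real Ampere readings. A match only shows that the numbers are arithmetically consistent, not what each rail physically feeds.

```python
from itertools import combinations


def subsets_matching_total(readings: dict[str, float], board_power_w: float,
                           tolerance_w: float = 5.0) -> list[tuple[str, ...]]:
    """Return every combination of sensor names whose readings sum to within
    tolerance_w of the reported board power, smallest combinations first."""
    names = sorted(readings)
    matches = []
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            total = sum(readings[name] for name in combo)
            if abs(total - board_power_w) <= tolerance_w:
                matches.append(combo)
    return matches


# Hypothetical readings in watts (not real measurements).
readings = {"GPU chip": 120.0, "MVDDC": 80.0, "PWR_SRC": 230.0}
board_power_w = 350.0

print(subsets_matching_total(readings, board_power_w))
```

With more sensors in play (HWiNFO exposes quite a few on Ampere), several different subsets can match, which is why the readings are hard to interpret without knowing what each one measures.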

Maybe, but it quite clearly states total power, GPU power and RAM power, and that's all I was really interested in.


Yes, but is it really what you think it is? That's the question. Are you able to check HWiNFO to see if it shows the same results?

Edit: Your GPU-Z screenshots also show only 6 GB of VRAM being used, well within 4K gaming usage, so it's not a VRAM usage issue here. There's something else going on, and I'm guessing it has far more to do with the sensors being misinterpreted than with actual power usage.

I don't think the workload matters so much. The card is rated at 350 W max, and I created a workload to push everything to 100%. I reached a max power draw of 350 W and saw the ratio between GPU and RAM power draw when operating at max, and clearly the RAM has the capacity to draw considerably more juice than the GPU when both are flat out (at stock).

I can't imagine a scenario where I would get higher power draw on either the GPU or the RAM by reducing the power draw of the other component(s), so I think my test is reasonably reliable.


Workload absolutely matters; otherwise FurMark wouldn't be a thing. From what I've seen in others' posts, a 3090 typically uses around 75-80 W on the MVDDC (FBVDD) rail when doing high-res gaming, and upwards of 100 W if overclocked. But again, what is actually being fed by that line? Is it really just the memory, or is it feeding into the GPU IC as well (e.g. memory controller voltage)? It seems to me that the assumption that the GPU Chip Power reported by GPU-Z is the total GPU IC power draw is false. Unfortunately, I don't have much to go on without a 3080/3090 to test myself.
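To put the quoted MVDDC figures in per-chip terms, one can divide by the number of memory packages; the RTX 3090 carries 24 GDDR6X packages of 1 GB each, split across both sides of the board. A minimal Python sketch, assuming the entire rail feeds the memory packages, which is exactly what the post is unsure about; if part of it feeds the memory controller or other logic, these per-package figures are overestimates.

```python
RTX_3090_MEMORY_MODULES = 24  # 24 x 1 GB GDDR6X packages on the RTX 3090


def watts_per_module(rail_power_w: float, module_count: int) -> float:
    """Average power per memory package, assuming the whole rail feeds the
    memory packages (the very assumption being questioned)."""
    if module_count <= 0:
        raise ValueError("module_count must be positive")
    return rail_power_w / module_count


# Rail figures quoted in the post, in watts.
for label, rail_w in [("gaming, low", 75.0), ("gaming, high", 80.0), ("overclocked", 100.0)]:
    per_module = watts_per_module(rail_w, RTX_3090_MEMORY_MODULES)
    print(f"{label:>12}: {per_module:.2f} W per package")
```

Under that assumption it works out to roughly 3.1 to 3.3 W per package while gaming and about 4.2 W overclocked.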


I downloaded this now. I'm not familiar with it, but I think it's showing the same info.

If the board power and VRAM power was so bad (compared to other modern graphics cards), you would expect that the 3070 with GDDR6 (non-x) and a much simpler PCB and components would be much more efficient than a 3080 or 3090, but that doesn't seem to be the case?

I'm not really sure what you're trying to prove. The 3080 only has 10 GB of GDDR6X and isn't using twice as many chips and the 3070 has the clocks pushed a little bit further than the 3080 so you shouldn't expect to see much of a difference between the two.

You'd want to include a 3090 in there since the clocks are the lowest and it has twice the RAM chips as a 3080.

View attachment 36649


Yes and no. It does show lines that are the same as GPUz, but it also shows multiple GPU core powers (that have a very large difference in power consumption) as well as multiple misc. powers, output powers, and other powers also. Without knowing what each sensor is actually reporting it's hard to say what exactly is happening power wise outside of board power. There's obviously a lot of overlap between multiple sensors. I'll try to look more into it before the holiday break is over.


I'm not trying to prove anything really. I'm just pointing out that the evidence doesn't support the theory that Ampere's efficiency is significantly hurt by its memory type and capacity compared to other modern video cards. By the way, the 3090 was included in my second screenshot and showed higher efficiency than the 3080 according to HWU. I didn't include TPU with a 3090 in the chart because they didn't review a 3090 reference card.

tviceman

Diamond Member

The efficiency on Ampere is only bad because Nvidia did what AMD had been doing for several generations and pushing the cards to the limits of the silicon which makes them guzzle power.

Based on testing from numerous websites and forum users the power draw can be cut dramatically with a very small decrease in clock speed and an accompanying under-volt.
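The reason a small clock reduction plus an undervolt saves so much is that switching power scales roughly with frequency times voltage squared (P ≈ C·V²·f), and the lower clock is what allows the lower voltage. A minimal Python sketch of that textbook first-order approximation; the 5% and 10% figures are hypothetical, not results from the testing mentioned above:

```python
def relative_dynamic_power(clock_scale: float, voltage_scale: float) -> float:
    """First-order CMOS dynamic power ratio, P ~ C * V**2 * f.

    Ignores leakage, memory and board losses, so it only approximates
    the GPU core's share of the savings.
    """
    return clock_scale * voltage_scale ** 2


# Hypothetical undervolt: 5% lower clock with 10% lower core voltage.
ratio = relative_dynamic_power(clock_scale=0.95, voltage_scale=0.90)
print(f"Dynamic power: {ratio:.1%} of stock ({1 - ratio:.1%} saved)")
```

Those made-up numbers give roughly a 23% cut in core dynamic power for a 5% clock loss, which is the shape of the trade-off the testing describes.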

A die shrink makes sense, but just adding more CUDA cores doesn't make a lot of sense; I think they'll want to find ways to better utilize all of those cores and overhaul the RT portions of the architecture to aim for at least doubling the performance again.

Nvidia definitely pushed Ampere to the limit, but given that Navi 21 is about 15% more dense on TSMC 7nm, AND AMD's huge perf/W increase within the same TSMC 7nm node, I think it's a foregone conclusion that Samsung's 8nm is noticeably inferior to TSMC's. A straight port to TSMC would likely yield a 10-15% drop in die sizes and a 10-20% increase in efficiency at the same clock speeds.
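The density claim can be checked against the commonly published die figures (GA102: about 28.3 billion transistors on 628.4 mm²; Navi 21: about 26.8 billion on 520 mm²), and the 10-15% shrink applied to GA102's area. A minimal Python sketch; the shrink range is the post's own estimate, not a known result:

```python
# Commonly published die figures: (transistors in billions, area in mm^2).
GA102 = (28.3, 628.4)   # Samsung 8nm
NAVI21 = (26.8, 520.0)  # TSMC 7nm


def density_mtr_per_mm2(transistors_bn: float, area_mm2: float) -> float:
    """Transistor density in millions of transistors per mm^2."""
    return transistors_bn * 1000.0 / area_mm2


ga102_density = density_mtr_per_mm2(*GA102)
navi21_density = density_mtr_per_mm2(*NAVI21)
density_ratio = navi21_density / ga102_density

# The post's guess for a straight port: a 10-15% smaller die.
ported_area_range = tuple(GA102[1] * (1.0 - shrink) for shrink in (0.10, 0.15))

print(f"GA102 {ga102_density:.1f} vs Navi 21 {navi21_density:.1f} MTr/mm^2 "
      f"(Navi 21 {density_ratio - 1:.0%} denser)")
print(f"Ported GA102 estimate: {ported_area_range[1]:.0f}-{ported_area_range[0]:.0f} mm^2")
```

That gives a density gap of about 14%, in line with the "about 15%" above, and a hypothetical ported GA102 of roughly 534 to 566 mm².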

Kopite's tweeting about Lovelace again; this time it's going to show up in a next-gen Tegra chip? I wonder if Lovelace is especially low power or if it's just what Nvidia has on their roadmap for next gen. Doesn't seem like an Ampere+ at this rate.


Ampere+ is exactly what it looks like. The theoretical density of SS5 is almost double that of SS8, however, so they could easily just jack up the SM counts by 25% or more.
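For scale, the full GA102 die has 84 SMs with 128 FP32 CUDA cores each (10,752 in total). A minimal Python sketch of what a 25% SM bump would mean, plus a deliberately naive area estimate that assumes the "almost double" density applies to the whole die; real chips never scale that cleanly, because I/O, analog and SRAM shrink less than logic does:

```python
GA102_FULL_SMS = 84
FP32_CORES_PER_SM = 128  # GA10x consumer Ampere SM


def scaled_design(sm_increase: float, density_gain: float) -> tuple[int, int, float]:
    """Return (SM count, FP32 core count, die area relative to GA102).

    The area figure naively assumes the whole die scales with SM count
    and shrinks by the full density gain.
    """
    sms = round(GA102_FULL_SMS * (1.0 + sm_increase))
    cores = sms * FP32_CORES_PER_SM
    relative_area = (1.0 + sm_increase) / density_gain
    return sms, cores, relative_area


sms, cores, relative_area = scaled_design(sm_increase=0.25, density_gain=2.0)
print(f"{sms} SMs, {cores} FP32 cores, ~{relative_area:.0%} of GA102's area (idealized)")
```

Even under that idealized assumption, the point is that a 25% wider chip could still fit in a noticeably smaller die than GA102, leaving room to go further.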


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.