> Though the GDDR7 part seems silly, afaik it's not even done being finalized by JEDEC as a spec yet, let alone coming out this year.

This is exactly what everyone thought when Ampere was leaked as having GDDR6X, which still has no JEDEC specs. I'd absolutely believe Micron would sell Nvidia GDDR7 before it's officially in the specification, but I'd sooner believe that part of his 'leak' is actually just speculation. Samsung is pushing standard GDDR6 modules up to speeds higher than currently available GDDR6X, so a new generation/iteration of GDDR6X does seem very likely.

Discussion Ada/'Lovelace'? Next gen Nvidia gaming architecture speculation

Page 8 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

Saylick

Diamond Member

> I'm getting GTX 480 vibes again

This was my exact thought. This will just be Fermi v2.0, and with twice the power consumption of the original Fermi to boot. Good lord, how is anyone going to game with a 500W+ GPU without their room heating up 10 degrees? It's almost a guarantee that the AC unit will need to be turned on whenever you game.

moonbogg

Lifer

If increasing the power means miners don't want them, then I'm fine with a 1000 watt GPU.

nVidia has solved the mining problem! By making cards that draw enormous amounts of power, they make mining on them unprofitable! Praise Jensen!

ETH miners actually run their cards with an underclock since it's memory bound. The Ampere cards perform quite efficiently when they aren't being pushed to the limits for that last 5% performance bump.

Even if they were mining something that's compute bound, clock speeds and voltage will be adjusted to maximize profit. NVidia would have to create a worse performing card in general for miners to shun it.

How can it be slightly less than 1000 mm² and monolithic?

It will be one-die-per-wafer sized :-D
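The joke above turns on two limits worth putting numbers on: a monolithic die has to fit inside one lithography reticle field, and even a huge die still leaves dozens of candidates per wafer. A rough back-of-the-envelope sketch, assuming the standard 26 mm × 33 mm full-field reticle (858 mm²) and a 300 mm wafer. Neither figure comes from this thread, and the gross-dies formula is a common approximation that ignores scribe lines, edge exclusion and defects.

```python
import math

# Assumptions (not from the thread): standard full-field reticle and 300 mm wafer.
RETICLE_MM2 = 26 * 33        # 858 mm^2, the largest area a single exposure can print
WAFER_DIAMETER_MM = 300.0


def fits_in_reticle(die_area_mm2: float) -> bool:
    """A monolithic die must fit within a single reticle field."""
    return die_area_mm2 <= RETICLE_MM2


def gross_dies_per_wafer(die_area_mm2: float,
                         wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Rough gross-die estimate: wafer area / die area, minus an edge-loss term.

    Ignores scribe lines, edge exclusion and defects, so treat it as an upper bound.
    """
    radius = wafer_diameter_mm / 2
    estimate = (math.pi * radius ** 2 / die_area_mm2
                - math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2))
    return max(0, math.floor(estimate))


# Illustrative die areas in mm^2 (hypothetical inputs).
for area in (600.0, 826.0, 990.0):
    print(f"{area:.0f} mm^2: fits reticle={fits_in_reticle(area)}, "
          f"~{gross_dies_per_wafer(area)} gross dies per 300 mm wafer")
```

By this estimate a ~990 mm² die would not fit a standard reticle at all, yet roughly 50 such dies would still fit on a 300 mm wafer, so "one die per wafer" is hyperbole rather than arithmetic.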

The 4090 with 144 SMs (well, probably slightly fewer) and a rumored 96 MB of L2 cache sounds great. I'll be all over it, but I hope the price won't be higher than the current inflated 3090 price... which is like 2500 euros with VAT.

igor_kavinski

Lifer

> How is Nvidia planning to counter 256MB/512MB of stacked Infinity Cache on RDNA3?

600W cards

Saylick

Diamond Member

> How is Nvidia planning to counter 256MB/512MB of stacked Infinity Cache on RDNA3?

96 MB of on-die cache, humongous monolithic die, ramping up clocks and consequently power, and most importantly of all: launch Lovelace before RDNA3 with the full might of the Nvidia marketing team.

Saylick

Diamond Member

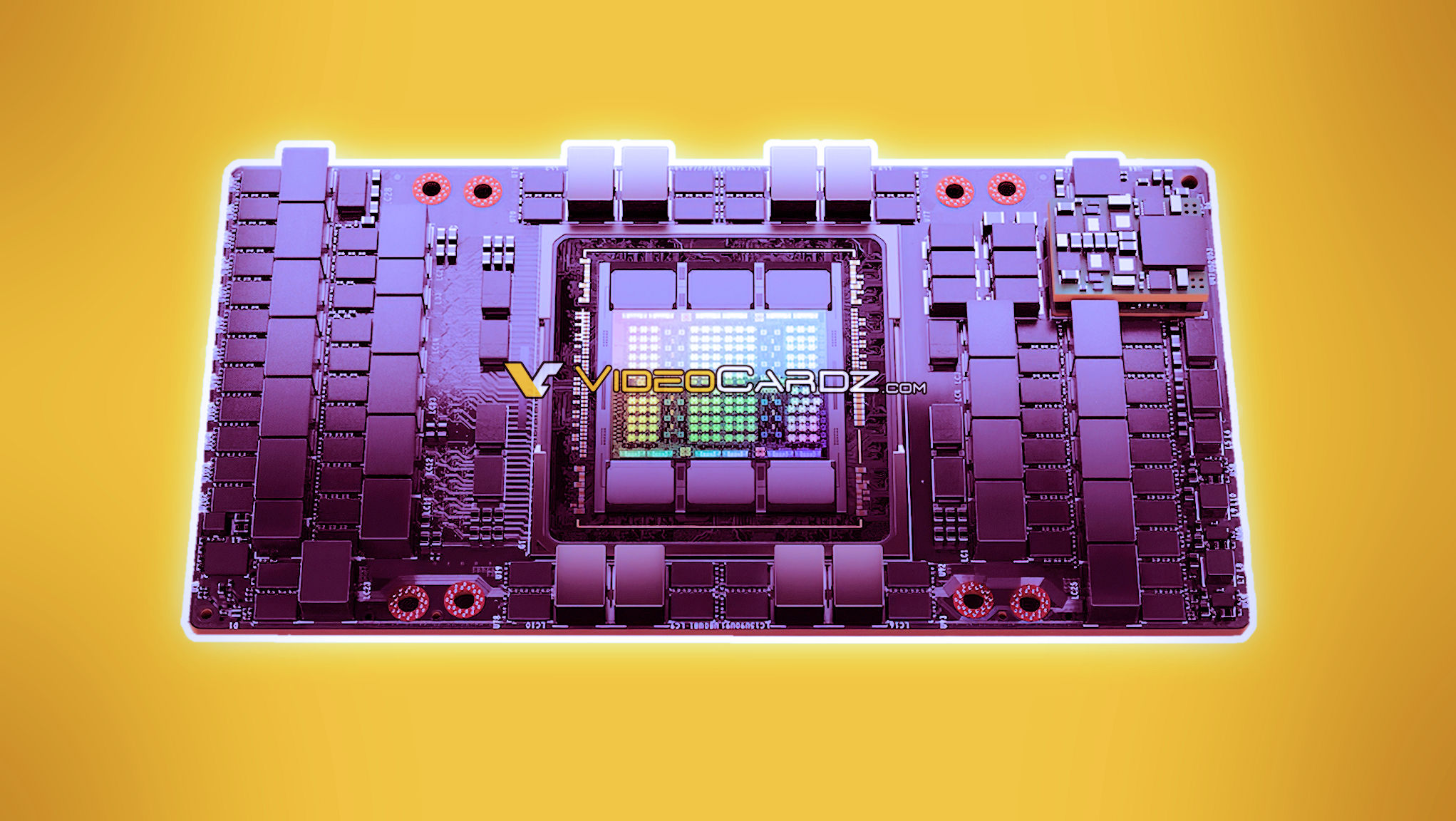

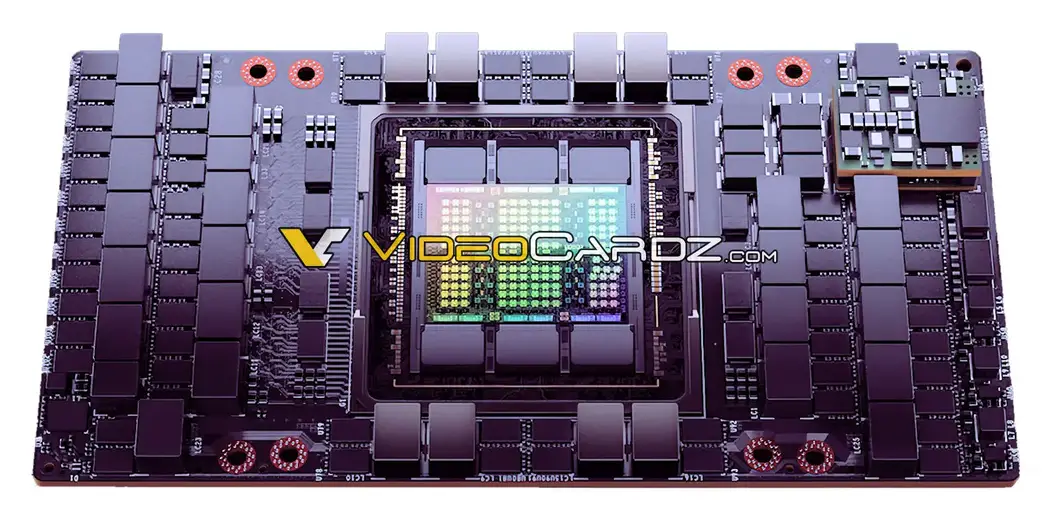

Videocardz has some leaks (more so from the usual Twitter leakers) about Hopper.

videocardz.com

NVIDIA GH100 Hopper GPU comes with 48MB of L2 Cache, only one GPC has graphics enabled - VideoCardz.com

NVIDIA GH100 Hopper: only one GPC with graphics enabled An interesting part of the NVIDIA leak has just resurfaced. Twitter user Locuza specializing in creating visual summaries of the existing knowledge on upcoming hardware products, has made a new GPU diagram featuring NVIDIA GH100 GPU, the...

igor_kavinski

Lifer

Saylick

Diamond Member

> If Nvidia manages to win with just 96MB cache against RDNA3's 512MB, I'll be like "WOAHHHHH!".

Yeah, but it has a 50% bigger memory bus with memory that delivers more transfers per second.
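To put the "50% bigger memory bus with more transfers per second" point into numbers: peak DRAM bandwidth is bus width times per-pin data rate, divided by 8 bits per byte. The bus widths and data rates below are illustrative assumptions, not leaked specs for either Lovelace or RDNA3, and the formula says nothing about how much traffic a large on-die cache absorbs.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak DRAM bandwidth in GB/s: bus pins x per-pin rate (Gbit/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Hypothetical configurations: a 384-bit bus is 50% wider than a 256-bit one,
# and the wider card is also assumed to run faster memory.
wide = peak_bandwidth_gbs(384, 21.0)
narrow = peak_bandwidth_gbs(256, 16.0)
print(f"{wide:.0f} GB/s vs {narrow:.0f} GB/s ({wide / narrow:.2f}x)")
```

With these made-up numbers the wider bus alone accounts for 1.5x and the faster memory multiplies that further; how often the 96 MB vs 512 MB caches hit is what decides whether raw bus bandwidth matters as much.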

Aapje

Golden Member

> This is exactly what everyone thought when Ampere was leaked as having GDDR6X, which still has no JEDEC specs. I'd absolutely believe Micron would sell Nvidia GDDR7 before it's officially in the specification, but I'd sooner believe that part of his 'leak' is actually just speculation. Samsung is pushing standard GDDR6 modules up to speeds higher than currently available GDDR6X, so a new generation/iteration of GDDR6X does seem very likely.

GDDR6X uses PAM4 signalling to send more data over the same size bus. This is expensive, so I wouldn't expect GDDR7 to use PAM4.

GDDR7 will require a smaller production process, but Samsung has been having problems with their 5 nm yield and there is a shortage of production capacity for the foreseeable future, so they might not be able to produce it right now. For GDDR7 to make it into the first version of Lovelace, they would already have to be tooling up their plants, which I haven't heard anything about, so I don't see that happening. Perhaps for a refresh in 2023.
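The PAM4 point in the post above comes down to bits per symbol: NRZ signalling uses two voltage levels (1 bit per symbol) while PAM4 uses four (2 bits per symbol), so at the same symbol rate each pin carries twice the data. A minimal sketch, where the 10.5 GBaud symbol rate is only an example value; the cost side (tighter spacing between voltage levels, more complex receivers) is not modelled here.

```python
import math


def bits_per_symbol(levels: int) -> float:
    """An L-level PAM signal carries log2(L) bits per symbol."""
    return math.log2(levels)


def pin_data_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Per-pin data rate = symbol rate x bits per symbol."""
    return symbol_rate_gbaud * bits_per_symbol(levels)


SYMBOL_RATE_GBAUD = 10.5  # example value, not a spec figure
print("NRZ :", pin_data_rate_gbps(SYMBOL_RATE_GBAUD, 2), "Gbps per pin")
print("PAM4:", pin_data_rate_gbps(SYMBOL_RATE_GBAUD, 4), "Gbps per pin")
```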

GodisanAtheist

Diamond Member

> If Nvidia manages to win with just 96MB cache against RDNA3's 512MB, I'll be like "WOAHHHHH!".

- It's NV's market to lose, quite honestly. They're in kind of an awkward position (from a hobbyist perspective): if they win, it's what everyone expected, because it's what they've done for every launch since the 7xxx GHz editions, while if they lose, it will be a shocking upset for the same reason.

RDNA 2 competing across the stack in raster performance while also bringing RT performance to the table is already a massive feat that I don't think most folks really expected (there were a lot of "AMD will be competing with the 3070" posts pre-launch).

AMD weirdly had the benefit of basically 0 expectations after the Vega/Polaris debacle, although folks are certainly more expectant of RDNA3 than either of the prior archs.

Aapje

Golden Member

I think that AMD's GPU side is really benefiting from the investments on the CPU side, as they seem to have really taken advantage of innovations that were made for CPUs. For example, both multi-die and Infinity Cache were developed for the CPUs, but also used in the GPUs.

I wonder if this is why Nvidia tried to buy ARM, to also take advantage of such synergy. In the future, Nvidia will be up against two competitors with a strong CPU division, who can thus make investments that pay off in two markets, while Nvidia has to earn it all back by selling GPUs.

Saylick

Diamond Member

> - It's NV's market to lose, quite honestly. They're in kind of an awkward position (from a hobbyist perspective): if they win, it's what everyone expected, because it's what they've done for every launch since the 7xxx GHz editions, while if they lose, it will be a shocking upset for the same reason.
>
> RDNA 2 competing across the stack in raster performance while also bringing RT performance to the table is already a massive feat that I don't think most folks really expected (there were a lot of "AMD will be competing with the 3070" posts pre-launch).
>
> AMD weirdly had the benefit of basically 0 expectations after the Vega/Polaris debacle, although folks are certainly more expectant of RDNA3 than either of the prior archs.

Yeah, being the market leader, it's always been Nvidia's market to lose. I don't think a competitor has been this close to Nvidia in overall performance, perf/W, and feature set in a LONG time. AMD was already on par with Nvidia in raster performance with RDNA 2, and they are expected to make another step-function jump in raster and RT performance with RDNA 3. Even if Lovelace beats RDNA 3 in RT (say, by 30-50%), I feel like we're still in that awkward transition phase where RT gets better every year, but there's still no real game that leverages RT in a way that makes the game unplayable or not enjoyable with RT disabled. In other words, RT is still taking a back seat to rasterization in modern games; it is only used to enhance certain visual effects and, as a whole, isn't a "requirement".

Going back to feature set, FSR 2.0 is going to come out later this year, and if all reports are true, AMD is really trying to button it up so that RDNA 3 hits it out of the park without controversy or trouble.

Lastly, if AMD is already gunning for Nvidia's top end, Intel will come in and hit Nvidia's bottom end when Arc launches later this year. Rumors say that Intel is willing to make less profit per card just to get a foothold in the discrete GPU market, and they plan on launching ASAP to get into customers' wallets before AMD or Nvidia can properly launch their next-gen cards.

Nvidia is being squeezed in the mobile/laptop space as well, for the aforementioned reasons. Since they don't design CPUs, once AMD and Intel have a competitive laptop GPU in both performance and feature set, they will simply bundle their CPU+GPU together and push Nvidia out.

If you've been following Nvidia's business moves in the last few months, you'll notice that Nvidia has been buying a bunch of smaller companies to flesh out their HPC/enterprise software stack. I think Nvidia knows that their core markets will be more competitive than ever before, and in response they have to diversify and expand their other markets to compensate.

Saylick

Diamond Member

> I think that AMD's GPU side is really benefiting from the investments on the CPU side, as they seem to have really taken advantage of innovations that were made for CPUs. For example, both multi-die and Infinity Cache were developed for the CPUs, but also used in the GPUs.
>
> I wonder if this is why Nvidia tried to buy ARM, to also take advantage of such synergy. In the future, Nvidia will be up against two competitors with a strong CPU division, who can thus make investments that pay off in two markets, while Nvidia has to earn it all back by selling GPUs.

Absolutely. The GPU side benefits not just from leveraging what AMD's CPU design teams have learned, but also from the fact that the CPU side was successful and profitable, which means the financial resources AMD can allocate to the GPU side have never been greater.

And both AMD and Nvidia see that the future (for certain tasks) is putting memory and compute right next to the CPU with stronger and stronger "glue".

The ARM acquisition makes sense in that regard, but I don't think it is *necessary* for Nvidia to create converged accelerators or whatever you want to call them.

Glo.

Diamond Member

> If Nvidia manages to win with just 96MB cache against RDNA3's 512MB, I'll be like "WOAHHHHH!".

Short story, they won't.

Again: don't expect miracles from Ada. It's Ampere on a smaller node, with a larger L2 cache that lifts the memory bandwidth bottleneck.

To some degree...

Saylick

Diamond Member

> Videocardz has some leaks (more so from the usual Twitter leakers) about Hopper.

Videocardz dropping some bombs before tomorrow's GTC presentation:

NVIDIA next-gen H100 Hopper GPU for High Performance Computing pictured - VideoCardz.com

NVIDIA H100, company’s first 4nm GPU Tomorrow NVIDIA CEO Jensen Huang will announce the new H100 series of products for data-centers. The render that leaks just hours ahead of the announcement confirms some key details on NVIDIA’s Hopper GH100 GPU. Such as the fact that GH100 is a large...

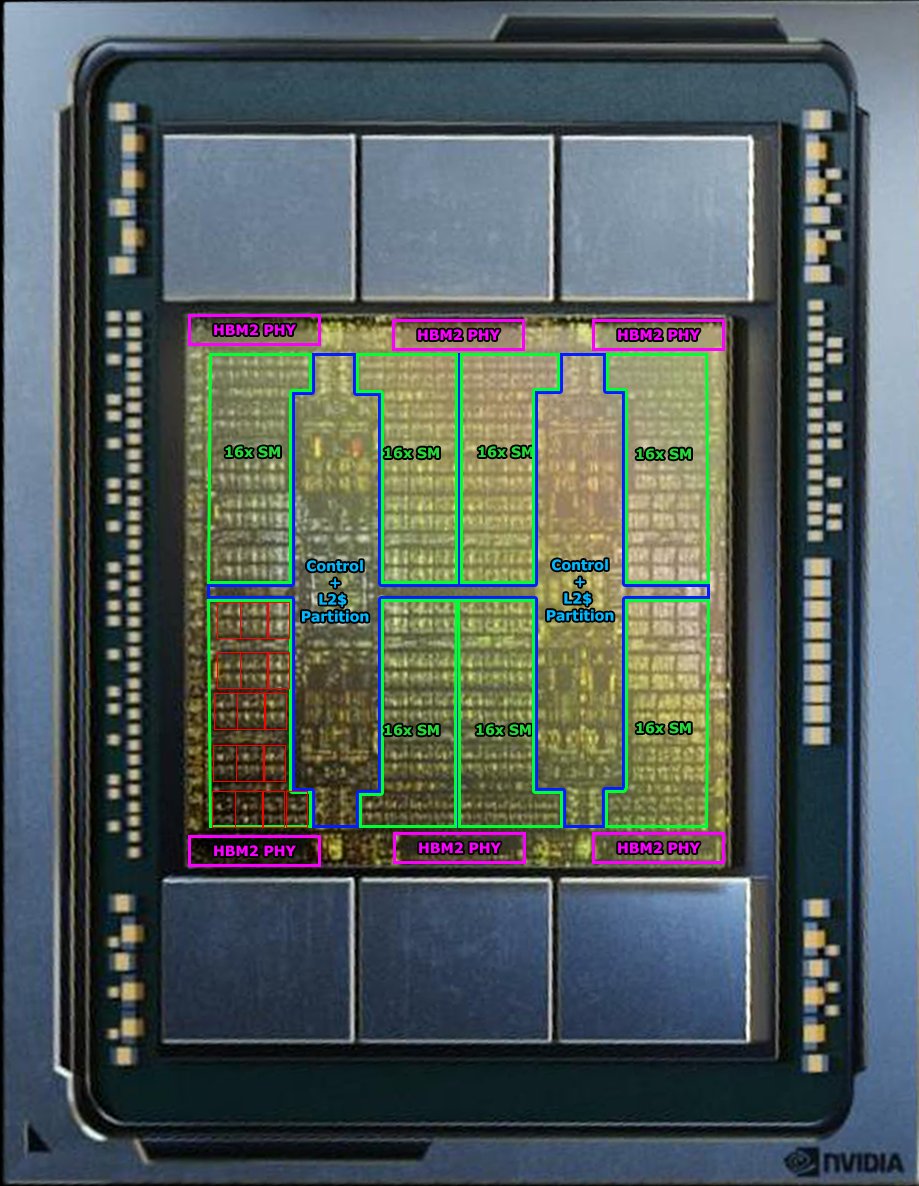

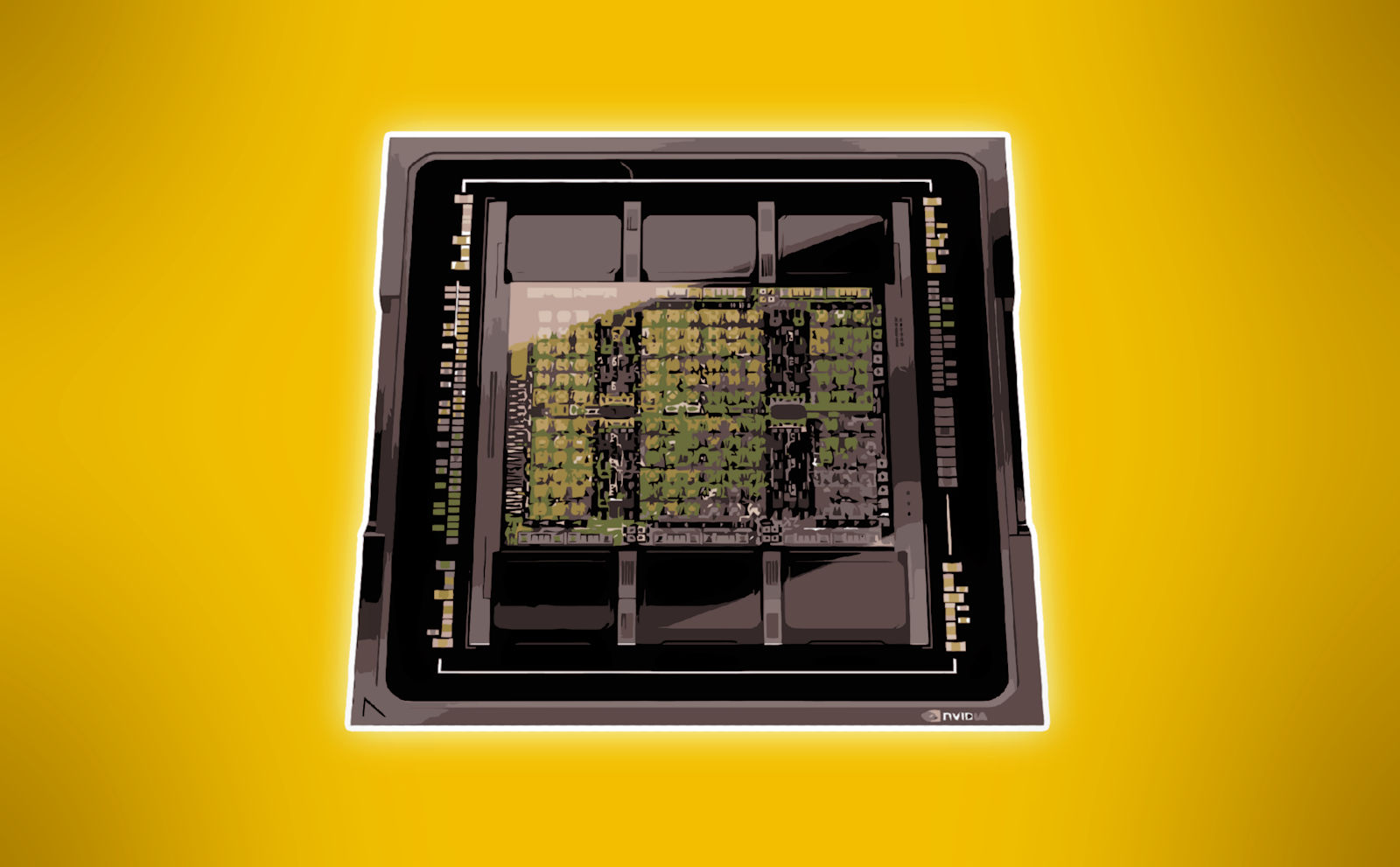

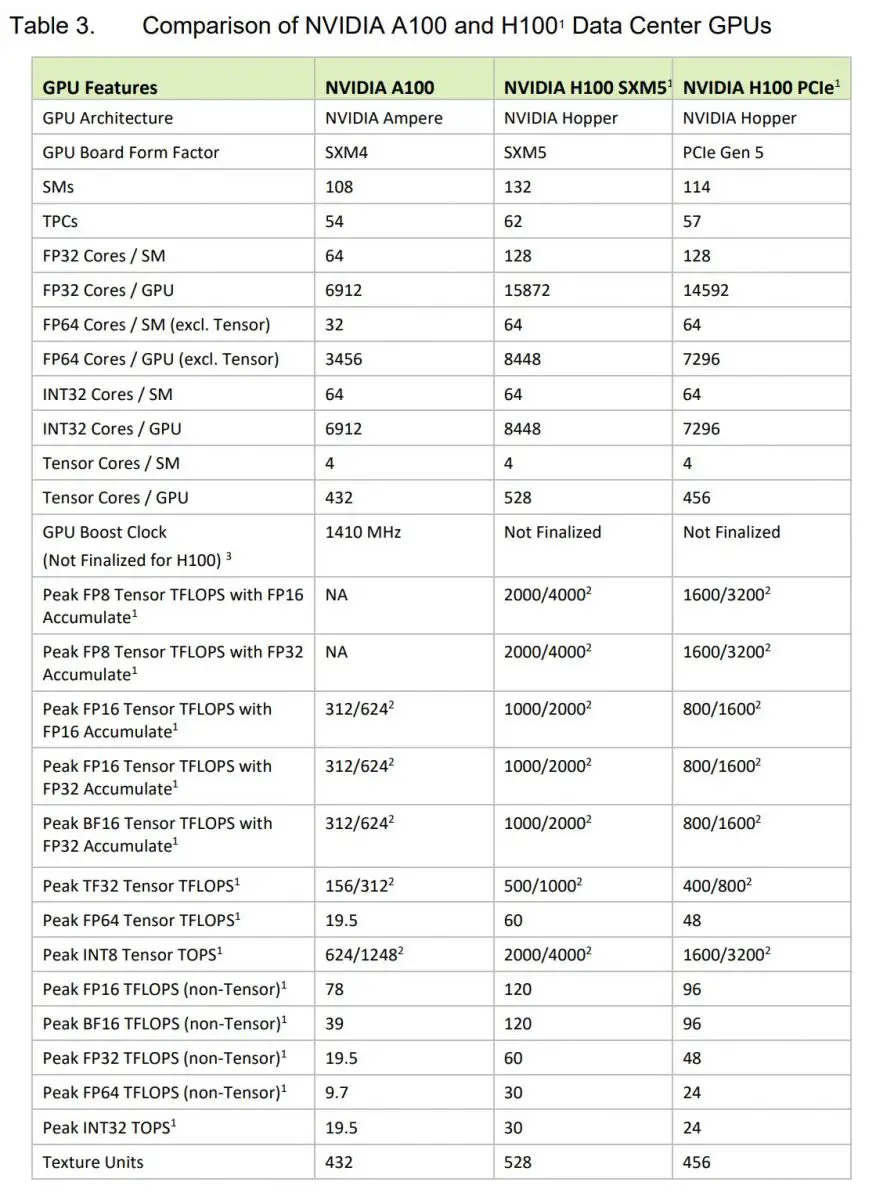

Looks like 144 SMs in total, assuming the die shot is representative. I count 12 SMs per row, and there are about 12 rows (6 above and 6 below the centerline). Nvidia will likely disable some SMs for yield reasons, however.

Edit: Just wanted to bring up GA100 for comparison. Overall, the layout looks pretty similar to server Ampere, but I'm sure there's some secret sauce to Hopper, e.g. next-generation tensor units and/or a doubling of FP32 units like gaming Ampere. GH100 does look a little bigger than GA100, just going off the size of the HBM stacks. In the Hopper render, you can see that the die is wider than 3 stacks of HBM (there are some gaps between each stack), while for Ampere the die is slightly less wide than 3 stacks, with no gaps between the stacks. The height of the die looks comparable between the two generations, given that GA100 is more square in aspect ratio while GH100 is wider than it is tall.

NVIDIA H100 GPU features TSMC N4 process, HBM3 memory, PCIe Gen5, 700W TDP - VideoCardz.com

NVIDIA H100 Specs Some details on NVIDIA’s next-gen AI accelerator have leaked just an hour ahead of the announcement. Contrary to the rumors that NVIDIA H100 based on Hopper architecture will be using TSMC N5, NVIDIA today announced that its latest accelerator will be using a custom TSMC N4...

Yikes, 700 W. And it's on N4 too instead of N5. Of course, the big increase is in low precision (6x).

Saylick

Diamond Member

> Yikes, 700 W. And it's on N4 too instead of N5. Of course, the big increase is in low precision (6x).

Looks like there's a doubling of FP/tensor units somewhere, not unlike gaming Ampere, because the transistor count is only 80B, which isn't a 3x bump over GA100. Also, it's not like the die is 3x bigger either. They are up against reticle limits already.
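The "only 80B" argument is a quick ratio. This sketch assumes Nvidia's published GA100 count of 54.2 billion transistors (that figure is not from the thread) and takes the 3x figure the post alludes to as the throughput target. It only shows that roughly 1.5x the transistors cannot reach 3x by scaling the same units, so about twice the work per transistor is needed; that is consistent with doubled units, not proof of them.

```python
GA100_TRANSISTORS_B = 54.2   # Nvidia's published GA100 count (assumption, not from the thread)
GH100_TRANSISTORS_B = 80.0   # count quoted in the thread
TARGET_SPEEDUP = 3.0         # the "3x" figure discussed in the post

transistor_ratio = GH100_TRANSISTORS_B / GA100_TRANSISTORS_B
# Throughput per transistor would have to rise by this factor to reach the target.
required_per_transistor_gain = TARGET_SPEEDUP / transistor_ratio

print(f"Transistor ratio: {transistor_ratio:.2f}x")
print(f"Required per-transistor gain for {TARGET_SPEEDUP:.0f}x: {required_per_transistor_gain:.2f}x")
```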

igor_kavinski

Lifer

> Yikes, 700 W. And it's on N4 too instead of N5. Of course, the big increase is in low precision (6x).

Meh. It can't run Crysis

Saylick

Diamond Member

> Looks like there's a doubling of FP/tensor units somewhere, not unlike gaming Ampere, because the transistor count is only 80B, which isn't a 3x bump over GA100. Also, it's not like the die is 3x bigger either. They are up against reticle limits already.

Yep, looks like a 2:1 ratio of FP32 to INT32 units, just like gaming Ampere:

Edit: Some more tables from Nvidia:

Edit2: Here's the link to Nvidia's whitepaper, essentially: https://developer.nvidia.com/blog/nvidia-hopper-architecture-in-depth/

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.