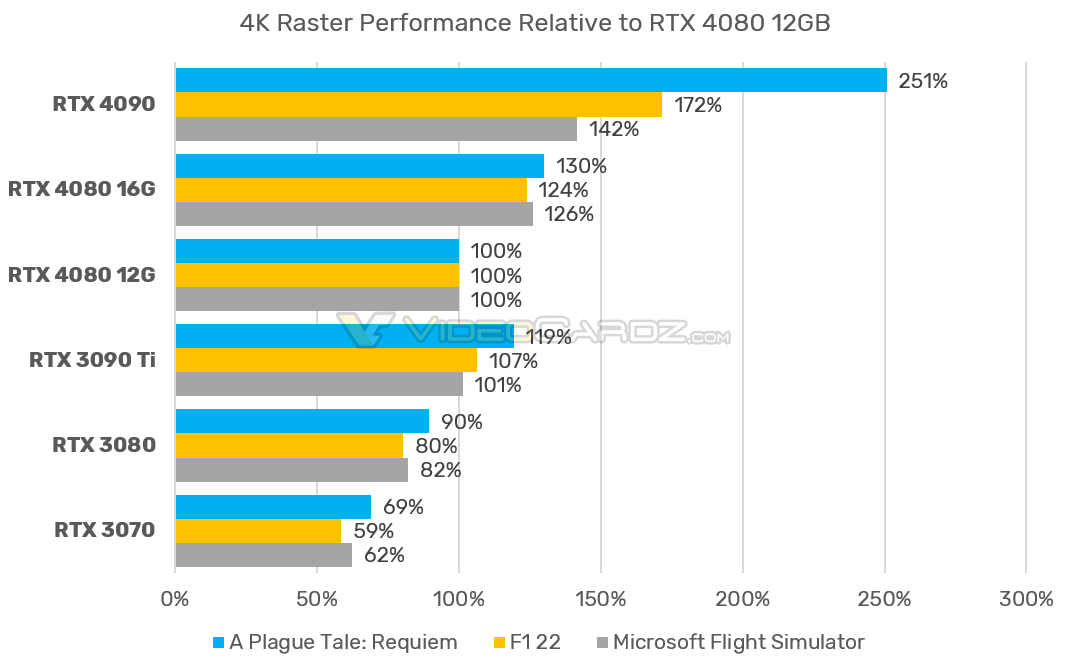

GeForce RTX 4080 16GB is up to 30% faster than 12GB version, according to NVIDIA's new benchmarks - VideoCardz.com

NVIDIA RTX 4080 16GB is much faster than RTX 4080 12GB

NVIDIA shares some new benchmarks for its upcoming RTX 4080 series, reports Overclock3D. These benchmarks feature three games, all tested at 4K resolution with a Core i9-12900K system. Games tested include A Plague Tale: Requiem, F1 22 and...

Alleged benchmarks of the RTX 4080 12 GB and 16 GB, provided by NVIDIA. The 12 GB comes close to the 3090 Ti but falls slightly short. These results are at 4K, which might be part of the reason, given the 12 GB card's memory bandwidth. The 16 GB is 25-30% faster than the 12 GB.