psolord

Platinum Member

- Sep 16, 2009


With these prices, the value of my non-interest has also grown by a factor of 12X to 16X.

If you compare native to DLSS 2 Performance you get similar results (blurring). If you compare native to DLSS 2 Quality, the image quality is about the same (no blurring). Hence a better comparison vs native would be DLSS 3 using Quality, not Performance.

Sometimes pictures speak louder than words..

*edit*

Disclaimer: don't know if it's real or not.. some people say it's fake

*edit2*

Not fake, found Digital Foundry shill video here

I know LTT typically tries to keep companies happy (for obvious reasons), but this video really sounds like an apologist's video. Sure, he mentions how the 4080 12GB should probably have been a 4070, but he goes on in several cases about how we should be happy that the cards are as cheap as they are?!

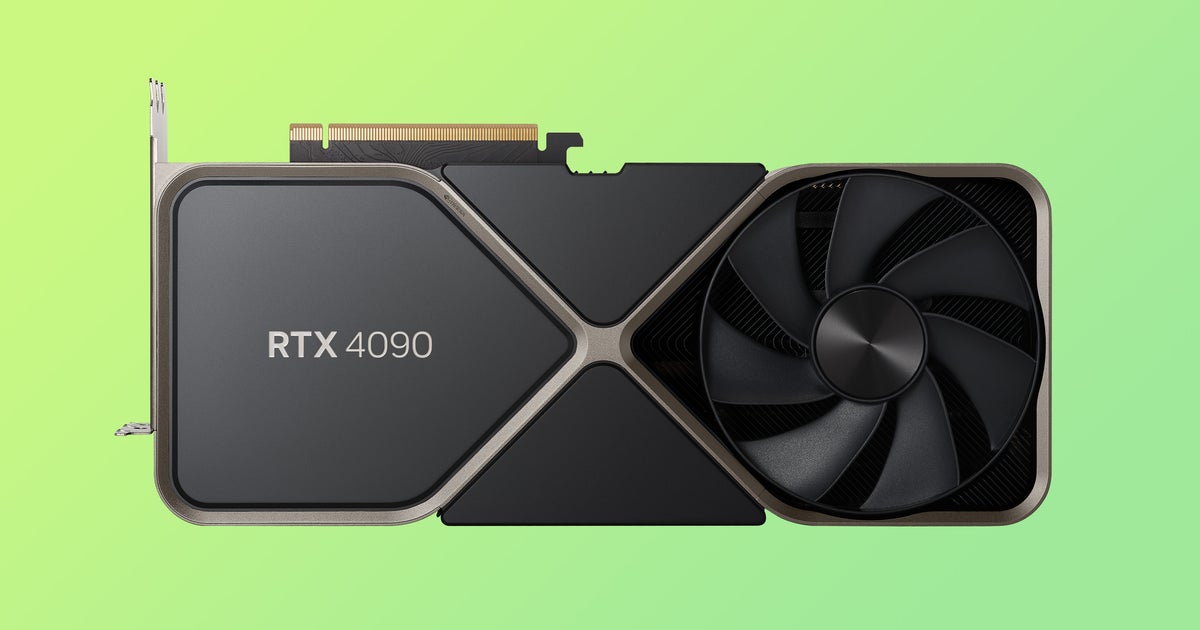

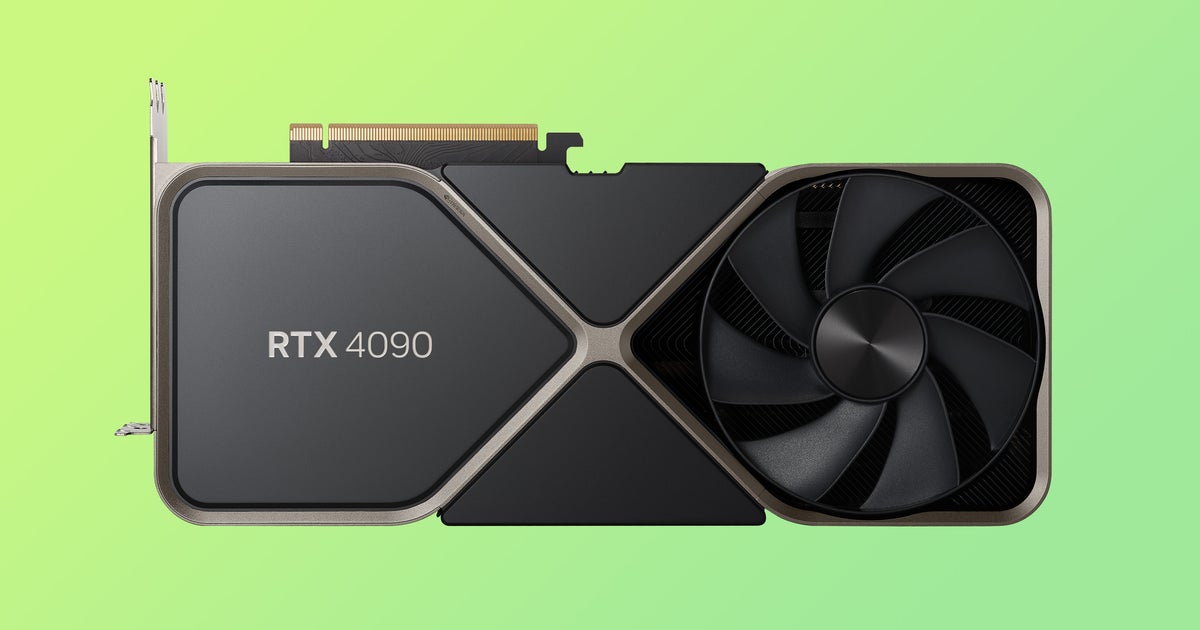

Gamers Nexus has a rather scary and amusing video on how each of the Nvidia partners is marketing Ada Lovelace. Scary because all of the 4090 designs are just ludicrously chonky, and amusing because some brands have literally used wildly exaggerated marketing language, such as saying the user will have "a new level of brilliance and absolute dark power", and all of the GPU brackets are said to be "anti-gravity design". LOL. I don't know about you guys, but it seems the only "dark power" I'll get is when my home's lights shut off due to the computer tripping the breaker. It'll definitely be dark then.


Someone tell CERN about these new anti-gravity brackets! I am sure the next market for GPUs will be particle beam steering in the Future Circular Collider (FCC aka LHC 2.0).

Damn, we're up to 4-slot coolers now.... Screw that. Seems like we're going in cycles between efficient and balls to the wall bloated designs. Personally I'd gladly give up 10% performance if it meant 30% reduction in heat and noise.

Nvidia partners offer their RTX 4000 series cards, with some absurd examples.

You should probably read what it actually does (or doesn't do).

Not sure what you are talking about. You can force anisotropic filtering in AMD drivers.

The same is true for Nvidia.

It literally says that it only works in <=DX9 games. Maybe in OpenGL too.

I just wonder how much effect PCIe Gen5 will have on graphics cards. RX 7000 seems to support Gen5, which means double the bandwidth compared to Gen4. Will it improve overall frame rate?

You'll just have to believe me. I've been reading reviews since the late '90s, and with big reviews I'd do analysis on them to see scaling and everything.

Approach it logically. A balanced system and game would take advantage of each of the major features equally. 20-30% gains can be had by doubling memory bandwidth. Same with fillrate, and same with shader firepower.

If one generation changes the balance too much, that means there's optimization to be had. Imagine a game/video card where doubling memory bandwidth increased performance by 60-80%. Then you know somewhere in the game and/or GPU, there's a serious bottleneck when it comes to memory. This also means you are wasting resources by having too much shader and fillrate, because you could have had much better perf/$, perf/mm and perf/W ratio.
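To put rough numbers on that rule of thumb, here is a minimal sketch. It assumes an Amdahl's-law style model (my assumption, not something the reviews measure directly) where only part of the frame time scales with the resource you doubled. The function names are made up for illustration.

```python
def speedup(fraction_bound: float, resource_scale: float = 2.0) -> float:
    """Overall speedup when only `fraction_bound` of frame time scales with
    the upgraded resource (assumed Amdahl's-law style model)."""
    return 1.0 / ((1.0 - fraction_bound) + fraction_bound / resource_scale)


def bound_fraction(observed_speedup: float, resource_scale: float = 2.0) -> float:
    """Invert the model: which share of frame time must be bound by the
    resource to explain an observed speedup after scaling it."""
    return (1.0 - 1.0 / observed_speedup) / (1.0 - 1.0 / resource_scale)


# Gains quoted in the post for a 2x memory bandwidth increase.
for gain in (0.20, 0.30, 0.60, 0.80):
    share = bound_fraction(1.0 + gain)
    print(f"+{gain:.0%} from 2x bandwidth -> ~{share:.0%} of frame time bandwidth-bound")
```

Under this model a 20-30% gain means roughly a third to a bit under half of the frame time was waiting on memory, which is about what you'd expect if bandwidth, fillrate and shaders share the load evenly. A 60-80% gain would mean 75% or more of the frame time is bandwidth-bound, i.e. the lopsided case described above.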

That's why it's called a Rule of Thumb though. All sorts of real-world analysis has to be done to get the actual value. Game development changes, and quality of the management for the GPU team changes.

There's no such thing as free. To get more performant shader units, generally you need more resources. Optimization is what you need to do better than that, but that requires innovation, ideas and time which doesn't always happen.

Not true (of course, there can be games where it doesn't work but in general it does work). It can even work in some DX12 games (used to use it in Horizon Zero Dawn when in-game AF was broken - it had small issues but it was well worth it). It probably doesn't work in Vulkan games.

If the global setting works in Nvidia it will work in AMD and vice versa.

What I'd like to know: How does Intel handle that, and do they have NVIDIA-like v-sync settings?

Many had hoped for lower prices - but, according to Nvidia boss Jensen Huang, these costs reflect a world where Moore's law - where performance is doubled for half the price every two years - is now "dead".

Well, show me the proof then, otherwise I'm inclined to believe what the driver tells me. I don't have an AMD card unlike you (well, I do have a Ryzen APU in my work laptop but I'm not going to try running games on it).

It has to do with how the DirectX flag is handled, and Nvidia does not have any special magic sauce to change that.

I will strongly disagree. There are a lot of people who can handle a lot of cognitive dissonance by creating alternative facts and selectively accepting information that preserves their preexisting beliefs. Just look at religion, politics, etc, etc. It is all over the place and it always has been.

It will be good for their 4050 or even 4030 part, especially the 1000+ laptop models released with mobile 4050

NVidia responding to price complaints:

Falling GPU prices are thing of "the past", Nvidia says

Earlier this week, Nvidia announced its next series of GeForce RTX cards - and raised eyebrows at how much some of them… www.eurogamer.net

Obviously DLSS is running at a lower resolution, so latency will be lower.

My apologies if this was already posted (it's new product season, so AT Forums is more active), but it looks like Nvidia gave some more info regarding DLSS 3.0:

NVIDIA shows GeForce RTX 4090 running 2850 MHz GPU clock at stock settings - VideoCardz.com

NVIDIA RTX 4090 2.85 GHz at stock, less power use with DLSS3

At yesterday’s NVIDIA Editors Day for RTX 40 series, the company demonstrated its RTX 4090 graphics card in a short gameplay demo. Wccftech, who took part in this press briefing, posted screenshots and videos of what that was shown... videocardz.com

View attachment 67963

Latency drops going from what Nvidia calls Native to DLSS 3.0, but who knows if that just comes from leaving Reflex off for Native and turning it on for DLSS 3.0 to make it look like you get a reduction. Also, where is DLSS 2 in this comparison? Those of us with Ampere won't be able to take advantage of DLSS 3, so I'd like to know how much of a benefit comes from DLSS 3.0 alone, specifically from the Frame Generation feature. If the DLSS 2 results look exactly like the DLSS 3 results except the frame rates are simply halved, then we all know what's going on: the higher frame rate comes from inserting those additional "fake" frames.
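For anyone who wants to run that check once real numbers show up, here is a rough sketch. It assumes Frame Generation inserts one generated frame per rendered frame (my reading of the announcement, not a confirmed spec), and the fps values in the usage lines are made-up placeholders, not benchmark results.

```python
def looks_like_pure_frame_insertion(fps_dlss2: float, fps_dlss3: float,
                                    tolerance: float = 0.10) -> bool:
    """True if the DLSS 3 frame rate is within `tolerance` (relative) of
    exactly double the DLSS 2 frame rate."""
    ratio = fps_dlss3 / fps_dlss2
    return abs(ratio - 2.0) <= tolerance * 2.0


def rendered_frame_time_ms(displayed_fps: float,
                           generated_per_rendered: int = 1) -> float:
    """Time between frames the game actually rendered, assuming
    `generated_per_rendered` inserted frames per rendered frame."""
    rendered_fps = displayed_fps / (1 + generated_per_rendered)
    return 1000.0 / rendered_fps


# Hypothetical numbers, for illustration only.
print(looks_like_pure_frame_insertion(60.0, 118.0))  # ratio ~1.97
print(looks_like_pure_frame_insertion(60.0, 90.0))   # ratio 1.5
print(round(rendered_frame_time_ms(120.0), 2))       # ms between rendered frames
```

If the ratio sits right around 2x, the extra frame rate is coming from inserted frames, and the game is still only rendering (and sampling input) at the DLSS 2 rate.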

. . .

Now that we’ve covered all of the AMD and Nvidia anti-aliasing driver options, we have some bad news: the driver-set options often don’t work.

Precisely why I want to have the comparison, because there's a handful of features all being used simultaneously to make DLSS 3 look super good. Those features are: