So you're saying that better cooling alone will decrease power consumption for the same workload.

But then you said you don't know what the workload actually is, that it could be anything. If what you're saying is true, then it's whatever workload the 10W cTDP figure corresponds to. I have no idea what that represents, but cTDP isn't really new, so maybe there's some information out there. I'd expect Intel to still be able to run the CPU cores at their base clock at least, under "normal" programs that fully load them (i.e. not doing weird power-virus things), with the GPU only doing minimal GUI work.

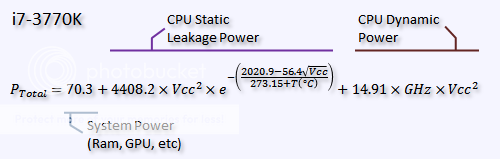

The problem is that I don't see Intel clearly describing this anywhere. Maybe to you it's implied by the slide, but I think there's still some room for ambiguity. 10W to 7W is a huge reduction from just a 25C drop in Tj, which I imagine is nowhere near the same thing as a 25C reduction in ambient; the cooling elements on the tablet are going to be nowhere close to 25C above the air around you.
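To put a rough number on how big that is, here's a back-of-the-envelope sketch. It assumes the whole 3W saving comes from leakage, that dynamic power doesn't change, and that leakage doubles every fixed number of degrees. The doubling intervals are made-up illustration values, not Intel data, and the function names are just mine.

```python
def leakage_scale(delta_t_c, doubling_interval_c):
    """Factor by which leakage changes for a junction temperature change of
    delta_t_c, ASSUMING leakage doubles every doubling_interval_c degrees."""
    return 2.0 ** (delta_t_c / doubling_interval_c)


def required_leakage_share(total_w, saved_w, delta_t_c, doubling_interval_c):
    """Share of total_w that must be leakage for a delta_t_c drop in Tj to
    save saved_w, ASSUMING dynamic power stays the same."""
    remaining = leakage_scale(-delta_t_c, doubling_interval_c)  # fraction of leakage left
    leak_w = saved_w / (1.0 - remaining)
    return leak_w / total_w


# Hypothetical doubling intervals, only to show how sensitive the answer is.
for interval in (10.0, 25.0, 40.0):
    share = required_leakage_share(10.0, 3.0, 25.0, interval)
    print(f"doubling every {interval:.0f}C -> leakage must be {share:.0%} of 10W")
```

With a 25C doubling interval, for example, 6W of the 10W would have to be leakage before the cooling improvement. Whether that is plausible depends entirely on the real temperature coefficient, which the slide doesn't give.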

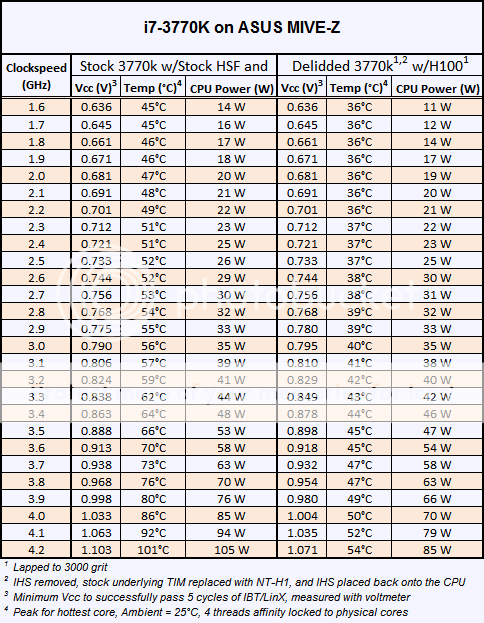

The savings should be less dramatic if you're already starting at lower voltage levels, especially given that Intel's 22nm voltage/clock curve rises more steeply than on its older processors. For your graph you have things fixed at a high 1.29V, which I'm sure exaggerates the results.

Where are you getting the assumption that it is anything less than 100% theoretical load at 80C? I didn't see this anywhere other than in information introduced by posters trying to criticize the idea.