With the current rate of Intel CPU performance increases, could AMD be catching up?

Page 11


AtenRa


How nice of GloFo to pump out 20nm chips for AMD at no cost to AMD.

We are talking about designing/developing the IC, not manufacturing it.


And you think GloFo is going to spend 2x as much to develop a 20nm IC and not pass any of that cost down to their customers? Again, how nice of them.

AtenRa


And you think GloFo is going to spend 2x as much to develop a 20nm IC and not pass any of that cost down to their customers? Again, how nice of them.

Again, we are talking about how much it will cost AMD to design the CPU, not how much it will cost them to manufacture the wafer in GloFo's fab.

Edit: NVIDIA's R&D is spent on designing the GPU, not on developing TSMC's next node.


Arachnotronic


Again, we are talking about how much it will cost AMD to design the CPU, not how much it will cost them to manufacture the wafer in GloFo's fab.

Edit: NVIDIA's R&D is spent on designing the GPU, not on developing TSMC's next node.

AtenRa,

You seem to be confused. First, if the process development becomes more expensive for the wafer guys, the wafers themselves cost more. This is typically offset by feature size scaling, but the big worry at the 20nm node is that the size decrease won't be enough to make up for the wafer cost increases.

Next, developing a chip at the 20nm node simply requires more resources than at the 28nm one...and that's what everyone is trying to tell you.
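The wafer-cost argument above reduces to simple arithmetic: cost per transistor is wafer cost divided by transistors per wafer, so a shrink only lowers it if the density gain outpaces the wafer price increase. A minimal sketch, assuming the usable wafer area stays the same; the wafer price ratios and the 1.9x density figure are hypothetical placeholders, not GloFo or TSMC numbers.

```python
def relative_cost_per_transistor(wafer_cost_ratio: float, density_ratio: float) -> float:
    """Cost per transistor on a new node relative to the old node.

    wafer_cost_ratio: new wafer price / old wafer price (hypothetical input)
    density_ratio:    new transistors per mm^2 / old transistors per mm^2
    A result below 1.0 means the shrink still lowers cost per transistor.
    """
    if wafer_cost_ratio <= 0 or density_ratio <= 0:
        raise ValueError("ratios must be positive")
    # Cost per transistor = wafer cost / transistors per wafer, so the ratios divide.
    return wafer_cost_ratio / density_ratio


# Hypothetical scenarios: the same ~1.9x density gain, different wafer price increases.
for wafer_ratio in (1.3, 1.6, 1.9):
    rel = relative_cost_per_transistor(wafer_ratio, 1.9)
    print(f"wafer price +{(wafer_ratio - 1) * 100:.0f}% -> cost per transistor x{rel:.2f}")
```

When the wafer price rises as fast as density (the last case), the shrink stops saving anything per transistor, which is the 20nm worry described above.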

AtenRa


You said it wouldn't cost them a penny, and that's an inaccurate statement. It will cost them; whether directly or indirectly is irrelevant.

It will not cost them a penny/cent of their R&D budget; they ONLY spend their R&D on designing the IC. Manufacturing the IC will ONLY affect the final cost of the product (CPU), not their R&D budget.

cytg111



And all the while expenses go up and returns go down; with each new node the advantage gets smaller and smaller... I don't know, but I imagine that it is quite expensive to be the front-runner (Intel) in process tech in the face of diminishing returns.

Arachnotronic


It will not cost them a penny/cent of their R&D budget; they ONLY spend their R&D on designing the IC. Manufacturing the IC will ONLY affect the final cost of the product (CPU), not their R&D budget.

Wrong! Not only do the additional costs on GloFo's side eat into AMD's gross margin, but the actual development costs for a chip on 20nm are just much, much higher than on an older process. These chips get much more complex to design, the tools become more expensive, validating larger/more complex chips gets more expensive, etc.

There is a reason Qualcomm specifically mentions that it is spending money to stay at the leading edge of the foundries' process tech - it isn't just a gross margin/COGS hit.

AtenRa


Wrong! Not only do the additional costs on GloFo's side eat into AMD's gross margin, but the actual development costs for a chip on 20nm are just much, much higher than on an older process. These chips get much more complex to design, the tools become more expensive, validating larger/more complex chips gets more expensive, etc.


What does gross margin have to do with R&D cost??? You seem to be confusing product cost, margins and R&D.

1) AMD's R&D budget is spent on designing the CPU ($1 billion).

2) GloFo will spend its R&D budget on developing the 20nm process and on new fab machinery ($1-1.5 billion for development, and more for machinery).

The combination of 1 and 2(R&D) will have an effect on the final cost of the product.
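The split between the two budgets can be sketched as a toy cost model: the foundry recovers its process R&D by spreading it over every wafer it sells (so it reaches the customer as cost of goods sold), while the chip designer's own R&D is a separate expense line. The $1 billion and $1.5 billion figures come from the two points above; the per-wafer processing cost and wafer volumes are hypothetical, chosen only to show where each cost lands.

```python
from dataclasses import dataclass


@dataclass
class Foundry:
    process_rnd: float          # one-time process development cost ($)
    marginal_wafer_cost: float  # cost to process one wafer ($), hypothetical
    wafers_over_node_life: int  # wafers sold on this node to all customers, hypothetical

    def wafer_price(self) -> float:
        # Process R&D is amortized ("spread out") over every wafer sold on the node.
        return self.marginal_wafer_cost + self.process_rnd / self.wafers_over_node_life


@dataclass
class ChipDesigner:
    design_rnd: float   # the designer's own R&D budget ($): design, tools, validation
    wafers_bought: int  # hypothetical

    def cost_of_goods(self, foundry: Foundry) -> float:
        # Foundry R&D reaches the designer only through the wafer price (COGS).
        return self.wafers_bought * foundry.wafer_price()


fab = Foundry(process_rnd=1.5e9, marginal_wafer_cost=3_000.0, wafers_over_node_life=1_000_000)
designer = ChipDesigner(design_rnd=1.0e9, wafers_bought=200_000)
print(f"wafer price: ${fab.wafer_price():,.0f}")
print(f"designer COGS: ${designer.cost_of_goods(fab):,.0f}")
print(f"designer R&D (separate line): ${designer.design_rnd:,.0f}")
```

Both sides of the argument show up in this model: the foundry's R&D never touches `design_rnd`, yet the designer still pays for it through `wafer_price()`, and a smaller `wafers_over_node_life` (less volume) pushes that price up.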

Bottom line, it will cost them. Like I said, directly or indirectly, the move will cost them; regardless of whether it's AMD's R&D or GloFo's R&D, it's AMD that's going to pay for it in the end. You can bring your strawman argument all you want, but it's pretty clear the folks here see right through it and down to the real issue for AMD.


The amount of money AMD paid GLF for the development of SOI is a paltry $80 million per year, while the 2013 budget allows for $1.2 billion in R&D, some $300 million less than the 2011 peak. So on top of the SOI reduction, there's some $220 million that was cut straight from the product development budget. So no matter how you look at it, AMD's budget is cash-strapped.
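The arithmetic in that paragraph, spelled out. All figures are the poster's, in millions of US dollars, and the sketch assumes, as the post implies, that the whole $80M SOI payment is counted inside the $300M reduction.

```python
# Figures quoted in the post above, in millions of USD.
cut_vs_2011_peak = 300  # 2013 R&D budget is ~300M below the 2011 peak
soi_payment = 80        # annual payment to GLF for SOI development
rnd_2013 = 1_200        # 2013 R&D budget

rnd_2011_peak = rnd_2013 + cut_vs_2011_peak
# Assumption: the SOI payment accounts for part of the 300M reduction.
cut_from_product_dev = cut_vs_2011_peak - soi_payment

print(f"2011 peak R&D: ~{rnd_2011_peak}M")
print(f"Cut beyond the SOI reduction: ~{cut_from_product_dev}M")
print(f"That cut as a share of the 2011 peak: {cut_from_product_dev / rnd_2011_peak:.1%}")
```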

About the discussions you were having about the costs of moving to a new node: the node costs won't go to AMD's R&D line, but there are other costs associated with migrating to a new node, like redesigning the current chip with different design rules, then updating it with new power-saving states, new features and new instruction sets, fixing errata, and last but not least going through the validation process. Those costs are by no means small, and they go straight to AMD's R&D line.

This is the reason why Rory keeps talking about staying on nodes for longer. Given that AMD is suffering from structurally low gross margins and a smaller R&D budget, they have no choice but to stretch the use of a given node, but this strategy means that they are off the bleeding edge, be it on ARM or x86, and maybe even on GPUs.

ShintaiDK


And all the while expenses go up and returns go down; with each new node the advantage gets smaller and smaller... I don't know, but I imagine that it is quite expensive to be the front-runner (Intel) in process tech in the face of diminishing returns.

And this is why larger and larger volume is needed, and why more and more companies need to go belly up for technology to progress. 14nm alone is going to kill off half the industry.


Bottom line, it will cost them. Like I said, directly or indirectly, the move will cost them; regardless of whether it's AMD's R&D or GloFo's R&D, it's AMD that's going to pay for it in the end. You can bring your strawman argument all you want, but it's pretty clear the folks here see right through it and down to the real issue for AMD.

I think you're twisting your arguments now that it's obvious you were wrong. There was a discussion regarding AMD's R&D budget, and whether it would be enough to develop new microarchitectures. But now you're trying to turn it into a discussion about cost of goods sold, which is completely different.

Some points to make:

1. The cost of developing 20 nm process technology will be taken from GloFo's R&D budget, not AMD's.

2. If 20 nm process tech is expensive to develop for GloFo, they will have to charge more for the wafers that AMD orders from them. Yes, it's a cost that will have to be absorbed (by the consumers in the end). However it will not affect AMD's R&D budget, only the price of the AMD CPUs, which is something completely different.

3. 20 nm may be more expensive to develop, but it also means you can fit twice as many transistors per die area as on 28 nm. Hence the cost per transistor goes down, which also brings down the cost per CPU (all else equal).

4. Intel CPUs also have to bear the cost of developing 22/14 nm process technology. Meaning:

a) Any cost increase similar to 2) will affect Intel too. Hence no cost advantage for Intel CPUs due to that (all else equal).

b) Intel develops their process tech themselves. This means it will be taken from their R&D budget. So when comparing how much $$$ Intel vs AMD has to spend on developing new uarch, it's not correct to compare Intel's complete R&D budget vs AMD's. After subtracting the R&D budget Intel spends on developing new process tech from their total R&D budget, the gap to AMD narrows. If you say developing new process tech gets more expensive for each node, then this effect will become even greater going forward.
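Point 3's "twice as many transistors" follows from ideal area scaling: if every linear dimension shrinks by 20/28, the area per transistor shrinks by the square of that ratio. A minimal sketch, with the caveat that node names are largely marketing labels rather than literal feature sizes, so real density gains can come out higher or lower than this first-order estimate.

```python
def ideal_density_gain(old_node_nm: float, new_node_nm: float) -> float:
    """First-order transistors-per-area gain, assuming node names are true linear dimensions.

    Area scales with the square of the linear shrink. Real processes rarely
    scale this cleanly, so treat the result as a rough estimate only.
    """
    if old_node_nm <= 0 or new_node_nm <= 0:
        raise ValueError("node sizes must be positive")
    return (old_node_nm / new_node_nm) ** 2


for old, new in ((32, 22), (28, 20), (22, 14)):
    print(f"{old}nm -> {new}nm: ~{ideal_density_gain(old, new):.2f}x density")
```

Whether cost per transistor actually falls then depends on how much the wafer price rises relative to this gain.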


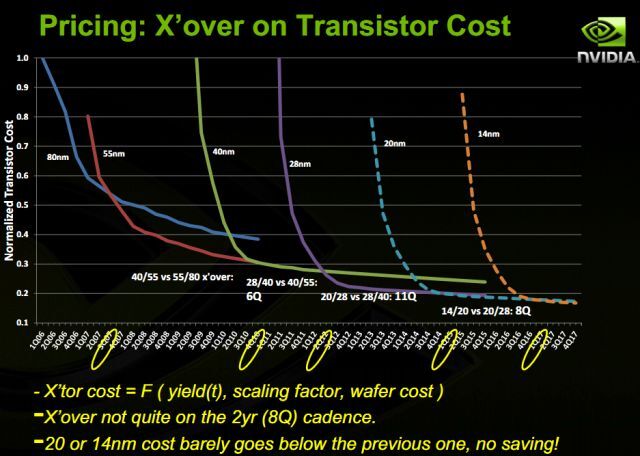

Also in terms of production cost per wafer. This is what NVidia thinks about it as a customer:

Interesting diagram. And especially the comment:

"20 or 14 nm cost [per transistor] barely goes down below previous one, no saving!"

I assume the cost referred to is from NVidia's point of view as a buyer of wafers, meaning that the R&D costs for developing the process tech have been taken into account (and "spread out" over the wafers produced)?

In that case going from 22->14 nm doesn't seem to have that many benefits:

-According to the diagram it doesn't bring down cost per transistor for the buyer of the wafers.

-Going from 32->22 nm did not add much room for increased CPU frequency. I assume it's likely that this will also be true when going from 22->14nm?

-It will likely provide lower TDP. But SB(2600K)->IB(3770K) "only" meant 95W-77W=18W TDP reduction (to be fair, the iGPU also grew a bit adding to the transistor count). So the difference is not huge.

Based on that, does it mean the process tech advantage (1 node or so) that Intel has over AMD will have less impact going forward?
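The TDP figure from the list above, expressed as a relative reduction. The values are the rated TDPs quoted in the post; TDP is a thermal design rating, not measured power draw.

```python
sb_2600k_tdp_w = 95  # Sandy Bridge i7-2600K (32 nm), rated TDP as quoted
ib_3770k_tdp_w = 77  # Ivy Bridge i7-3770K (22 nm), rated TDP as quoted

reduction_w = sb_2600k_tdp_w - ib_3770k_tdp_w
reduction_pct = 100 * reduction_w / sb_2600k_tdp_w
print(f"TDP reduction: {reduction_w} W ({reduction_pct:.0f}% of the 2600K's rating)")
```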


Remember, out of Intel's $8B+ R&D budget, only around $2B goes to the process node. The rest is chip design cost.

Not quite. Intel develops lots of other stuff too that consumes R&D money. We're talking SSDs, motherboards, RAID controllers, Smart TV set top boxes, Ethernet chipsets & controllers, WiFi devices, Mobile phone platforms, lots of Software, etc. So it's not like they are spending 8-2=6 billion dollars on developing uarch for mainstream desktop CPU cores.

AMD also develops other stuff than desktop CPUs (most notably graphics cards), but its portfolio is not nearly as diverse as Intel's.

It would be interesting to know the actual R&D budget that Intel vs AMD has dedicated to developing new uarch for desktop CPUs.
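Using only the figures quoted in this thread (Intel's ~$8B+ total R&D with ~$2B of it for process development, and AMD's ~$1.2B 2013 R&D budget), the ratio narrows but stays large. Intel's spending on non-CPU products is not known, so the sketch takes it as an explicit, hypothetical parameter; AMD's own graphics spending is not broken out either.

```python
def rnd_ratio(intel_total_b: float, intel_process_b: float, amd_total_b: float,
              intel_other_products_b: float = 0.0) -> float:
    """Ratio of Intel to AMD R&D (in $B) after removing some of Intel's non-design spending.

    intel_other_products_b is hypothetical: the thread gives no figure for SSDs,
    networking, software, etc., so leaving it at 0.0 overstates Intel's CPU design budget.
    AMD's total is used as-is, since its GPU share is not broken out here either.
    """
    intel_design_b = intel_total_b - intel_process_b - intel_other_products_b
    return intel_design_b / amd_total_b


print(f"Total vs total:                  {8.0 / 1.2:.1f}x")
print(f"Minus Intel process R&D:         {rnd_ratio(8.0, 2.0, 1.2):.1f}x")
print(f"Minus $1B other (hypothetical):  {rnd_ratio(8.0, 2.0, 1.2, 1.0):.1f}x")
```

This only redoes the thread's arithmetic; the desktop-uarch-only figures asked about above remain unknown.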

ShintaiDK


Based on that, does it mean the process tech advantage (1 node or so) that Intel has over AMD will have less impact going forward?

Not at all. Volume offsets it. That's also why only half the semiconductor companies can afford 14nm. The rest will go belly up, and their volume will be taken by the bigger players. It's no different than it has been for the last 20+ years.

You either shrink or follow companies like VIA, or even worse.

AMD fighting Intel with 28nm parts vs 14nm is a clear indication it's game over for the company. It's just a matter of how they disappear. Will it be slowly like VIA? Or will it go out with a bang?


Isn't it strange how people will on one hand have absolutely no issues accepting the economic realities behind why every single other x86 design house went belly up, withdrew from the market, or shriveled and shrunk to fit within a niche...but it suddenly becomes inconceivable that those same concepts might be applicable to AMD?

This is the part that baffles me. I can understand how non-professional people might not be able to grasp that an Intel operates at economies of scale in R&D that make its R&D dollars deliver more per dollar than other companies' (design house synergy)...after all, many of these folks have never seen the inside of a fab, or attended a spec meeting for SPICE models on a node that won't see a fab for 4 years yet.

But what baffles me is that despite all they don't know, they will readily accept the economics of what they think they know as applied to Cyrix, National Semi, Via, Texas Instruments, Transmeta, IDT, etc. But don't you dare try to explain how AMD is not so special as to be excluded from that list; at that point it becomes a sacred cow for some reason, to be defended to the death (or to the absurd, as I've read in the past many posts in this thread).

Via never had it so good, no one ever valiantly said "Via will make it happen, Intel and AMD can't possibly beat them into the ground, look at the cost of wafers that impacts AMD and Intel too.." etc etc.

Nope, for some reason it all made perfectly logical sense that Via (and everyone else in x86) went away as they did...but those same reasons simply can't be applied to AMD. Ever.

If 20 nm process tech is expensive to develop for GloFo, they will have to charge more for the wafers that AMD orders from them. Yes, it's a cost that will have to be absorbed (by the consumers in the end). However it will not affect AMD's R&D budget, only the price of the AMD CPUs, which is something completely different.

The increase occurs in both areas, manufacturing and design. For manufacturing, it will be as you described: cost per wafer will go up, but COGS per chip should go down because of the extra quantity of chips per wafer.
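The "extra quantity" on the manufacturing side comes from more dies per wafer: a wafer that costs more can still yield cheaper chips if the die shrinks enough. A rough sketch using a common first-order dies-per-wafer approximation, DPW ≈ π(d/2)²/A − πd/√(2A), which ignores scribe lines, edge exclusion and defect yield. The die areas, the 60% shrink and the wafer prices are hypothetical.

```python
import math


def dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """First-order estimate: wafer area / die area minus a term for partial dies at the edge."""
    r = wafer_diameter_mm / 2
    gross = math.pi * r ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def cost_per_die(wafer_cost: float, die_area_mm2: float) -> float:
    # Ignores yield; defect losses would raise the cost of every good die.
    n = dies_per_wafer(die_area_mm2)
    if n <= 0:
        raise ValueError("die too large for this wafer")
    return wafer_cost / n


# Hypothetical: a 250 mm^2 die shrunk to 60% of its area on a node whose wafers cost 40% more.
old = cost_per_die(wafer_cost=5_000.0, die_area_mm2=250.0)
new = cost_per_die(wafer_cost=7_000.0, die_area_mm2=250.0 * 0.6)
print(f"old node: {dies_per_wafer(250.0)} dies/wafer, ${old:.2f} per die")
print(f"new node: {dies_per_wafer(250.0 * 0.6)} dies/wafer, ${new:.2f} per die")
```

With these made-up inputs the shrink still wins per die; a smaller area reduction or a steeper wafer price increase can erase that.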

But design is also affected. Designing a chip using GLF's 20nm rules will cost AMD more than designing chips for 28nm, regardless of COGS. That's why we should have seen an increase, not a decrease, in the R&D budget if AMD were to keep the same development pace.

The question "how AMD R&D budget compares against Intel's budget" is moot, because we can verify a decline when compared to AMD's own previous numbers, meaning that even at current nodes they would face a slower development pace. At more expensive nodes, the slow down is likely to be stronger.

Isn't it strange how people will on one hand have absolutely no issues accepting the economic realities behind why every single other x86 design house went belly up, withdrew from the market, or shriveled and shrunk to fit within a niche...but it suddenly becomes inconceivable that those same concepts might be applicable to AMD?

The boy who cried "Wolf!" has been crying wolf since 1995.

It's more the point that people stopped listening to self-proclaimed financial experts (no offence intended) a while ago, since AMD has supposedly been about to go belly up for the last twenty years. Can't blame them, considering the track record of mispredictions.

AMD is in a bad shape and a bad situation, but this isn't something new. Short term they have a stable roadmap and some products close to market. Medium and long term isn't something we can predict well enough.

Also, for all we know, Intel could be the next Sony: extremely profitable, but spending a lot of money on R&D that never became products, and self-imploding within a century. Actually, thinking about my example, it's a bit creepy how closely these two companies resemble each other (before Sony imploded). A highly sought-after product line that got competition from cheaper and worse alternatives. They were still good enough, though, and gradually got better while staying dirt cheap in comparison.


So because someone (who?) has been predicting AMD's demise since 1995, AMD is now immune to bankruptcy?


Not at all. Volume offsets it. That's also why only half the semiconductor companies can afford 14nm. The rest will go belly up, and their volume will be taken by the bigger players. It's no different than it has been for the last 20+ years.

That's not a node advantage, that is economy of scale. So what you mention is not a technological advantage of moving to a later node.

You either shrink or follow companies like VIA, or even worse.

As long as there's a company that can produce AMD's wafers they should not be affected by it, since the cost per transistor apparently is about the same on 14 vs 22 nm.

Also note that GloFo doesn't produce wafers for AMD only. They have other customers too like Qualcomm keeping the volumes up.

AMD fighting Intel with 28nm parts vs 14nm is a clear indication it's game over for the company.

Your own diagram concluded that the benefit of moving to a newer node was not that great anymore.

Also, AMD is fighting with 28 nm parts (Trinity) vs 22 nm parts (IB) for Intel. If you're comparing Kaveri (28 nm) vs Broadwell (14 nm), I think that is unfair, since Kaveri is expected to be released in late 2013 vs Broadwell in mid-2014. Also, you are comparing at a point where the node difference is at its peak, with Broadwell being a tick, meaning that Intel will just have switched over to 14 nm, vs Kaveri, which is likely at the end of the 28 nm tock cycle for AMD.

So because someone (who?) has been predicting AMD's demise since 1995, AMD is now immune to bankruptcy?

Of course not. Just like I said, they're in bad shape and in a bad situation. But imho their current market position is better than it was 1-2 years ago. Talking about doom and gloom now strikes me as odd, honestly.


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.