NTMBK

- Nov 14, 2011

Ehh, I wouldn't use an integrated GPU for everything when it has a far more restricted programming model than a CPU and very little register space too. There are simply too many pitfalls for an integrated GPU to entirely take over the tasks that a CPU's SIMD extension would handle.

First of all, there's an overhead cost to be paid for CPU-GPU communication, despite all of AMD's gallant efforts with HSA, whereas the SIMD extension is integrated into the CPU itself.
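To put the overhead argument in concrete terms, here's a minimal sketch in C of a break-even model: a fixed dispatch cost for the GPU versus a per-element cost on each side. The struct, the function name `breakeven_elems`, and every number you would feed it are hypothetical placeholders, not measurements of any real hardware.

```c
#include <assert.h>
#include <stddef.h>

/* Toy cost model. All fields are assumed, illustrative values. */
typedef struct {
    double dispatch_overhead_ns; /* fixed cost of handing work to the GPU and back */
    double cpu_ns_per_elem;      /* per-element cost using CPU SIMD */
    double gpu_ns_per_elem;      /* per-element cost on the GPU once it is running */
} offload_model;

/* Returns the smallest element count at which the GPU path is strictly
 * faster under this model, or 0 if the GPU never wins.
 *   CPU time: n * cpu_ns_per_elem
 *   GPU time: dispatch_overhead_ns + n * gpu_ns_per_elem
 */
size_t breakeven_elems(const offload_model *m)
{
    assert(m->dispatch_overhead_ns >= 0.0);

    double gain_per_elem = m->cpu_ns_per_elem - m->gpu_ns_per_elem;
    if (gain_per_elem <= 0.0) {
        return 0; /* GPU is no faster per element, so the overhead is never repaid */
    }
    /* GPU wins when n > overhead / gain; truncate and step to the next integer. */
    return (size_t)(m->dispatch_overhead_ns / gain_per_elem) + 1;
}
```

Any loop shorter than the returned count is better left on the CPU under these assumptions, which is the "loops too small for the GPU" case.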

Second, there aren't many established frameworks out there that embrace heterogeneous programming. Many app writers would rather have an ISA extension than deal with other ISAs altogether. Just look at how slowly CUDA adoption has progressed, and then there's the Cell processor, which is a spectacular example of a failed heterogeneous processor (it didn't help that it also had an extreme NUMA system). The consensus is that symmetry is highly valued when it comes to hardware design.

Third, I feel that GPUs are arguably too wide for their own good to take advantage of the many different vectorizable loops that a CPU SIMD unit can. A growing trend with GPUs is that you need an extreme amount of data-level parallelism to exploit them, and on top of that you're limited in the number of vector registers you have. On a CPU the common worst case is spilling to the L2 or even the L3 cache, but on a GPU you'll run your caches dry very quickly and soon hit the wall that is main memory, which causes significant slowdowns once you're bandwidth bound.

In short, GPUs have too many pitfalls compared to CPU SIMD. I think AVX-512 strikes the ideal balance between programming model and parallelism. It also makes vectorization at the compiler level easier than AVX2 or earlier SIMD extensions. Game consoles should have AVX-512 since it meets the needs of game engine developers and arguably hardware designers, with lower latency as a bonus side effect.
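As an illustration of the compiler-level point, here's a small C loop with a conditional store, the kind of thing that is awkward to vectorize without predication. AVX-512 has per-lane mask registers, so a compiler can in principle turn the `if` into a masked store. Whether a given compiler actually does so depends on its version and flags, so treat this as a sketch. The function name `scale_positive` is made up for the example.

```c
#include <stddef.h>

/* Writes src[i] * scale into dst[i] only where src[i] is positive.
 * Other dst entries are left untouched, so a vectorized version needs
 * a per-lane predicated store rather than a plain vector store.
 * Returns how many elements were written.
 */
size_t scale_positive(float *restrict dst, const float *restrict src,
                      size_t n, float scale)
{
    size_t written = 0;
    for (size_t i = 0; i < n; ++i) {
        if (src[i] > 0.0f) {
            dst[i] = src[i] * scale; /* candidate for a masked store */
            ++written;
        }
    }
    return written;
}
```

The `restrict` qualifiers tell the compiler that `dst` and `src` don't alias, which removes one of the usual obstacles to auto-vectorization regardless of the target ISA.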

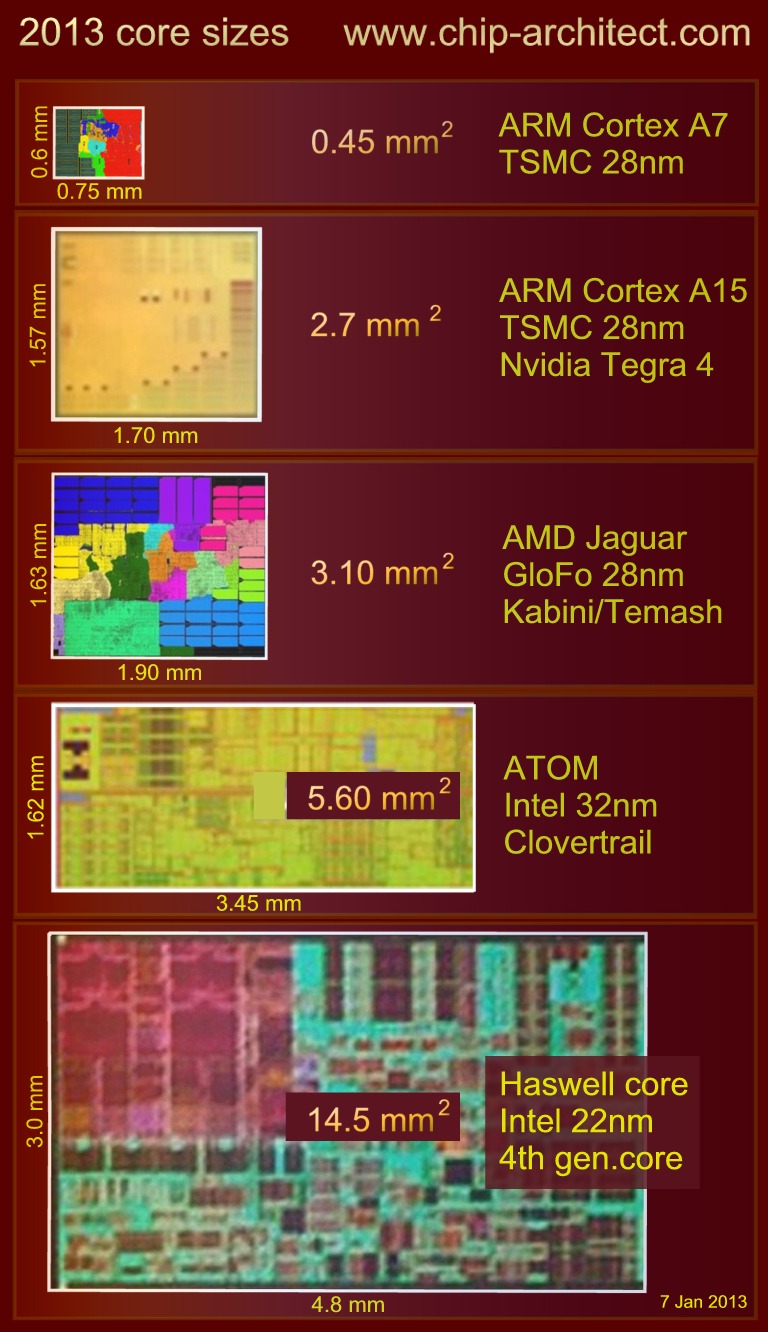

AVX-512 is also low-hanging fruit when we want to increase the performance of next-gen console CPUs. We're already at our limit on the CPU side for current-gen consoles, and there will always be loops that are too small to see a speedup on GPUs, so a wider SIMD would come in handy, along with a vastly more refined programming model. I believe AVX-512 is the console manufacturers' salvation for their perf/mm^2 and perf/watt targets when we consider that ALUs are cheap these days. They can't increase clocks without sacrificing perf/watt, and they definitely do not want something as beefy as Ryzen that sacrifices so much die space for relatively small gains in ILP (graphics and physics programmers are going to get mad about where all that die space went), so I don't think it's a coincidence that AVX-512 would slot in nicely in these scenarios ...

The area spent on AVX-512 and the datapaths to support it would be considerable. There are better ways to spend that area: Jaguar CPUs are far from maxed out in scalar ILP. What you are proposing sounds more like Xeon Phi than a general-purpose CPU. And even if you don't want to beef up the CPU, you can throw in some more GPU shaders instead.