Silverforce11


Yeah, you are correct. Video cards are never the battle of good and evil that some make them out to be; I was just clarifying in context. My bad if it seemed out of place.

I mean, at the end of the day it doesn't matter why Kepler tanked, from a purely predictive standpoint, because past performance is no guarantee of future results. I think making a completely arbitrary call like "Nvidia will drop Maxwell like Kepler," or claiming that future Nvidia GPUs can't overcome deficiencies, is folly. And I don't know if I can accept that future AMD GPUs will get the same console advantage going forward, seeing as how the newish GCN GPU Fiji is a basket case when it comes to performance.

It will be fun to see for sure, but I think the retrospective discussion is relevant today.

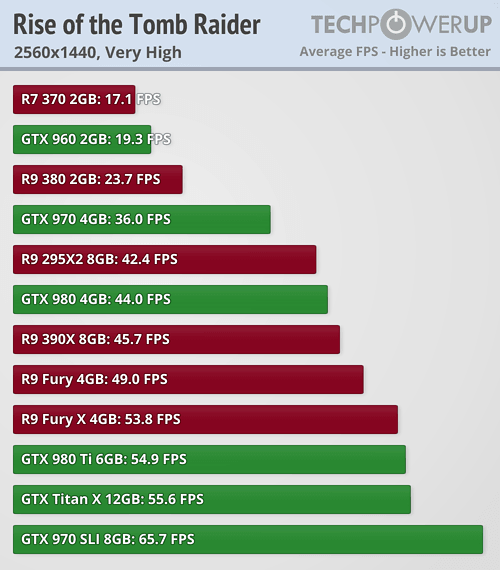

Just be aware with the Rise of the Tomb Raider benches: the pre-release version is not representative, because Nixxes had a release-day update that improved performance on AMD across all SKUs. AMD also released a driver 3 days later to fix Fiji performance and stutter.

This was the release build.

But Fiji definitely needs special driver treatment compared to other GCN parts. I suspect it's the HBM.