- Nov 6, 2009

- 1,511

- 73

- 91

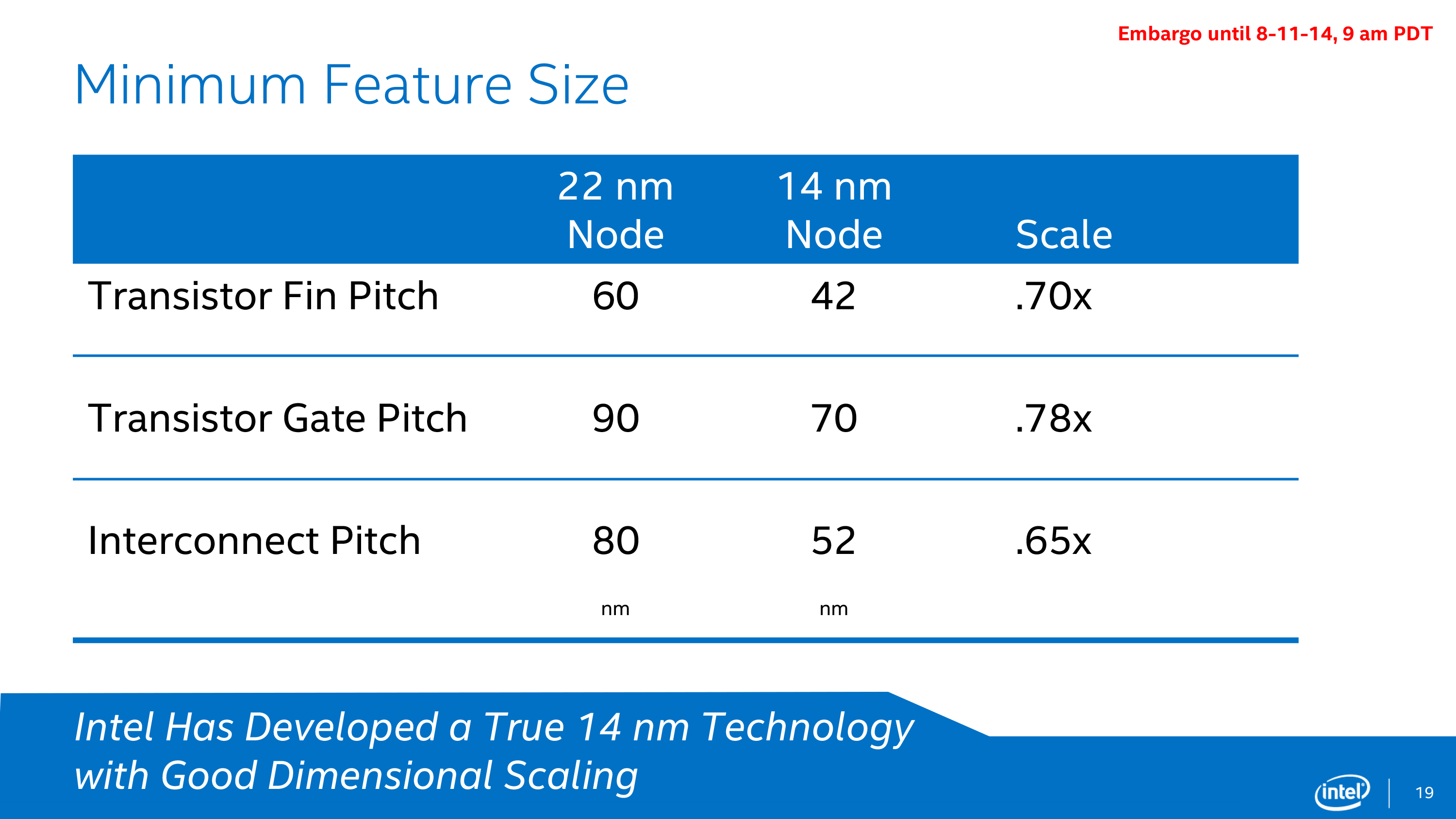

OK. I know that node sizes are chosen for marketing reasons and don't represent the actual size of any feature. Still, those numbers are supposed to stand for something.

Now, as far back as I know, the node sizes are 65nm, 45nm, 32nm, 22nm and 14nm, with 10nm and 7nm yet to come. I have seen 28nm and 20nm referred to as "half nodes." I can see that from 65nm down to 22nm each step is a linear shrink by a factor of about sqrt(2), but the pattern breaks at 14nm.
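
To check where the sqrt(2) pattern breaks, here is a minimal Python sketch that divides each node name by the next one. It uses only the node names listed in the question (half nodes left out), and the helper `step_ratios` is a hypothetical name. The ratios describe the marketing names, not measured feature sizes.

```python
import math

# Node names in nm, as listed in the question; 28nm and 20nm "half nodes" omitted.
NODES_NM = [65, 45, 32, 22, 14, 10, 7]

def step_ratios(nodes):
    """Return (larger, smaller, larger / smaller) for each consecutive pair of nodes."""
    return [(a, b, a / b) for a, b in zip(nodes, nodes[1:])]

if __name__ == "__main__":
    for a, b, r in step_ratios(NODES_NM):
        # Compare each linear shrink factor with sqrt(2), about 1.414
        print(f"{a:>3}nm -> {b:>3}nm: ratio {r:.3f} (sqrt(2) = {math.sqrt(2):.3f})")
```

A linear shrink of sqrt(2) halves the area per feature, since (1/sqrt(2))^2 = 1/2. Every step in this list stays within about 0.04 of sqrt(2) except 22nm to 14nm, which comes out near 1.57.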

Worse, this is all supposed to derive from a master size of 193nm, the wavelength of the ArF excimer lasers used in deep-ultraviolet lithography. But in a little spreadsheet I cannot reach the current series unless I start from 193nm/3 = 64.33nm. And I seem to recall 135nm and 95nm nodes (I may be remembering those incorrectly), which don't fit very well.
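
The spreadsheet calculation can be sketched in a few lines of Python, assuming the rule is "start at 193nm/3 and divide by sqrt(2) for each node". That rule is the question's hypothesis, not a documented industry formula, and `sqrt2_series` and `BASE_NM` are hypothetical names. The sketch also extends the series two steps upward for comparison with the recalled larger nodes.

```python
import math

LITHO_WAVELENGTH_NM = 193.0           # 193nm, as given in the question
BASE_NM = LITHO_WAVELENGTH_NM / 3     # the question's starting point, ~64.33nm

def sqrt2_series(base, steps_down, steps_up=0):
    """Return base * sqrt(2)**k for k from steps_up down to -steps_down, largest first.

    Assumes (hypothetically) that each node is the previous one divided by sqrt(2).
    """
    return [base * math.sqrt(2) ** k for k in range(steps_up, -steps_down - 1, -1)]

if __name__ == "__main__":
    for value in sqrt2_series(BASE_NM, steps_down=6, steps_up=2):
        print(f"{value:7.2f} nm")
```

Under this assumption the values are roughly 128.67, 90.98, 64.33, 45.49, 32.17, 22.75, 16.08, 11.37 and 8.04nm. They track 65/45/32/22 but land near 16nm and 11nm where the question lists 14nm and 10nm. Upward they give about 91nm and 129nm, to set against the recalled 95nm and 135nm.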

Can someone please list the correct node sizes and explain why they were chosen?
