When to go 6 or 8 cores?

- Thread starter ronopp


System is in the sig, I am wondering when to make a move? I game at 2K (1440p), which is fine for me. I want to upgrade when I would get the biggest bang for my buck.

Thanks in advance

beginner99

Diamond Member

- Joined: Jun 2, 2009

When you need them. If you only play single-player games and don't stream, 4 cores are mostly enough. If you play something like BF1 or the upcoming BF5, 6 cores will help in multiplayer.

DrMrLordX

Lifer

- Joined: Apr 27, 2000

And as far as the "moar cores" thing goes, I think that's kind of a red herring. Intel (and, to a lesser extent, AMD) has moved beyond Haswell's gaming IPC. I think you can actually game faster on a 2700X than on a stock 4790K, and possibly even faster than on a "typical" 4.5-4.7 GHz overclocked 4790K. I know a Coffee Lake 8700K or 9900K at the same clockspeed will outperform that Haswell clock for clock, guaranteed.

There are some titles out there, right now, where you may struggle to hit 80 fps with that 4790K. And that's not counting games like Total War, where other CPU-related factors come into play.

If you are going to get a 6c or 8c CPU, look first at the chip's IPC relative to your Haswell, and base 75% of your decision on that. The extra cores are just the icing on the cake.


arandomguy

Senior member

- Joined: Sep 3, 2013

The impact of tariffs, and possible future trade-war escalation, adds more uncertainty to the issue.

If we ignore that, I feel the end of 2019 will be a better time in terms of value, as long as you are content with your current setup. Right now the general forecast for next year is a continued decline in memory prices and possibly a more competitive landscape, with both Intel and AMD moving to new microarchitectures and processes (at least one of them certainly will).

If I were you, and setting the trade issues aside, I would strongly consider holding out until the end of 2019 for the CPU/platform change.


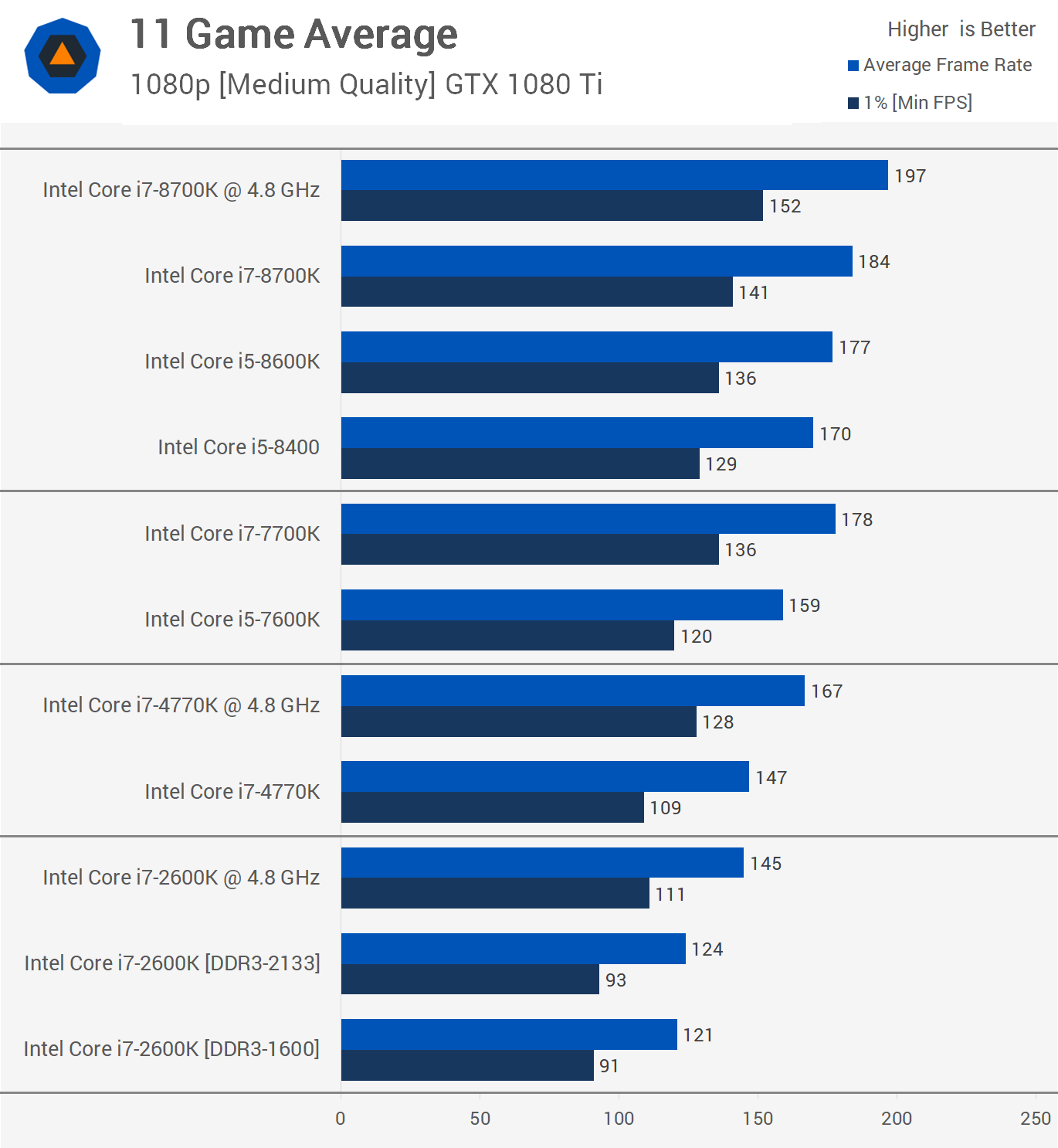

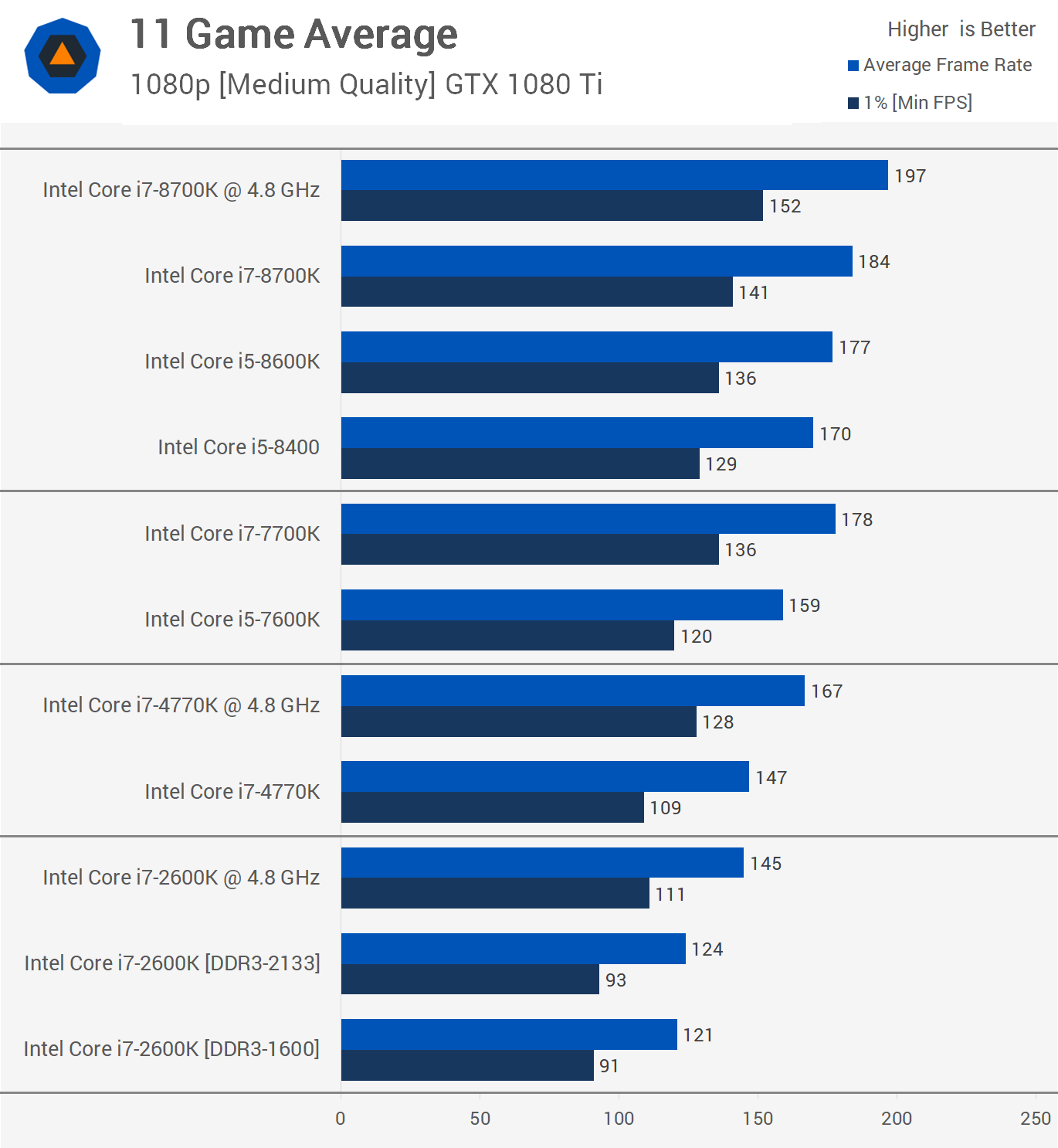

An 8700K would indeed provide a tangible increase in framerates, but we are still talking about less than a 20% difference at 1080P *medium* settings on a 1080 Ti: https://www.techspot.com/review/1546-intel-2nd-gen-core-i7-vs-8th-gen/page5.html

At 1440P, which the OP games at, that margin will probably shrink to 10% or less, since 1440P is much more GPU-bound than 1080P.

I wouldn't even consider Ryzen a true 'upgrade' for gaming over a 4790K at this point. As the charts above show, a 4770K/4790K @ 4.8GHz is basically equal to an i5 8400... which in turn is basically equal to an overclocked Ryzen 2600 @ 4.2GHz: https://www.techspot.com/review/1627-core-i5-8400-vs-ryzen-5-2600/page8.html

Yes, the overclocked 2600 is a bit faster than the 8400, but that's because it's using DDR4-3400 against DDR4-2666 for the i5 8400. Pair both CPUs with the same memory and they will effectively be equal.

Basically, for gaming, an OC 4790K ~= i5 8400 / OC Ryzen 5 or 7. The only chips truly worth upgrading to would be the costly ones: we are talking 8700K/9700K/9900K here, and to a lesser extent an 8600K/9600K. And that is a heck of an expense for a max ~10% gain at 1440P.
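
A rough way to see why the CPU gap shrinks at 1440P is a crude bottleneck model in which the delivered framerate is simply the lower of a CPU-limited and a GPU-limited framerate. The Python sketch below assumes exactly that; the function names and every fps figure in it are hypothetical illustrations, not numbers from the linked TechSpot reviews, and real games overlap CPU and GPU work in ways this model ignores.

```python
def delivered_fps(cpu_fps_cap: float, gpu_fps_cap: float) -> float:
    """Crude bottleneck model (assumption): the slower stage sets the framerate."""
    return min(cpu_fps_cap, gpu_fps_cap)


def percent_gain(old_fps: float, new_fps: float) -> float:
    """Relative improvement of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0


# Hypothetical CPU-limited framerates (assumed, not measured).
OLD_CPU_FPS = 120.0  # stand-in for an overclocked 4790K
NEW_CPU_FPS = 140.0  # stand-in for a faster 8700K-class chip

# Hypothetical GPU-limited framerates: the GPU has less headroom at 1440p.
GPU_FPS_BY_RES = {"1080p": 200.0, "1440p": 125.0}

for res, gpu_fps in GPU_FPS_BY_RES.items():
    old = delivered_fps(OLD_CPU_FPS, gpu_fps)
    new = delivered_fps(NEW_CPU_FPS, gpu_fps)
    print(f"{res}: {old:.0f} -> {new:.0f} fps ({percent_gain(old, new):+.1f}%)")
```

With these made-up inputs it reports a +16.7% gain at 1080p but only +4.2% at 1440p: once the GPU cap drops below the faster CPU's cap, most of the extra CPU speed goes unused.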


DrMrLordX

Lifer

- Joined: Apr 27, 2000


I would say that's mostly fair. The main gains would be in higher minimums, I think. I also wonder whether that 1070 Ti will prevent sustaining 80 fps minimums at 1440p. But I do know that a 4790K probably won't pull it off with a faster dGPU.


Yeah, agreed. To be honest, I don't really notice any difference between my old 6700K and my current 8700K for gaming, not even in minimums. Maybe that is because they have the same IPC and close enough clockspeeds, and games just aren't multi-threaded enough yet for a highly clocked 4C/8T CPU to become a noticeable bottleneck. I'm sure that day will come though...

Hans Gruber

Platinum Member

- Joined: Dec 23, 2006


There are things that benchmarks do not measure, like how smoothly the CPU handles gaming or rendering. Newer CPUs have the advantage over old CPUs there. I think some enhancements made to the CPU architecture are not accounted for in benchmarks.


I have no idea what you are talking about. What is this 'smoothness'?

100fps = 100fps, there isn't some magic sauce in new CPUs that makes games run 'smoother'. If they are smoother, it is because of higher framerates (particularly minimums), simple as that.

No. Will my eyes actually be able to tell the difference between 130 fps and 144 fps?

Not necessarily true. There is a visible advantage to being locked to the panel's max refresh rate on monitors that do not have adaptive sync. So going from 130 to 144 fps would be visible if 144 Hz is the panel's max refresh rate and you're not running adaptive sync.

This isn't because 130 fps vs. 144 fps is easily noticeable on its own; rather, when you cap your framerate at the panel's refresh rate (with vsync), you get some beneficial frame-timing differences. It will appear smoother because frames line up with the panel's refreshes better.

This is all moot if you're running adaptive sync. That would make it nearly impossible to notice a difference between 130 and 144.
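
The pacing effect described above can be illustrated with an idealized Python model. It assumes perfectly even render times, no adaptive sync, and a panel that shows the newest finished frame at every refresh (closer to triple-buffered behavior than to classic double-buffered vsync, which would instead stall the GPU). The function name is hypothetical. It counts how many refresh intervals each rendered frame stays on screen.

```python
from collections import Counter


def frame_hold_histogram(render_fps: int, refresh_hz: int, seconds: int = 1) -> Counter:
    """Map 'refreshes a frame stays on screen' -> 'number of frames'.

    Idealized model (assumption): frame i finishes at exactly i / render_fps
    seconds, and at each refresh tick the panel shows the newest frame
    finished by that time.
    """
    ticks = refresh_hz * seconds
    # Integer floor of (tick / refresh_hz) * render_fps = newest finished frame.
    shown = [(tick * render_fps) // refresh_hz for tick in range(ticks)]
    holds = Counter(shown)  # frame index -> refreshes it was displayed for
    return Counter(holds.values())


for fps in (130, 144):
    print(fps, dict(sorted(frame_hold_histogram(fps, 144).items())))
```

In this model, at 130 fps on a 144 Hz panel, 14 of the 130 frames each second are held for two refreshes (about 13.9 ms instead of about 6.9 ms), which shows up as uneven motion; locked at 144 fps, every frame is held for exactly one refresh.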

DrMrLordX

Lifer

- Joined: Apr 27, 2000


Spot on. Adaptive Sync/FreeSync/G-Sync are designed to help cover up the jankiness you can get when your system swings between, let's say, 60 fps and 120 fps depending on the scene.

Obviously, one solution is to get a 60 Hz monitor. Then you only have to aim for a 60 fps minimum, and you won't see any swings at all.

If you go for a monitor with a higher-than-60 Hz refresh rate, you have to push your minimums higher. Sometimes you can't get there, and the result can be frame-timing issues when framerates start to dip.

bfun_x1

Senior member

- Joined: May 29, 2015


Average FPS is irrelevant to smoothness. A game running at a steady 60 fps can seem smoother than a game that averages 200 fps with drops into the 20s. Newer CPUs score better on frame times, so they can make games seem smoother regardless of the average framerate.
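
To make the frame-time point concrete, here is a small Python sketch comparing average FPS with a "1% low" figure computed from a list of frame times. It assumes one common definition of 1% low (the average framerate over the slowest 1% of frames); benchmarking tools define it in slightly different ways. The function names and both frame-time traces are hypothetical.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Frames rendered divided by total time taken."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Average FPS over the slowest 1% of frames (at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return average_fps(slowest_first[:count])


# Hypothetical traces of 1000 frames each.
spiky = [4.0] * 990 + [50.0] * 10  # mostly 250 fps, with ten 20 fps hitches
steady = [1000.0 / 60.0] * 1000    # a locked 60 fps

for name, trace in (("spiky", spiky), ("steady", steady)):
    print(f"{name}: avg {average_fps(trace):.0f} fps, "
          f"1% low {one_percent_low_fps(trace):.0f} fps")
```

The spiky trace averages about 224 fps but its 1% low is 20 fps, while the steady trace sits at 60 fps on both measures, which is the gap between average framerate and smoothness described in the post.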


RAM speed and timings matter more to smoothness in games from the last three years than they have in a very long time. Given how small CPU speed increases have been from generation to generation, RAM is actually more important in many cases, FX to Ryzen notwithstanding.

Indus

Lifer

- Joined: May 11, 2002

Yay, another person with a Qnix monitor! Had mine for years, and recently retired it. At this point, with that card you'll be GPU bound anyway regardless of what processor you went with. Hang in there for Zen 2, and whatever Lake Intel has at that time and see.

I have that monitor too, but mine was stuck at 100% brightness and I couldn't lower it. Eventually I got tired of it and up/down/sidegraded to a 24" 2560x1440 144 Hz TN display.

Love the 144 Hz. Miss IPS. And I'm not sure about 27" vs 24". I kind of like 24" for gaming but 27" when using Photoshop.

bfun_x1

Senior member

- Joined: May 29, 2015


I had a Qnix and it was the jankiest monitor ever. The enclosure had a mystery button on the front, and when I pushed it, it fell inside the monitor and left a hole. So I opened the enclosure and found that the panel was only held in place by some randomly placed foam blocks, which fell out when I opened it. The stand was held on by one tiny screw, and it was stripped. The panel itself was actually pretty good quality, but the light bleed was horrible since the panel wasn't really secured to anything.

With Zen 2 on 7nm around the corner and Intel's severe supply shortage, this is the worst time in a long while to upgrade.

Wait for Intel to fix its supply issues and for Zen 2 to arrive in spring next year. Even if you decide to go with Intel, they'll need to respond on pricing.

Especially if you live in Europe, prices could be 40-50% lower than they are now if they truly feel the need to compete on price.



AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.