- A bigger CPU die, or corners cut in the design to keep the die size down.
- More concentrated heat.
- More need for throttling.
- Higher stress on the power delivery circuitry, since it now has to feed both a CPU and a GPU.

Designing an APU so that the iGPU can be disabled to increase yields is one partial work-around.
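To put rough numbers on the yield argument, here is a minimal sketch using the textbook Poisson yield model, where the fraction of defect-free dies is e^(−A·D) for die area A and defect density D. The areas and defect density are made-up illustrative values, not data for any real chip. The sketch also assumes that a defect landing in the iGPU never breaks the CPU side. Real fabs use more elaborate yield models.

```python
import math

def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Probability that a region of the given area has zero defects (Poisson model)."""
    return math.exp(-area_mm2 * defects_per_mm2)

def apu_yields(cpu_area_mm2: float, gpu_area_mm2: float, defects_per_mm2: float):
    """Return (fully working fraction, sellable fraction if the iGPU can be fused off).

    Simplifying assumption: a defect in the iGPU area does not affect the CPU
    side, so such a die can still be sold with the iGPU disabled.
    """
    full = poisson_yield(cpu_area_mm2 + gpu_area_mm2, defects_per_mm2)
    sellable = poisson_yield(cpu_area_mm2, defects_per_mm2)
    return full, sellable

# Hypothetical inputs, not measurements of any real chip:
# 80 mm^2 of CPU logic, 80 mm^2 of iGPU, 0.002 defects per mm^2 (0.2 per cm^2).
full, sellable = apu_yields(80.0, 80.0, 0.002)
print(f"fully working dies:           {full:.1%}")
print(f"sellable with iGPU fused off: {sellable:.1%}")
```

With these hypothetical inputs, roughly 73% of dies are fully working, but about 85% can be sold if the iGPU can be fused off. The bigger die also yields fewer candidates per wafer, which this sketch ignores.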

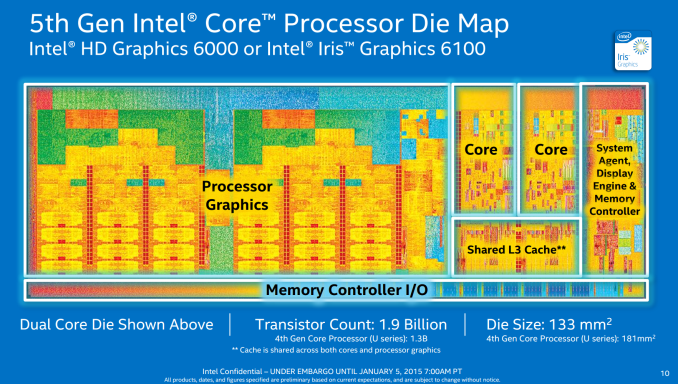

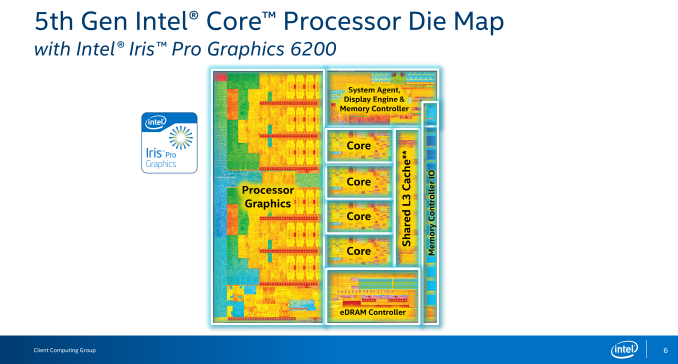

When an expensive CPU like the Broadwell 5775C devotes about half of its die to integrated graphics, and the four actual CPU cores take up only a small portion of the circuitry, that's weird. (The eDRAM improves CPU performance efficiency by acting as a victim cache, but it's a separate chip on the package.) That the graphics are easily outperformed by a relatively inexpensive discrete GPU makes it even worse. Intel could have made the chip less disappointing by doing enthusiasts a favor: releasing a harvested Broadwell C with the iGPU disabled, for better yields, and with a higher TDP.

Even in laptops, concentrating all the heat into such a small area isn't optimal; the main reason to do it is to cut costs.

That's really what APUs are about, nothing more: cutting costs at the expense of efficiency. That will only change once something like HBM2 can serve as both the APU's graphics memory and its system RAM. Unified system memory at very high speeds will finally make the APU model more useful.
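The bandwidth gap behind the HBM2 point can be checked with simple arithmetic: theoretical peak bandwidth is the transfer rate times the bus width in bytes times the number of channels. The sketch below assumes dual-channel DDR4-3200 as typical APU system memory and a single HBM2 stack running at 2.0 Gb/s per pin. These are theoretical peaks, not measured throughput, and the function name is just for illustration.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer * channels / 1e9

# Assumed: dual-channel DDR4-3200, i.e. two 64-bit channels at 3200 MT/s.
ddr4 = peak_bandwidth_gb_s(3200, 64, channels=2)   # about 51.2 GB/s
# Assumed: one HBM2 stack, 1024-bit interface at 2.0 Gb/s per pin.
hbm2 = peak_bandwidth_gb_s(2000, 1024)             # about 256 GB/s

print(f"DDR4-3200 dual channel: {ddr4:.1f} GB/s")
print(f"HBM2, one stack:        {hbm2:.1f} GB/s")
```

Under those assumptions, a single HBM2 stack offers about five times the peak bandwidth that an APU's CPU cores and iGPU otherwise have to share over DDR4.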

An APU avoids the cost of a discrete GPU. Discrete GPUs need VRM circuitry, RAM, PCBs, connectors, separate processors, etc. In exchange, they spread out the heat and the VRM load. They also don't have to sacrifice nearly as much graphics performance to fit within die-size and thermal (including cooling) limits. In desktops, discrete GPUs are also easy to replace.

A jack of both trades and a master of neither.