What is the "x" in hexadecimal strings?

- Thread starter khold



Shalmanese

Platinum Member

- Sep 29, 2000

- 2,157

- 0

- 0

It's meant for whoever reads the text. Computers store everything in base 2, but humans want to read the output in different bases, so the 0x tells the reader (a person, or a compiler parsing source code) that the number is in base 16.
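For example, Python follows C's `0x` convention, so the prefix matters both when a person reads a number and when the language parses one. A minimal sketch (the variable names are just for illustration):

```python
value = 0x1F                       # hex literal in source code; stored as the integer 31

# Formatting the same integer for humans.
as_hex = hex(value)                # with the 0x prefix
bare_hex = format(value, "x")      # no prefix
padded = format(value, "#06x")     # prefix plus zero-padding to a total width of 6

# Parsing text back into an integer.
parsed_explicit = int("1f", 16)    # base given explicitly, no prefix needed
parsed_prefixed = int("0x1f", 16)  # base 16 also accepts an optional 0x prefix
parsed_inferred = int("0x1f", 0)   # base 0: infer the base from the prefix

print(value, as_hex, bare_hex, padded)
print(parsed_explicit, parsed_prefixed, parsed_inferred)
```

The stored value never changes; only the text around it does.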

Woodchuck2000

Golden Member

- Jan 20, 2002

- 1,632

- 1

- 0

That would be mine then

Just wondering, why do we use Hex at all? Is it simply because it's somewhat easier to deal with large numbers than with binary, but conversions are easy because 16 is a power of two?


I guess it's mainly because you can nicely read a byte as a two-digit number, without obfuscating the bit positions as much as a base that isn't a power of two would do. Veeeery much earlier in computing history, octal was being used for the same reason.
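Here's a quick Python sketch of the bit-position point, using the byte 0xA5 as an arbitrary example and a hypothetical helper `groups()` that splits the binary form into digit-sized chunks:

```python
def groups(value: int, bits_per_digit: int, width: int) -> list[str]:
    """Split the zero-padded binary form of `value` into chunks of `bits_per_digit` bits."""
    binary = format(value, f"0{width}b")
    return [binary[i:i + bits_per_digit] for i in range(0, width, bits_per_digit)]


byte = 0xA5

# Hex: each digit covers exactly one 4-bit nibble, so two digits = one byte.
print(format(byte, "02X"), groups(byte, 4, 8))

# Octal: each digit covers 3 bits; 8 is not a multiple of 3, so the byte is
# padded to 9 bits and the top digit only covers a partial group.
print(format(byte, "03o"), groups(byte, 3, 9))

# Decimal: digit boundaries don't line up with bit boundaries at all.
print(byte)
```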

Real question is, why are we using decimal? Because we have ten fingers. The Maya, for comparison, used a base-20 system whose digits were built from groups of five.

regards, Peter


"why do we use hex?"

Comes from the older days, IIRC, when you worked with a nibble (4 bits, that is). Then two nibbles became a byte, and then you had a "word", which could be multiple bytes.

For now it is just a lot easier to work in hex than to look at a string of binary or even decimal. With hex there are nice even "bit boundaries" that can be seen in the number.
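A small Python sketch of those boundaries, using an arbitrary 16-bit value; the shifts and masks are ordinary bitwise operators, nothing library-specific:

```python
word = 0xBEEF  # an arbitrary 16-bit "word"

# Byte boundaries fall every two hex digits.
high_byte = (word >> 8) & 0xFF
low_byte = word & 0xFF

# Nibble boundaries fall on every single hex digit.
nibbles = [(word >> shift) & 0xF for shift in (12, 8, 4, 0)]

# A mask written in hex shows its bit pattern at a glance: 0x0F0F keeps the
# low nibble of each byte. In decimal the same mask is the opaque 3855.
masked = word & 0x0F0F

print(f"{high_byte:02X} {low_byte:02X}", [f"{n:X}" for n in nibbles], f"{masked:04X}")
```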


William Gaatjes

Lifer

- May 11, 2008

- 22,457

- 1,461

- 126

Not really on topic, but when thinking about hexadecimal and powers of 2, the most disgusting thing is MiB vs. MB. It really hurts my eyes and my technical heart when I see MiB in an article where MB is the proper way. Bytes are a power of 2. Therefore M is a power of 2.


The prefix M, or mega, is an SI prefix meaning 10^6. The colloquial use of M or mega to mean 2^20 came after the SI prefix was established. I guess this might be "disgusting" to you, as it lacks new age flakery and exceeds a code munkey's understanding.

William Gaatjes


The prefix, of course, is set to 10^6 for a decimal system. But everybody who understands binary logic knows that M here means 2^20. You are right about the history, but you use strange logic, because you yourself admit the difference is obvious and really a non-issue.

Which is my real point. Besides, the amount of free space on, for example, an HDD depends on the file system used. So whatever the HDD manufacturer writes down as capacity is not going to equal the free space actually available. And that was the reason this whole MB/MiB nonsense started.

EDIT:

I understand your point. But you must not look at a mass storage device, for example an HDD or an antique Shugart drive, as a device you are going to communicate with. It is your CPU that does all the work, and it works in powers of 2.

Another example is the addition of CRC and 8b/10b coding in serial communication. The amount of user data actually transferred is never going to equal the number of bits transferred over that serial line in the same timeframe. If the marketing department of company X boasts the raw bitrate instead of the net number of data bytes, that does not mean they are lying or using the wrong prefix; it means you, as a user, should try to inform yourself better.
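To put a number on the 8b/10b part: that code sends 10 line bits for every 8 data bits, so only 80% of the raw bitrate is left for data, before CRC, headers or anything else. A minimal Python sketch; `payload_rate` is a hypothetical helper, and 2.5 Gbit/s is just an example figure (the per-lane signaling rate of first-generation PCI Express, which uses 8b/10b):

```python
def payload_rate(line_rate_bps: float, data_bits: int = 8, code_bits: int = 10) -> float:
    """Bits per second left for data after a block code that sends
    `code_bits` line bits for every `data_bits` data bits."""
    return line_rate_bps * data_bits / code_bits


raw = 2.5e9                    # example raw signaling rate, bits per second
net_bits = payload_rate(raw)   # after 8b/10b only
net_bytes = net_bits / 8       # still before CRC, packet headers, etc.
print(f"{net_bits / 1e9:.1f} Gbit/s of data, {net_bytes / 1e6:.0f} MB/s")
```

The same function covers other block codes, e.g. `payload_rate(rate, 64, 66)` for 64b/66b.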


Therein lies the issue. My take on it is that M means mega, which is a million (bytes), end of story.

William Gaatjes


That means that before the whole issue became apparent and widespread in the news a few years ago, your computer did not have 1024*1024 bytes but 1000*1000 bytes? Or that your old home computer only had 8,000 bytes instead of 8192 bytes?

What are you driving at?

The use of SI prefixes to mean binary multiples was initially only a shortcut of convenience when measuring RAM size (because RAM, by its nature, tended to come in power-of-2 sizes, due to binary addressing). At that time this was a satisfactory shortcut, as taking 'k' to mean 1024 was only 2.4% inaccurate, which was tolerated as an acceptable deviation.

It's worth noting that for communications channels (e.g. serial, parallel, modems, networking) the binary equivalents have never been used. Measures such as baud, bps, etc. have always used the SI prefixes to mean decimal multiples: a 14.4 kbps modem really did offer 14,400 bits per second.

Similarly, early mass storage, such as magnetic tapes and early floppy disks, only ever used decimal numbers (at least for 'unformatted' capacity). For example, tapes were marketed as holding 10 Mbits on a reel, meaning 10 megabits, some of which would be used for sync/sector markers, etc., giving a reduced formatted capacity.

Once floppy disks and hard disks started to become mainstream, everything went crazy, with every company interpreting units in a different way (driven in part by software reporting capacities in binary units). I've seen hard drives with capacity stated in MB where MB has been defined as 1,048,576; 1,024,000; and 1,000,000 bytes.
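The best-known case of the mixed definition is the "1.44 MB" 3.5-inch floppy, which holds 2880 sectors of 512 bytes. A quick Python sketch of what that capacity comes to under each of the three definitions above (`MB_DEFINITIONS` is just an illustrative name):

```python
# Bytes per "MB" under the three definitions mentioned above.
MB_DEFINITIONS = {
    "binary (2**20)": 1_048_576,
    "mixed (1000 * 1024)": 1_024_000,
    "decimal (10**6)": 1_000_000,
}

floppy_bytes = 2880 * 512   # 80 tracks x 2 sides x 18 sectors, 512 bytes each

for name, size in MB_DEFINITIONS.items():
    print(f"{name:>20}: {floppy_bytes / size:.5f} MB")
```

Only the mixed definition makes the label come out to exactly 1.44.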

Now that we have GB and TB capacities, the discrepancy between binary and decimal units is becoming intolerable (nearly 10% for TB vs. TiB), hence the effort to restandardise the units.
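The growth of the gap is easy to check: each step up in prefix multiplies the binary/decimal ratio by another 1.024. A short Python sketch (`binary_excess_percent` is just an illustrative name):

```python
UNITS = [("kB", "KiB"), ("MB", "MiB"), ("GB", "GiB"), ("TB", "TiB"), ("PB", "PiB")]


def binary_excess_percent(power: int) -> float:
    """How much larger the binary unit (1024**power) is than the decimal one (1000**power)."""
    return (1024 ** power / 1000 ** power - 1) * 100


for power, (decimal_unit, binary_unit) in enumerate(UNITS, start=1):
    print(f"1 {binary_unit} is {binary_excess_percent(power):.2f}% more than 1 {decimal_unit}")
```

It prints roughly 2.40%, 4.86%, 7.37%, 9.95% and 12.59%, in line with the 2.4% and "nearly 10%" figures above.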


William Gaatjes


I agree fully. Because of the confusion created in the past between people who always think in decimal and people who sometimes think in binary and sometimes in decimal, a separate system has now been created that leaves no room for confusion. But even then, confusion will always arise when care is not taken. Because serial data is physically easier to transfer than parallel, the techniques used for error correction, encoding, packets and protocol layers will always use up some of the bandwidth. So raw bandwidth or storage space is nice, but it says hardly anything about the net capacity for usable data if you are not aware of the techniques used to protect the data from corruption and to find it again where you left it (the file system). Without doing the proper background research, mistakes are bound to be made.
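As a rough illustration of how much the protocol layers take, here is a Python sketch of the commonly quoted per-frame overhead for TCP over IPv4 on Ethernet. It assumes full-size frames, no VLAN tag and no IP or TCP options; real traffic also has ACKs, retransmissions and smaller packets, so it does worse:

```python
# Per-frame byte counts on the wire for a full-size Ethernet frame.
PREAMBLE_SFD = 8      # preamble + start-of-frame delimiter
ETH_HEADER = 14       # destination MAC, source MAC, EtherType
FCS = 4               # frame check sequence (CRC-32)
INTERFRAME_GAP = 12   # mandatory idle time between frames, in byte times
MTU = 1500            # largest IP packet carried in one frame
IP_HEADER = 20        # IPv4 header without options
TCP_HEADER = 20       # TCP header without options

wire_bytes = PREAMBLE_SFD + ETH_HEADER + MTU + FCS + INTERFRAME_GAP
user_bytes = MTU - IP_HEADER - TCP_HEADER
efficiency = user_bytes / wire_bytes

print(f"{user_bytes} of {wire_bytes} byte times carry user data ({efficiency:.1%})")
```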

Anyway, I will lay my disgust to rest, but I will continue to use MB while explicitly stating that I only use binary numbers.

I have to stay in my role of an occasional @hole, of course.


Modelworks

Lifer

- Feb 22, 2007

- 16,240

- 7

- 76

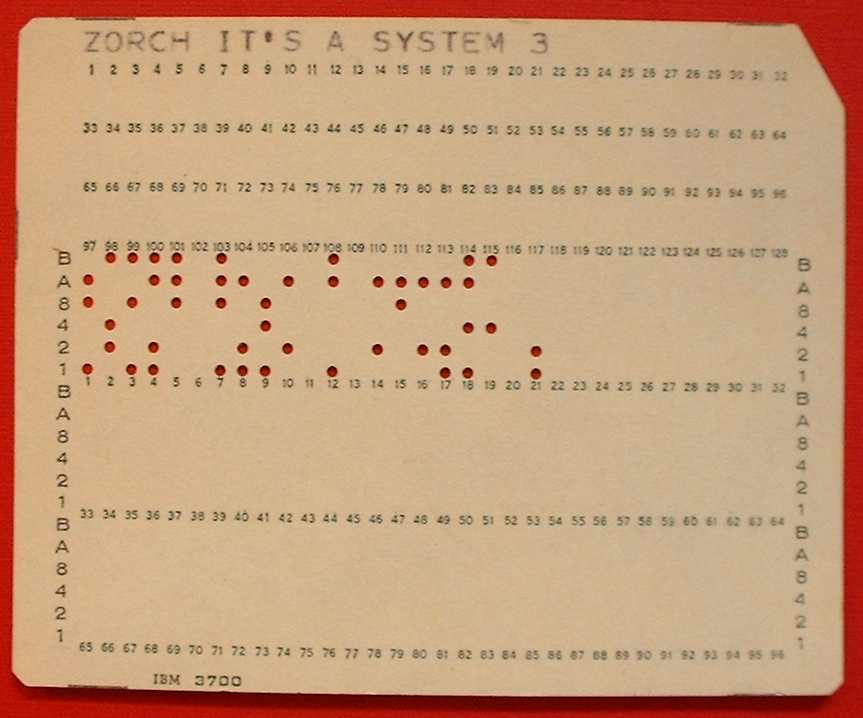

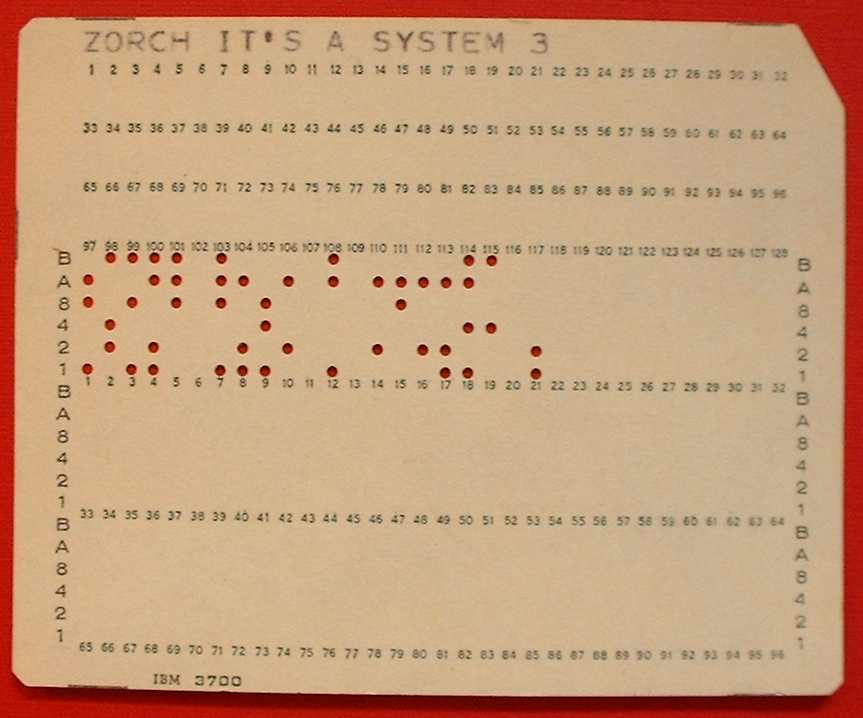

The reason for hexadecimal goes back several decades. Originally, programs were on punch cards. You would put a blank card into the writer. On the writer you had a row of keys, either 4 or 8 across, for entering binary. To program a single byte, you would hit a key in the correct spot to make it a 0. When you were done setting that byte, you turned a wheel that advanced the card one notch, and then you programmed the next byte. Repeat until the program is done.

Next, it improved somewhat, with the cards being replaced by magnetic media, but you still had to key programs in as binary. As program and memory sizes increased, entering binary became a chore, and one slip-up by a data entry person could be very hard to find. Hexadecimal was born as a way to shorten program entry to as few characters as possible. The old 0 and 1 keys were replaced with keypads that had 0-9 and A-F; you can still find some of these on eBay, and some are sought after by collectors. Hexadecimal is still in use because it is still the easiest way to represent large numbers.

Binary-coded decimal punch card, which filled the gap between hex and binary


MarkCooperstein

Junior Member

- Dec 28, 2010

- 1

- 0

- 0

I agree fully. Because of the confusion created in the past between people who always think in decimal and people who sometimes think in binary and sometimes in decimal, a separate system has now been created that leaves no room for confusion. But even then, confusion will always arise when care is not taken. Because serial data is physically easier to transfer than parallel, the techniques used for error correction, encoding, packets and protocol layers will always use up some of the bandwidth. Snip...

Not sure I understand, or agree with you. Serializing data requires ECC, packetization (e.g. TCP/IP) and more, whereas parallel data transfer, although it has multiple bit lines, requires only a clock to synchronize the transfer. 8-bit parallel can transmit one byte in one cycle, vastly simpler than serial. This is perhaps why CPU-to-memory buses are always parallel. Mark
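The cycle count is easy to see with the simplest kind of serial link, an asynchronous UART in the common 8N1 format (no ECC or packets involved). A Python sketch; `uart_frame` and `parallel_word` are illustrative names:

```python
def uart_frame(byte: int) -> list[int]:
    """Bits sent on one wire for a byte in 8N1 framing: a start bit (0),
    eight data bits least-significant first, then a stop bit (1)."""
    data_bits = [(byte >> i) & 1 for i in range(8)]
    return [0] + data_bits + [1]


def parallel_word(byte: int) -> list[int]:
    """The same byte on an 8-bit parallel bus: one level per wire (D0..D7), all at once."""
    return [(byte >> i) & 1 for i in range(8)]


frame = uart_frame(0x41)  # ASCII 'A'
print(frame, len(frame), "bit times on 1 wire")
print(parallel_word(0x41), "1 clock on 8 wires")
```

One byte costs 10 bit times on a single wire, versus one clock on eight wires.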

William Gaatjes


I think of the subject more like this:

You mention TCP/IP; that is not just a physical layer but a complete model, in software (protocols) and hardware, for reliably sending and receiving data.

You are better off comparing it with the USB bus.

Talking about hardware only:

As long as it is internal to the chip and only about hardware, you are right about using parallel buses. But when external buses are used, it is a different story: a lot of compensation has to be done if you have large bus widths. For example, QPI uses differential signaling for each bit, with a separate clock in each direction. And although I do not know the details, there is a lot of impedance matching to be done, and it uses error checking (CRC). The higher the speed, the more difficult it becomes to keep everything in sync when you lay out the traces. Not impossible, but more expensive. In essence, you could say that this bus is made up of a lot of separate serial links. The same goes for PCI Express and HyperTransport.
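The "lots of separate serial links" idea can be sketched as byte striping: consecutive bytes go out round-robin on independent lanes and are reassembled at the other end. PCI Express distributes bytes across its lanes in a similar way, but this Python sketch leaves out the framing, encoding, scrambling and per-lane deskew a real link needs; `stripe` and `unstripe` are hypothetical helpers:

```python
def stripe(data: bytes, lanes: int) -> list[bytes]:
    """Send byte 0 on lane 0, byte 1 on lane 1, and so on, wrapping round-robin."""
    return [data[lane::lanes] for lane in range(lanes)]


def unstripe(per_lane: list[bytes]) -> bytes:
    """Receiver side: take one byte from each lane in turn to rebuild the stream."""
    out = bytearray()
    longest = max((len(lane) for lane in per_lane), default=0)
    for i in range(longest):
        for lane in per_lane:
            if i < len(lane):  # the last round may not fill every lane
                out.append(lane[i])
    return bytes(out)


payload = b"HELLO, LANES"
per_lane = stripe(payload, 4)
print(per_lane)  # [b'HOA', b'E,N', b'L E', b'LLS']
assert unstripe(per_lane) == payload
```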

When it comes to memory, there is of course no need for such overhead, because the memory is close to the CPU, and bandwidth is what's needed. As long as these two requirements can be met, that is not going to change. But I think all CPU designers dream of having all the memory on the CPU die or in the CPU package for higher speed.

When you want to transfer data over long distances, serial is the way to go.


Modelworks


8-bit parallel can transmit one byte in one cycle, vastly simpler than serial. This is perhaps why CPU-to-memory buses are always parallel. Mark

Parallel is still used mainly because it is a legacy bus. In the early days you had to have one wire for every switch: to switch 8 bits you needed 8 wires, one for each transistor connection, and speed wasn't considered a benefit until much later. Switching speeds were around 5 kHz at the time. That is fine if you have multiple I/O ports free and lots of room on the PC board for traces. It really sucks, though, when your device has only 12 I/O ports and you have to use 8 of them to send or receive data. Speed isn't really a problem with serial now; it just costs more to make the same connections in serial with the same performance.

Serial flash has replaced parallel flash on most motherboards now because of the ability to use less pins and board space.

I have an LCD display that I use with embedded micros for displaying things like voltages and debug text. The problem was that it required 8 address lines, and on an embedded device with 10 lines that really was a problem: it left me just 2 I/O ports. So I made a small board with a $1 PIC chip to take a 1-pin serial data line, convert it to the 8 address lines for the LCD, and output the text. It made an LCD that required 8 pins use only 1. That is a good example of the two technologies working together.
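A rough Python model of what such a converter does, assuming the bits arrive most-significant first and that each call to `clock_in` stands for one bit time (on a single wire, the real chip would recover that timing from an asynchronous format such as UART). `SerialToParallel` is a hypothetical name; the actual PIC firmware isn't shown in the post:

```python
class SerialToParallel:
    """Hypothetical serial-in, parallel-out converter: collects 8 bits from one
    data line, then latches them onto 8 output lines at once."""

    def __init__(self) -> None:
        self.shift = 0     # bits collected so far
        self.count = 0     # how many bits have arrived for the current byte
        self.outputs = 0   # levels currently driven on the 8 parallel lines

    def clock_in(self, bit: int) -> None:
        """Accept one bit (most-significant first) from the serial line."""
        self.shift = ((self.shift << 1) | (bit & 1)) & 0xFF
        self.count += 1
        if self.count == 8:            # a full byte has arrived
            self.outputs = self.shift  # latch it onto the parallel outputs
            self.count = 0


def send_byte(converter: SerialToParallel, value: int) -> None:
    """Sender side: shift a byte out on the single data line, MSB first."""
    for i in range(7, -1, -1):
        converter.clock_in((value >> i) & 1)


lcd_side = SerialToParallel()
for ch in "OK":
    send_byte(lcd_side, ord(ch))
    print(ch, format(lcd_side.outputs, "08b"))  # the 8 lines the LCD would see
```

The outputs only change once a whole byte has arrived, so the LCD never sees a half-shifted value.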

Serial also has some really nice protocols. Dallas/Maxim has the 1-Wire protocol; I use it with temperature sensors. To interface 100 sensors you need just 1 pin on the processor. They use what Dallas called parasitic power, where you have one length of wire with a ground and a data line. The sensors attach to and power themselves off the data line, and you supply that power through a pull-up resistor on the processor side of the connection. Each sensor has a unique serial ID, and when you issue a command to read one sensor, the others disconnect from the line, wait long enough for your read to complete (using their internal capacitors to power themselves), then re-attach to the line. That way you don't have a bunch of sensors using large amounts of power when you need data.
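The unique ID is a 64-bit ROM code: an 8-bit family code, a 48-bit serial number and an 8-bit CRC, so the host can check that it read an ID cleanly. A Python sketch of the Dallas/Maxim CRC-8 used for that check; the ROM bytes in the example are made up apart from 0x28, the family code of the DS18B20:

```python
def crc8_maxim(data: bytes) -> int:
    """Dallas/Maxim 1-Wire CRC-8: polynomial x^8 + x^5 + x^4 + 1, bits processed
    least-significant first (reflected constant 0x8C), initial value 0."""
    crc = 0
    for byte in data:
        for _ in range(8):
            mix = (crc ^ byte) & 0x01  # next input bit combined with the CRC's low bit
            crc >>= 1
            if mix:
                crc ^= 0x8C
            byte >>= 1
    return crc


# Made-up ROM code: family code 0x28, then a 48-bit serial number (6 bytes).
rom_without_crc = bytes([0x28, 0x12, 0x34, 0x56, 0x78, 0x9A, 0x00])
rom = rom_without_crc + bytes([crc8_maxim(rom_without_crc)])

# Running the CRC over all 8 bytes, including the stored CRC, gives 0 for a clean read.
print(rom.hex(), "ok" if crc8_maxim(rom) == 0 else "corrupted")
```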

Really fun parts: good accuracy, 12-bit output, and not costly, about $4.

http://www.maxim-ic.com/datasheet/index.mvp/id/2795
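Reading the 12-bit result is a small fixed-point conversion. A Python sketch, assuming a DS18B20-style format (a 16-bit two's-complement value in units of 1/16 °C, read from the scratchpad as LSB then MSB); check the datasheet linked above for the exact part, since other sensors in the family format the reading differently:

```python
def raw_to_celsius(lsb: int, msb: int) -> float:
    """Combine the two temperature bytes into a signed 16-bit value and scale it.
    Assumes 12-bit mode, where one count is 1/16 degree C."""
    raw = (msb << 8) | lsb
    if raw & 0x8000:      # sign bit set: negative temperature in two's complement
        raw -= 1 << 16
    return raw / 16


print(raw_to_celsius(0x91, 0x01))  # 0x0191 -> 25.0625
print(raw_to_celsius(0x5E, 0xFF))  # 0xFF5E -> -10.125
```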

You couldn't do something like that with parallel at any cost, because of the nature of the system. That doesn't mean parallel is flawed or bad; it just has to be used in the right circumstances.



AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.