So it is not just a matter of differing opinions on what counts as stable; it is really a mathematical probability of how long you go between crashes. You can shift the distribution of time between errors, but in theory, even at the "best" settings, the next crash could be years away or, however improbable, 5 minutes away.

Exactly.

In the professional realm, we don't speak of "stable" versus "unstable"; we speak of probabilities and "reliability".

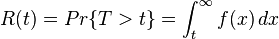

Reliability is defined as the probability that a device will perform its intended function during a specified period of time under stated conditions. Mathematically, this may be expressed as,

$$R(t) = 1 - \int_{0}^{t} f(x)\,dx,$$

where $f(x)$ is the failure probability density function and $t$ is the length of the period of time (which is assumed to start from time zero).
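To make the formula concrete, here is a small Python sketch that evaluates $R(t)$ numerically with the trapezoidal rule. The failure density is an assumption: it uses an exponential distribution (constant failure rate, $f(x) = \lambda e^{-\lambda x}$ with $\lambda = 1/\text{MTBF}$), because that case has the closed form $R(t) = e^{-\lambda t}$ to check against. The helper names `reliability` and `exponential_pdf` and the MTBF values are hypothetical, chosen only for illustration.

```python
import math


def reliability(pdf, t, steps=10_000):
    """R(t) = 1 - integral from 0 to t of pdf(x) dx, via the trapezoidal rule."""
    if t <= 0:
        return 1.0
    h = t / steps
    area = 0.5 * (pdf(0.0) + pdf(t))
    for i in range(1, steps):
        area += pdf(i * h)
    area *= h
    return 1.0 - area


def exponential_pdf(mtbf):
    """Failure density assuming a constant failure rate lambda = 1 / mtbf."""
    rate = 1.0 / mtbf
    return lambda x: rate * math.exp(-rate * x)


if __name__ == "__main__":
    # Hypothetical machine that crashes once per day on average.
    mtbf_hours = 24.0
    f = exponential_pdf(mtbf_hours)
    for t in (0.5, 8.0, 24.0, 72.0):
        numeric = reliability(f, t)
        closed = math.exp(-t / mtbf_hours)
        print(f"t={t:5.1f} h  R(t) numeric={numeric:.4f}  closed-form={closed:.4f}")

    # Hypothetical machine that crashes once per year on average:
    # a crash in the first 5 minutes is improbable, but not impossible.
    minutes_per_year = 365 * 24 * 60
    p_early = 1.0 - reliability(exponential_pdf(minutes_per_year), 5.0)
    print(f"P(crash within 5 min | MTBF = 1 year) = {p_early:.2e}")
```

Under this model, a one-year MTBF still leaves roughly a 1 in 100,000 chance of crashing within the first 5 minutes: small, but not zero.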

The connection between reality (reliability and probabilities) and the layman's take on stability (is it stable? did it pass 50 cycles of IBT?) completely breaks down upon inspection.

As enthusiasts, we OC our hardware to the point where reliability is so poor that failure is all but guaranteed within a timeframe short enough for us to detect it before we get bored and move on.

And when we tweak the parameters until the machine no longer seems to crash within minutes or hours (or even a day), we unjustifiably conclude that we have restored a degree of reliability that will span months or years.
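A rough way to put numbers on how little a clean stress-test run proves is sketched below. It assumes exponentially distributed time between crashes and that the stress test represents the conditions of everyday use (both are simplifying assumptions). `detection_probability` gives the chance that a test of a given length catches at least one crash; `mtbf_lower_bound` uses the standard zero-failure result for the exponential model, $\theta_L = T / \ln\frac{1}{1-C}$, for the MTBF you can claim at confidence $C$ after $T$ hours without a crash. Both function names and the example MTBF values are hypothetical.

```python
import math


def detection_probability(test_hours, mtbf_hours):
    """P(at least one crash during a test of length test_hours),
    assuming exponentially distributed time between crashes."""
    return 1.0 - math.exp(-test_hours / mtbf_hours)


def mtbf_lower_bound(test_hours, confidence=0.95):
    """One-sided lower confidence bound on MTBF after a test with zero crashes.

    For the exponential model this is T / ln(1 / (1 - confidence)),
    which equals 2T divided by the chi-square quantile with 2 degrees of freedom.
    """
    return test_hours / math.log(1.0 / (1.0 - confidence))


if __name__ == "__main__":
    # Hypothetical MTBFs, from a badly unstable OC to a "rock solid" one.
    for mtbf in (0.1, 10.0, 1_000.0, 10_000.0):
        p = detection_probability(1.0, mtbf)
        print(f"MTBF {mtbf:>8.1f} h: a 1 h stress test catches a crash with p = {p:.4f}")

    # What a clean 24 h run actually supports at 95% confidence.
    print(f"24 h clean run -> MTBF >= {mtbf_lower_bound(24.0):.1f} h at 95% confidence")
```

Under these assumptions, a 1-hour test almost always catches a wildly unstable OC, rarely catches a merely flaky one, and a clean 24-hour run supports an MTBF lower bound of only about 8 hours at 95% confidence, a long way from months or years.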

In other words, ignorance is bliss.