Thunder 57


A few questions, if somebody has an idea what K12 was:

- Since Keller mentioned he can get more performance from ARM than x86, does that mean the K12 architecture was planned as the successor to the Zen 1/Zen 2 lineup (higher performance than Zen 2)?

- If K12 was the next generation after Zen 1/2, how likely is it that Keller designed K12 as an ultra-wide 6xALU core like the ones he knew from Apple?

- How likely is it that Zen 3 is based on some ideas/pieces developed for K12? It's hard to imagine a low-resource company like AMD wasting all the work that was done on K12.

- Was K12 killed completely, or did they just kill the ARM branch and put all resources into an x86 version? Is it possible that Zen 3 is the x86 branch of K12, just named to fit the Zen chronology? Forrest Norrod said that Zen 3 is a completely new uarch, which opens up the possibility of this option.

I know you might not want to hear from me, but let me try. First, thanks for posting in an appropriate thread. I'll give you my thoughts and hopefully we can have a constructive conversation. Maybe we can be nicer to each other.

1. When did Keller say he could get more performance out of ARM than x86? I know Keller is a bit of an ARM fan and wanted to keep K12 alive and well, but unfortunately AMD didn't have the resources at the time to develop both.

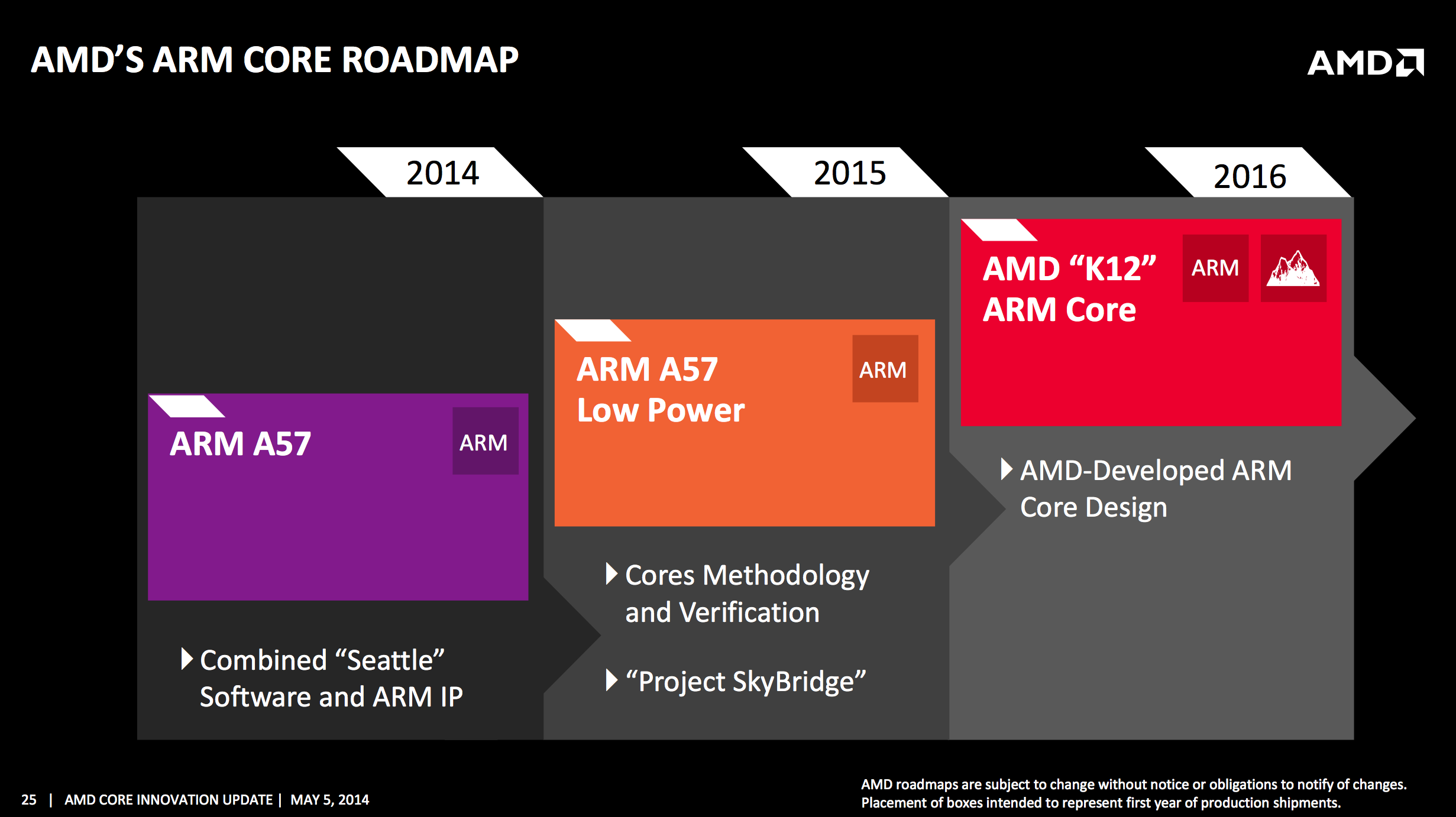

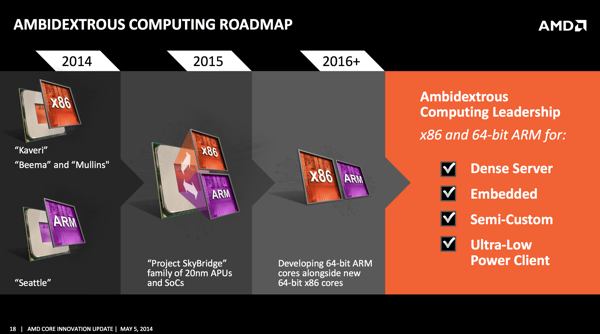

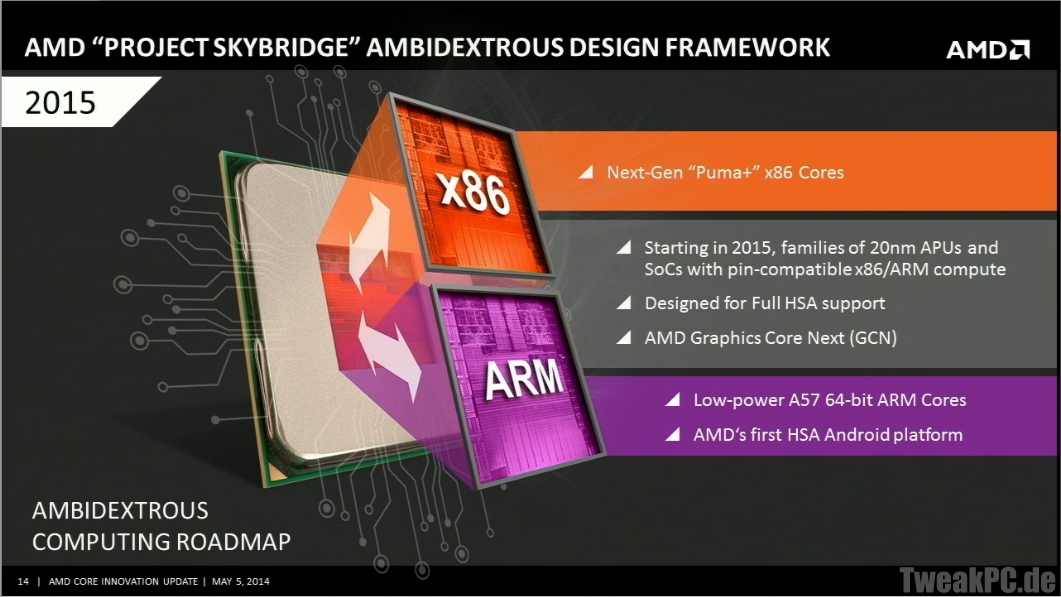

2. This is kind of a mix of your first and second questions. Why do you say K12 was planned as a Zen 1/Zen 2 successor? IIRC Zen and K12 were announced around the same time. You probably remember that AMD had plans to make a single socket that could take both Zen and K12. That would've been interesting, and I am not surprised it was canceled (complexity).

As recently as 2014, AMD had a public roadmap for a common socket platform between x86 and ARM cores that would bridge the two, with an HSA-enabled version of the Jaguar architecture that might have helped plug the holes in AMD’s roadmap between now and Zen’s launch in 2017. By 2015, those plans had been canceled.

Source: https://www.extremetech.com/extreme/214568-jim-keller-amds-chief-cpu-architect-leaves-the-company

So my question is, why would AMD be developing a K12 with 6 ALUs while they were originally developing Zen with 4? The way you put it, you make it sound like K12 was developed later.

3. I don't know; this is also kind of answered in my #2. AMD did not have many resources at the time, and I cannot say what they may have taken from K12 and put into Zen. Their GPUs still suffer because they were lacking so much in the resource department ($$$) just a few years ago.

4. I doubt many people could answer that. I'm sure they were able to use some of the stuff they learned, but who knows what? I know there has been some talk about Zen 3 being a new uarch, but I'm pretty sure Mark Papermaster walked that back somewhat. My best guess is "new uarch" will be similar to K8 --> K10: very much based on its predecessor but expanded for more performance. We have many months to speculate though.