But you couldn't be more wrong if you think using HDL is any kind of advancement to be used in performance chips.

My post had little to do with HDL. It's about engineers taking 1.5-2 years to re-evaluate their designs and squeeze more performance per mm2 from the same node by enhancing the existing architectural foundation.

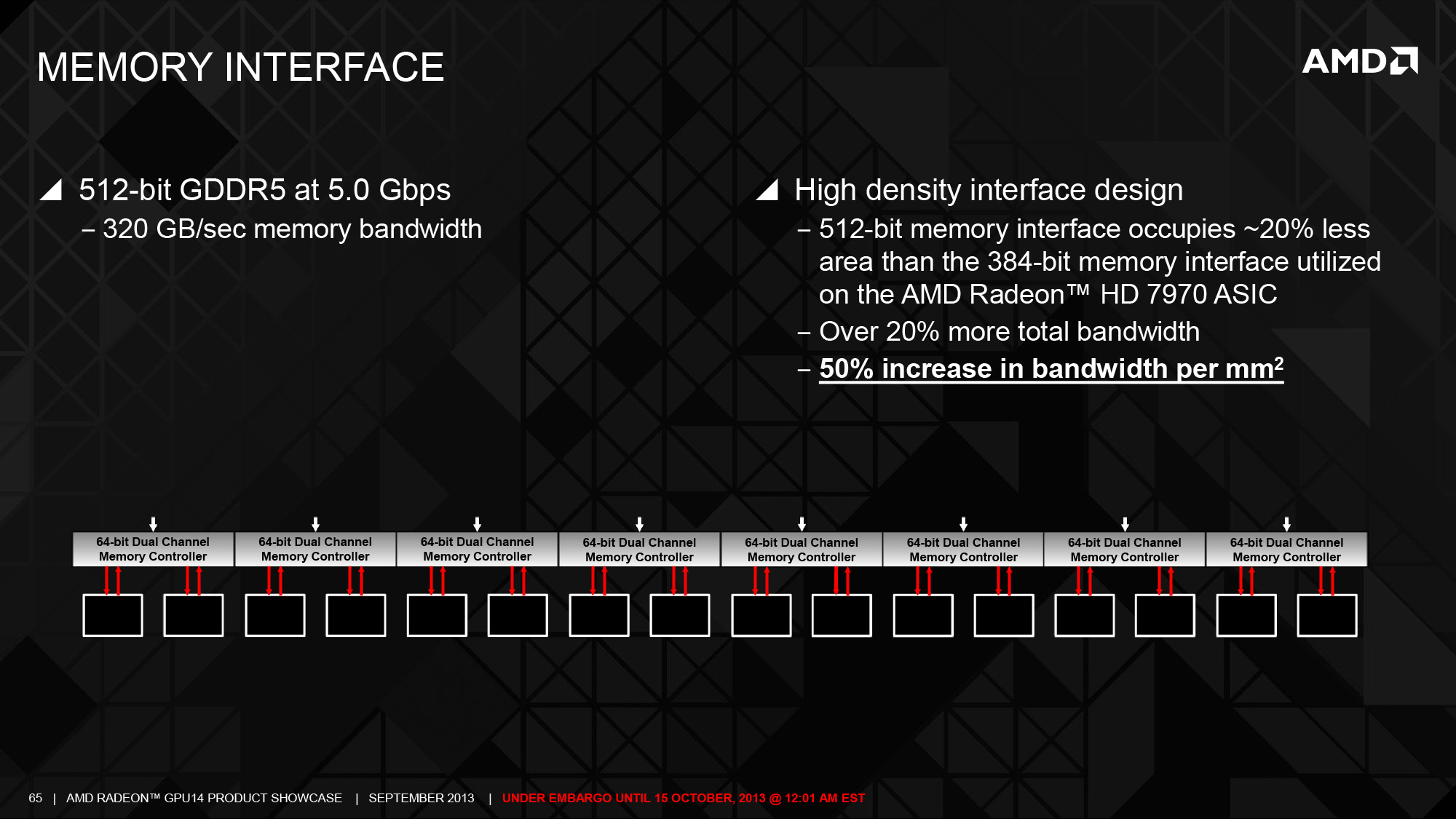

It's like everything AMD has done with Tonga, explained by reviewers, flew completely over your head. The problem with Tonga is that it doesn't have enough TMUs and SPs to showcase these advantages in a general sense. However, there are games that have bottlenecks elsewhere, and there Tonga beats an R9 280X. That could not happen under any circumstances if Tonga's architectural improvements weren't real.

R9 285 beats HD7970 by a whopping 20% in Bioshock Infinite.

Why is this important? Because on 'paper specs' 7970 matches or beats R9 285 in basically everything.

Why can't AMD's engineers take the improvements already in R9 285 and incorporate them into R9 380/390 series? These improvements will shine because R9 380/390 series will have more SPs and TMUs, which will allow the architectural improvements in Tonga to stretch their wings.

AMD still desperately needs a new uarch to compete in performance/watt. The constant massive market share bleed cycle they are in now is very dangerous.

That statement would only be true if you had R9 300 series benchmarks at hand for the entire line-up.

People seem to not understand this or something. R9 200 series is a GTX700 competitor that is forced to compete against Maxwell because AMD is late. However, if we look at perf/watt, perf/mm2, compute, R9 200 series is very competitive against NV's architecture that was always meant to compete against it.

This is akin to reading a review of an all-new 2015 Ford Mustang beating a 6-7-year-old Chevrolet Camaro and Dodge Challenger, then calling Chevy and Dodge a write-off and insinuating that they'll never make a new Camaro or Challenger that will beat the new Mustang. All credit is due to NV for beating AMD to launch with a more modern architecture, but it's unbelievable how people use AMD's outdated GCN 1.0/1.1 R9 280X/290X parts as some pessimistic gauge of where R9 300 will end up in perf/mm2 or perf/watt. Based on these comments you'd think GPUs take only 12-15 months to design or something.

The difference is that was then, this is now. ATI used to have the personnel but after AMD's purchase of ATI, there have been so many rounds of layoffs and restructuring, I'm not sure how competitive the remaining talent is.

GPUs take 3-4 years to design. This isn't a 12-to-15-month deal. Do you honestly think AMD's engineers just started working on R9 300 series 1 day after R9 290 cards were launched? That's not how it works. It's not like 5 guys at AMD sat down on December 1, 2013 and said hmmmm...forget GDDR5, let's design R9 390 with HBM. These types of decisions need validation and a thought process that takes months.

Chances are AMD used Tonga as a test bed for, let's call it, Team 1 to improve some aspects of GCN that AMD noticed were lacking a long time ago. Another team was working on improving other aspects of GCN, but AMD couldn't pull all of these changes together at once in the 285. With R9 300 series we should see the best of Tonga and whatever else AMD's engineers were able to add in the 12-15 months leading up to manufacturing. Well, the designs are already finished, actually. The last 12-15 months could also have been used to focus on transistor-level optimization to reduce leakage, introduce finer-grained voltage control and GPU boost, etc.

AMD still has some talent left, but I suspect it's diminished since ATI's heyday. Also, in the past AMD has used node shrinks and new memory to get its advantage, like HD4xxx GDDR5 and hopping onto new nodes faster than NV. What we're seeing is that if NV and AMD are on equal node and memory, NV is a very tough competitor.

Was HD7000/R9 290 series not competitive with GTX600/700 cards? This forum is making the critical mistake of comparing a brand new modern architecture to an outdated line-up of AMD parts and assuming that AMD has nothing for R9 300 cards that's really monumentally better than 290X. This is akin to writing off NV when HD5850/5870 launched and GTX275/280/285 got crushed. While AMD isn't going to bring a fundamentally different architecture like Maxwell, that's not to say they can't significantly improve upon R9 200 series. I don't know why some of you guys who have followed GPUs for 15-20 years became so pessimistic lately. ATI/AMD and NV have traded blows for years. If R9 300 series flops, then we have a real reason to worry. For now, the biggest issue is the delay of R9 300 series, but making premature doom and gloom remarks without knowing the tech that underlies R9 380X/390X seems odd to me.

24% market share is just plain bad. That's not that much more than AMD's x86 CPU market share vs Intel, and we all know how that turned out (other than a brief period when Intel shot itself in the foot with Netburst).

It's not even remotely comparable. The reasons why AMD's CPUs and AMD's GPUs are losing market share are different. On the CPU side, Intel has fundamental perf/mm2, IPC and perf/watt advantages due to a superior architecture and a manufacturing advantage. NV has no such massive architectural advantages to speak of in perf/watt, perf/mm2 or IPC when it comes to comparing like-for-like architectures (HD7000 vs. 600, R9 290 vs. 700 series). Right now it's looking like NV is miles ahead because we are comparing Maxwell to 200 series, but its true competitors are 300 series cards. The only way we can say just how much better Maxwell is, is to compare it against its like competitor - that's 300 series, not 200.

As far as market share goes, there is way more to the story than perf/watt. AMD didn't gain market share from HD4870 to HD7970 series despite leading in perf/mm2 and perf/watt throughout the entire HD4000-6000 generations.

I don't think anyone is saying that AMD can't improve their efficiency. The question is whether they can improve by enough, fast enough, to matter. Because efficiency really is king, because mobile is king. Desktop discrete GPUs are transitioning from being the dog to being the tail (Quadro/Tesla excepted).

For the sake of consumers, I hope AMD remains competitive. As Jaydip said above, I don't want to see what happened in the x86 CPU space happen to the discrete desktop space.

Consumers have already shown since HD4000 series they will pay more for inferior perf/watt and price/performance products from NV. Therefore, even if R9 300 series bests NV in every metric possible, AMD won't get to 50%/50% market share. The issues of AMD's GPU products lie beyond today's technical inferiority compared to Maxwell's cards. Poor OEM customer relationships, tarnished brand and driver issue image, poor marketing, etc. Even if R9 390/390X is spectacular, there are already 2 areas where reviewers/mainstream segment could throw the card under the bus at will - spin WC as a requirement while NV can get away with air cooling only, focus on 300W TDP only without considering the gaming system's overall perf/watt, 4GB vs. 6GB of VRAM.

I think what AMD needs to do is get design wins in the low and mid-range desktop and laptop sectors, and that's only possible with significantly improved parts. Focusing on 390/390X only is akin to spending hundreds of millions on <5% of the gaming market. AMD's GPU division can't be that incompetent, as it would mean no new laptop chips from HD7970M in May 2012 until late Fall 2016. It's amazing some people actually believe that. :awe:

Because that requires more SKUs. I don't want to sound overly negative but it looks to me like AMD simply does not have the resources to bring out a new line of GPUs. I honestly hope I'm wrong, will be glad to be. But look at the pattern the last few generations, we went from a top to bottom line of new chips (Evergreen) to AMD having mostly re-badges.

Based on what? I could just as easily propose a hypothesis that AMD saved financial resources for R9 300 series, which is why they only made R9 285/290 cards, because they knew 200 series was a transition generation. Either theory is just a hypothesis. However, the biggest problem with the R9 300 re-badging theory is that it provides no explanation at all as to how AMD would get laptop design wins with re-badged R9 200M parts, which themselves are basically HD8000M parts. As I said above, if R9 390/390X will be the only 2 new SKUs, why would AMD spend hundreds of millions of dollars on <5% of the GPU market and neglect >50% of the entire laptop market?!

That's the thing, even when AMD GPUs were more efficient (less power hungry) than their NVIDIA counterparts they still weren't selling as well. Brand perception/marketing is a pretty powerful thing. On the desktop side I'm not sure having Maxwell-like efficiency is going to do AMD a lot of good when you have a very loyal group of customers approaching Apple-like status who, it now seems, would never have considered AMD anyway. AMD definitely needs to improve perf/w on the mobile side though, that's where grabbing big OEM sales will matter.

AMD has released all-new top-to-bottom stacks. They've done it months and months ahead of nVidia. They've had nVidia beat at every price point with new tech while nVidia kept peddling old stuff. Didn't really matter. nVidia was able to hang in with just a single new chip (GF100), and get right back to dominating with a second (GF104) even though it was released ~10 months later than AMD's mid-range card. Efficiency didn't matter either. Their cards outsold AMD.

Agree with everything both of you said. I already did an in-depth analysis of how AMD's superior perf/watt and price/performance didn't even make a dent in NV's market share from HD4870 all the way to HD7970 days! If that isn't proof of NV having more brand loyal customers even when AMD practically beats NV in everything but the single GPU performance crown, I don't know what is. During the 6 months of HD5000 series head-start, AMD beat NV at nearly every price segment on the desktop in terms of perf/mm2, perf/watt, price/performance, absolute performance and features by getting to DX11 first. Maxwell can't even accomplish that, because NV loses on price/performance in today's market: for brand agnostic gamers, a $100-120 750Ti or $200 960 aren't good gaming products compared to a $120-130 R9 270 or $200-220 R9 280X.

NV literally coasted with an outdated product line-up top-to-bottom for 6 months leading up to GTX470/480 and then another 3.5-4 months until GTX460 launched, which means NV had nothing worth buying for a brand agnostic gamer below the $350 GTX470 for 10 months straight. What's amazing is that HD5850/HD5870 pummeled GTX275/GTX285 by way more than GTX970/980 beat R9 290/290X, and those AMD cards cost $259 and $369, way less than $330 and $550 for 970/980. Yet NV's market share was basically unaffected when that generation was settled (in fact NV gained market share post-Fermi), but today, with R9 280/280X/290/290X cards far more competitive against Maxwell than GTX200 series was against HD5000, AMD's market share has been devastated. It's more obvious than ever based on buying patterns that NV users hardly switch, and when AMD's market share plummets, it's brand agnostic gamers jumping to NV because they aren't waiting for AMD's cards. What's surprising is NV has been able to get gamers to switch while raising prices. I think AMD paid a huge price for not showing up for laptops for 3 years in a row now, and for having tarnished its desktop GPU brand image with the reference blower HD7970 and poor early drivers during the first 3-4 months of Tahiti's launch. They haven't been able to recover ever since.

Therefore, R9 300 series will not be some savior to AMD. Even if R9 390X were to take the performance crown from GM200, and R9 300 cards match NV's perf/watt and beat NV's 900 cards on price/performance, NV users will be reluctant to switch as they will just wait for NV to drop prices on 900 cards and buy those. We've seen this play out since 2008 when HD4850/4870 revolutionized price/performance and for 6 months when AMD had uncontested absolute performance with HD5850/5870, but people still bought NV in greater numbers. Today we see gamers buy $200 GTX960 over a $240-250 R9 290 and the performance difference is 45-50% there...so.
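To put a rough number on that last comparison, here is a quick back-of-the-envelope sketch using only the figures quoted above ($200 GTX960, $240-250 R9 290, 45-50% performance gap). Treating the GTX960 as a 1.0 baseline and the helper name `perf_per_dollar` are my own assumptions for illustration, not benchmark data.

```python
def perf_per_dollar(relative_perf, price_usd):
    """Relative performance delivered per dollar spent."""
    return relative_perf / price_usd

# Figures quoted in the post; the GTX 960 is the assumed 1.0 baseline.
gtx960 = perf_per_dollar(1.00, 200)

# Worst case for the R9 290: smallest lead (45%) at the highest price ($250).
r9_290_low = perf_per_dollar(1.45, 250)
# Best case for the R9 290: largest lead (50%) at the lowest price ($240).
r9_290_high = perf_per_dollar(1.50, 240)

# How much more performance per dollar the R9 290 gives vs. the GTX 960.
advantage_low = r9_290_low / gtx960 - 1
advantage_high = r9_290_high / gtx960 - 1

print(f"R9 290 perf/$ advantage: {advantage_low:.0%} to {advantage_high:.0%}")
```

Under those assumptions the R9 290 comes out roughly 16% to 25% ahead in performance per dollar, on top of being the faster card outright.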