Too many doom and gloomers. Remember the months leading up to Fury X? Many here were claiming a 4000 SP GCN GPU would be a 400W+ monster (esp. the water cooler: "power hungry, so it NEEDS water!" lol.. Asus Fury on air, 213W...), and that AMD could never be competitive on performance, etc.

When was the last time an AMD generation beat NV's same-generation uarch at the high end? Never.

Now Fury X is matching the 980Ti at 4K. All it takes is a few newer AMD-favorable games to arrive (lately it's all GameWorks, don't forget), and AMD's GE titles are incoming. Then DX12...

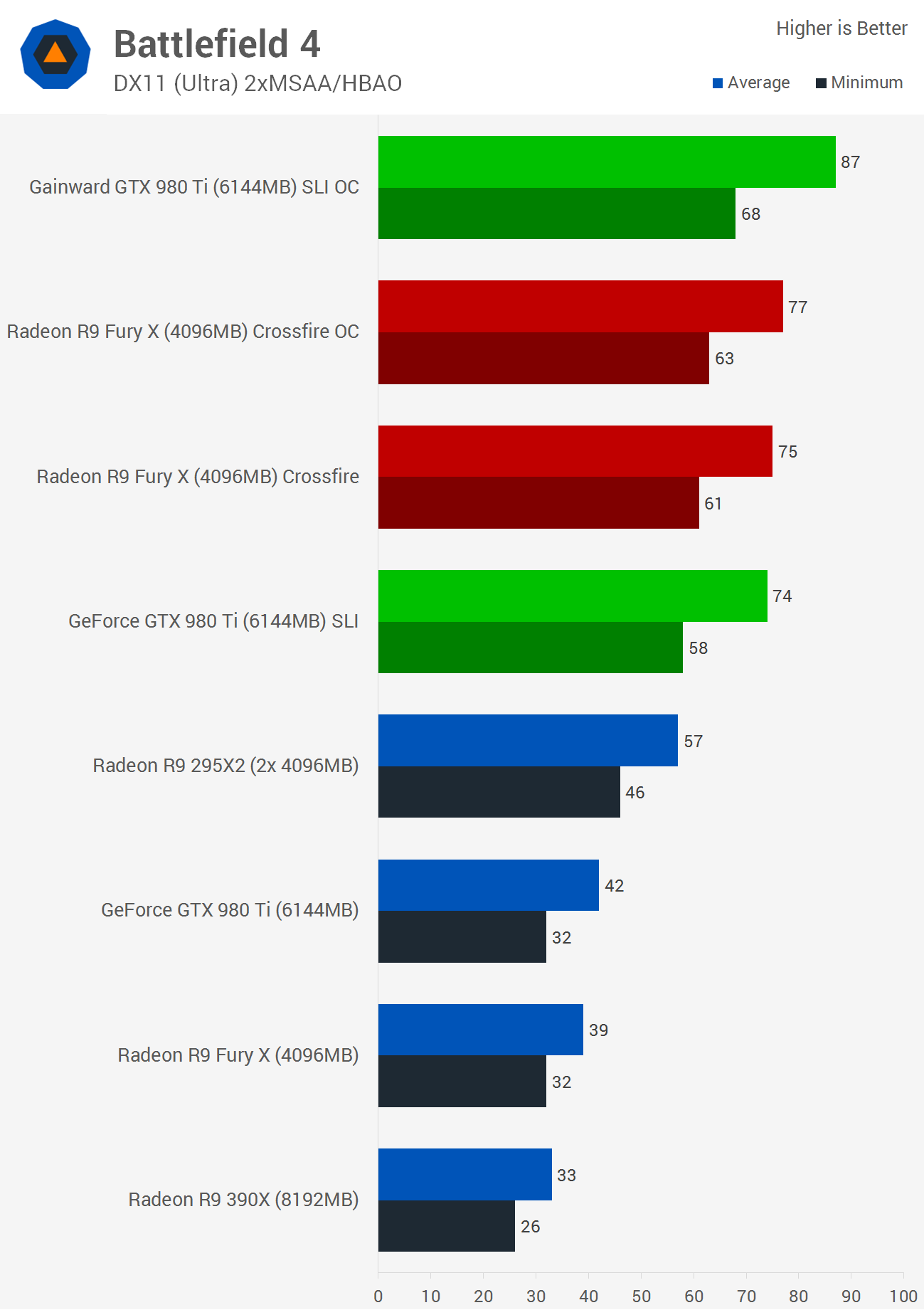

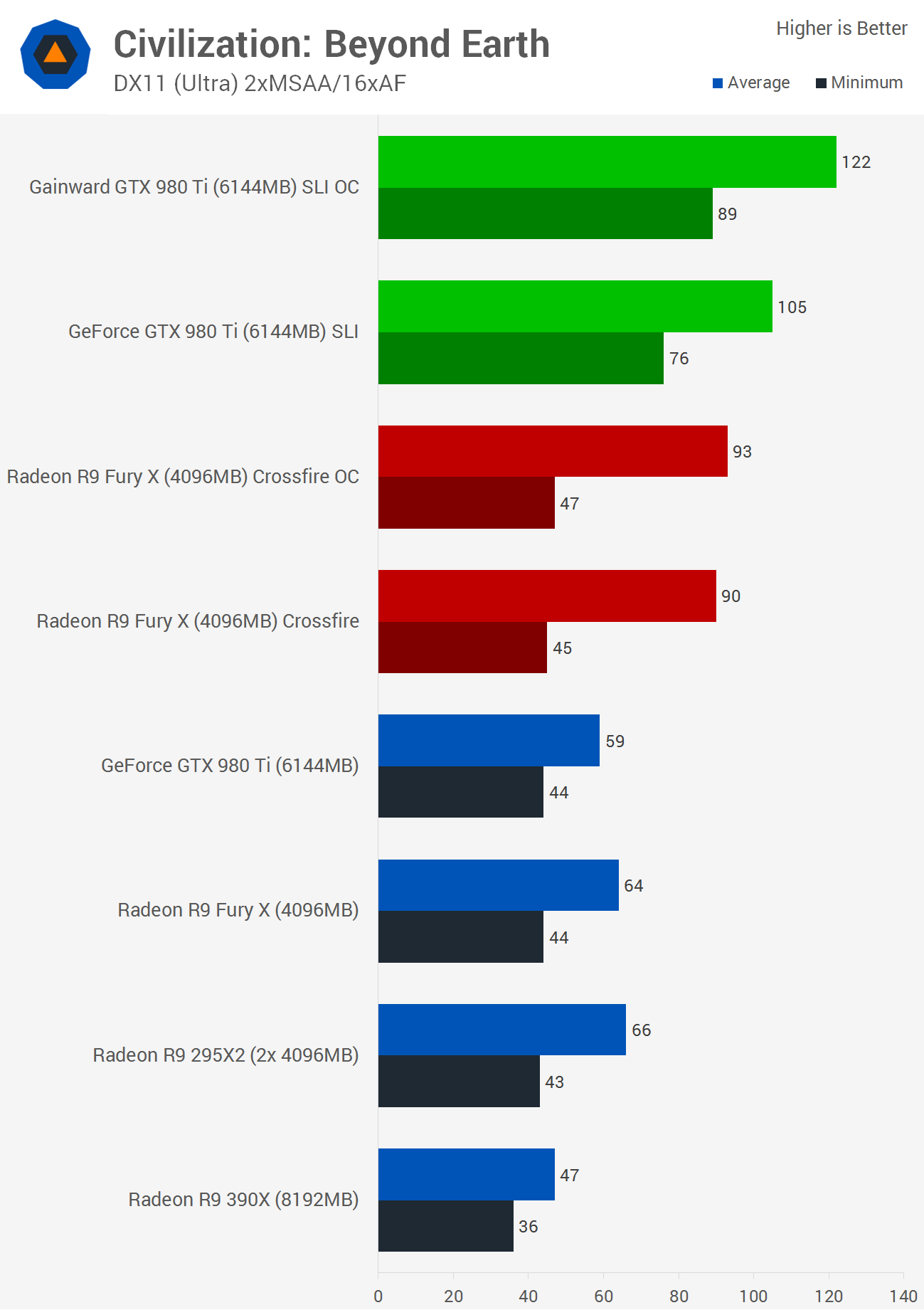

But especially at the ultra high end, Fury X CF > 980Ti SLI, in raw performance and in frame times. At stock, it's faster than a factory-OC 980Ti SLI setup that nearly hit 1.4 GHz boost, with 22% better frame smoothness overall.

http://www.techspot.com/review/1033-gtx-980-ti-sli-r9-fury-x-crossfire/ (Note: Witcher 3 has HairWorks on; it would be a bigger win for Fury X if GameWorks features were disabled.)

When was the last time AMD managed to beat NV? This is the first time. Some people will say "meh, 4K doesn't matter" or "multi-GPU doesn't matter".. they can move the goalposts, I don't care. At the TOP, AMD is faster and smoother, and that's in the DX11 era where their hardware is running crippled. In a year's time, you watch as Fury X smacks the 980Ti silly.

What they accomplish with such a limited R&D budget is outstanding.

As for your doom & gloom that AMD can't compete with Pascal: don't be daft. With each generation AMD has gotten closer to NV on performance**, to the point of finally beating them at the top. Next gen will continue the trend; as they gain more HBM experience, HBM2 + Arctic Islands will shine.

http://www.kitguru.net/components/g...gpus-for-2016-greenland-baffin-and-ellesmere/

Also, if there's any truth to the Hynix HBM2 exclusivity for AMD, you can look forward to a demolition of Pascal + GDDR5.

** 5870 vs 480, 6970 vs 580, 7970 vs 680, R9 290X vs 780Ti: note how the performance gap shrinks. The R9 290X was slower than the 780Ti on release at all resolutions. Fury X at least is competitive at 4K, although single GPUs aren't playable at 4K, so the real 4K battle always comes down to multi-GPU.