- Next level being TR where users can get by with less support, less high cost, but lower power efficiency and higher clock speeds.

Actually, the best dies go to TR. AMD confirmed this to Hardware.fr; the ratio is 2% of the functional dies.

- Next level being TR where users can get by with less support, less high cost, but lower power efficiency and higher clock speeds.

What makes you think this statement is true? Do you have a technical article that would support your claim?

Ehh, just because Intel claims that they are "monolithic" does NOT mean that their 10-, 12-, 14-, 16-, and 18-core chips are DIFFERENT dies. You've heard the term "binning", right? It means that Intel only produces three different dies off of their assembly line: LCC, MCC, and HCC. They then test them, and for those chips that have defective cores, they laser those cores off and sell the result as a lower core-count chip.

"Anandtech and other top reviewers mention this in their reviews and news articles. Plus Intel in their own slides make mention of this several times, claiming how their monolithic processors are better because they are monolithic, while AMD's is several CPUs 'glued' together."

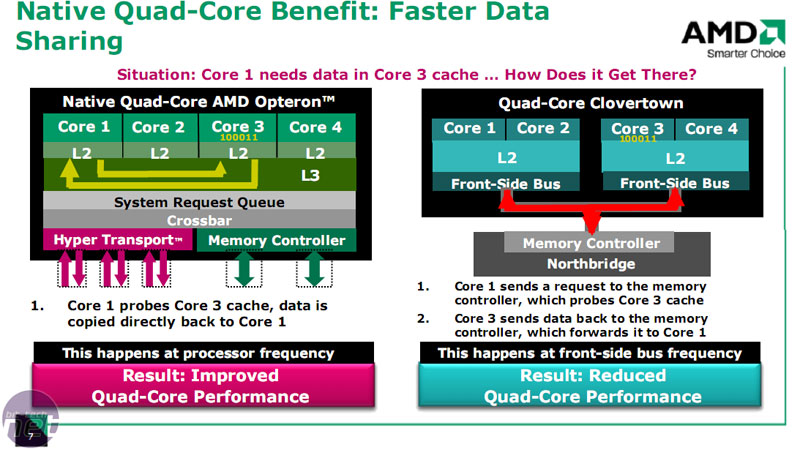

Wasn't that the same explanation that AMD gave for the weak performance of Phenom 1? That Intel's quad cores aren't truly 4 cores, that they are two 2-core dies glued together? History repeating.

"Plus Intel in their own slides make mention of this several times, claiming how their monolithic processors are better because they are monolithic, while AMD's is several CPUs 'glued' together."

Beat me to it!

"Ehh, just because Intel claims that they are 'monolithic' does NOT mean that their 10-, 12-, 14-, 16-, and 18-core chips are DIFFERENT dies. You've heard the term 'binning', right? It means that Intel only produces three different dies off of their assembly line: LCC, MCC, and HCC. They then test them, and for those chips that have defective cores, they laser those cores off and sell the result as a lower core-count chip."

Two things. 1) Phenom didn't have weak performance. Its performance was really good. There was just a bug, missed during development, that kept it from reaching the clocks they had been simulating. It had nothing to do with monolithic vs. multiple dies. 2) They did state that theirs was a true quad core and true dual core when comparing the Phenom and X2 vs. the C2Q and PD respectively. But they didn't call Intel's glued; they didn't even say their method was better, just implied it by making it seem like the greater technical marvel.

"Wasn't that the same explanation that AMD gave for the weak performance of Phenom 1? That Intel cores aren't truly 4 cores, that they are glued two 2 core dies? History repeating."

For clarity:

1) Intel already has a 28c/56t CPU. While it costs an arm and a leg because it is a monolithic die, there's no technical reason why Intel can't release higher core counts for HEDT with an X299v2 in the future (margins aside). It's pretty clear that's what they're doing in X299 by making HCC dies available to HEDT for the first time.

Source: https://ark.intel.com/products/family/595/Intel-Xeon-Processors

2) Intel already has their version of "glue" in the works. It's simply the most economical way to scale to more cores without breaking the bank. Expect more cores in the core wars from everyone, Soon.™

3) AMD has confirmed that Threadripper is 2 dies + 2 silicon spacers. Even if it was 4 dies, X399 is quad channel, and you get 2 channels of memory from each die... there's nothing stopping AMD from releasing a Zen 2 with more cores, but for now Threadripper tops out at 16c/32t. You want more cores? Get Epyc 1P or 2P and knock yourself out with 32 or 64 cores.

Back when Intel put everything on Netburst they were still continuing the traditional design (Pentium 3) line with the mobile oriented Pentium M, and that is what they turned into Core 2. Today I'm not sure what alternate design they could pull out of their hat. Atom? =P

"1) You're assuming Intel won't adapt. They will. See: Conroe."

The big difference between 1900x and 1800x is the uncore (the thing that offers memory, PCIe lanes etc.), which can amount to up to 25 watts TDP per die, and Threadripper has two of them. Accordingly Threadripper has way higher energy consumption while idle.

"I'd be interested to see a 1900x vs. 1800x comparison. Not just performance, but power usage too."

Roughly 10% price difference, maybe slightly more.

Quad channel mem and the extra pcie sound nice, but otherwise I don't think i'll regret my 1800x/am4 purchase.

I agree that Intel has enough money to do anything they want - that much has not changed. But if they had their version of "Super Glue" close to fruition they would have mentioned it - more than once.

They wanted

"Back when Intel put everything on Netburst they were still continuing the traditional design (Pentium 3) line with the mobile oriented Pentium M, and that is what they turned into Core 2. Today I'm not sure what alternate design they could pull out of their hat. Atom? =P"

Mind, I personally think their actual core design is the least of their issues. In the last decade they just chose to put the focus of their R&D on advancing their own iGPU as well as bloating up AVX.

Yeah, wanted to ask if that also applies to Tiger Lake, as you indicated Sapphire Rapids is intended for data centers.

"Sapphire Rapids. Although if it's radically different the core design is likely to be very server oriented and not much else."

Wasn't that the same explanation that AMD gave for the weak performance of Phenom 1?

Core 2 Quad was also built with two separate silicon dies on an MCM.

"You're probably thinking Athlon x2 vs Pentium D. Going through a shared FSB was definitely subpar."

They can't turn up the speed on the EPYC chips. Not really. The R7 is already an extremely efficient die at 1800x considering the core count. The R1700 takes it to another level. But power usage as you see on TR is pretty linear. The 1950x is roughly 2x 1800. Stands to reason that the same would apply to an EPYC. 4x ~90w usage would be 360w. 4x 65w would be 260w.

As we know, the 1700 generally doesn't clock as high as an 1800x. So chances are they aren't really competing for dies. A TR die is about clocking as high as possible within a power envelope. An EPYC die is about hitting a pretty low clockspeed with as low a power usage as possible. A 7601, for example, uses the same 180w. That means for its clockspeed it needs to limit power usage to 45w a die vs. 90w on TR. They are basically on opposite ends of the spectrum. EPYC is also not going to see a jump in power allowance, since cooling is more restricted in the server space than on the desktop, and even then AMD would need to open it up to an insane 360w for the die choices to compete.
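To make the per-die arithmetic in the two posts above easier to follow, here is a rough sketch in Python. It only restates the posters' own ballpark numbers (about 90w for an 1800x-class die, 65w for a 1700-class die, 180w for the 1950x and the 7601) and assumes power scales linearly with die count, as the post does. The function and constant names are made up for illustration; none of this is measured data.

```python
# Rough per-die power arithmetic using the ballpark figures from the posts above.
# Assumption: package power scales linearly with the number of dies.

DIES_PER_TR = 2     # Threadripper: two active dies
DIES_PER_EPYC = 4   # EPYC: four dies, as implied by the "4x" figures in the post


def package_power(per_die_watts: float, dies: int) -> float:
    """Total package power if every die draws per_die_watts."""
    return per_die_watts * dies


def per_die_budget(package_watts: float, dies: int) -> float:
    """Power available to each die when the package budget is split evenly."""
    return package_watts / dies


# EPYC built from dies running like an 1800x (~90w) or a 1700 (65w)
epyc_at_1800x_power = package_power(90, DIES_PER_EPYC)
epyc_at_1700_power = package_power(65, DIES_PER_EPYC)

# Per-die budget inside the same 180w package figure
tr_1950x_per_die = per_die_budget(180, DIES_PER_TR)
epyc_7601_per_die = per_die_budget(180, DIES_PER_EPYC)

print(f"EPYC with 1800x-class dies: {epyc_at_1800x_power:.0f}w")
print(f"EPYC with 1700-class dies:  {epyc_at_1700_power:.0f}w")
print(f"1950x budget per die:       {tr_1950x_per_die:.0f}w")
print(f"7601 budget per die:        {epyc_7601_per_die:.0f}w")
```

Under those assumptions an EPYC die gets half the power budget of a TR die, which is the poster's argument for why the two product lines want differently binned dies.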

Intel CAN drop prices - isn't their margin like 50-60%? There is no doubt AMD can make CPUs cheaper at this stage, but Intel doesn't have to be cheaper - only a little less expensive. If they dropped the 7900 down to $799, I'd take it over the 1920X. For that matter, if they dropped it even as low as $850, I'd probably still take it over the 1920.

"but Intel doesn't have to be cheaper - only a little less expensive"

That becomes an interesting question, and it appears AMD has thought about it a lot and INTC has not. It's not just the CPUs themselves: AMD seemed to realize they could get an incremental advantage if they didn't segment their products the way INTC has. Every Threadripper chip gets 64 PCIe lanes vs. 44 at the high end for Intel, and as you go down the stack it gets more apparent. So AMD wasn't just thinking about Ryzen CPU vs. Xeon CPU but about the whole package around the chip. AMD was strategic, and Ryzen/Threadripper/EPYC seem to be the real deal. The combination is why Intel can't just cut prices. Right now Intel is charging a higher price for fewer options and less performance in multithreaded workloads (which is kinda what these chips are for). I admit I don't have 1% of the technical knowledge some folks on this board have, but business modeling is a passion of mine. This battle between Intel and AMD will be in college case studies one day because of how complicated a duopoly it is.

Personally I like to have a fairly beefy pc but I won't pay a premium so I can get slightly higher benchmarks. Yet I have no problem spending 7.5k on a watch and I have to service that regularly at a grand a pop.

I don't see Intel's grip slipping. Their hardware is everywhere. Like I was posting earlier, they sold 75000 CPUs in one sale. If this was a watch forum Intel would be Rolex; not sure who AMD would be.....

https://www.youtube.com/watch?v=_1rXqD6M614

"Their hardware is everywhere": yes, that is true, and they have done a great job marketing the "brand", but CPUs are a commodity (part of an end product). In a monopoly you can try to brand it, but if you look at the Steve Jobs vid above, he explains that this happens to tech companies that have a monopoly. You're also correct on INTC's scale: they are everywhere. The challenge now is that they face a company competing against them with a chip-to-chip cost advantage (not scale). INTC needs a competitive redesign.

Much like AMD needed a redesign when they had Bulldozer burning down houses. AMD had an awful design there, but what they have done here is throw a last-minute touchdown pass with a cheap-to-make, high-performance CPU that scales from top to bottom.

Two things. 1) Phenom didn't have weak performance. Its performance was really good. There was just a bug, missed during development, that kept it from reaching the clocks they had been simulating. It had nothing to do with monolithic vs. multiple dies. 2) They did state that theirs was a true quad core and true dual core when comparing the Phenom and X2 vs. the C2Q and PD respectively. But they didn't call Intel's glued; they didn't even say their method was better, just implied it by making it seem like the greater technical marvel.

In the end, though, there was a huge difference between that implementation of MCM and this one. Performance may be better when work stays within one die or one CCX, but this is still so much better than the PD and C2Q. There was no cross-talk between the dies on those; everything went through the FSB, as if they were actual dual-CPU systems. So instead of a setup that is kind of like multiple CPUs, those were actual multi-CPU setups. Comparatively, what AMD is doing is like 10x better.

Isn't that what I said? They showed technical reasons why theirs might be better. That's a lot different from stating Intel "glued" theirs together.

Source, other than an imgur link?