I have been PC gaming for nearly 10 years and I have seen this downright terrible argument time and time again.

Gamer looking for advice: Is this a good GPU to buy for my PC?

Forum expert: What monitor do you have? Don't you dare buy that graphics card if you don't at least have an xyz monitor, as otherwise you will be in massive overkill territory.

In theory the argument works when we talk about extreme scenarios. In practice, people fail to apply it in an effective manner.

Right now, if someone were to say that they bought a GTX 1080 to play games on their 1080p screen, everyone would go like "OMG such a waste". Some people would even say that if you bought a GTX 1070 for 1080p. But I bet almost everyone would say it about the GTX 1080, so that is what I am gonna concentrate on here.

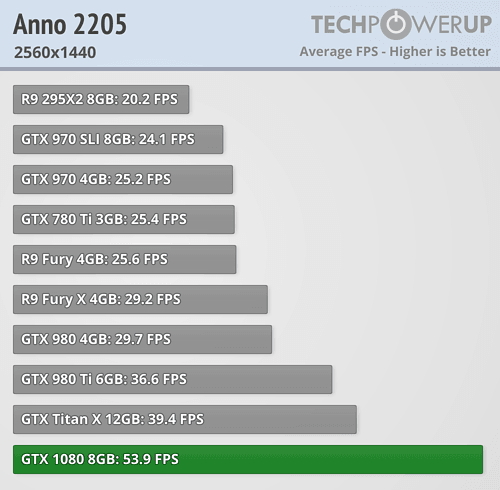

The next resolution jump after 1080p that keeps the same aspect ratio is 1440p, so let's just examine how the "overkill" GTX 1080 performs at 1440p, shall we?

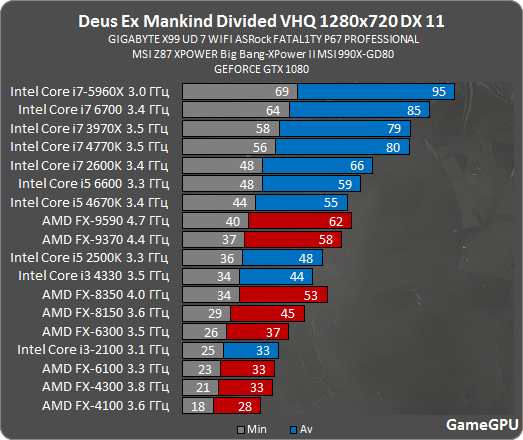

Oh no that's not a good start lol.

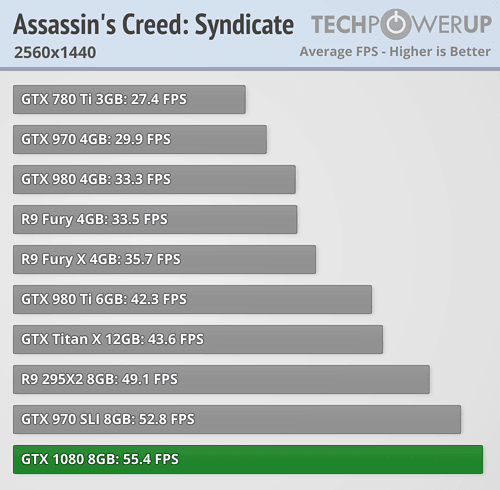

Oops...

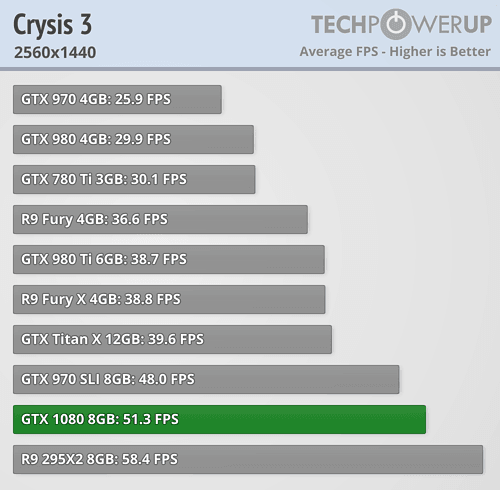

So overkill? lolol

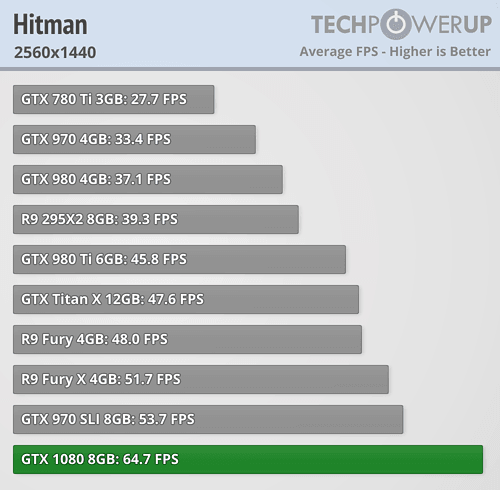

Does not look good for future games...

So the GTX 1080 does not max out every game at 1440p even at launch. What do you think is going to happen going forward? Are you guys really that clueless about how fast GPU requirements increase to even consider such arguments? A GPU would need 120+ FPS in EVERY game for that argument to have any merit.

Pushing a noob gamer into buying a weaker GPU than they could actually afford, just because their resolution doesn't meet your lofty standards, is bad advice. It will have a negative impact on PC gaming when, two years on, that gamer is struggling with most games at decent settings.

Now your "PC master race" mentality may not be able to digest it, but the fact is 1440p continues to be a dual-card resolution. PC graphics cards have simply not left 1080p in the dust, and that's fine. The consoles still don't do 1080p in every game, last I heard.

Not to mention, insane resolutions are actually detrimental to the progress of graphics. If a game developer wants their game to run very well at very high resolutions on a single GPU, then they are clearly sacrificing fidelity to get there.
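To put rough numbers on the resolution side of this, here is a quick sketch of how many pixels the GPU has to fill per frame at common 16:9 resolutions. The function names are just mine for illustration, and pixel count is only a rough proxy for GPU load: real frame rates don't scale exactly with it, so treat this as back-of-the-envelope math, not a benchmark.

```python
# Standard 16:9 resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(name, baseline="1080p"):
    """How many times more pixels than the baseline resolution."""
    return pixel_count(name) / pixel_count(baseline)


for name in RESOLUTIONS:
    print(f"{name}: {pixel_count(name):,} pixels, "
          f"{pixel_ratio(name):.2f}x the pixels of 1080p")
```

So 1440p is roughly 1.78x the pixels of 1080p and 4K is 4x. That extra work per frame has to come from somewhere, which is the trade-off between resolution and settings I am talking about.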

Now YOU may be fine playing at a higher resolution at the cost of lower settings and lower performance. But please stop pretending that your monster GPU eats up every game at 1440p, because it really doesn't.