LTC8K6

Lifer


I'd actually give that title to the 16 Core Opteron 6386 SE.

I think it's pretty clear that we are talking about desktop chips.

8350 is AMD's upper end desktop line.

How does anyone consider the FX "an upper end product" in a world of Xeons and Extreme Editions? Seriously, the FX-8350 debuted at a retail price of $199.00 in October 2012. The Intel i7 3970X debuted a month later at the retail price of $1,000.00.

I just dropped the mic.

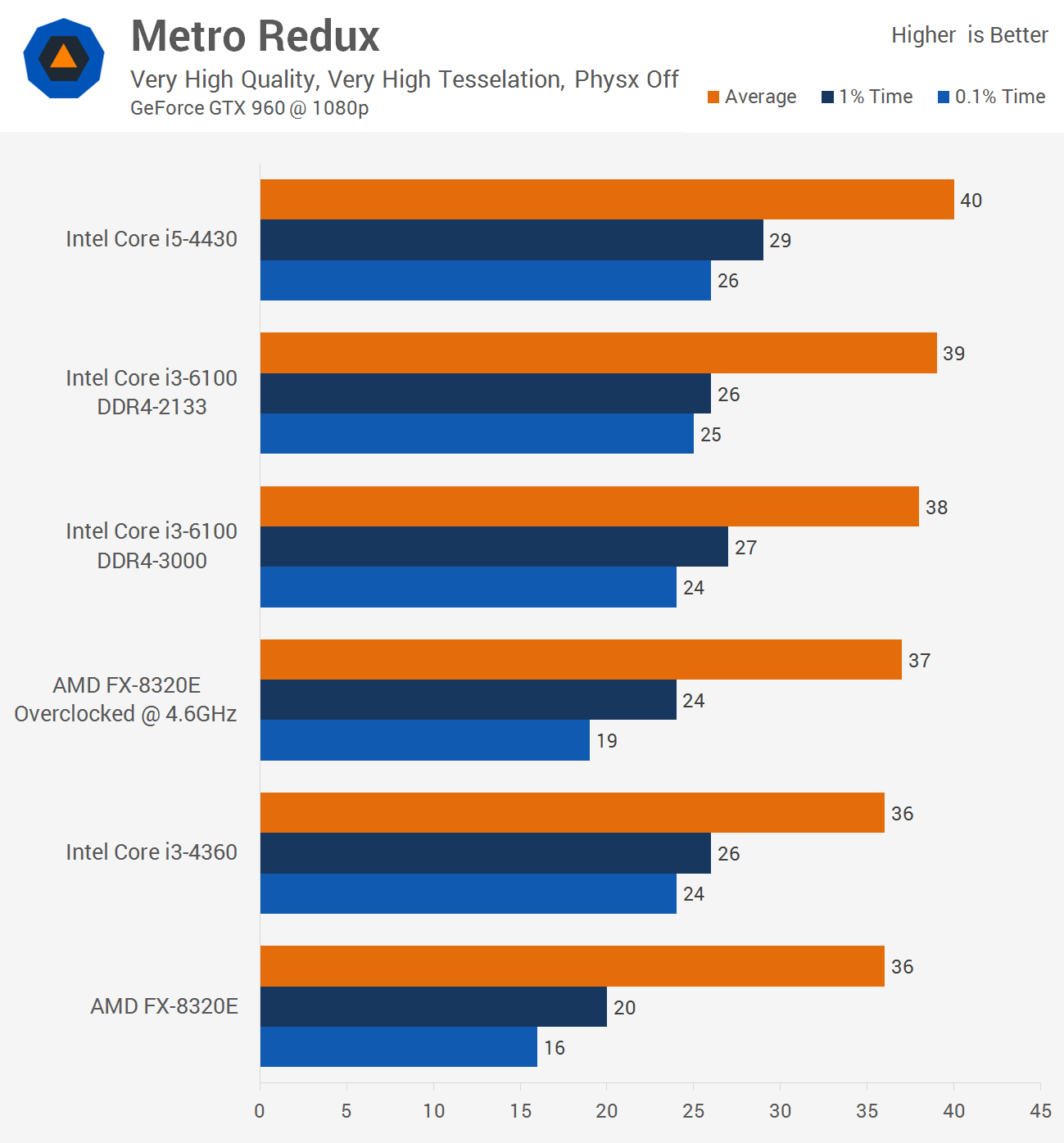

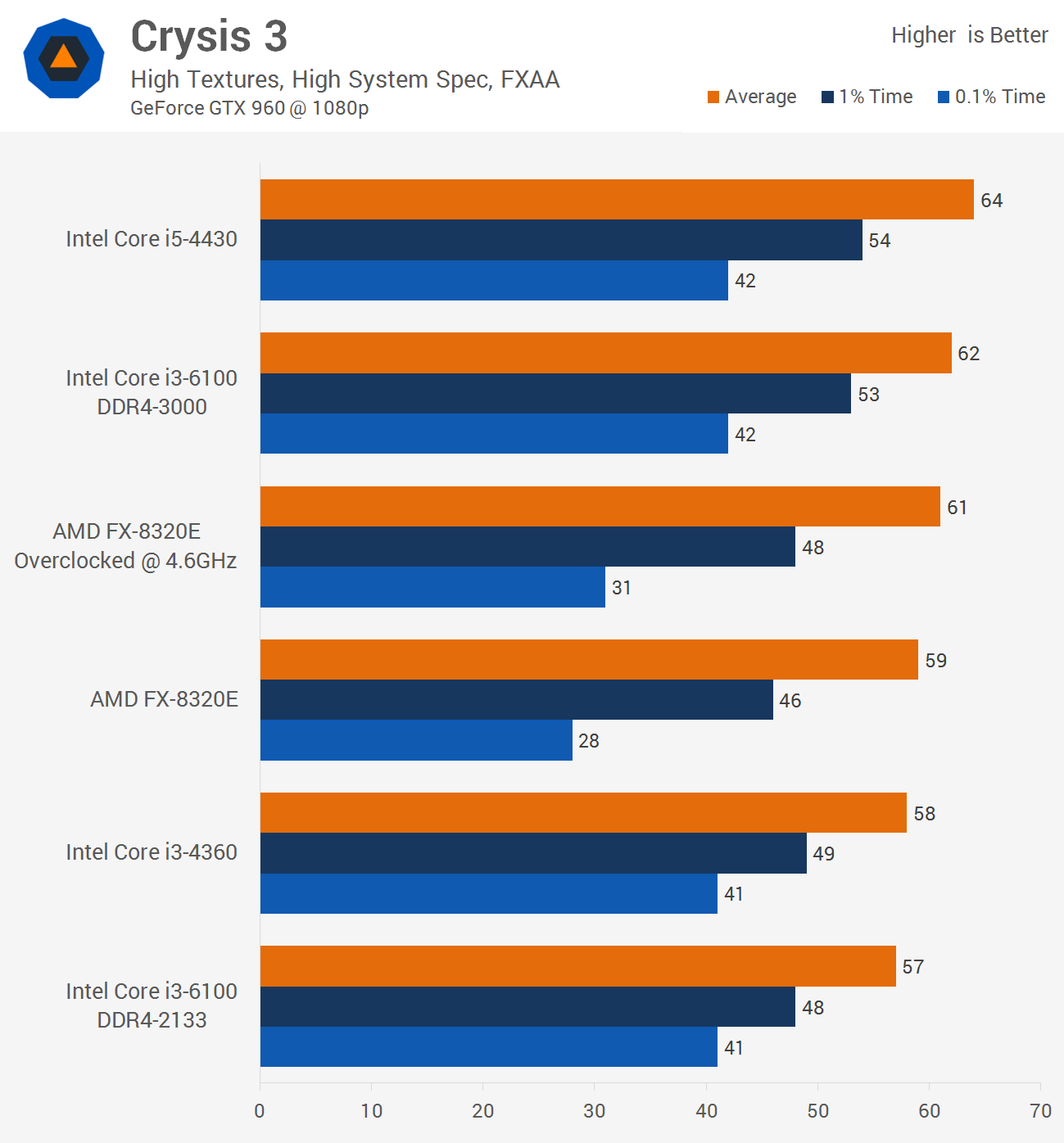

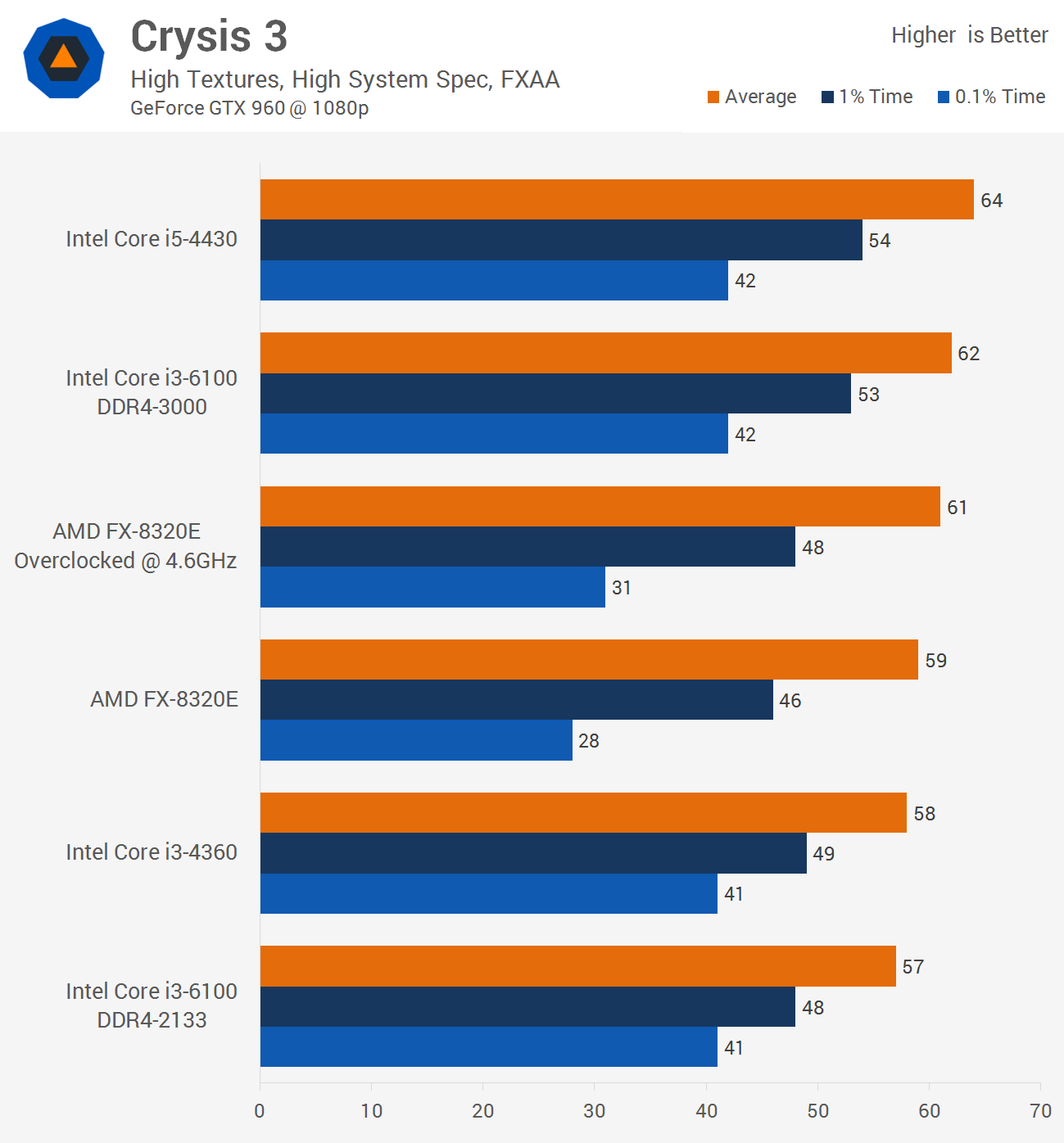

Bingo! We have a winner here. A GTX 960 running Crysis 3 at high settings @1080p is just not enough to show any CPU advantage that comes from overclocking.

You know what? Using the R9 380 + the 8-core CPU will be much better than the Core i3 + GTX 960 in games that support Mantle or in DX12 titles.

So if we had the same review with the AMD FX8320E + R9 380 it would be faster and with lower frame-times than the Core i3 6100 + GTX960 on Battlefield 4 MP, Thief, Civilization BE etc.

Shame they didn't include a Tonga GPU as well.

The FX is getting thoroughly beat in games. They also tested the i3-6100 with 2133MT/s and 3000MT/s RAM. I would like to see how low timing 2133MT/s performs in comparison though.

We know from Digital Foundry that AMD GPUs suffer a bigger loss when moving from an i5 to an i3 in DX11 games, but I would like to see this scenario with slower i5s and AMD CPUs as well.

If I weren't going to upgrade or do a new build for three or four years and had no interest in OCing, I'd get a cheaper socket 1150 i5 and reuse some DDR3. Newegg has an i5 4460 for $177. At least that's what I would do if it were down to just the CPUs in that Techspot review.

Does anyone know if they used the Metro Last Light Redux benchmark? Because at the same settings they used in the review, the FX8150 + HD7950 @1 GHz is way faster than the Core i5 + GTX 960 of the review.

I get 56fps average vs 40 on the Core i5 + 960.
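For what it's worth, that gap works out to roughly 40%. Here is a quick sketch of the arithmetic, assuming both averages really come from the same benchmark and settings (the review doesn't confirm that). The function name is made up for illustration.

```python
def percent_faster(a_fps: float, b_fps: float) -> float:
    """Return how much faster a is than b, as a percentage of b."""
    return (a_fps - b_fps) / b_fps * 100.0


# Averages quoted in the post above; comparability of the two runs is an assumption.
fx_hd7950_fps = 56.0  # FX8150 + HD7950 @ 1 GHz
i5_gtx960_fps = 40.0  # Core i5 + GTX 960 from the review

gap = percent_faster(fx_hd7950_fps, i5_gtx960_fps)
print(f"{gap:.0f}% faster")
```

If the review used a gameplay scene or a different Metro title, the two numbers aren't comparable and the percentage means nothing.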

My guess is that it's the Metro Last Light Redux built-in benchmark, but yes, that's pretty poor. It could also be a gameplay scene, or even a different game (Metro 2033 Redux). The lack of clarity on how these people test games is pretty bad.

Wow, in typical AT fashion, the defense and cherry-picking for i3s is stronger than ever. Let's just turn a blind eye to all the popular AAA games where the FX performs well and to all multi-threaded non-gaming applications, because apparently 99.9% of computer users just play games and nothing else. :sneaky:

AnandTech's CPU sub-forum has become an utter embarrassment of tech knowledge and extremely biased cherry-picking since I joined this forum.

You cannot just focus on games where an i3 does well and ignore games where the FX would trounce it handily. That's not an objective comparison.

http://www.techspot.com/review/1087-best-value-desktop-cpu/

The FX is getting thoroughly beat in games.

This is an entirely inappropriate post.

Did you even click the link in the OP? The single game benchmark that the OP included was actually fairly middle of the pack. If he had chosen a newer AAA title, like GTA5, the FX would have been shown in an even worse light.

Plus, the OP's comments echoed the findings of the linked article.

Funnily enough, the bench you posted shows that none of the CPUs could even manage 30 fps minimums at 1080p, implying the game is heavily GPU limited. I guess playing at sub-30 fps minimums, with drops to 24-25 fps, is more cinematic on a Skylake i3. I guess it's the better CPU for a cinematic PC gaming experience when paired with a GTX 960. Sounds like a great gaming PC on the brink of 2016.

What about the cost of buying the new motherboard, DDR4 and CPU in order to upgrade to Skylake?

Aren't you in tunnels all of the time in Metro? Only encountering groups of monsters? I only played it a bit, but limited view distance and not many objects make for a light game. Funny thing is that this game runs at 60 FPS on the consoles most of the time.

Yes, it did. All the E is, is an FX-8300 (debuted in Japan in 2012) with a different multiplier. Same chip; the only difference is more mature yields. The FX-8320E is a rebrand, much like the Radeon 300 series is to the Radeon 200 series. The reality is that Vishera is a 2012 design.

What happened to the 4330 and 6330 FX chips?

Aren't you in tunnels all of the time in Metro? Only encountering groups of monsters? I only played it a bit, but limited view distance and not many objects make for a light game.

The in-game benchmark is intense; the game itself is a corridor shooter, or at least that is what I saw.

Are there AAA games where AMD's FX series doesn't perform well? Absolutely.

Are there AAA games where an i3 doesn't perform well? Absolutely. have just jumped into the future and purchased a Skylake i3, right?

That is a very brave thing to say, looking at the poorly optimized titles we got over the last few years. What's more, you would be a fool to pick a dual-core CPU going into the future! How is it the best bang for your buck when you have to upgrade in a year or two because it can't handle the better optimized games of tomorrow?

Because you spend only half the money on a dual core, and in 1-2 years you will be able to get a dual core that is 1-2 generations newer with the money you saved.