So by blowing the TDP budget, or waiting an extra 3 years for 14nm to be viable. Sounds like a great solution.

Not really. Just look at what mobile Maxwell can do with an Intel CPU.

I'm saying that Intel and Nvidia are entering into bad deals now while trying to break into proven markets, which are much bigger than the consoles, while AMD is entering into a bad deal today that won't generate further benefits in the future.

Beyond the console chips, what other players do you think might be attracted to similar deals with AMD? That's right, none. Almost nobody needs bleeding-edge graphics, and custom solutions can't compete with off-the-shelf solutions on cost for anyone who needs some graphics capacity. The console deals are really a two of a kind and unlikely to generate further benefits for AMD, except making it a top contender for the next generation of console chips.

Last year AMD was floating semi-custom deals in the pipeline of around 500MM; now they are floating 100MM deals, with nothing to be seen so far. It is not a big market.

And AMD is entering a decent deal now while trying to win mind share in an existing and proven market. It goes beyond just the consoles. How many kids are going to unwrap their shiny new console on Christmas morning and then see AMD's name every time they play a game for the next five years? That could translate into sales with future PC gamers.

I am sorry, where is that AMD name on consoles? My previous Wii and my current Wii U don't show any such thing. Is it showing on the PS4 or the Xbox One?

Being an embedded supplier usually means being completely anonymous.

1. Maxwell reached the market more than 12 months after the consoles.

2. How much does Maxwell cost?

3. How much does an Intel CPU cost?

4. How high is the TDP for both of them?

5. Why would Microsoft want to make another console deal with nV if AMD has what they need?

I don't believe there is an AMD logo on the PS4 or XBone, but a lot of people already know the SoC is designed by AMD.

Edit: Even the SoC die doesn't have a laser-cut AMD logo on it.

A discrete GPU and CPU means going back to a split memory model with higher costs for communication between GPU and CPU. An integrated design offers a lot of theoretical performance benefits, which console games will no doubt exploit in the years to come.

The real alternative would have been an APU from either NVidia or Intel, but 64-bit ARM cores weren't ready in time for this generation, which knocked Nvidia out of the running, and Intel GPU tech isn't that competitive compared to NVidia or AMD.

Using a more powerful core doesn't exclude using many cores on a given chip. I think that the real problem, as you stated in the first place, is that the console chips of the current generation are designed to be very low-cost solutions. Time, Costs, Scope: pick two and send the other to the sacrificial chamber, and this time it was Scope.

I don't know why all the bickering about the CPU is necessary when it is obvious that Ubisoft is incompetent. Why would you design a game that cannot run well on the current game consoles in the first place? It would be like saying that current-gen consoles are bad because they can't do real-time ray tracing. This is a simple ploy to distract us from the parity comment and shift blame. Clever marketing.

What about something like a G1820, but a quad-core custom version with an extra 1MB of cache, matched to a custom AMD dGPU? I wonder if MS actually approached Intel and tried to work out a decent chip option? So far those theoretical benefits are just that: theoretical. The CPU just doesn't have enough puff.

Thing is, the game had been in development since long before the PS4 and Xbox One became available. The AC games are handled by multiple development teams working in cycles.

Whatever AC game is coming out next year has probably already been in development for a couple of years.

OK, I'll admit that the incompetent comment was unfair, and I acknowledge that making games takes a lot of time and effort, especially for a game of this scope. I will, however, stick to the point that they are using the CPU as a scapegoat, and boy does the internet like its goats.

Did you ever think that they could actually have exceeded the CPU capacities of both console chips?

Funny how every comment by the developers about how great the consoles were going to be and how they would be better than a top end PC, etc., were greeted with total acceptance by a certain crowd, but now that a developer criticizes the hardware, it is totally dismissed as a lie or incompetence. And it may be true that Ubisoft is making excuses for their poor programming. It just seems a bit over the top, the outrage flowing from this thread just because somebody said something critical about a console chip.

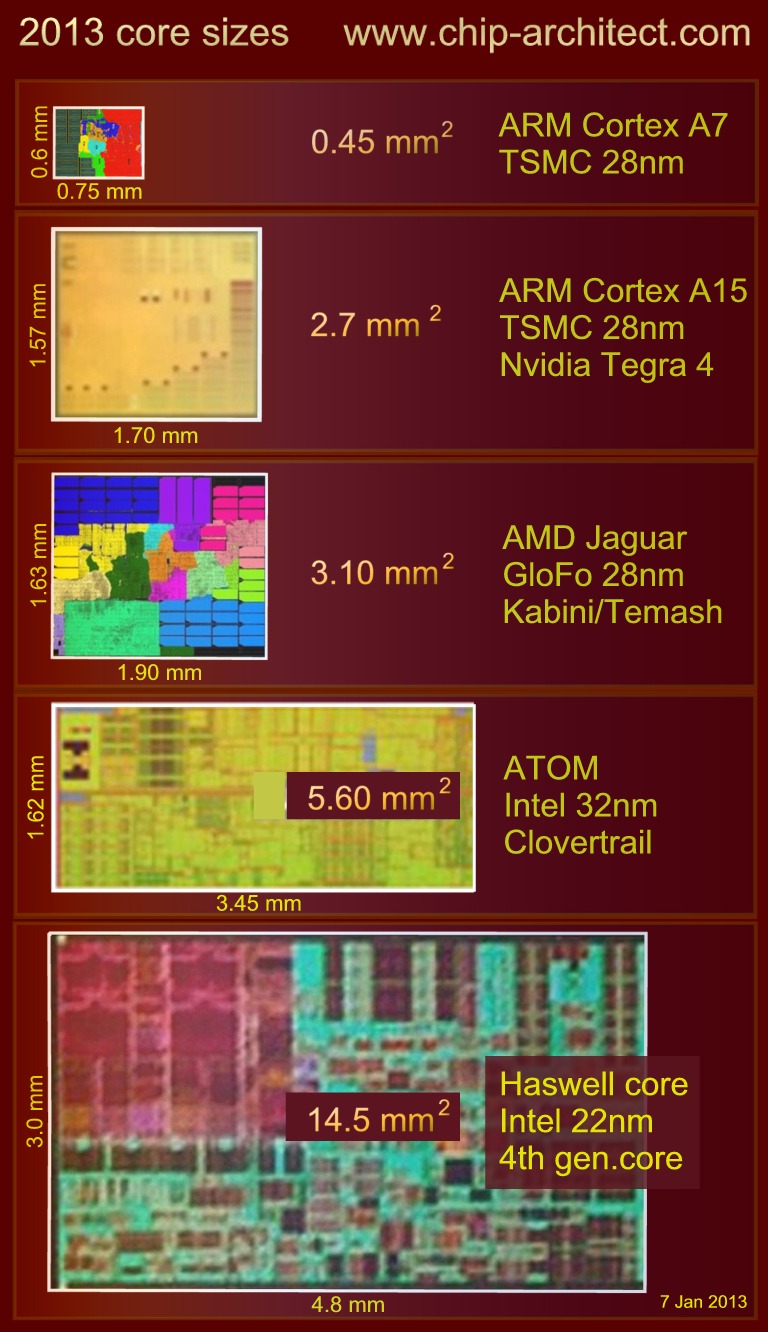

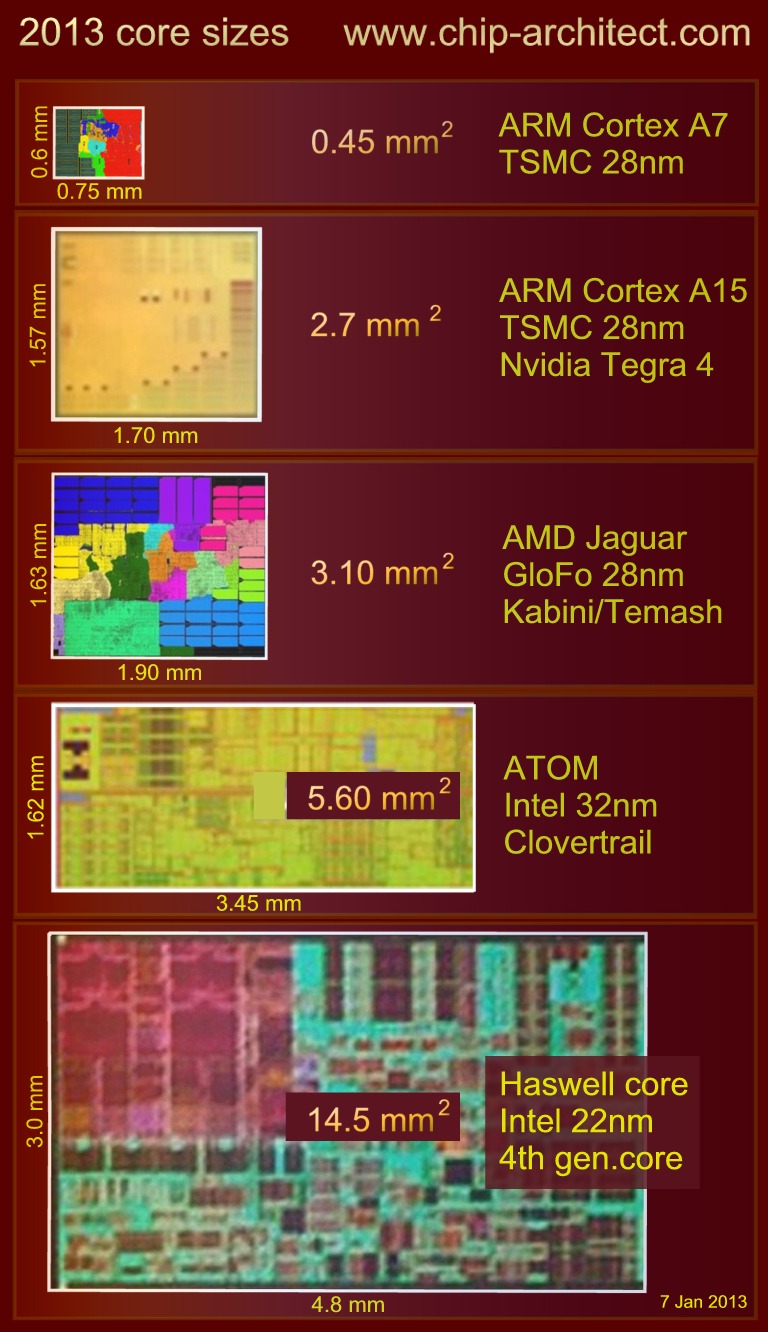

A single Haswell core at 22nm is the same size as 4x Jaguar cores at 28nm. So the overall die size of the SoC would be even bigger, translating to higher cost. I don't think MS and Sony would like that.

That post got me thinking for a moment, trying to name a prime example of work that can't be parallelized in the context of a modern game. Sadly, not every job is as parallel (and almost free of interdependencies) as rendering or ray tracing. Those have always been super parallel.

Running real stuff, even with nominally parallelizable algorithms, is going to incur overhead, and sadly the less powerful the cores, the bigger the overhead fraction becomes (because some stuff like getting data into caches, synchronization, extra copies, etc. has a fixed cost).
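To put rough numbers on that, here is a toy model in Python. It is only a sketch: the function names, the cost model (a fixed coordination cost per core that does not shrink with core speed) and every number in it are made up for illustration, not measurements of any console or game. It compares a few fast cores against many slow cores with the same aggregate throughput.

```python
def frame_costs(work, cores, core_speed, sync_cost):
    """Split one frame's time into compute and coordination overhead.

    work       -- total perfectly parallel work, in ms on a speed-1.0 core
    cores      -- number of cores the work is spread over
    core_speed -- relative per-core speed (1.0 = reference core)
    sync_cost  -- hypothetical fixed cost in ms per core for cache fills,
                  synchronization and extra copies (assumed not to scale
                  with core speed)
    """
    compute = work / (cores * core_speed)
    overhead = sync_cost * cores
    return compute, overhead


def overhead_fraction(work, cores, core_speed, sync_cost):
    """Share of the frame time that is spent on fixed overhead."""
    compute, overhead = frame_costs(work, cores, core_speed, sync_cost)
    return overhead / (compute + overhead)


# Two made-up chips with identical aggregate throughput (cores * speed = 4).
few_fast = overhead_fraction(16.0, cores=2, core_speed=2.0, sync_cost=0.25)
many_slow = overhead_fraction(16.0, cores=8, core_speed=0.5, sync_cost=0.25)

print(f"2 fast cores: {few_fast:.0%} of the frame is overhead")
print(f"8 slow cores: {many_slow:.0%} of the frame is overhead")
```

In this model the pure compute time is the same on both chips, so the whole difference comes from paying the fixed cost more times. Real workloads also have serial sections and dependencies between jobs, which this sketch ignores.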

Why don't you link all the devs that celebrate the 6-8 weak cores? We have examples of devs complaining that they are too slow. So it would be nice if you could list those that think it's the opposite.

And spending time to optimize for slow cores = more money, more time and more work. I am sure everyone loves that as well.

The team anticipated "a tenfold improvement over everything AI-wise," Pontbriand said, but they were "quickly bottlenecked."