It's not just about yield, it's about the design itself, which is very expensive - GPUs tend to be a bit simpler, but not enough to drastically counter the increase in spending on the silicon design process (i.e. all the masks and whatnot that come before the initial tape-out stage).

This reminds me: I wouldn't be surprised if AMD does away with chiplets and goes back to a monolithic die. They are working with what is now a very mature process, and they can easily bin chips based on silicon quality (as mobile is demonstrating).

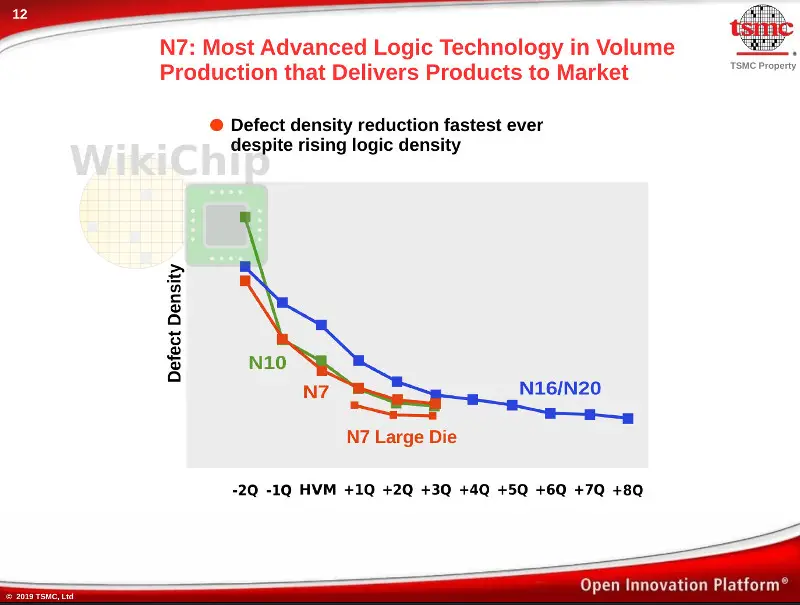

Apparently this has shot way up for 7nm, and 5nm+ will only get worse - I don't know if EUV will reduce the complexity of this for them much or at all.

Chiplets also allow them to tune each portion of the chip to a specific process for max performance/power. AMD have said before that having the APU on one process is not ideal, as the CPU and GPU can perform better if tuned separately - I can't remember the exact words they used, but it could have been as far back as Carrizo or Kaveri.