Someone explain the AMD Nvidia DX12 difference?

- Thread starter desura

- Start date


The difference comes down to how DX11 and DX12 differ from each other, and how developers have to write code for different IHVs to get maximum performance.

Nvidia had a great presentation on DX12 (S6815 - Advanced Rendering with DirectX®) that goes quite deep. (It needs a free registration, but there is a huge number of presentations.)

https://twitter.com/FilmicWorlds/status/751089390290472961


poofyhairguy

Lifer

- Nov 20, 2005

- 14,612

- 318

- 126

The way I think about it is this:

DirectX 11 (and more specifically the DirectX 11 driver) is the way PC GPUs have always worked since the post-3DFX days. The driver is a middle man between the game and the wide variety of GPUs out there. The middle man doesn't expose all the connections to the GPU (like any good middle man that wants to keep his position in life) but he facilitates the use of the GPU to a "good enough" point that we can all play games, and he takes care of edge cases (old GPUs, unpopular GPUs) so they work just like everything else (what middle men call "customer service").

DirectX 12 is how consoles have always operated. All of the connections to the GPUs are exposed and you don't have a middle man in the middle. Without the middle man some edge cases (unpopular GPUs) might not work as well simply due to a lack of emphasis, and the "experience" of the middle man fixing a hundred little issues that are just practical parts of the transaction is also lost and can only be replaced by more due diligence from the programmers who connect to the GPUs.

To go further with the analogy, Nvidia's DirectX 11 middle man is toned like an Olympic athlete, while AMD's middle man looks like the fat slobby guy in the local mattress store commercials. AMD benefits more than Nvidia from getting rid of ALL middle men, because its middle man is the fat slob. AMD also benefits more from the fact that the middle man on EITHER side isn't handling the edge cases in DirectX 12 like he did in DirectX 11. AMD cards have more hardware "surplus" (compute resources or VRAM) on average than the competing Nvidia counterparts, so they have more margin to handle the crappy DirectX 12 port that eats 6GB of VRAM just because, when the DirectX 11 version only uses 3.5GB because the fit Nvidia middle man is using all his strength to optimize to that result.

Add in the fact that most game programmers are console programmers who aren't used to the middle men, and who are used to how AMD's GPUs work without that middle man, and AMD gets a double boost from DirectX 12. And that isn't even considering hardware features like Async that neither DirectX 11 middle man could handle properly. Suddenly it's like the media industry post-Napster and post-Netflix, and we are all wondering why we ever had middle men to begin with. Well, except for the edge-case people who miss real top 40 radio stations or who couldn't figure out how to rent a movie when the Blockbuster closed; those are the people pining for that fit Nvidia middle man who made their life easy.


VirtualLarry

No Lifer

- Aug 25, 2001

- 56,353

- 10,050

- 126


LOL. Post of the year in VC&G.

n0x1ous

Platinum Member

- Sep 9, 2010

- 2,572

- 248

- 106


epic :thumbsup:

tviceman

Diamond Member

In layman's terms: AMD currently has a better finesse approach to DX12 while Nvidia is relying on brute force. Nvidia's Pascal chips do better than their previous Maxwell chips at DX12 performance, but AMD currently gets the biggest percentage boost when certain DX12 features are implemented.

However, because Nvidia cards are currently generally faster than AMD cards, it is important to examine whether or not the potential boost in DX12 is worth the trade off when not getting the boost in DX11 or other DX12 games that don't feature certain algorithms.


Poor blockbuster...

poofyhairguy

Lifer

- Nov 20, 2005

- 14,612

- 318

- 126


The saddest thing was when my brother-in-law called me one day when his local Blockbuster closed. He had this desperate tone in his voice, "how will I rent movies for the kids?!?" "Uh, Redbox or Netflix?" He hadn't heard of either.

He doesn't PC game, but if he did it wouldn't be hard to guess what team color he would prefer.

renderstate

Senior member

- Apr 23, 2016

- 237

- 0

- 0

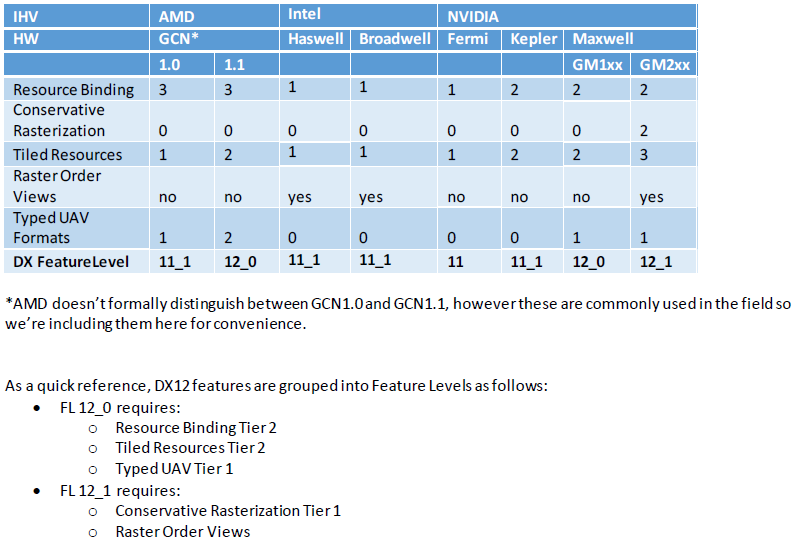

Also AMD still doesn't support very important DX12 features like conservative rasterization and raster order views. Unfortunately even Polaris doesn't support them.

Arachnotronic

Lifer

- Mar 10, 2006

- 11,715

- 2,012

- 126


Really? That's a bummer.


Those aren't important DX12 features, they are available in DX11 as well, and considering how much of a hit nvidia cards take when trying to use them for VXAO / HFTS they don't seem worth it at all.

VXAO:

https://www.computerbase.de/2016-03/rise-of-the-tomb-raider-directx-12-benchmark/3/

~22% slower than HBAO+

HFTS:

https://www.computerbase.de/2016-03/the-division-benchmark/

~22% slower than HBAO+

Both for changes that are almost impossible to spot while playing the game. Personally I'd rather have hardware features that improve performance than ones that decrease it.

So essentially useless features that degrade performance by 20+% are a must for some people.


/thread

renderstate

Senior member

- Apr 23, 2016

- 237

- 0

- 0

Those features are NOT available on DX11, they were introduced in DX12 and GCN does not support them.

Conservative rasterization is a cornerstone of computer graphics and it's used in hundreds of algorithms. Saying that it's useless because it is used in some algorithm you don't like because it runs on green HW makes no sense.

Raster order views are also a very powerful new DX12 feature that makes it possible to build a lot of new algorithms which were completely impractical before.

You can't reject innovation simply because your favorite company doesn't support it yet.

BTW, even Intel supports those features in HW. AMD is having problems keeping up, but I am hopeful Vega will rectify this situation.


MajinCry

Platinum Member

- Jul 28, 2015

- 2,495

- 571

- 136

MY TURN! MY TURN!

The Direct3D and OpenGL APIs are very high level. By removing much of the work on the part of the programmer, development time is reduced. But that comes at a cost; performance is dreadful. This manifests almost entirely through draw calls, a draw call being a piece of information that goes from CPU -> GPU.

The common threshold among developers, for Direct3D 9, is around 3,000 draw calls. For Direct3D 11, it's 6,000 at best. As an example, Crytek recommends that you stay around 2,000 draw calls with their engine.

With Direct3D 11, NVidia has spent a significant amount of time and money towards multithreading their driver for specific games, allowing for the general performance limit to be raised. So if the traditional limit for a specific renderer is 2,000 draw calls, and NVidia has developed the driver to accommodate the renderer, it should scale upwards with core count.

On a four core system, the performance guideline becomes 2,000 * 4 = 8,000 draw calls. Even so, it's still not all that high. Typically, an object will require at least five draw calls to be drawn in a deferred renderer. The sword your character is holding? +>5 draw calls. That rock at the character's feet? +>5 draw calls. The character's eyes? +>5 draw calls. A generic house? +>5 draw calls. Etc., etc.

And that all adds up, especially with many objects close together and/or large objects, due to lighting on the objects (+>1 draw call for each shadowmap being drawn on an object, same again for lights).
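A rough back-of-the-envelope sketch of the arithmetic above, in Python. The function name and its parameters are made up for illustration, and it simply takes the guideline numbers from this post at face value (2,000 draw calls per core, at least five per object, at least one more per shadowmap and per light). Since those per-object figures are minimums, the result is an upper bound on how many objects fit in the budget.

```python
def max_objects(calls_per_core, cores, base_calls_per_object=5,
                shadowmaps=0, lights=0):
    """Upper bound on visible objects for a given draw call budget.

    Hypothetical helper: assumes the budget scales linearly with core
    count and that each shadowmap and each light adds exactly one
    extra draw call per object (the post says "at least" one).
    """
    total_budget = calls_per_core * cores
    calls_per_object = base_calls_per_object + shadowmaps + lights
    return total_budget // calls_per_object


# Single-threaded guideline of 2,000 calls, no extra lighting passes.
print(max_objects(2000, cores=1))                        # 400
# Four cores with a multithreaded driver: 8,000 calls.
print(max_objects(2000, cores=4))                        # 1600
# Same budget, but 2 shadowmaps and 3 lights touch every object.
print(max_objects(2000, cores=4, shadowmaps=2, lights=3))  # 800
```

So even the optimistic four-core case leaves room for well under two thousand simple objects, which is the point being made about the old APIs.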

With Mantle/Vulkan/Direct3D 12, however, draw calls are pretty much a non issue, as has been the case for consoles for quite some time. Multithreading the driver's handling of draw calls is trivial, and even without parallelization, the performance limit is, what, 20,000 draw calls per core? 50,000?

So even with the draw calls being handled by a single core, there is still way more CPU time available than on, say, a multithreaded Direct3D 11 driver. And since it's relatively easy to add in parallelized draw calls for the driver, AMD isn't behind NVidia in this regard.

Whew.

As a wee side note, Intel CPUs are much better at handling draw calls than their AMD equivalents.

Boris Vorontsov did a bunch of tests a while back, around 2012 IIRC, and found that an Intel CPU was able to handle >3x as many draw calls as the AMD equivalent (e.g., i7 920 vs PhII 965 BE, pretty much the same perf outside of draw calls @ stock vs stock) at a given threshold.

Even did an unintentional comparison for Fallout 4. In a spot where I was getting ~24fps with around 7k draw calls, a lad with an i7 920 was getting a solid 60fps, with the same number of draw calls, at the same spot.
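To put that Fallout 4 anecdote in per-draw-call terms, here is a small Python sketch. It makes the strong assumption that the whole frame time is spent submitting draw calls, which is certainly not exactly true, so treat the numbers as a ceiling on per-call cost. The helper name is hypothetical.

```python
def us_per_draw_call(fps, draw_calls):
    """Microseconds of frame time available per draw call.

    Assumes the frame is entirely draw-call-bound, which overstates
    the real per-call cost.
    """
    frame_time_us = 1_000_000 / fps
    return frame_time_us / draw_calls


slow = us_per_draw_call(24, 7000)   # the ~24fps machine
fast = us_per_draw_call(60, 7000)   # the i7 920 at a solid 60fps
print(f"{slow:.2f} us vs {fast:.2f} us per call, ratio {slow / fast:.1f}x")
```

Under that assumption the two machines differ by about 2.5x per call. If the 60fps figure was capped by vsync, the real gap could be larger.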

And, as ya can guess, the new APIs also turn this draw call deficit into almost a non-issue.


Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76

All you need to know is that Mantle is the foundation of Vulkan, and DX12 and Vulkan are highly similar, like conjoined twins. Therefore, AMD's GCN is ideally suited to the next-gen APIs.

Nobody here can say with a straight face that AMD GPUs will be worse at DX12 or Vulkan. Even the biased posters here who despise AMD, I doubt they can muster up the extreme ignorance to say AMD is worse at these APIs.

The rest is academic.


So essentially useless features that degrade performance by 20+% are a must for some people.

I agree. However it looks like AMD did have plans for Conservative Rasterization...

And one of them is primitive discard acceleration, which in no uncertain terms is AMD's way of revealing that its upcoming GPUs will add support for additional key DirectX 12 features such as Conservative Rasterization.

Conservative Rasterization may well be next to useless, but I guess AMD doesn't want another issue with GameWorks pushing it just to make them look bad artificially again, like with tessellation.

digitaldurandal

Golden Member

- Dec 3, 2009

- 1,828

- 0

- 76

I tried to look at what conservative rasterization does but due to weekenditis I surrendered. Any chance of a simplified explanation of these DX12 features?

Also, does Pascal support hardware Async or no? Do we know yet?
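For a simplified picture of conservative rasterization, here is a toy Python sketch (not how any GPU or DX12 implements it). Standard rasterization marks a pixel as covered only when the pixel center falls inside the triangle. Conservative rasterization, in its overestimating form, marks every pixel the triangle touches at all. The function names are made up, the overlap test is a plain separating-axis check, and fill rules and the hardware tiers are ignored.

```python
def _edge(a, b, p):
    # Signed area test: which side of edge a->b the point p lies on.
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def center_covered(tri, x, y):
    """Standard rule (simplified): is the pixel center inside the triangle?"""
    p = (x + 0.5, y + 0.5)
    w = [_edge(tri[i], tri[(i + 1) % 3], p) for i in range(3)]
    return all(v >= 0 for v in w) or all(v <= 0 for v in w)


def _project(points, axis):
    dots = [px * axis[0] + py * axis[1] for px, py in points]
    return min(dots), max(dots)


def pixel_overlaps(tri, x, y):
    """Conservative rule: does the 1x1 pixel square overlap the triangle?"""
    square = [(x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)]
    axes = [(1, 0), (0, 1)]                      # pixel edge normals
    for i in range(3):                           # triangle edge normals
        (x0, y0), (x1, y1) = tri[i], tri[(i + 1) % 3]
        axes.append((y0 - y1, x1 - x0))
    for axis in axes:
        tmin, tmax = _project(tri, axis)
        smin, smax = _project(square, axis)
        if tmax <= smin or smax <= tmin:         # separated on this axis
            return False
    return True


def rasterize(tri, width, height, conservative=False):
    test = pixel_overlaps if conservative else center_covered
    return {(x, y) for y in range(height) for x in range(width)
            if test(tri, x, y)}


# A tiny triangle that sits inside pixel (0, 0) but misses its center.
tiny = [(0.1, 0.1), (0.4, 0.1), (0.1, 0.4)]
print(rasterize(tiny, 4, 4))                     # set()
print(rasterize(tiny, 4, 4, conservative=True))  # {(0, 0)}
```

The tiny triangle vanishes under the standard rule and survives under the conservative one. That "never miss anything the triangle touches" guarantee is why the feature matters for things like voxelization, which is what effects such as the VXAO mentioned earlier in the thread are built on.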



They aren't supported in DX11? Really? Why would you say that? Considering their only use so far has been in DX11 games. I already showed their amazing use in my last post. 20+% loss in games that use their features with almost no IQ gain in a blind test.

http://www.geforce.com/whats-new/gu...guide#tom-clancys-the-division-shadow-quality

http://www.dsogaming.com/news/direc...ill-be-packed-with-a-number-of-dx12-features/

Or maybe you are right, they aren't actually available and Nvidia is once again lying in their articles. Maxwell w/ Async compute comes to mind.

And there are parts that AMD supports better, and Nvidia supports better:

http://www.extremetech.com/extreme/...what-amd-intel-and-nvidia-do-and-dont-deliver

Intel doesn't support Conservative rasterization, and Nvidia supports 2 of the 3 tiers:

https://msdn.microsoft.com/en-us/library/windows/desktop/dn914594(v=vs.85).aspx

Oh yeah, and that's the DX11 version too, so that's 3 sources saying it works in DX11...

Also it doesn't exist in Vulkan, as it's an Nvidia proprietary extension, not a standard one:

https://forums.khronos.org/showthread.php/12997-Why-no-Conservative-Rasterization-in-Vulkan


sirmo

Golden Member

- Oct 10, 2011

- 1,012

- 384

- 136

Simplest way I can put it: instead of the CPU doing all the computational tasks in a serial fashion and the GPU doing all the rendering tasks, DX12 allows a lot of the CPU's work to be offloaded to the GPU, which has thousands of cores.

End result is no more CPU bottleneck, and better GPU utilization, which means better final result.

The cons are that it is still a relatively new technology, and developers are still learning the ropes on how to best utilize it.

AMD's GPUs have more computational power, while Nvidia's GPUs are more streamlined for the old way of doing things and have more transistors dedicated to graphical tasks than computational ones.


AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.