LOL. Eat your heart out, Fermi, there's a new flamethrower in town.

So what happened to the HD 7990?

Jacky60

Golden Member

- Jan 3, 2010

The beauty of the 6990 design is that it allows you to fry eggs on the hard drive cage and, if you're lucky, on your hard drive itself.

The 6990 was a 375W card with a center-mounted blower. Half the heat went out of the case; the other half got shot towards the front of the case. So that's roughly 180W of heat going into the case.
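A quick sanity check of that heat estimate, as a minimal Python sketch. It assumes, as the post does, that exactly half of the 375W board power leaves through the rear bracket; the real split depends on the cooler and case airflow, so the 0.5 fraction is an assumption rather than a measurement, and `heat_into_case` is just an illustrative name.

```python
BOARD_POWER_W = 375.0   # HD 6990 board power as quoted in the post
EXHAUST_FRACTION = 0.5  # assumption: half the heat exits the rear bracket


def heat_into_case(board_power_w: float, exhaust_fraction: float) -> float:
    """Watts of heat left inside the case after the exhausted share."""
    return board_power_w * (1.0 - exhaust_fraction)


print(heat_into_case(BOARD_POWER_W, EXHAUST_FRACTION))  # 187.5
```

An even split gives 187.5W, in the same ballpark as the post's "roughly 180W".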

Eh... using your own numbers, I came to a different conclusion.

6990 retains 350/850 = 41% of its original price

580 retains 200/565 = 35% of its original price

I compare percentage-wise because otherwise it's an apples-to-oranges comparison. A car can depreciate thousands of dollars annually, while a graphics card has an annual depreciation of 200 dollars. By and large, cars still retain their value much longer than graphics cards.
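The retained-value percentages above can be reproduced with a short Python sketch. The purchase and resale prices are the ones quoted in the post; `retained_fraction` is just an illustrative helper name.

```python
def retained_fraction(resale: float, original: float) -> float:
    """Fraction of the original price recovered on resale."""
    return resale / original


# (resale price, original price) in USD, as quoted in the post
cards = {"HD 6990": (350, 850), "GTX 580": (200, 565)}

for name, (resale, original) in cards.items():
    kept = retained_fraction(resale, original)
    # the :.0% format multiplies by 100 and rounds to a whole percent
    print(f"{name}: retains {kept:.0%}, loses {1 - kept:.0%}")
```

This prints "HD 6990: retains 41%, loses 59%" and "GTX 580: retains 35%, loses 65%".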

ViRGE

Elite Member, Moderator Emeritus

- Oct 9, 1999

And yet they put on an HDMI port and only 2 miniDP ports. Morons.

They should have gone all DP, or at least used display outputs from both GPUs. As it stands, it can only drive three 120Hz or 2560-wide displays: 2 miniDP + 1 DL-DVI.

3x 8-pins + 75W PCIe power = 412.5W peak? Ouch.

Try again. 3x150 + 75 = 525W.
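The corrected figure follows from the per-connector limits in the PCI Express CEM specification: 75W from an x16 slot, 75W per 6-pin and 150W per 8-pin connector. A minimal Python sketch of that budget, treating it as a spec ceiling rather than measured draw; `max_board_power` is a hypothetical helper.

```python
from typing import Iterable

# Per-source power limits (watts) from the PCIe CEM specification
CONNECTOR_W = {"slot": 75, "6-pin": 75, "8-pin": 150}


def max_board_power(connectors: Iterable[str], include_slot: bool = True) -> int:
    """Sum the spec ceilings of the auxiliary connectors plus the x16 slot."""
    total = sum(CONNECTOR_W[c] for c in connectors)
    if include_slot:
        total += CONNECTOR_W["slot"]
    return total


print(max_board_power(["8-pin"] * 3))  # 525, three 8-pins plus the slot
print(max_board_power(["8-pin"] * 2))  # 375, two 8-pins plus the slot
```

The two-connector case lands on the 375W figure quoted for the 6990, which also used two 8-pin plugs.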

railven

Diamond Member

- Mar 25, 2010

Wow, if I had stupid money I'd buy one of these just for the box. Sexy design. Time to win the lotto or mug someone: buy one of these for me and a GTX 690 for the GF. :wub:

Arkaign

Lifer

- Oct 27, 2006

Russian's interpretation is typically flawless.

7970 with the newer higher clocks and lower price is quite the attractive SKU, but due to power constraints it doesn't make sense to even mess with a dual-GPU variant.

IMHO, for this round:

AMD > NV for single cards

NV > AMD for dual-GPU and SLI

AMD > NV for three or more GPUs

At least that's the way I look at it, and I'm a very happy owner of a 670 FTW.

ShintaiDK

Lifer

- Apr 22, 2012

Try again. 3x150 + 75 = 525W. Though in practice I doubt it goes too far above 400W.

Damn, my brain wanted to deny that. Our household only uses 3 kWh a day!

Production seems to be between 200 and 500 cards.

exar333

Diamond Member

- Feb 7, 2004

Beat me to it. Before the current gen, 2x top-tier cards usually cost a bit more than the MSRP of the dual-GPU card. That doesn't mean market prices were that, but meh. :/ Anyway, my point was the same: the percentage of value retained was similar. Two 580s or one 590 are worth pretty similar amounts today, and didn't cost a whole lot different when they were purchased. I'm not seeing RS' argument here...no offense. I have resold a LOT of GPUs over the years and just don't see this argument holding water.

Well, looky there. Man, is that thing going to dump all the heat back into the case???

blackened23

Diamond Member

- Jul 26, 2011

This thread comes across as a passive-aggressive and sarcastic attempt at talking smack about AMD. Give it a rest already.

I think the answer to the question at hand is obvious: it's hard to compete with the GTX 690 since it has better TDP characteristics. Nvidia won this round of dual GPU.

Blackened, quite frankly this thread was doing just fine without your analysis of the thread itself. So knock it off with the thread crapping.

-ViRGE

Last edited by a moderator:

96Firebird

Diamond Member

- Nov 8, 2010

It is only "the real question" in your delusional, twisted mind. Give it a rest already. D:

Ah nice edit, keep it up! :thumbsup:

This thread was also doing just fine without attacking Blackened. Personal attacks are never allowed.

-ViRGE

Last edited by a moderator:

Keysplayr

Elite Member

- Jan 16, 2003

Threads like these should never be allowed. I mean, the moxie the OP has to ask whatever happened to AMD's dual-GPU offering for this gen.

Kidding aside, AMD has had one every gen since the 3xxx series, so it's an obvious question, and one that should have been asked quite a while ago, I might add. It's most definitely something I know at least a few members here would be interested in.

And you probably need to rethink the way you interpret posts if you think the OP was passive-aggressive. Nothing, not one iota of aggression, passive or otherwise, is present.

blackened23

Diamond Member

- Jul 26, 2011

Fair enough. Anyway, AMD is probably in flux due to its change of leadership, compounded by the fact that dual 7970s won't beat a 690 in any area except price unless they're clocked at GHz Edition speeds, and we all know that won't happen due to TDP characteristics.

I'm sure bitcoin miners would love it, though.

RussianSensation

Elite Member

- Sep 5, 2003

Yes, but I am not in an accounting class. A consumer loses $$$$ as a result of depreciation, not percentages on a piece of paper. Your math is 100% correct, but what does your wallet lose? Could you have sold a GTX 580 for $350 when the HD 7970 just launched? Yup. What about an HD 6990 for $600 when the 7970 just launched? Unlikely. When the 7970 launched, used 6990s were going for $450-500. A car depreciates much worse than a videocard in monetary terms. That's the key right there: which videocard loses the least amount of $ while you're "renting" it? Sure, in % terms a car holds its value better over 5 years (let's say 40-50% of its retail value), and the 6990 holds its value better using your math, but in the end all that matters is how much $ you actually end up losing, not %s. If you bought a $10 million house and lost 10% of its value, that's a terrible investment. If you bought a $500 videocard and lost 50% of its value, it's manageable.

Using monetary context (which is the only thing that matters when you are spending it), not the accounting definition of depreciation, a 6990 and a car are much worse depreciating assets than the 580 was, since you'd lose more $ owning either of them. What I am saying is that the 6990 loses a lot more value (i.e., money), and it's super hard to time the resale of the 6990 against, say, 2x 6950s when the 7970 launched. Using the same logic, relative to what happened in the past, I bet a gamer is likely to lose more $ buying a GTX 690 than 2x 680s, or a 7990 than 2x 7970 GHz Editions. I've been reselling cards for a long time now and I've come to this conclusion based on my market. I am sticking to what I know in my market; other people should stick to what they know. If they can flip a 690/7990 without losing a lot of value compared to 2x 680s / 2x 7970 GE, then that's what they should be doing. I know I'll be able to resell two 7970s for more than a 7990, so I won't touch the 7990.

I'm not seeing RS' argument here...no offense. I have resold a LOT of GPUs over the years and just don't see this argument holding water.

If, based on your experience, there is no large loss from buying a dual-GPU single card, then that's great.

Plus, I don't use eBay at all because of fees. I get way better resale value on Craigslist and Kijiji. It's incomparably easier to sell a single-GPU card due to the size of the target market. I can go out right now and sell a 680 easily, but it'll be a while before I can readily unload a 690 at a good price in person. This is because the market for people who want a used single-GPU card is huge, compared to the 10-15 people in all of Toronto who'll want a Devil13 card 12 months from now and probably 3-4 people who'll want it 2 years from now. I followed prices of the 4850X2, 4870X2, PowerColor 6870X2, and GTX 295; no one wanted those cards after a new generation came out. Now the 6990 is only going for $350, but I doubt the seller will even get that, which is a $500+ loss in $$$, not %s. Used 6950s go for $180-200, which means $170-175 real resale. That means the 6990 was a terrible option vs. unlocked HD 6950s, which only cost $565 and resold for $350.

I think the takeaway here is that people should stick to their personal experience. If, in their market, they can sell a dual-GPU card without a significant monetary loss against 2 single cards, then this doesn't present an issue.
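The dollars-versus-percent point can be made concrete with a short Python sketch. It assumes the HD 6990's $850 purchase price from the earlier 350/850 calculation applies here, and uses the $565 paid / $350 resold figures quoted for the pair of unlocked HD 6950s; `ownership_cost` is an illustrative name, not anything from the post.

```python
def ownership_cost(purchase: float, resale: float) -> float:
    """Real cost of owning a card: what you paid minus what you got back."""
    return purchase - resale


# (price paid, resale price) in USD; the 6990 price is an assumption
options = {
    "HD 6990": (850, 350),
    "2x HD 6950 (unlocked)": (565, 350),
}

for name, (paid, resold) in options.items():
    lost = ownership_cost(paid, resold)
    print(f"{name}: ${lost:.0f} lost, {resold / paid:.0%} retained")
```

This prints "HD 6990: $500 lost, 41% retained" and "2x HD 6950 (unlocked): $215 lost, 62% retained". Under these assumptions the pair comes out ahead whichever way you measure it.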

==========================================================================================================

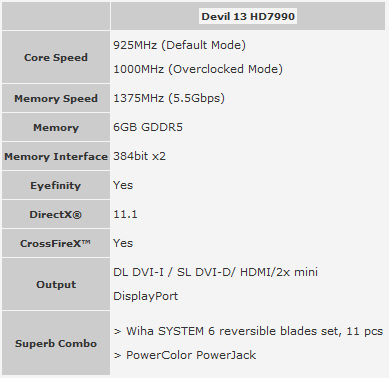

More pictures and info on the 7990 Devil 13 card. Looks like it'll be a power-hungry beast.

http://videocardz.com/34576/powercolor-announces-radeon-hd-7990-devil13-6gb

RussianSensation

Elite Member

- Sep 5, 2003

What is Bitcoin?

A virtual currency that allows you to buy things or exchange bitcoins for other world currencies such as the US dollar. Watch this quick 5 min video: https://bitpay.com/home

You can use a videocard or special CPUs to "mine" the currency and it gets added to your wallet (like your bank account).

This has all the info you'd need --> LINK

This is why the 7990 will sell out. It'll make $120 every month after electricity costs, assuming $0.15 per kWh and 400W of power use. If someone is willing to take the risk, they can just use it for 2-3 months, dump it, and keep the $ from mining. For most people it's probably not worth the hassle, but since it'll be a limited run of 500 units (?), I bet every one will sell out.
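The electricity side of that estimate is simple arithmetic. A minimal Python sketch, assuming the card runs 24 hours a day for a 30-day month at the quoted 400W and $0.15/kWh; the $120 net figure is taken from the post as-is and only used to back out the implied gross mining revenue, which is not checked independently. `monthly_energy_cost` is a hypothetical helper.

```python
def monthly_energy_cost(watts: float, price_per_kwh: float,
                        hours_per_day: float = 24.0, days: int = 30) -> float:
    """Electricity cost (USD) of a constant load over one month."""
    kwh = watts / 1000.0 * hours_per_day * days  # 400W -> 288 kWh per month
    return kwh * price_per_kwh


cost = monthly_energy_cost(400, 0.15)
net_claimed = 120.0  # net monthly figure quoted in the post

print(f"Electricity: ${cost:.2f}/month")                            # $43.20
print(f"Implied gross revenue: ${net_claimed + cost:.2f}/month")    # $163.20
```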

exar333

Diamond Member

- Feb 7, 2004

In some respects, I like that AMD didn't release the 7990. If it wasn't a good product, they shouldn't have released it. With how awesome the 78xx and 79xx products were, it would be a shame to release a low-clocked dual GPU that is already at 105% performance/power like the 590 was. I suspect it would have been released if it was competitive.

Edit: the 7990 may live on as a niche product, but it will definitely not be 'mainstream'. For what that's worth...

Last edited by a moderator:

exar333

Diamond Member

- Feb 7, 2004

If, based on your experience, there is no large loss from buying a dual-GPU single card, then that's great. In my market, there is a huge penalty for buying dual-GPU cards. In the UK, for example, some people say that dual-GPU single cards hold value better than 2x single-GPU cards. So it depends on the market, I suppose.

I re-sold a 690 for $700 profit, but I only had it for a day and intended to sell it all along. That's another story.

RussianSensation

Elite Member

- Sep 5, 2003

:thumbsup::thumbsup: Wow, good job Exar! That's amazing. You going to be flipping PS4s on launch?

MentalIlness

Platinum Member

- Nov 22, 2009

dave_the_nerd

Lifer

- Feb 25, 2011

The 580 lost 60% of its value, the 6990 lost 53% of its value.

Change in value over time is usually measured as a percentage.

RussianSensation

Elite Member

- Sep 5, 2003

Not arguing the proper calculation for change in value using the mathematical formula for percentage change. Depreciation in monetary terms with respect to the total cost of ownership is what matters, since that's your real cost of ownership. When you buy a car for $20k and sell it for $17k, you only lost 15% of its value, but that's $3k. If you buy a car for $10k and sell it for $8k, you lost 20% of its value, but only $2k. Which transaction was more costly, i.e., which item's cost of ownership was higher over the period you used the asset? The first car, despite it depreciating less in % terms. The depreciation rate was better for the first car, but the total cost of ownership was higher, which makes it a more costly depreciating asset.

Further, using today's prices for the GTX 580 on the used market doesn't take into consideration that a savvy gamer could have sold the 580 for much more at the 7970's launch. Resale values for the 6990 at or near the 7970 launch had already dipped to $450-500. This is because the value of dual-GPU cards plummets quickly when a next-generation GPU provides near or similar performance to what a dual-GPU card could provide. Part of that has to do with: (1) the smaller target market of buyers who want a dual-GPU card, and (2) inherent SLI/CF scaling issues that are unavoidable with a dual-GPU card. These factors hurt dual-GPU cards once a new generation launches.

This is my point: in my market I can buy 2 GPUs, resell them, and walk away with a lower cost of ownership than buying a single dual-GPU card with similar performance. If this scenario is not true in your market, then you are not penalized for buying a $1,000 GPU.
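The car comparison in that post, as a short Python sketch that reports both the dollar loss and the percentage loss for each purchase. The prices are the post's own hypothetical figures, and `depreciation` is an illustrative helper name.

```python
def depreciation(purchase: int, resale: int) -> tuple[int, float]:
    """Return (dollars lost, fraction of purchase price lost)."""
    lost = purchase - resale
    return lost, lost / purchase


cars = {"Car A": (20_000, 17_000), "Car B": (10_000, 8_000)}

for label, (buy, sell) in cars.items():
    dollars, pct = depreciation(buy, sell)
    print(f"{label}: lost ${dollars:,} ({pct:.0%})")
```

This prints "Car A: lost $3,000 (15%)" and "Car B: lost $2,000 (20%)": the lower percentage goes with the larger dollar loss, which is exactly the distinction being argued.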

dave_the_nerd

Lifer

- Feb 25, 2011

It's always a losing battle. The most economical option is the same with GPUs as it is with cars: buy an upper-midrange GPU (or a low-mileage, off-lease car) and drive it into the ground. (At least three years of use for a GPU.)

Anything else, you're just paying extra money for performance nobody really "needs." (Game developers can't publish games that need a current-gen, top-of-the-line card to run, because they'd only sell a couple thousand copies.)

Now, there's definitely something to be said for "WANT!!!" but it's not an economic argument.

EDIT: Okay, now I'm probably going to get tarred & feathered for suggesting that you don't necessarily need SLI 680s.

AnandTech is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.