Curious as to what people's theories are. The HD7970 came out in January, and here we are nearly eight months later with no dual-GPU card from AMD. I'm more curious about the reasons, problems, and/or ramifications of there being no dual-GPU card from AMD for the first time since the HD2000 series.

So what happened to the hd7990?

- Thread starter tviceman

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

Maybe they decided the 10 people who buy them just don't make it worth having the single-card performance crown? But really, thermals are probably the real reason, although it looks like some version will be showing up.

MagnusTheBrewer

IN MEMORIAM

- Jun 19, 2004

- 24,122

- 1,594

- 126

So if true, what are the possible reasons AMD took so long to get a dual card out? They commanded premium pricing for 10 weeks, and now that their prices have dropped so much they won't be able to make nearly as much money off a dual-GPU card as they would have had they gotten it out 2 months after release.

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

They didn't make the card because they can't, or couldn't: too much power draw. Maybe binning has made it possible now, who knows. But I don't see the point of these dual-GPU cards anyway. It was different for AMD when they were making tiny dice and the top end was supposed to be a dual-GPU affair. But now they have a very high transistor count die, with high performance to go along with it. Really, AMD's strategy has softened from the "sweet spot" days; they have moved the target upwards.

Dark Shroud

Golden Member

- Mar 26, 2010

- 1,576

- 1

- 0

Maybe they decided the 10 people who buy them just don't make it worth having the single-card performance crown? But really, thermals are probably the real reason, although it looks like some version will be showing up.

Considering the 5970 & 6990 both sold very well thanks to bitcoin miners, I think this card would also sell well.

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

So if true, what are the possible reasons AMD took so long to get a dual card out?

- PS4 will have 2x HD7990s so AMD has been busy stockpiling millions for its launch.

- GTX690 is too power efficient. It has packaged nearly GTX680 SLI level of performance in a jaw-dropping 274W of peak power, under a quiet magnesium/aluminum shrouded cooler. It's pure sexiness, other than I would have loved to see 8GB of VRAM (4GB per GPU) on a $1000 GPU.

For HD7990 to compete, it would probably need to draw 350-400W of power with each GPU at 1000MHz. 925MHz 7970 CF may not be enough to beat GTX690 to compensate for the huge power consumption increase.

Also, NV is selling the 690 as 2x 680 chips for $1000. The only way for AMD to be able to do that is to make a card faster since they are going to lose on power consumption for sure. But it's impossible to make it faster without 1000+MHz clocks which would make it use 350-400W I bet. So how do you design a GPU cooler that can handle that much heat? :sneaky:

The alternative then is to either let an AIB do it (limited run like HD6870 X2 by Powercolor) OR release an 850MHz HD7990 and price it for $800.

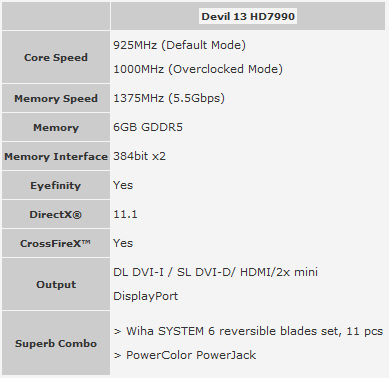

I guess AMD decided it's not worth selling an 850MHz 7990 and losing badly to the 690, so they let AIBs handle it. I bet those 500 units of Devil 13 will sell out right away to bitcoin miners and such. 7970's key advantage is overclocking. Good luck overclocking those 7970s to 1150MHz+ on a single 7990 board! You'd probably need 3x 8-pin power connectors, or 4.

My biggest problem with the 690 is that it falls apart where the GPU power is needed the most. In a way it's actually a letdown since it costs $1000. I'd get GTX670 4GB SLI over the 690 to be honest.

Last edited:

It's going to have four 6-pin power connectors.

96Firebird

Diamond Member

- Nov 8, 2010

- 5,749

- 345

- 126

Whoa, I had no idea the GTX690 used so little power. Too bad it is so expensive, looks like a good card for those with 120Hz monitors.

SolMiester

Diamond Member

- Dec 19, 2004

- 5,330

- 17

- 76

ViRGE

Elite Member, Moderator Emeritus

- Oct 9, 1999

- 31,516

- 167

- 106

I agree with this interpretation. Given the similarities between Cayman and Tahiti, if AMD just does what they did last time for the 6990 they will end up with a 375W card that's clocked lower than the 7970. They more or less need 7970GE performance to match NVIDIA here, which takes yet more power, further widening the power/noise gap between the 7990 and the GTX 690. There really isn't a good solution here, I fear.

The worst part is that AMD can't afford to be too much later with this. It's been nearly 8 months since 7970 launched; at this point most customers who would have wanted such a card will have given up and gone with 7970CF or GTX 690. At best they're looking at being able to sell it for half a year (if that) before it becomes functionally outdated by Sea Islands.

And for the love of Mike I hope that triple fan cooler isn't what they intend to go with. Those coolers are effective on the whole, but they also do a lousy job of pushing hot air out of the case. That means you'd be looking at 375W of heat going into your case, as opposed to the roughly 180W on a 6990.

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

misunderstood post nm.

I don't see why the 7990 is important to AMD. I always thought the dual-GPU cards were a waste of resources, but maybe others feel differently. Anything above 300 watts for a single card is excessive to me, plus I'd much rather have 2 cards: down the road I can sell one or use it in a different machine. I like the flexibility.

Last edited:

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

That means you'd be looking at 375W of heat going into your case, as opposed to the roughly 180W on a 6990.

You mean 288W? A single 6970 draws ~200W.

I always find that dual-GPU cards plummet in value once the next generation of fast high-end single-GPU cards launches (GTX590/6990 last generation). I doubt either the GTX690 or the 7990 is even worth spending $ on. Probably when GTX780 launches (GK110?), a used 690 will go for $500, tops.

Last edited:

ViRGE

Elite Member, Moderator Emeritus

- Oct 9, 1999

- 31,516

- 167

- 106

The 6990 was a 375W card with a center-mounted blower. Half the heat went out of the case, the other half of the heat got shot towards the front of the case. So that's roughly 180W of heat going into the case.
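For anyone who wants to play with the heat numbers being thrown around here, a minimal sketch of the arithmetic, assuming the simple model described in the thread: the card turns all of its board power into heat and the cooler exhausts some fraction of it out the back. The function name and the exhaust fractions are illustrative assumptions, not measured values.

```python
def heat_into_case(board_power_w: float, exhausted_fraction: float) -> float:
    """Watts of heat left inside the case.

    board_power_w: total board power, assumed to end up entirely as heat.
    exhausted_fraction: share of that heat the cooler pushes out of the case
                        (an assumed rough estimate, between 0 and 1).
    """
    if not 0.0 <= exhausted_fraction <= 1.0:
        raise ValueError("exhausted_fraction must be between 0 and 1")
    return board_power_w * (1.0 - exhausted_fraction)


# Center-mounted blower on a 375W card: about half the heat leaves the case.
print(heat_into_case(375, 0.5))

# Open-air triple-fan cooler, worst case assumed in the thread: nothing is exhausted.
print(heat_into_case(375, 0.0))
```

Half of 375W is 187.5W, which is where the "roughly 180W" figure comes from. A real open-air cooler probably does not dump literally everything into the case, so treat the second number as an upper bound.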

Grooveriding

Diamond Member

- Dec 25, 2008

- 9,147

- 1,330

- 126

While the 7970GE is faster than the 680, its thermals are going to make it much tougher for 2 Tahiti XT cores on a single card to clock where they need to be to be faster than a 690, which is really close to 680SLI.

They shouldn't bother unless they can do it in a way that it is at parity with or faster than the 690 while not sounding like a hair dryer. That or they could try to make the value proposition by selling it for $800 or so. Can't see that working out too well though, $800 is not really a value card. High-end dual-GPU cards are all about having the best card. If they can't manage that, it's not worth it imo.

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

If AMD could release a 7990 that took the single card performance crown, they would have done it a long time ago. Otherwise it will just be seen as a space heater that is slower than the 690, so I guess no press is better than bad press for them in this case.

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

AnandThenMan

Diamond Member

- Nov 11, 2004

- 3,991

- 627

- 126

Beastly. Does it come with a fire extinguisher? Eat your heart out, Fermi, there's a new flamethrower in town.

exar333

Diamond Member

- Feb 7, 2004

- 8,518

- 8

- 91

You mean 288W? A single 6970 draws ~200W.

I always find that dual-GPU cards plummet in value once the next generation of fast high-end single-GPU cards launches (GTX590/6990 last generation). I doubt either the GTX690 or the 7990 is even worth spending $ on. Probably when GTX780 launches (GK110?), a used 690 will go for $500, tops.

Not sure what you are getting at here. Going ebay prices (sold, mind you) are ~$440 for a used 590 and $225 for a used 580. That's pretty equal resale. If anything, the duals do very well these days because I think people like to use them in either machines that don't do SLI configs well, or for compute applications. I don't see a big difference between the two in resale.

exar333

Diamond Member

- Feb 7, 2004

- 8,518

- 8

- 91

Curious as to what people's theories are. The HD7970 came out in January, and here we are nearly eight months later with no dual-GPU card from AMD. I'm more curious about the reasons, problems, and/or ramifications of there being no dual-GPU card from AMD for the first time since the HD2000 series.

Too power hungry. They learned from NV how not to release a dual GPU card (gtx 590, initial version). Clocks would have been terrible, most likely.

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

Not sure what you are getting at here. Going ebay prices (sold, mind you) are ~$440 for a used 590 and $225 for a used 580. That's pretty equal resale. If anything, the duals do very well these days because I think people like to use them in either machines that don't do SLI configs well, or for compute applications. I don't see a big difference between the two in resale.

I can get an HD6990 for $350 CDN or GTX580 for $200 CDN. The 6990 was $750 CDN new (+ 13% tax = $850 CDN). The GTX580 was $500 I believe (or $565 CDN with tax). That means the 6990 lost $500 in value, and the 580 lost $365. Thus the 6990 depreciated more in absolute dollars. The same is true for the 590.

It's even worse last generation. HD6950 2GB cards sell for $175 used right now and they were already $250 CDN or so around February 2011 when I got mine new. So for 2x 6950s it would have been $250x2 + tax = $565CDN and with resale of $175 each, you would have lost only $215 over 1.5 years of ownership (and the 6950s unlocked). If you bought an HD6990 for $850 and sold it now for $350, you would have lost $500. That's absurd!

Where I live the dual-GPU cards plummet in value when a new generation comes out. This has been true for all dual-GPU cards I've followed over the years in my local market. Toronto/GTA is 5.5 million people so it's a good market size. I don't know how things are in your local area. If dual-GPU cards hold value better there, then sure it doesn't matter what you buy.

Because of how dual-GPU cards plummet in my local market, I'd take 2x 680s over a 690 or 2x 7970s over a 7990 any day since I know when it comes time to resell, I'll get more value out of the single cards. Also, it's much easier to flip single cards because very few people actually want to pay good $$ for a used dual-GPU card and if you just miss the perfect window to sell, the prices fall even more. With single cards I find there is way less risk involved and you have a lot more time to offload them at a better price.

And btw, those ebay prices aren't what you take home after you account for fees, etc. So that ~$440 for a used 590 will be way lower once you actually get cash. So the depreciation is huge.
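The depreciation math above can be redone in a few lines. This is only a sketch using the prices quoted in this post (CDN$, 13% tax); the `Card` class, the `depreciation` function, and the optional `fee_rate` parameter are made up for illustration, and the fee rate defaults to zero because no actual fee percentage is given in the thread.

```python
from dataclasses import dataclass

TAX_RATE = 0.13  # 13% sales tax quoted in the post


@dataclass
class Card:
    name: str
    price_new: float  # pre-tax price when new, CDN$
    resale: float     # used price today, CDN$
    count: int = 1    # number of cards in the setup


def depreciation(card: Card, fee_rate: float = 0.0) -> tuple[float, float]:
    """Return (dollars lost, loss as a fraction of the taxed purchase price).

    fee_rate is a hypothetical selling fee (e.g. marketplace fees) taken
    off the resale price; it is an assumption, not a figure from the thread.
    """
    paid = card.price_new * (1 + TAX_RATE) * card.count
    received = card.resale * (1 - fee_rate) * card.count
    loss = paid - received
    return loss, loss / paid


cards = [
    Card("HD6990", 750, 350),
    Card("GTX580", 500, 200),
    Card("2x HD6950", 250, 175, count=2),
]

for card in cards:
    loss, fraction = depreciation(card)
    print(f"{card.name:10s} lost ${loss:.0f} ({fraction:.0%} of purchase price)")
```

On these figures the 6990 loses the most dollars (about $500 versus $365 for the 580 and $215 for the pair of 6950s), but as a share of what was paid the GTX580 actually loses a bit more (roughly 65% versus 59%). So the "dual-GPU cards depreciate more" point holds in absolute terms here, less clearly in percentage terms.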

Last edited:

blastingcap

Diamond Member

- Sep 16, 2010

- 6,654

- 5

- 76

RussianSensation

Elite Member

- Sep 5, 2003

- 19,458

- 765

- 126

It's going to have four 6-pin power connectors.

Three 8-pin PCI Express power connectors, it seems.

Press Release.
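To put the connector talk in numbers, here is a quick sketch of the in-spec power budget for each layout mentioned in the thread. It uses the PCI Express limits of 75W from the slot, 75W per 6-pin, and 150W per 8-pin; the function name is just for illustration, and real boards can pull more than the spec allows, so read these as nominal ceilings rather than actual draw.

```python
# Nominal power limits from the PCI Express specification, in watts.
SLOT_W = 75
SIX_PIN_W = 75
EIGHT_PIN_W = 150


def board_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """In-spec power available to a card: slot plus auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


layouts = {
    "four 6-pin": board_power_budget(six_pin=4),
    "three 8-pin": board_power_budget(eight_pin=3),
}

for name, watts in layouts.items():
    print(f"{name}: {watts}W")
```

Four 6-pin connectors work out to 375W, which matches the 375W figure mentioned earlier in the thread, while three 8-pin connectors give 525W, comfortably above the 350-400W guess for a 7990 with both GPUs at 1000MHz.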

Silverforce11

Lifer

- Feb 19, 2009

- 10,457

- 10

- 76

- GTX690 is too power efficient.

What's the difference between 680 vs 7970, power use in gaming? Surprisingly little, especially factoring in the VRAM overhead (GDDR5 doesn't exactly sip power).

I fail to see why 2x 7970 would draw massively more power. 50W extra? Maybe. But look at the 6990 vs 590. It's not a question of ability to make the 7990, it's a question of need. Leaving it to AIBs is prolly better for them: less R&D, and they still sell 7970 dies.
