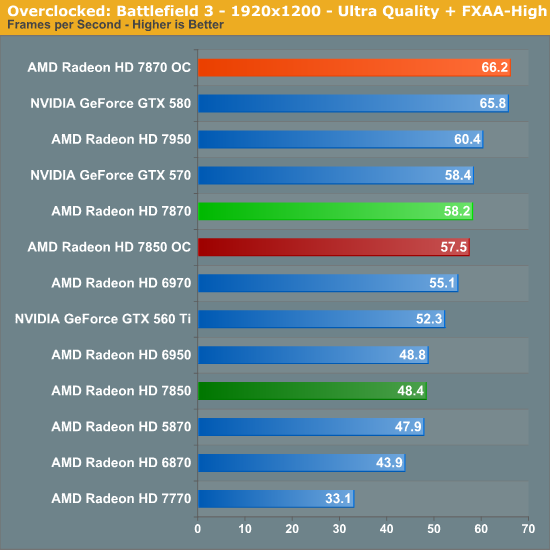

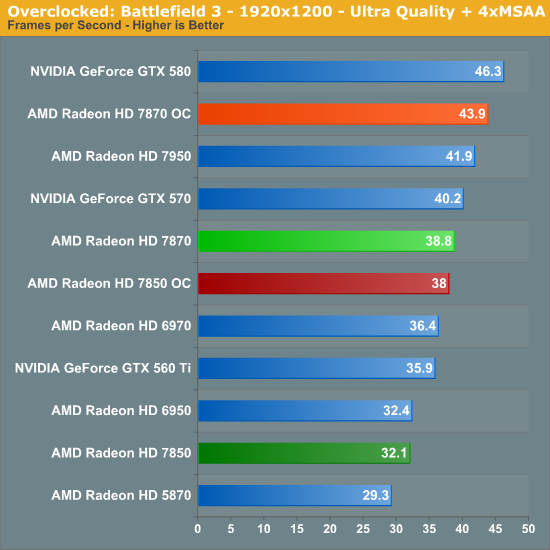

MSAA - GTX580 is 19.3% faster than HD7870

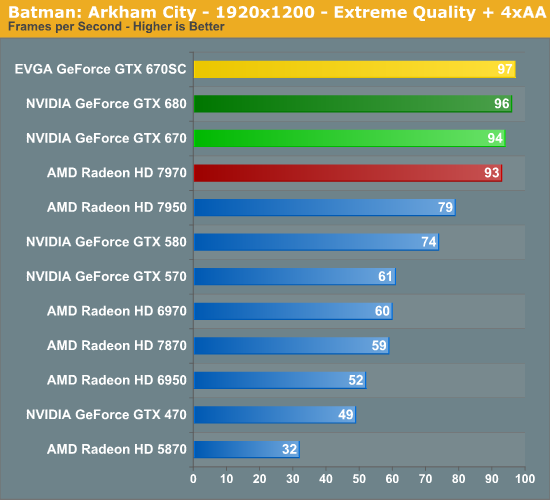

Tessellation is why Fermi will pummel Cayman and Cypress in DX11 and why HD7850 will suffer the exact same fate against the 670 (and it already does, but you ignored the massive performance delta between 670 and 7850 in Batman AC, Crysis, Lost Planet 2, etc.).

If you don't care to use tessellation in games, that's another story. Ignoring it is not helping HD5870 users for example.

I prefer skinny GPUs, thanks.

You keep saying there is no point in upgrading from 69xx if you don't want better tessellation.

First of all, there are power/noise/heat and VRAM differences, which are what make an upgrade more worthwhile. That's why I got a 28nm GPU and 22nm CPU even though the last-gen stuff was quite good.

Secondly, yes, there isn't a compelling reason for people with 40nm GPUs to upgrade right now. It has little to do with tessellation and much to do with current price levels.

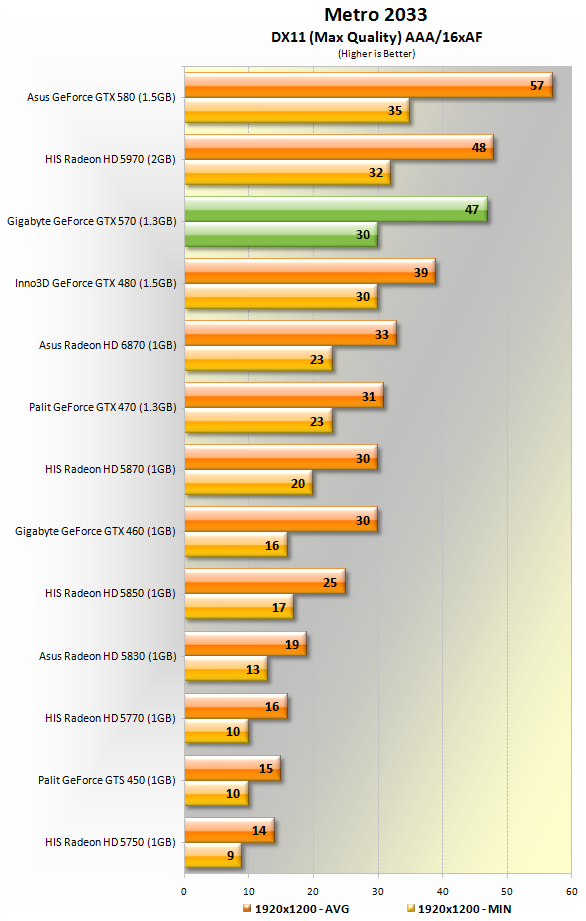

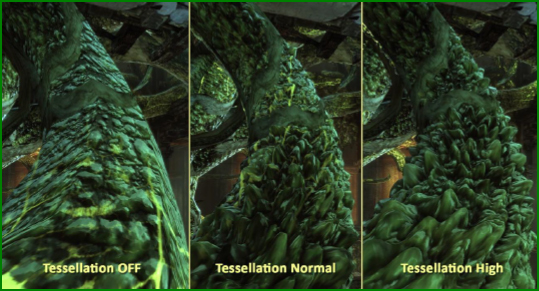

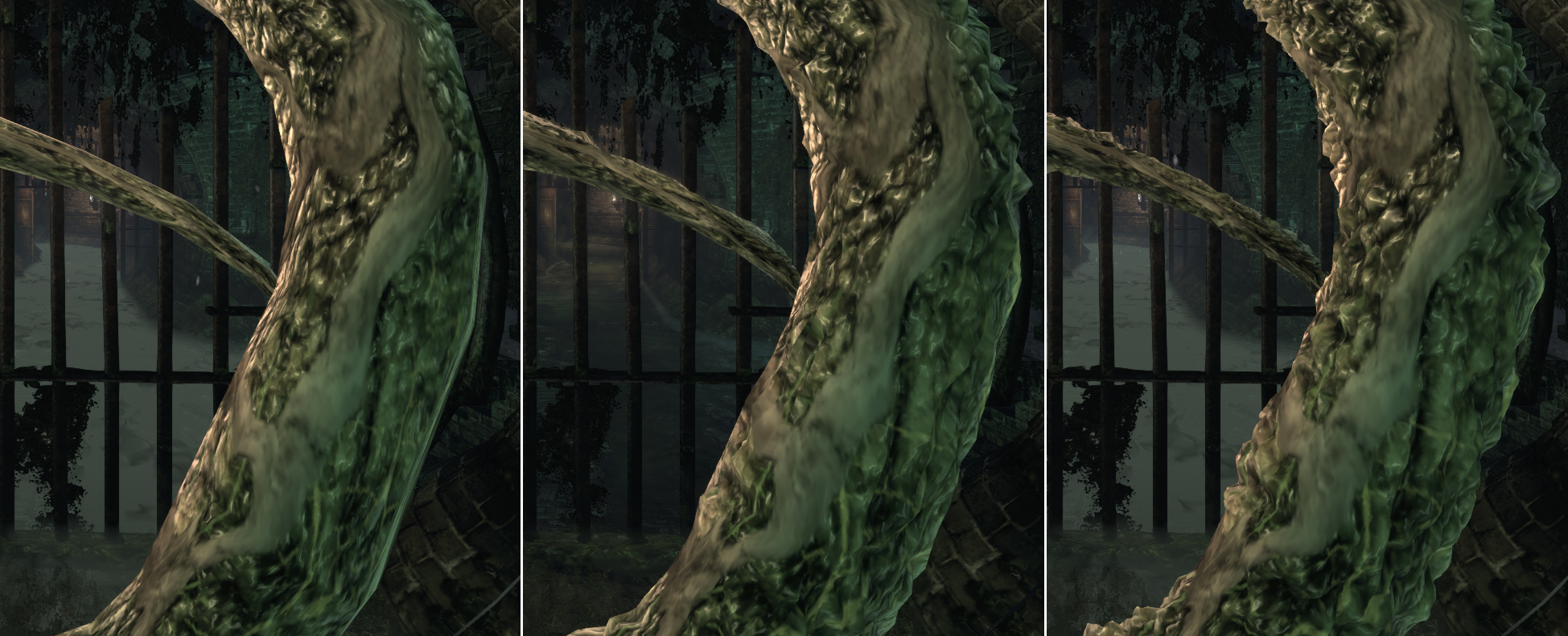

And yes, if tessellation is hurting your fps too much, turn it off. What is it really adding? It doesn't add anything most of the time. It's just a box to check so games can claim they used DX11. Tell me with a straight face that you could even tell if tessellation were on or off in-game in Metro 2033:

http://www.overclock.net/t/690441/pcgh-metro-2033-direct-x11-comparison-screenshots

Look at how gamedevs have implemented tessellation so far in other games:

http://www.xbitlabs.com/articles/graphics/display/hardware-tesselation_5.html

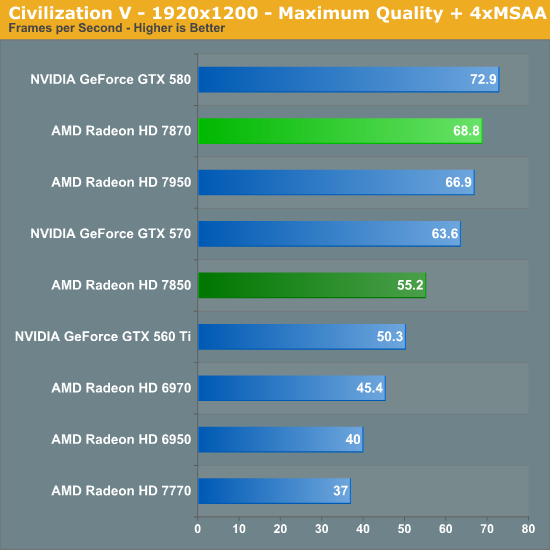

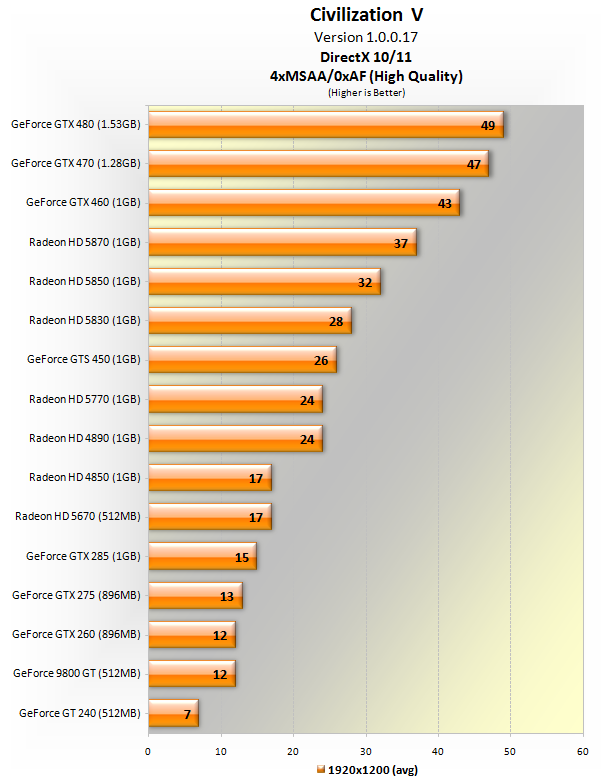

Instead of posting tons of graphs of benches (seriously, using Civ V at framerates over 60fps, as if Civ V were some shooter...?), how about finding before-and-after screenshots of when tessellation actually mattered in a popular game?

Do you honestly in your heart of hearts believe that we will see tessellation used in a game-changing way anytime before the next-gen consoles come out?

But fine, you are insistent that tessellation matters even if I can barely tell the difference. Even if we take your highly sensitive eyes to be standard, guess what? AMD can do tessellation just fine when its geometry logic is clocked high enough. I highly doubt tessellation is the limiting factor.

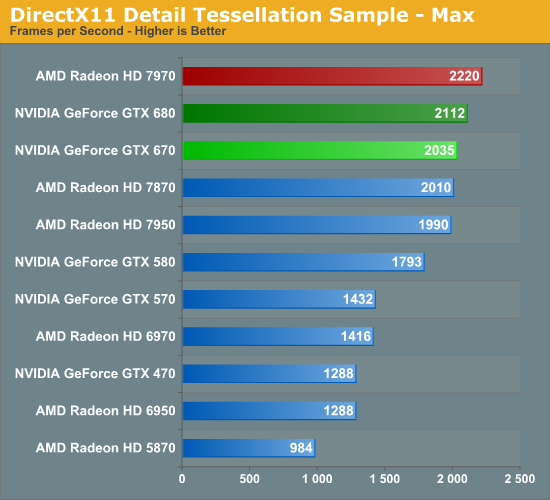

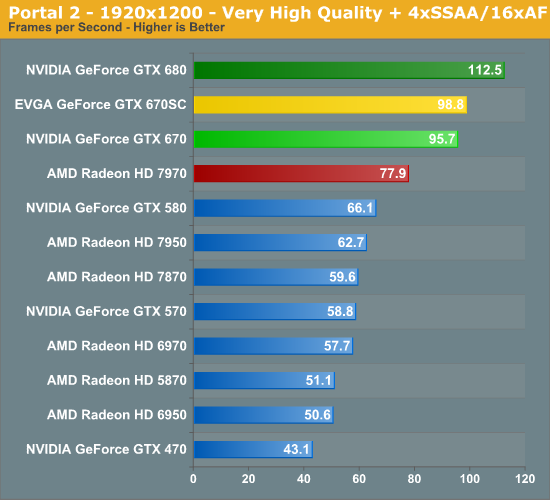

Let's look at AT's very own tessellation analysis, shall we? I'll use the GTX 670 review since that includes the top four GPUs:

http://www.anandtech.com/show/5818/nvidia-geforce-gtx-670-review-feat-evga/16

If you want to see the 7850, it's here, but at stock clocks of course its tess power sucks. Clock it up to 1GHz and it will tessellate as well as a 7870. Even at its pitiful stock clock of 860MHz, though, the 7850 is still out-tessellating the GTX 580@stock.

Also see:

http://www.anandtech.com/show/5261/amd-radeon-hd-7970-review/12 which says:

"Of course both of these benchmarks are synthetic and real world performance can (and will) differ, but it does prove that AMD’s improvements in tessellation efficiency really do matter.

Even though the GTX 580 can push up to 8 triangles/clock, it looks like AMD can achieve similar-to-better tessellation performance in many situations with their Southern Islands geometry pipeline at only 2 triangles/clock.

Though with that said, we’re still waiting to see the “killer app” for tessellation in order to see just how much tessellation is actually necessary. Current games (even BF3) are DX10 games with tessellation added as an extra instead of being a fundamental part of the rendering pipeline. There are a wide range of games from BF3 to HAWX 2 using tessellation to greatly different degrees and none of them really answer the question of how much tessellation is actually necessary. Both AMD and NVIDIA have made tessellation performance a big part of their marketing pushes, so there’s a serious question over whether games will be able to utilize that much geometry performance, or if AMD and NVIDIA are in another synthetic numbers war."
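To put rough numbers on the triangles/clock comparison, here is a back-of-the-envelope sketch, not a benchmark. The triangles/clock figures come from the AT quote and the 7850 clocks from my paragraph above; the 772MHz GTX 580 clock is my assumption of the reference core clock, and `peak_mtris_per_s` is just a name I made up for the arithmetic.

```python
def peak_mtris_per_s(tris_per_clock: float, clock_mhz: float) -> float:
    """Theoretical peak triangle rate in millions of triangles per second."""
    return tris_per_clock * clock_mhz


# (triangles per clock, core clock in MHz)
# 2 and 8 tris/clock are from the AnandTech quote; 860 and 1000 MHz are from
# the post; 772 MHz for the GTX 580 is an assumed reference clock.
cards = {
    "HD 7850 @ 860 MHz": (2, 860),
    "HD 7850 @ 1000 MHz": (2, 1000),
    "GTX 580 @ 772 MHz": (8, 772),
}

peaks = {name: peak_mtris_per_s(*spec) for name, spec in cards.items()}
for name, rate in peaks.items():
    print(f"{name}: {rate:.0f} Mtris/s theoretical peak")

# Peak rate scales linearly with clock, so the overclock gain is just the
# ratio of the two clocks.
oc_gain = peaks["HD 7850 @ 1000 MHz"] / peaks["HD 7850 @ 860 MHz"] - 1
print(f"HD 7850 overclock gain: {oc_gain:.1%}")
```

On paper the GTX 580 has over three times the peak rate of a stock 7850, yet it loses the synthetic tests linked above. That gap between paper peak and measured results is exactly what AT means by "tessellation efficiency": triangles/clock alone tells you very little.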

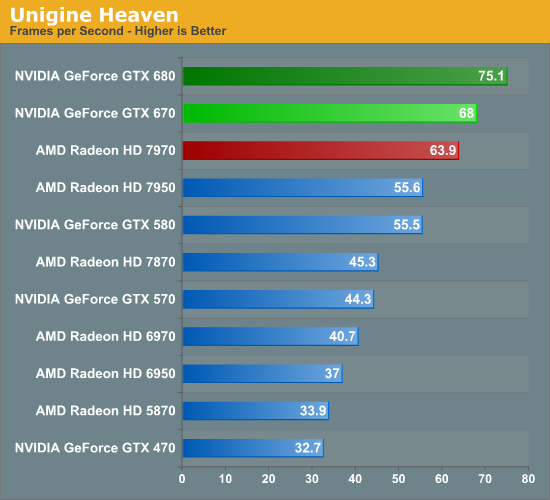

Note that the 7870 had better tess performance than the 7950, at least at stock, due to higher clocks, yet LOST in Unigine anyway. What this means is that you can have INFINITE tessellation power, but if tessellation isn't the bottleneck, more of it won't raise your framerate, and apparently in Unigine, tessellation is not the bottleneck. What IS the bottleneck, then? I don't know, but I've heard some people complain about AMD not increasing the number of ROPs.
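Here is a toy model of that bottleneck argument, assuming (as a big simplification) that a frame passes through independent stages and the slowest one sets the framerate. The stage names and fps caps are made-up illustration values, not measurements of any card.

```python
def effective_fps(stage_fps_caps: dict) -> float:
    """Framerate is capped by the slowest stage in this simplified model."""
    return min(stage_fps_caps.values())


def bottleneck(stage_fps_caps: dict) -> str:
    """Name of the stage with the lowest fps cap."""
    return min(stage_fps_caps, key=stage_fps_caps.get)


# Hypothetical per-stage caps (frames per second each stage could sustain
# if it were the only limit). These numbers are invented for illustration.
baseline = {"tessellation": 90.0, "shading": 70.0, "rop": 55.0}

# Same card with "infinite" tessellation power.
infinite_tess = dict(baseline, tessellation=float("inf"))

for label, caps in (("baseline", baseline), ("infinite tess", infinite_tess)):
    print(f"{label}: {effective_fps(caps):.0f} fps, limited by {bottleneck(caps)}")
```

Both configurations come out at the same framerate because the ROP stage is the limit in this made-up example. Real GPUs overlap work in messier ways, but the basic point holds: speeding up a stage that isn't the limit buys nothing.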

If you believe AT's analysis was wrong and that AMD's GPUs are so horrible at tessellation that it is the bottleneck, what are you expecting, exactly? Games that will bring down a 7970 due to tessellation but leave 680 standing? It might happen eventually, but I think that future is too far to worry about and that by the time that happens, both cards would be obsolete. If you disagree, then let's agree to disagree about the timeframe.

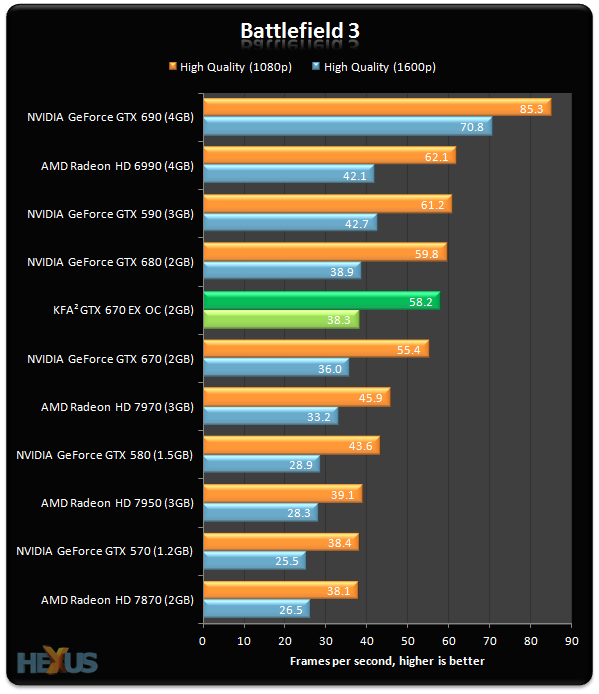

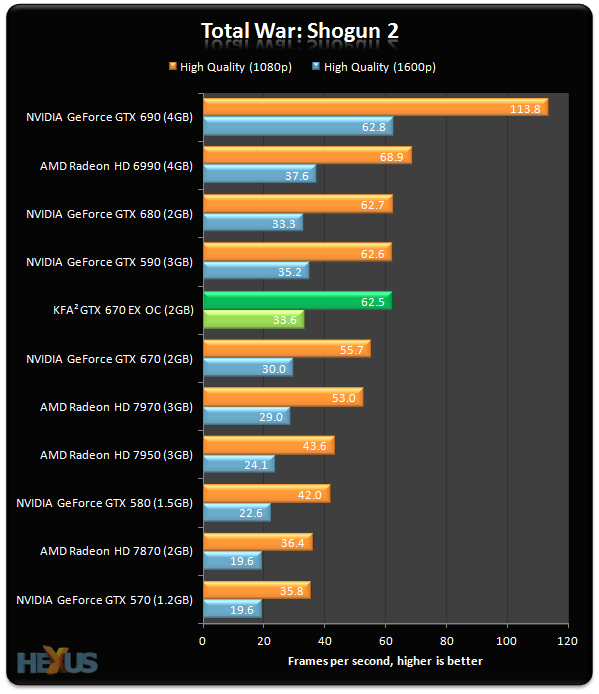

By the way, here are oc vs oc results for the top four GPUs today. Note that BF3 and Deus Ex have tessellation. Note also how the 7970 performs vs the 680 (basically a tie in BF3 and a win in Deus Ex):

http://hardocp.com/article/2012/05/14/geforce_680_670_vs_radeon_7970_7950_gaming_perf/3

BTW, John Carmack's opinion of tessellation (note that he thinks even Nvidia's tessellation ability isn't quite there yet): "Tessellation is one of those things that bolting it on after the fact is not going to do anything for anybody, really. It’s a feature that you go up and look at, specifically to look at the feature you saw on the bullet point rather than something that impacts the game experience. But if you take it into account from your very early design, and this means how you create the models, how you process the data, how you decimate to your final distribution form, and where you filter things, all of these very early decisions (which we definitely did not on this generation) I think tessellation has some value now. I think it’s interesting that there is a no-man’s land, and we are right now in polygon density levels at a no-man’s land for tessellation because tessellation is at it’s best when doing a RenderMan like thing going down to micro-polygon levels. Current generation graphics hardware really kind of falls apart at the tiny levels because everything is built around dealing with quads of texels so you can get derivatives for you texture mapping on there. You always deal with four pixels, and it gets worse when you turn on multi-sample anti-aliasing (AA) where in many cases if you do tessellate down to micro-polygon sizes, the fragment processor may be operating at less than 10% of its peak efficiency. When people do tessellation right now, what it gets you is smoother things that approach curves. You can go ahead and have the curve of a skull, or the curve of a sphere. Tessellation will do a great job of that right now. It does not do a good job at the level of detail that we currently capture with normal maps. You know, the tiny little bumps in pores and dimples in pebbles. 
Tessellation is not very good at doing that right now because that is a pixel level, fragment level, amount of detail, and while you can crank them up (although current tessellation is kind of a pain to use because of the fixed buffer sizes on the input and output [hardware]) it is a significant amount of effort to set an engine up to do that down to an arbitrary level of detail. Current hardware is not really quite fast enough to do that down to the micro-polygon level."

http://www.pcper.com/reviews/Editor...-Graphics-Ray-Tracing-Voxels-and-more/Transcr

If I'm interpreting Carmack right, he's saying that the really impactful level of tessellation he desires is beyond even Kepler right now.

Anyway, we've been over the economics of game development in this forum ad infinitum, so you know that developers feel pressured to code to the lowest reasonable common denominator: consoles. Until consoles can tessellate, don't hold your breath on tessellation being anything more than a tacked-on feature that doesn't do much, as Carmack noted.

Better MSAA performance, better multi-monitor support, a-vsync, etc. at least impact games in real ways today. Hardware-accelerated physics and tessellation not so much, because consoles don't support them. Hardware is useless without software.

NV knows this, hence TWIMTBP and such, but even they can do only so much against the anvil of DX9 consoles weighing down graphics progress in the game development industry. So far NV has paid some devs to use PhysX for stuff like fog or papers (why so many papers all over the place? To show off PhysX, not because it necessarily makes sense) and to over-tessellate flat objects in Crysis 2 and stuff like that. Big deal.

But like I said, feel free to post with/without screenshots of games that use tessellation. Hopefully at least one game out there will actually use it right and not just tack it on afterwards, which doesn't do much. So far, though, I think all the games I'm aware of that use tessellation do it in the lame way that Carmack was talking about (bolted on).