Ryzen's poor performance with Nvidia GPUs. Foul play? Did Nvidia know?

Page 5 - Seeking answers? Join the AnandTech community: where nearly half-a-million members share solutions and discuss the latest tech.

No problem. Just needed the correction. It's already a mess trying to explain the problem to deniers anyway. I wanted to make sure they aren't filling the thread with useless benches that don't actually apply to the issue. Thanks.

Actually it has to do with the game and the drivers both. While drivers can't magically make a game scale beyond what it's programmed to do, a game can't fix poor drivers either.

I think you need to re-read what I wrote. I never said that drivers don't matter; I said that CPU scaling has more to do with the game programming than with the drivers. Both matter, yes, but the game programming matters more. Otherwise why would we have a game like Ghost Recon scale on a deca-core CPU, compared to something like Far Cry 4, which only uses a quad core effectively?

He was wrong; the problem is specific to DX12 on Nvidia video cards. Just earlier today I posted an example of previous scaling, and of the fact that it stopped somewhat recently, in probably one of the best examples of well-threaded gameplay.

Citing one game unfortunately does not prove your case. Anyone that knows anything about DX12 will tell you that the game's programming and optimization matter more than ever when it comes to how it will perform on any given hardware. BF1 obviously doesn't have a good DX12 implementation, because it loses significant performance with DX12 on NVidia hardware and can even lose performance on AMD hardware depending on the configuration.

At any rate, check out Gears of War 4. It scales to six threads on NVidia hardware and is DX12-only, unlike BF1.

Let me summarise:

Topweasel: Nvidia used to scale fine with more cores, but with current drivers it doesn't scale above four cores anymore.

Carfax83: You are wrong. Look at these old benchmarks: Nvidia drivers do scale with more cores.

3DVagabond

I know it doesn't fit your agenda, but you're wrong. Driver quality can bring a game's performance to its knees, to the point of being unplayable purely due to the drivers. Now you go ahead and repeat yourself again like it'll change anything.

imported_jjj

Can you share any such tests where Nvidia doesn't have issues with more cores under DX12?

tamz_msc

I would not use Gears of War 4 to argue that NVIDIA does not have issues in DX12. At the moment only the AotS engine is built from the ground up with DX12 in mind.

Let's not forget the UWP version of Quantum Break, which shipped with DX12, and Remedy themselves said that DX12 was difficult to implement. The results are very interesting nonetheless: an R9 390 is almost 50% faster than the GTX 970 in DX12, and even with improved DX12 support the GTX 1060 still suffers a 15% drop compared to DX11, while the RX 480 performs identically in both APIs.

No one is questioning that Nvidia doesn't do well in DX12. It's the cost of the Arch. But that isn't what is being pointed out to you.

And I said it multiple times: you have to understand what DX12 is. nVidia's DX11 driver "scales" over multiple threads. With DX12 it is the job of the application to mimic nearly everything the DX11 driver does. Watch the video I linked. nVidia is explaining it.

I noted 4 games: 4 games that scaled with more than 4 cores in DX11 and stopped scaling in DX12. I used BF1 as an example because I also showed evidence that it used to scale. I'll check out the Gears test later.

I'll watch those videos once you in particular can articulate why games that scale fine in DX11 on Nvidia, and scale fine with AMD in DX12, now don't scale with Nvidia in DX12. Also please explain why that scaling stopped happening recently. Nothing you have stated actually describes the issue, and I'd rather not waste my time on an "It's not us, it's them" video if it isn't actually going to cover that issue.

LOL my agenda? This is like the pot calling the kettle black. I don't even know why I'm talking to you to be honest, because you're intentionally misrepresenting what I'm saying and your reasons are suspect given your ardent nature when it comes to AMD. I never claimed at any point that driver quality doesn't matter as it relates to CPU scaling. I even provided evidence that it does matter on the previous page with Watch Dogs.

I'm only saying that it matters less than actual game programming when it comes to core/thread usage. But typically, you have now shifted goal posts by bringing up overall driver quality which had nothing to do with what we were discussing originally.

Like I said, typical.

GaiaHunter

NVIDIA doesn't care if their GPUs are on an AMD system or on an Intel system.

People just have this fantasy that the performance they see is all about the strengths of the hardware alone.

If Ryzen market share goes up, you can bet NVIDIA GPU performance on AMD systems will increase.

Ryzen atm is competing pretty much on the strengths of its hardware alone vs Intel processors that have a decade of software being optimized for them.

You should also check out the Ashes of the Singularity test, which is scaling to an octa core CPU on NVidia hardware.

3DVagabond

I caught what you said the first two times you posted it. But carry on defending nVidia and blaming the devs.

Oh, and for what it's worth if you read my posts in the thread I blamed AMD for not supplying nVidia with samples to use for optimizing their drivers as the most logical reason. Nothing nefarious by nVidia. So much for my agenda.

tamz_msc

So a DX12 only game shouldn't be used, but a AMD sponsored DX11/DX12 hybrid game should because "reasons." And don't even bring up Quantum Break. That game is pathetic in terms of optimization.

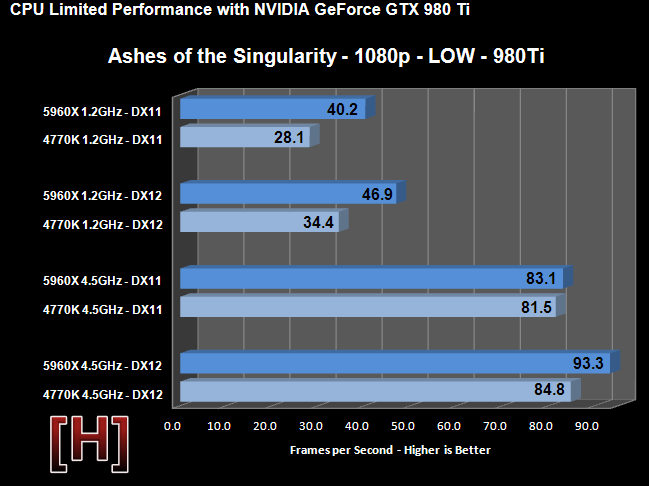

But I'll humor you this time, just because I'm interested to see what excuses you will conjure up nextHere is DX12 scaling on Ashes of the Singularity featuring a quad core and an octa core CPU. As you can see, the octa core CPU is significantly faster than the quad core CPU in this game, which implies that the game and the driver are able to utilize more than four threads in both DX11 and DX12.

Do you agree or disagree?

In one DX12 game, NVIDIA's drivers don't cause any issue, and that's because this "AMD sponsored" game has had optimizations done for both AMD and NVIDIA. In most of the other games that support DX12, NVIDIA seems to have issues.

Quantum Break DX12 ran flawlessly on AMD cards, and as I said before, the R9 390 is almost 50% faster than the GTX 970. Remedy talked at GDC about how difficult it was to implement the advanced rendering features of the game in DX12. That's why it is relevant: we know that in its first UWP iteration it was built with DX12 in mind. Gears of War 4 doesn't count because the tight integration of new APIs like DX12 and Vulkan isn't possible in engines like UE4. Developers who make their engines from scratch have better chances of implementing DX12 properly.

Oh, and Kyle over at [H], where you pulled that graph from, considers AotS more a synthetic benchmark than an actual game benchmark, and I agree with him on this. So yeah, AotS results don't say much after all about the efficiency of NVIDIA's drivers.

I'm not necessarily defending NVidia, I'm just stating the truth. I agreed with you when you said that it was both the drivers and the game programming that are responsible for proper CPU usage. NVidia's DX12 and Vulkan performance has increased substantially over the past few months, primarily due to driver optimizations.

But in certain games with bad DX12 optimization, like BF1, drivers obviously can't help.

tamz_msc

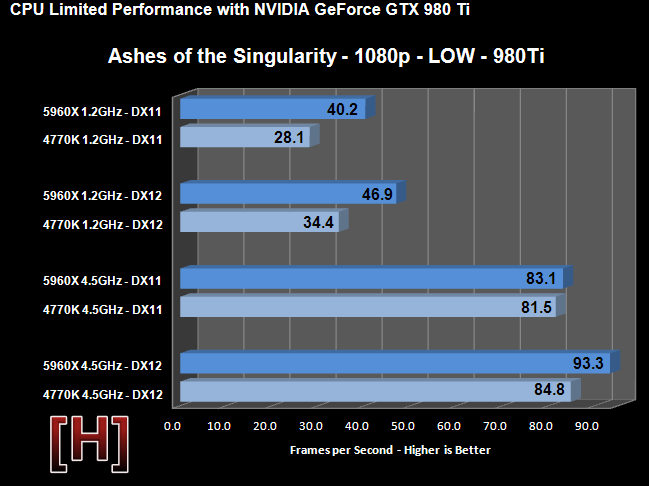

Speaking of AotS, Kyle did some testing with the heavy load preset as well, which stresses the GPU. This is what you would want under normal gaming scenarios. The results are quite revealing:

Interesting scaling on NVIDIA and AMD when the GPU is loaded, or so it seems.

EDIT: corrected FuryX graph for 6950X.

In one DX12 game, NVIDIA's drivers don't cause any issue, and that's because this "AMD sponsored" game has had optimizations done for both AMD and NVIDIA. In most of the other games that support DX12, NVIDIA seems to have issues.

I don't know if I would say most. NVidia's DX12 has definitely been improving lately and in DX12 only games, NVidia seems to have an edge over AMD.

Quantum Break DX12 ran flawlessly on AMD cards, and as I said before, R9 390 is almost 50% faster than the GTX 970. Remedy talked about how difficult it was to implement the advanced rendering features of the game in DX12 at GDC, that's why it is relevant because we know that in its first UWP iteration, it was built with DX12 in mind.

Quantum Break DX12 on AMD runs slower than Quantum Break DX11 on NVidia. That's all I need to say.

Gears of War 4 doesn't count because the tight integration of new APIs like DX12 and Vulkan isn't possible in engines like UE4. Developers who make their engines from scratch have better chances of implementing DX12 properly.

This is you talking out of your ass for sure. Unless you're a graphics programmer that works for Epic, you have no idea what is and isn't possible on the UE4. That said, no current DX12 game is pure DX12 from the ground up, as all the engines are still hybridized to some degree. This will change eventually however.

Oh, and Kyle over at [H], where you pulled that graph from, considers AotS more a synthetic benchmark than an actual game benchmark, and I agree with him on this. So yeah, AotS results don't say much after all about the efficiency of NVIDIA's drivers.

The "excuse" I was waiting for. Glad to see you didn't disappoint.

So you went from pedestaling Ashes of the Singularity to criticizing it once you found out it didn't support your argument about NVidia driver scaling in DX12

Speaking of AotS, Kyle did some testing with the heavy load preset as well, which stresses the GPU. This is what you would want under normal gaming scenarios. The results are quite revealing:

I think you double posted the last graph. Anyway, the only revealing thing about those benchmarks is how terrible AMD's DX11 driver is in comparison to NVidia's. If AMD's DX11 driver is still CPU bottlenecked to that degree even at 1440p and crazy settings, then that's very revealing indeed.

tamz_msc

I don't know if I would say most. NVidia's DX12 has definitely been improving lately and in DX12 only games, NVidia seems to have an edge over AMD.

This is you talking out of your ass for sure. Unless you're a graphics programmer that works for Epic, you have no idea what is and isn't possible on the UE4. That said, no current DX12 game is pure DX12 from the ground up, as all the engines are still hybridized to some degree. This will change eventually however.

There are no DX12-only games. The closest thing to a pure DX12 engine we have is the AotS engine and even that has a DX11 pathway. This information is directly from Sebbi@Beyond3d forums.

Quantum Break DX12 on AMD runs slower than Quantum Break DX11 on NVidia. That's all I need to say.

Compared to the RX 480, the GTX 1060 fares worse in DX12 and the GTX 970 fares much worse.

The "excuse" I was waiting for. Glad to see you didn't disappoint.

So you went from pedestaling Ashes of the Singularity to criticizing it once you found out it didn't support your argument about NVidia driver scaling in DX12

Anyway, the only revealing thing about those benchmarks is how terrible AMD's DX11 driver is in comparison to NVidia's. If AMD's DX11 driver is still CPU bottlenecked to that degree even at 1440p and crazy settings, then that's very revealing indeed.

I don't pedestal something that is more of a synthetic benchmark than an actual game benchmark. That AMD's DX11 drivers don't extract the maximum out of the card, except in certain situations, is well known. But that graph also shows that NVIDIA's DX12 implementation is nothing to write home about either. Driver version 378.78, which had the much touted DX12 'optimizations', doesn't affect Maxwell at all.

Mockingbird

Sorry, I don't believe in the crazy conspiracy.

NVIDIA is glad to sell you its graphics cards. It doesn't matter which processor you have.

AMD didn't release Ryzen processors until a month ago, and Nvidia probably didn't have them for testing.

Furthermore, until now most gamers have used Intel Core processors, which top out at 4 cores for mainstream parts.

William Gaatjes

It keeps me wondering. I do not think a lack or surplus of CPU computational power is the issue. But what if, when more cores are present, the Nvidia GPU driver creates more threads than the optimum number? Those threads prepare data for the GPU, and they could run into synchronization issues, stalling while they wait for each other. Deadlock issues, perhaps...

That would create performance degradation.
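To make the idea concrete, here is a rough toy model in Python. It is only a sketch of the hypothesis, not a description of how any real driver behaves: the function names and every constant (`parallel_ms`, `serial_ms`, `sync_ms_per_pair`) are made up for illustration. It assumes the splittable work divides evenly across threads while each pair of threads adds a small fixed stall.

```python
# Toy model only: every constant below is a made-up illustration,
# not a measurement of any real driver or game.

def modeled_frame_time_ms(threads, parallel_ms=12.0, serial_ms=4.0,
                          sync_ms_per_pair=0.05):
    """Modeled frame time when work is split across `threads` workers.

    serial_ms        : work that cannot be split across threads
    parallel_ms      : work divided evenly among the threads
    sync_ms_per_pair : assumed stall cost for every pair of threads
                       that may have to wait on each other
    """
    if threads < 1:
        raise ValueError("threads must be >= 1")
    pairs = threads * (threads - 1) / 2
    return serial_ms + parallel_ms / threads + sync_ms_per_pair * pairs


def best_thread_count(candidates):
    """Return the candidate thread count with the lowest modeled frame time."""
    return min(candidates, key=modeled_frame_time_ms)


if __name__ == "__main__":
    for n in (1, 2, 4, 6, 8, 16):
        print(f"{n:2d} threads -> {modeled_frame_time_ms(n):.2f} ms")
    print("best thread count:", best_thread_count(range(1, 17)))
```

With these invented constants the modeled frame time bottoms out at 6 threads and gets worse again by 16, which is the shape of the degradation described above. Whether real drivers behave like this is exactly the open question.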
