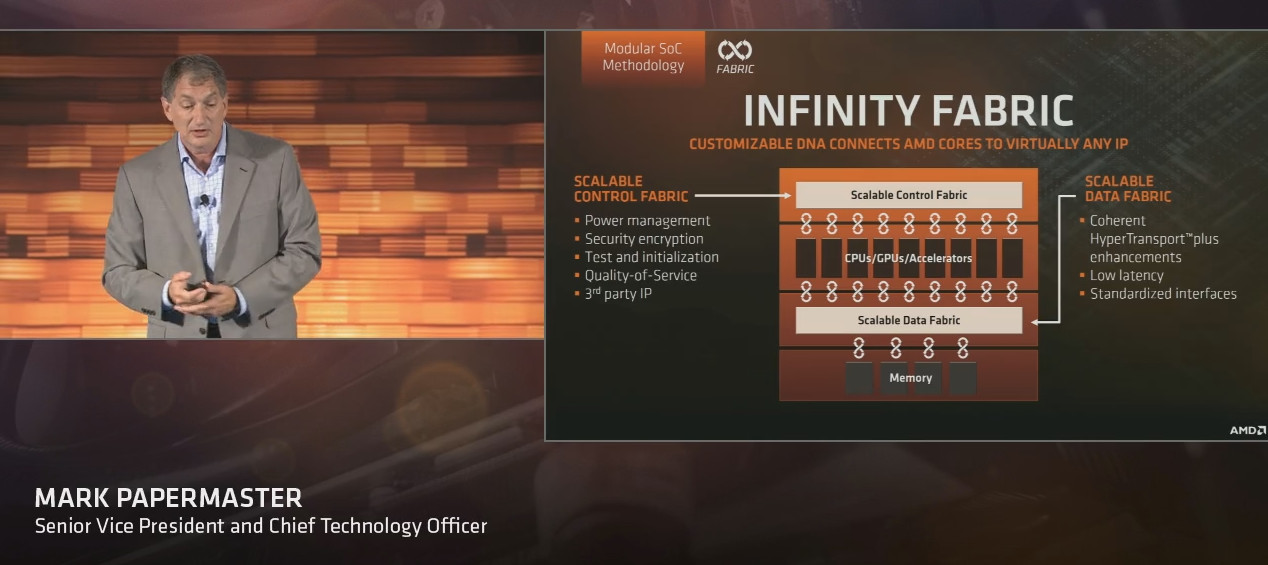

I find this kind of annoying (just like the Ryzen ECC crusade). IF (Infinity Fabric) actually has nothing to do with interfaces; IF is interface agnostic. IF is a data/control plane specification. But when people say IF, they are talking about the entire methodology AMD uses to build the interconnects between major blocks within SoCs, between SoCs on the same package, and between packages. What really annoys me about your half-truths above is that, as part of that package of technologies, AMD has different interface and physical implementations for each of those interconnects.
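To make the "interface agnostic" point concrete, here is a minimal Python sketch, assuming a toy packet layout: the protocol (encode/decode) is defined once, and any physical link that can move bytes can carry it. `FabricPacket`, `LoopbackLink`, `encode`, `decode`, and `send` are hypothetical names, and the 3-byte header is made up; this is not AMD's actual packet format.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class FabricPacket:
    """Hypothetical data/control-plane packet; the fields are illustrative only."""
    src: int          # source block ID (0-255 in this toy format)
    dst: int          # destination block ID (0-255 in this toy format)
    is_control: bool  # control-plane (True) vs data-plane (False) traffic
    payload: bytes


class PhysicalLink(Protocol):
    """Any physical interface that can carry a raw byte frame."""
    name: str

    def transmit(self, frame: bytes) -> bytes: ...


def encode(pkt: FabricPacket) -> bytes:
    # The encoding is identical no matter which physical link will carry it.
    header = bytes([pkt.src, pkt.dst, int(pkt.is_control)])
    return header + pkt.payload


def decode(frame: bytes) -> FabricPacket:
    return FabricPacket(frame[0], frame[1], bool(frame[2]), frame[3:])


@dataclass
class LoopbackLink:
    """Stand-in for an on-die, GMI or GMIx link: it just hands the frame back."""
    name: str

    def transmit(self, frame: bytes) -> bytes:
        return frame


def send(pkt: FabricPacket, link: PhysicalLink) -> FabricPacket:
    # The protocol layer never looks at which kind of link it is using.
    return decode(link.transmit(encode(pkt)))


pkt = FabricPacket(src=1, dst=7, is_control=False, payload=b"cacheline")
for link in (LoopbackLink("on-die"), LoopbackLink("GMI"), LoopbackLink("GMIx")):
    assert send(pkt, link) == pkt
```

The design point is the split: the transport scheme is shared, while each tier is free to use whatever PHY suits its distance and power budget.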

The on-SoC level has its own interconnect.

The on-package level has the GMI interconnect (these appear to be custom 16-bit interfaces). According to The Stilt, they run at 2x the clock rate of GMIx (could be up to 25 GHz).

The inter-package level has GMIx, which runs on the DesignWare Enterprise 12G PHY. That PHY can run PCI Express 3.1, 40GBASE-KR4, 10GBASE-KR, 10GBASE-KX4, 1000BASE-KX, 40GBASE-CR4, 100GBASE-CR10, XFI, SFI (SFF-8431), QSGMII, and SGMII.
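As a back-of-the-envelope check on the GMI figures, here is a small Python sketch of raw link bandwidth (width times per-wire transfer rate). It assumes that "12G" in the PHY name means roughly 12.5 Gb/s per lane for GMIx, and it treats the "up to 25 GHz" figure as 25 GT/s per wire; both are assumptions, not AMD specifications. The result ignores encoding and protocol overhead.

```python
def raw_bandwidth_gbytes(width_bits: int, rate_gtps: float) -> float:
    """Raw per-direction bandwidth in GB/s, ignoring encoding/protocol overhead."""
    return width_bits * rate_gtps / 8


# ASSUMPTION: "12G" in the PHY name read as ~12.5 Gb/s per lane for GMIx.
GMIX_LANE_RATE_GTPS = 12.5
# Per The Stilt, GMI runs at 2x the GMIx clock (the post's "up to 25 GHz").
GMI_LANE_RATE_GTPS = 2 * GMIX_LANE_RATE_GTPS
GMI_WIDTH_BITS = 16  # the post's "custom 16-bit interfaces"

gmi = raw_bandwidth_gbytes(GMI_WIDTH_BITS, GMI_LANE_RATE_GTPS)
# Same width at the GMIx rate, only to show the effect of the 2x clock.
gmix_same_width = raw_bandwidth_gbytes(GMI_WIDTH_BITS, GMIX_LANE_RATE_GTPS)

print(f"GMI  @ {GMI_LANE_RATE_GTPS} GT/s: {gmi:.1f} GB/s per direction")
print(f"GMIx @ {GMIX_LANE_RATE_GTPS} GT/s: {gmix_same_width:.1f} GB/s per direction")
```

Under these assumptions a 16-bit GMI link works out to about 50 GB/s per direction, twice what the same width would give at the GMIx rate.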

What AMD is doing that is completely different from Intel is using these common interconnects to connect major building blocks across a very broad range of chips, all with a common (probably packet-based) data-plane/control-plane transport scheme:

Vega will use GMI to sit on-package with a CPU.

Vega uses IF for on-chip interconnects.

Navi will probably use IF for on-package GPU cross-connect.

Navi will probably use IF for on-chip interconnects.

Zeppelin uses it for on-chip, on-package, and inter-package links.

Any semi-custom SoCs will use it.

That's what differentiates it from what came before (HyperTransport, QPI, etc.).

The other thing to recognize is that, even between packages, the extra latency is dominated by cache coherency, not by encode/transmit/receive/decode. Zeppelin already pays that probe/directory lookup price between its two CCXs, so the only additional latency for going off-package is likely the physical link, something like 15 ns. Intel's latency grows with every core added. When the 6-core CCX, 48-core server parts come out, intra-CCX latency will probably go up a little, and every other latency will stay the same (all else being equal).
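The latency argument can be written as a simplified model. In this Python sketch, only the ~15 ns physical-hop estimate comes from the post; the cross-CCX baselines are placeholder parameters, not measurements. For the "grows with every core" side, a bidirectional ring is used as a stand-in for any interconnect where each added core adds a stop (a mesh grows more slowly, but still grows). The function names are hypothetical.

```python
def zen_cross_package_ns(cross_ccx_ns: float, physical_hop_ns: float = 15.0) -> float:
    """Coherency cost (probe/directory lookup) is already paid on a cross-CCX
    access within one Zeppelin die, so a cross-package access is modeled as
    that same cost plus only the physical link delay."""
    return cross_ccx_ns + physical_hop_ns


def ring_avg_hops(stops: int) -> float:
    """Average shortest-path hops from one stop to every other stop on a
    bidirectional ring; grows roughly as stops / 4."""
    if stops < 2:
        raise ValueError("need at least two stops")
    total = sum(min(d, stops - d) for d in range(1, stops))
    return total / (stops - 1)


# The cross-package penalty is constant, whatever the cross-CCX baseline is.
for baseline_ns in (50.0, 100.0):  # placeholder baselines, not measurements
    print(baseline_ns, zen_cross_package_ns(baseline_ns) - baseline_ns)

# Average hop count (and so latency) climbs as stops are added to the ring.
for n in (4, 8, 16):
    print(n, round(ring_avg_hops(n), 2))
```

The contrast the model captures is structural: in the Zen case the added cost is a fixed per-link term, while in a shared ring or mesh the average distance, and with it latency, scales with the number of stops.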